Robohub.org

Asimov’s laws of robotics are not the moral guidelines they appear to be

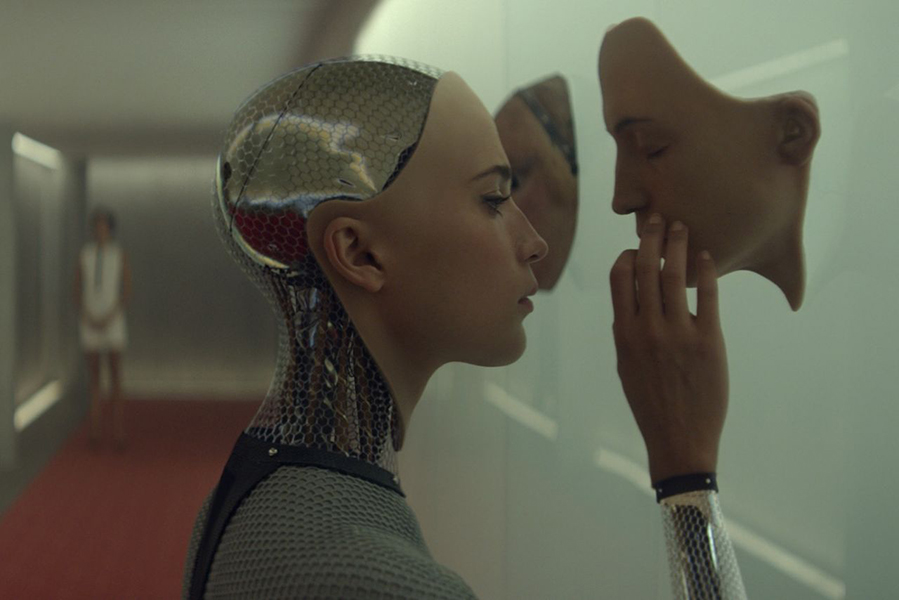

Credit: Ex Machina (2015).

Seventy-five years ago, the celebrated science fiction writer Isaac Asimov published a short story called Runaround. Set on Mercury, it features a sophisticated robot nicknamed Speedy that has been ordered to gather some of the chemical element selenium for two human space adventurers.

Speedy gets near the selenium, but a toxic gas threatens to destroy the robot. When it retreats from the gas to save itself, the threat recedes and it feels obliged to go back for the selenium. It is left going round in circles.

Speedy is caught in a conflict between two of the laws that robots in Asimov’s stories follow as their core ethical programming: always obey human instructions and always protect your existence (as long as it doesn’t result in human injury).

Speedy’s custodians resolve the robot’s conflict by exploiting a third, overriding law: never let a human being come to any harm. They place themselves in harm’s way and tell Speedy that if it does not get the selenium they will die. This information straightens out Speedy’s priorities, and the adventurers live to interfere with other planets.

Today, Asimov’s three laws of robotics are much better known than Runaround, the story in which they first appeared. The laws seem a natural response to the idea that robots will one day be commonplace and need internal programming to prevent them from hurting people.

Yet, while Asimov’s laws are organised around the moral value of preventing harm to humans, they are not easy to interpret. We need to stop viewing them as an adequate ethical basis for robotic interactions with people.

Part of the reason Asimov’s laws seem plausible is that fears about the harm robots could do to humans are not groundless. There have already been fatalities in the US due to malfunctioning autonomous cars.

Moreover, the capacity of artificial intelligence to adjust its routines to the things and people it interacts with makes some of its behaviour unpredictable. For example, machines that are capable of interacting with each other in a co-ordinated way can produce “swarm” behaviour. Swarm behaviour can be unpredictable because it can depend on adapting to random events.

So Asimov was right to worry about unexpected robot behaviour. But when we look more closely at how robots actually work and the tasks they are designed for, we find that Asimov’s laws fail to apply clearly. Take military drones. These are robots directed by humans to kill other humans. The whole idea of military drones seems to violate Asimov’s first law, which prohibits robot injury to humans.

But if a robot is being directed by a human controller to save the lives of its fellow citizens by killing other attacking humans, it is both following and not following the first law. Nor is it clear if the drone is responsible when someone is killed in these circumstances. Perhaps the human controller of the drone is responsible. But a human cannot break Asimov’s laws, which are exclusively directed at robots.

Meanwhile, it may be that armies equipped with drones will vastly reduce the amount of human life lost overall. Not only is it better to use robots rather than humans as cannon fodder, but there is arguably nothing wrong with destroying robots in war, since they have no lives to lose and no personality or personal plans to sacrifice.

We need the freedom to harm ourselves

At the other end of the scale you have robots designed to provide social care to humans. At their most sophisticated, they act as companions, moving alongside their users as they fetch and carry, issue reminders about appointments and medication, and send out alarms if certain kinds of emergencies occur. Asimov’s laws are an appropriate ethical framework if keeping an elderly person safe is the robot’s main goal.

But often robotics fits into a range of “assistive” technology that helps elderly adults to be independent. Here the goal is to enable the elderly to prolong the period during which they can act on their own choices and lead their own lives, like any other competent adult. If this is the overriding purpose of a care robot, then, within limits, it needs to accommodate risk-taking, including its owner putting themselves in harm’s way.

For example, falling is an everyday hazard of life after the age of 65. An elderly person can rationally judge that living with the after-effects of a fall is better than a regime in which they are heavily monitored and insulated from all danger. But a robot that deferred to this judgement by not interfering when its user wants to take a steep walk would be violating the law that saved Asimov’s adventurers on Mercury.

So long as only minor injury results from a competent adult decision, it should be respected by everyone, robots and family alike, even when it is taken by an elderly person. Respect in these circumstances means neither preventing the adult decision-maker from acting nor informing others about their actions.

As robots become a bigger part of our society, we will undoubtedly need rules to govern how they operate. But Asimov’s laws fail to recognise two things: that robots get caught between human acts and cannot always avoid human injury, and that sometimes allowing humans to injure themselves is a way of respecting human autonomy.
