Robohub.org

Brain Computer Interface used to control the movement and actions of an android robot

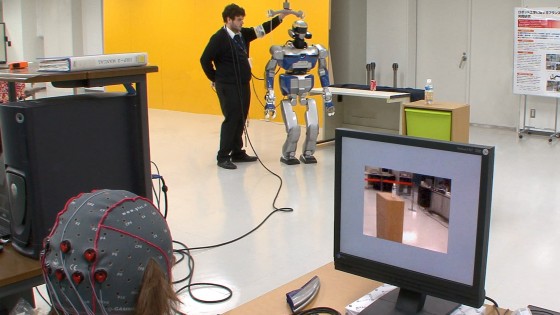

Researchers at the CNRS-AIST Joint Robotics Laboratory and the CNRS-LIRMM Interactive Digital Human group are working on ways to control robots by thought alone.

“Basically, we would like to create devices which would allow people to feel embodied in the body of a humanoid robot. To do so, we are trying to develop techniques from Brain Computer Interfaces (BCI) so that we can read people’s thoughts, and then see how far we can go from interpreting brain wave signals to transforming them into actions to be done by the robot.”

The interface uses flashing symbols to control where the robot moves and how it interacts with the environment around it.

“Basically, what you see is how one pattern, called the SSVEP [steady-state visually evoked potential], lets us associate flickering things with actions. It’s what we call the affordance: we associate actions with objects, and then we bring the object to the attention of the user. By focussing their attention, the user is capable of indicating which actions they would like from the robot, and then this is translated.”

“He is wearing a cap which is embedded with electrodes, and we read the electrical activity of the brain, which is transferred to this PC. There is a signal processing unit which classifies what the user is thinking. As you see here, there are several icons that can be associated with tasks, or you can recognize an object that will flicker automatically. With different frequencies, we can recognize which frequency the user is focussing their attention on, and then we can select this object. Since the object is associated with a task, it’s easy to instruct the robot which task it has to perform.”
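The selection step described in the quote above (icons flickering at different frequencies, the system picking the frequency the user attends to, and the matching task being sent to the robot) can be sketched roughly as follows. This is only an illustrative sketch, not the researchers' actual signal processing: the flicker frequencies, the task names, the 256 Hz sampling rate, the synthetic single-channel signal and the helper names (`band_power`, `classify_ssvep`, `synthetic_eeg`) are all assumptions made for the example.

```python
import numpy as np

# Hypothetical mapping from icon flicker frequency (Hz) to a robot task.
ICON_TASKS = {
    6.0: "walk forward",
    8.0: "turn left",
    10.0: "turn right",
    14.0: "grasp object",
}


def band_power(signal, fs, freq, half_width=0.5):
    """Mean spectral power within +/- half_width Hz of freq."""
    windowed = signal * np.hanning(len(signal))  # reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(windowed)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    mask = np.abs(freqs - freq) <= half_width
    return spectrum[mask].mean()


def classify_ssvep(signal, fs, icon_tasks):
    """Return (frequency, task) for the flicker frequency with the most power."""
    powers = {f: band_power(signal, fs, f) for f in icon_tasks}
    best = max(powers, key=powers.get)
    return best, icon_tasks[best]


def synthetic_eeg(target_freq, fs=256, seconds=4.0, seed=0):
    """Toy stand-in for one EEG channel: a sinusoid at the attended
    flicker frequency buried in Gaussian noise (assumed, not real data)."""
    rng = np.random.default_rng(seed)
    t = np.arange(int(fs * seconds)) / fs
    return np.sin(2 * np.pi * target_freq * t) + rng.normal(scale=2.0, size=t.size)


# Simulate a user attending to the icon that flickers at 10 Hz.
eeg = synthetic_eeg(10.0, fs=256)
freq, task = classify_ssvep(eeg, 256, ICON_TASKS)
print(f"Detected {freq} Hz -> instruct robot to: {task}")
```

Real SSVEP systems typically combine several electrodes and use more robust detectors than a single-channel power comparison; the sketch only illustrates the frequency-to-object-to-task chain the quote describes.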

“And the applications targeted are for tetraplegics or paraplegics, who could use this technology to navigate using the robot. For instance, a paraplegic patient in Rome would be able to pilot a humanoid robot for sightseeing in Japan.”
