Robohub.org

Does AI pose a threat to society?

Last week I had the pleasure of debating the question “does AI pose a threat to society?” with friends and colleagues Christian List, Maja Pantic and Samantha Payne. The event was organised by the British Academy and brilliantly chaired by the Royal Society’s director of science policy Claire Craig. Here follows my opening statement:

My opening statement

One Friday afternoon in 2009 I was called by a science journalist at, I recall, the Sunday Times. He asked me if I knew that there was to be a meeting of the AAAI to discuss robot ethics. I said no, I didn’t know of this meeting. He then asked, “Are you surprised they are meeting to discuss robot ethics?” and my answer was no. We talked some more and agreed it was actually a rather dull story: a case of scientists behaving responsibly. I really didn’t expect the story to appear but checked the Sunday paper anyway, and there in the science section was the headline “Scientists fear revolt of killer robots”. (I then spent the next couple of days on the radio explaining that no, scientists do not fear a revolt of killer robots.)

So, fears of future superintelligence (robots taking over the world) are greatly exaggerated: the threat of an out-of-control superintelligence is a fantasy, interesting for a pub conversation perhaps. It’s true we should be careful and innovate responsibly, but that’s equally true for any new area of science and technology. The benefits of robotics and AI are so significant, and the potential so great, that we should be optimistic rather than fearful. Of course robots and intelligent systems must be engineered to very high standards of safety, for exactly the same reasons that we need our washing machines, cars and airplanes to be safe. If robots are not safe, people will not trust them. To reach their full potential, what robotics and AI need is a dose of good old-fashioned (and rather dull) safety engineering.

In 2011 I was invited to join a British Standards Institution working group on robot ethics, which drafted a new standard, BS 8611 Guide to the ethical design of robots and robotic systems, published in April 2016. I believe this to be the world’s first standard on ethical robots.

Also in 2016 the highly regarded IEEE Standards Association (the same organization that gave us WiFi) launched a Global Initiative on Ethical Considerations in AI and Autonomous Systems. The purpose of this Initiative is to ensure every technologist is educated and empowered to prioritize ethical considerations in the design and development of autonomous and intelligent systems; in a nutshell, to ensure ethics are baked in. In December we published Ethically Aligned Design: A Vision for Prioritizing Human Well Being with AI and Autonomous Systems. Within that Initiative I’m also leading a new standard on transparency in autonomous systems, based on the simple principle that it should always be possible to find out why an AI or robot made a particular decision.

We need to agree on ethical principles, because they are needed to underpin standards: ways of assessing and mitigating the ethical risks of robotics and AI. But standards need teeth, and they in turn underpin regulation. Why do we need regulation? Think of passenger airplanes; the reason we trust them is that aviation is a highly regulated industry with an amazing safety record, and robust, transparent processes of air accident investigation when things do go wrong. Take one example of a robot that we read a lot about in the news: the driverless car. I think there’s a strong case for a driverless car equivalent of the CAA (Civil Aviation Authority), with a driverless car accident investigation branch. Without this it’s hard to see how driverless car technology will win public trust.

Does AI pose a threat to society? No. But we do need to worry about the down-to-earth questions raised by present-day, rather unintelligent AIs: the ones that are deciding our loan applications, piloting our driverless cars or controlling our central heating. Are those AIs respecting our rights, freedoms and privacy? Are they safe? When AIs make bad decisions, can we find out why? And I worry too about the wider societal and economic impacts of AI. I worry about jobs of course, but actually I think there is a bigger question: how can we ensure that the wealth created by robotics and AI is shared by all in society?

Thank you.

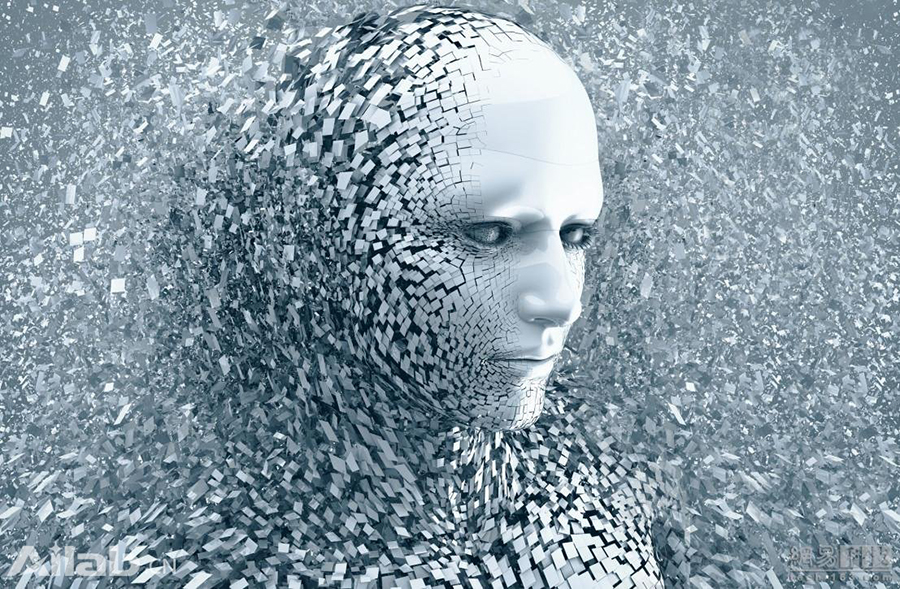

The featured image of this article was used to advertise the BA’s series of events on the theme Robotics, AI and Society. I draw your attention to it here because one of the many interesting questions put to the panel was about the way AI tends to be visualised in the media. This kind of human face coalescing (or perhaps emerging) from the atomic parts of the AI seems to have become a trope for AI. Is it a helpful visualisation of the human face of AI, or does it give the misleading impression that AI has human characteristics?
