Robohub.org

EQ-Radio: Detecting emotions with wireless signals

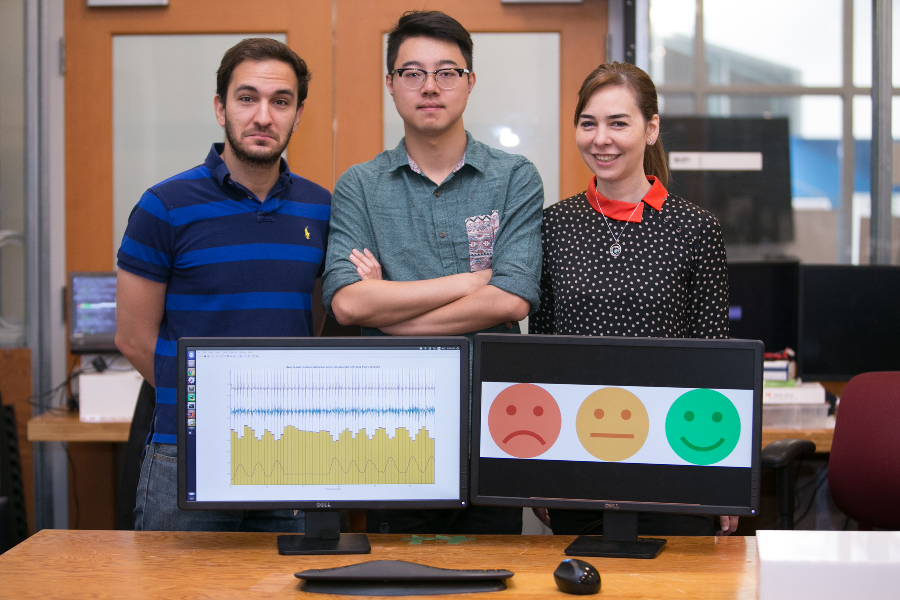

From L-R: PhD student Fadel Adib, PhD student Mingmin Zhao and Professor Dina Katabi demonstrating different “emotions,” as pictured. Credit: Jason Dorfman, MIT CSAIL

By measuring your heartbeat and breath, this device from MIT’s Computer Science and Artificial Intelligence Lab can tell if you’re excited, happy, angry or sad.

As many a relationship book can tell you, understanding someone else’s emotions can be a difficult task. Facial expressions aren’t always reliable: a smile can conceal frustration, while a poker face might mask a winning hand.

But what if technology could tell us how someone is really feeling?

Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed “EQ-Radio,” a device that can detect a person’s emotions using wireless signals.

By measuring subtle changes in breathing and heart rhythms, EQ-Radio is 87 percent accurate at detecting if a person is excited, happy, angry or sad – and can do so without on-body sensors or facial-recognition software.

MIT professor and project lead Dina Katabi envisions the system being used in entertainment, consumer behavior and health care. Film studios and ad agencies could test viewers’ reactions in real time, while smart homes could use information about your mood to adjust the heating or suggest that you get some fresh air.

Professor Dina Katabi (middle) explains how PhD student Fadel Adib’s face (right) is neutral, but that EQ-Radio’s analysis of his heartbeat and breathing shows that he is sad. Credit: Jason Dorfman, MIT CSAIL

“Our work shows that wireless signals can capture information about human behavior that is not always visible to the naked eye,” says Katabi, who co-wrote a paper on the topic with PhD students Mingmin Zhao and Fadel Adib. “We believe that our results could pave the way for future technologies that could help monitor and diagnose conditions like depression and anxiety.”

EQ-Radio builds on Katabi’s continued efforts to use wireless to measure human behavior like breathing and falling. She says that she will incorporate emotion-detection into her spin-off company Emerald, which makes a device that is aimed at detecting and predicting falls among the elderly.

Using wireless signals reflected off people’s bodies, the device measures heartbeats as accurately as an ECG monitor, with a margin of error of approximately 0.3 percent. It then studies the waveforms within each heartbeat to match a person’s behavior to how they previously acted in one of the four emotion-states.

The team will present the work next month at the Association for Computing Machinery’s International Conference on Mobile Computing and Networking (MobiCom).

How it works

Existing emotion-detection methods rely on audiovisual cues or on-body sensors, but there are downsides to both techniques. Cues like facial expressions are famously unreliable, while on-body sensors like chest bands and ECG monitors are inconvenient to wear and become inaccurate if they change position over time.

EQ-Radio instead sends wireless signals that reflect off a person’s body and back to the device. Its beat-extraction algorithms break the reflections into individual heartbeats and analyze the small variations in heartbeat intervals to determine the person’s levels of arousal and positive affect.
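
As a rough illustration of what “small variations in heartbeat intervals” means, the Python sketch below computes the intervals between successive beats and summarizes their beat-to-beat variation with RMSSD, a standard heart-rate-variability statistic. The article does not say which features EQ-Radio actually computes, so the function names, the choice of RMSSD and the sample timestamps are all assumptions made for illustration.

```python
import math


def inter_beat_intervals(beat_times):
    """Return the time gaps (in seconds) between successive heartbeats."""
    return [later - earlier for earlier, later in zip(beat_times, beat_times[1:])]


def rmssd(intervals):
    """Root mean square of successive differences between intervals.

    RMSSD is a common heart-rate-variability measure. Using it here is an
    assumption; the article does not name EQ-Radio's actual features.
    """
    if len(intervals) < 2:
        raise ValueError("need at least two intervals")
    diffs = [later - earlier for earlier, later in zip(intervals, intervals[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Hypothetical beat timestamps in seconds (made-up data, not from the study).
beats = [0.0, 0.8, 1.7, 2.5, 3.4]
intervals = inter_beat_intervals(beats)
variability = rmssd(intervals)
```

A perfectly regular heartbeat gives a variability of zero under this measure, while alternating shorter and longer gaps push it up.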

These measurements are what allow EQ-Radio to detect emotion. For example, a person whose signals correlate to low arousal and negative affect is more likely to be tagged as sad, while someone whose signals correlate to high arousal and positive affect would likely be tagged as excited.
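
The tagging described above can be sketched as a lookup over two scores. In the Python sketch below, the function name, the signed scores and the zero thresholds are hypothetical. Only the “sad” and “excited” pairings come from the article; placing “angry” at high arousal with negative affect and “happy” at low arousal with positive affect is an assumption used to fill in the other two combinations.

```python
def tag_emotion(arousal: float, affect: float) -> str:
    """Map signed arousal and affect scores to one of four emotion labels.

    Scores above zero count as high arousal or positive affect. The zero
    threshold is an illustrative assumption, not EQ-Radio's classifier.
    """
    high_arousal = arousal >= 0.0
    positive_affect = affect >= 0.0
    if high_arousal and positive_affect:
        return "excited"  # pairing stated in the article
    if not high_arousal and not positive_affect:
        return "sad"  # pairing stated in the article
    if high_arousal:
        return "angry"  # assumed: high arousal, negative affect
    return "happy"  # assumed: low arousal, positive affect


# Hypothetical scores for one person at one moment.
label = tag_emotion(arousal=-0.4, affect=-0.7)
```

A real system would learn these boundaries from data rather than fix them at zero, which fits the article's point that the exact correlations vary from person to person.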

The exact correlations vary from person to person, but are consistent enough that EQ-Radio could detect emotions with 70 percent accuracy even when it hadn’t previously measured the target person’s heartbeat.

“Just by generally knowing what human heartbeats look like in different emotional states, we can look at a random person’s heartbeat and reliably detect their emotions,” says Zhao.

For the experiments, subjects used videos or music to recall a series of memories that each evoked one of the four emotions, as well as a no-emotion baseline. Trained just on those five sets of two-minute videos, EQ-Radio could then accurately classify the person’s behavior among the four emotions 87 percent of the time.

Compared to Microsoft’s vision-based “Emotion API”, which focuses on facial expressions, EQ-Radio was found to be significantly more accurate in detecting joy, sadness and anger. The two systems performed similarly with neutral emotions, since a face’s absence of emotion is generally easier to detect than its presence.

One of the CSAIL team’s toughest challenges was to tune out irrelevant data. In order to get the heart-rate, for example, the team had to dampen the breathing, since the distance that a person’s chest moves from breathing is much greater than the distance that their heart moves to beat.

To do so, the team focused on wireless signals that are based on acceleration rather than distance traveled, since the rise and fall of the chest with each breath tends to be much more consistent – and therefore have a lower acceleration – than the motion of the heartbeat.
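
To see why acceleration favors the heartbeat, consider the toy Python sketch below. It models breathing and heartbeat as two sinusoids, approximates acceleration with a discrete second difference, and compares how large the heartbeat is relative to breathing before and after that step. The amplitudes, frequencies, sampling rate and function names are illustrative assumptions, and the sinusoidal model is a simplification, not the team's actual signal processing.

```python
import math


def second_difference(samples, dt):
    """Approximate acceleration as the discrete second derivative of position."""
    return [
        (samples[i + 1] - 2.0 * samples[i] + samples[i - 1]) / (dt * dt)
        for i in range(1, len(samples) - 1)
    ]


def peak(samples):
    """Largest absolute value in a sequence."""
    return max(abs(s) for s in samples)


def heartbeat_to_breathing_ratios(fs=100.0, seconds=8.0):
    """Return (displacement_ratio, acceleration_ratio) of heartbeat to breathing."""
    dt = 1.0 / fs
    times = [i * dt for i in range(int(fs * seconds))]
    # Assumed toy values: 5 mm chest motion at 0.25 Hz for breathing,
    # 0.3 mm at 1.2 Hz for the heartbeat.
    breathing = [5.0 * math.sin(2 * math.pi * 0.25 * t) for t in times]
    heartbeat = [0.3 * math.sin(2 * math.pi * 1.2 * t) for t in times]
    displacement_ratio = peak(heartbeat) / peak(breathing)
    acceleration_ratio = (
        peak(second_difference(heartbeat, dt))
        / peak(second_difference(breathing, dt))
    )
    return displacement_ratio, acceleration_ratio


displacement_ratio, acceleration_ratio = heartbeat_to_breathing_ratios()
```

In this toy setup the heartbeat is a small fraction of the breathing motion in terms of displacement, but it becomes the larger component after differentiation, because differentiating a sinusoid twice scales its amplitude by the square of its angular frequency.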

Although the focus on emotion-detection meant analyzing the time between heartbeats, the team says that the algorithm’s ability to capture the heartbeat’s entire waveform means that in the future it could be used for non-invasive health monitoring and diagnostic settings.

“By recovering measurements of the heart valves actually opening and closing at a millisecond time-scale, this system can literally detect if someone’s heart skips a beat,” says Adib. “This opens up the possibility of learning more about conditions like arrhythmia, and potentially exploring other medical applications that we haven’t even thought of yet.”

