Robohub.org

Headlights that see through rain; A robotic seamstress

Headlights That See Through Rain

Graphic courtesy of Carnegie Mellon Illumination and Imaging Lab.

A while back, the Navy, NSF, Samsung and Intel collaborated on a research project to improve visibility in poor weather. It produced tools for removing fog, haze, mist, rain, snow, dust and murky water from images and videos. But the project's original motivation was different: the sponsors wanted helmsmen and drivers to see better with their own eyes in those same poor conditions.

That goal led to the development of a smart headlight system at Carnegie Mellon University's Robotics Institute. Last week CMU released papers and videos showing that the system can improve visibility by constantly redirecting light to shine between particles of precipitation.

“If you’re driving in a thunderstorm, the smart headlights will make it seem like it’s a drizzle,” said Srinivasa Narasimhan, associate professor of robotics.

The system uses a camera to track the motion of raindrops and snowflakes and then applies a computer algorithm to predict where those particles will be just a few milliseconds later. The light projection system then adjusts to deactivate light beams that would otherwise illuminate the particles in their predicted positions.
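The track, predict and switch-off loop described above can be pictured with a small sketch. It assumes a simple constant-velocity motion model, particle positions already mapped into projector pixel coordinates, and a fixed system latency; the names `Particle`, `predict_positions` and `headlight_mask` are hypothetical illustrations, not CMU's actual algorithm or code.

```python
from dataclasses import dataclass


@dataclass
class Particle:
    # Hypothetical tracked drop: position in projector pixel coordinates
    # and velocity in pixels per millisecond, as estimated from the camera.
    x: float
    y: float
    vx: float
    vy: float


def predict_positions(particles, latency_ms):
    """Constant-velocity guess (an assumption) of where each drop will be after latency_ms."""
    return [(p.x + p.vx * latency_ms, p.y + p.vy * latency_ms) for p in particles]


def headlight_mask(particles, width, height, latency_ms, radius=1):
    """Return a height x width grid of beams: True = lit, False = switched off.

    Every beam within `radius` pixels of a predicted drop position is turned off,
    so the light passes between the drops instead of hitting them.
    """
    mask = [[True] * width for _ in range(height)]
    for px, py in predict_positions(particles, latency_ms):
        cx, cy = round(px), round(py)
        for y in range(cy - radius, cy + radius + 1):
            for x in range(cx - radius, cx + radius + 1):
                if 0 <= x < width and 0 <= y < height:  # ignore beams off the grid
                    mask[y][x] = False
    return mask


if __name__ == "__main__":
    # One drop falling straight down at 1.5 px/ms, projector latency of 4 ms.
    drops = [Particle(10, 2, 0.0, 1.5)]
    mask = headlight_mask(drops, 20, 20, latency_ms=4)
    dark = sum(not lit for row in mask for lit in row)
    print(f"{dark} of {20 * 20} beams switched off")
```

Because only the beams near predicted drop positions are switched off, a sparse set of drops leaves the vast majority of the beam lit; a real system would also have to handle tracking errors and camera-to-projector calibration, which this sketch ignores.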

“A human eye will not be able to see that flicker of the headlights,” Narasimhan said. “And because the precipitation particles aren’t being illuminated, the driver won’t see the rain or snow either.”

To people, rain can appear as elongated streaks that seem to fill the air. To high-speed cameras, however, rain consists of sparsely spaced, discrete drops. That leaves plenty of space between the drops where light can be effectively distributed if the system can respond rapidly, Narasimhan said.

The CMU team is now engineering a more compact version that can be installed in a car for road testing.

Amazing! I can’t wait to get it installed on my new car.

A Factory To Make Uniforms

Recently there was an uproar in the news over the fact that China was producing the uniforms and flags for the American Olympic team. The Pentagon is worried about the same situation with our country's military uniforms. Every year, the Pentagon spends $4 billion on uniforms. To cut costs, increase efficiency and keep production in America, DARPA has awarded a $1.25 million contract to SoftWear Automation, Inc., a company started by a retired Georgia Tech professor who wrote a paper on the subject two years ago. The project's goal is to develop "complete production facilities that produce garments with zero direct labor": in other words, a robot factory that can make uniforms from beginning to end without human operators.

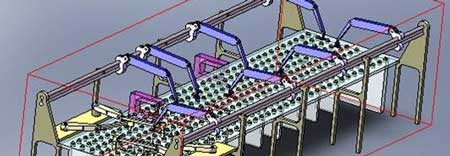

Research version of a robotic sewing machine with high-speed vision and servo-controller. Photo: SoftWear Automation.

SoftWear Automation's solution is a fully automated work cell that makes a complete sewn item, such as a pair of pants, shorts or a work shirt. Machine vision and moving-coil servo motors (two are shown in magenta in the work cell rendering below) control the cutting and sewing machines. The sewing machines will have servo-controlled rotation, so the sewing direction can be changed dynamically without rotating the fabric.
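To illustrate what "changing the sewing direction without rotating the fabric" asks of a servo, here is a minimal sketch. It assumes the seam is given as a polyline of (x, y) points in the fabric's frame and that the servo turns the sewing head by the signed angle between consecutive segments; the function names are hypothetical and this is not SoftWear Automation's control software.

```python
import math


def segment_headings(path):
    """Heading in degrees of each straight segment of a seam polyline."""
    return [
        math.degrees(math.atan2(y1 - y0, x1 - x0))
        for (x0, y0), (x1, y1) in zip(path, path[1:])
    ]


def head_rotations(path):
    """Signed rotation (degrees) the sewing head makes at each interior vertex.

    Angles are normalized to (-180, 180], so the head always takes the shorter
    turn; positive is counterclockwise. The fabric itself is never rotated.
    """
    headings = segment_headings(path)
    turns = []
    for a, b in zip(headings, headings[1:]):
        d = (b - a) % 360.0  # change in heading, now in [0, 360)
        if d > 180.0:
            d -= 360.0  # shift into (-180, 180]
        turns.append(d)
    return turns


if __name__ == "__main__":
    # Three sides of a rectangular seam, e.g. around a pocket outline.
    seam = [(0, 0), (10, 0), (10, 5), (0, 5)]
    print(head_rotations(seam))
```

Normalizing each turn to the shorter direction is one simple design choice; a real cell would also have to coordinate the head rotation with fabric feed and stitch timing.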

Rendering of automated sewing work cell. Image: SoftWear Automation.

This is not an inexpensive undertaking. Like many industrial robotic solutions, it requires serious up-front capital expenditure. But as with most robot applications, the payback comes quickly through consistent product quality and output. And as with most industrial robotics, costs are dropping fast as sensors, software and CPUs become more capable and available at very low cost.

With this contract, the DoD may be leading the way toward bringing back from offshore a good portion of the sewn-goods business that is presently located in China, Vietnam and other low-labor-cost areas.
