Robohub.org

Interview with Huy Ha and Shuran Song: CoRL 2021 best system paper award winners

Congratulations to Huy Ha and Shuran Song, who have won the CoRL 2021 best system paper award!

Their work, FlingBot: The Unreasonable Effectiveness of Dynamic Manipulation for Cloth Unfolding, was highly praised by the judging committee. “To me, this paper constitutes the most impressive account of both simulated and real-world cloth manipulation to date,” commented one of the reviewers.

Below, the authors tell us more about their work, the methodology, and what they are planning next.

What is the topic of the research in your paper?

In my most recent publication with my advisor, Professor Shuran Song, we studied the task of cloth unfolding. The goal of the task is to manipulate a cloth from a crumpled initial state to an unfolded state, which is equivalent to maximizing the coverage of the cloth on the workspace.
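As an illustration of the coverage objective described above, here is a minimal sketch of how coverage might be computed from a top-down binary mask of the cloth. The function name `coverage`, the mask representation (nested lists of 0/1), and the choice to normalize by the pixel area of the fully flattened cloth are assumptions made for this example, not details taken from the interview.

```python
def coverage(mask, flattened_area_px):
    """Return the fraction of the cloth's flattened area visible from above.

    mask: rows of 0/1 values, where 1 marks a pixel covered by cloth
          (hypothetical input; e.g. from segmenting a top-down image).
    flattened_area_px: pixel area of the same cloth when fully unfolded
          (assumed to be known in advance).
    """
    if flattened_area_px <= 0:
        raise ValueError("flattened_area_px must be positive")
    visible_px = sum(1 for row in mask for px in row if px)
    return visible_px / flattened_area_px


# A crumpled cloth occupies a small patch of the workspace...
crumpled = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
# ...while the same cloth, fully unfolded, covers all 16 pixels.
unfolded = [[1] * 4 for _ in range(4)]

print(coverage(crumpled, 16))  # 0.25
print(coverage(unfolded, 16))  # 1.0
```

Under this definition, an unfolding policy is rewarded for actions that increase the returned value toward 1.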

Could you tell us about the implications of your research and why it is an interesting area for study?

Historically, most robotic manipulation research topics, such as grasp planning, are concerned with rigid objects, which have only six degrees of freedom (position and orientation) since their geometry does not change. This allows one to apply the typical robotics pipeline of state estimation followed by task and motion planning. In contrast, deformable objects can bend and stretch in arbitrary directions, giving them infinitely many degrees of freedom. It’s unclear what the state of the cloth should even be. In addition, deformable objects such as clothes can experience severe self-occlusion: given a crumpled piece of cloth, it’s difficult to identify whether it’s a shirt, jacket, or pair of pants. Therefore, cloth unfolding is a typical first step of cloth manipulation pipelines, since it reveals key features of the cloth for downstream perception and manipulation.

Despite the abundance of sophisticated methods for cloth unfolding over the years, they typically only address the easy case (where the cloth already starts off mostly unfolded) or take upwards of a hundred steps for challenging cases. These prior works all use single-arm quasi-static actions, such as pick and place, which are slow and limited by the physical reach range of the system.

Could you explain your methodology?

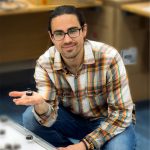

In our daily lives, humans typically use both hands to manipulate cloths, and with as little as a single high-velocity fling or two, we can unfold an initially crumpled cloth. Based on this observation, our key idea is simple: use dual-arm dynamic actions for cloth unfolding.

FlingBot is a self-supervised framework for cloth unfolding which, working from visual observations, uses a pick, stretch, and fling primitive on a dual-arm setup. There are three key components to our approach. First is the decision to use a high-velocity dynamic action. By relying on the cloth’s mass combined with a high-velocity throw to do most of the work, a dynamic flinging policy can unfold cloths much more efficiently than a quasi-static policy. Second is a dual-arm grasp parameterization which makes satisfying collision safety constraints easy. By treating a dual-arm grasp not as two points but as a line with a rotation and length, we can directly constrain the rotation and length of the line to ensure the arms do not cross over each other and do not try to grasp too close to each other. Third is our choice of using Spatial Action Maps, which learn value maps that are equivariant to translation, rotation, and scale, allowing for sample-efficient learning.
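To make the line-based grasp parameterization more concrete, the following is a minimal sketch under stated assumptions. The class name `DualArmGrasp`, the function `is_safe`, the specific rotation range of ±90 degrees, and the width limits are all hypothetical choices for illustration; the interview only states that the rotation and length of the line are constrained.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class DualArmGrasp:
    """A two-handed grasp described as a line segment in the workspace plane."""
    cx: float      # x coordinate of the line's center
    cy: float      # y coordinate of the line's center
    theta: float   # rotation of the line, in radians
    width: float   # length of the line (distance between the two grippers)

    def endpoints(self):
        """Return the (left, right) grasp points at the two ends of the line."""
        dx = 0.5 * self.width * math.cos(self.theta)
        dy = 0.5 * self.width * math.sin(self.theta)
        left = (self.cx - dx, self.cy - dy)
        right = (self.cx + dx, self.cy + dy)
        return left, right


def is_safe(grasp, min_width=0.1, max_width=0.7):
    """Check the two constraints directly on the line parameters.

    The limits here are illustrative assumptions, not values from the paper.
    """
    # Rotation within [-90, 90] degrees keeps the left point on the left
    # (cos(theta) >= 0), so the arms do not cross over each other.
    no_crossing = -math.pi / 2 <= grasp.theta <= math.pi / 2
    # A minimum width keeps the grippers from grasping too close together;
    # a maximum width stands in for the arms' reach.
    valid_width = min_width <= grasp.width <= max_width
    return no_crossing and valid_width


grasp = DualArmGrasp(cx=0.0, cy=0.0, theta=0.0, width=0.4)
left, right = grasp.endpoints()
print(left, right)
print(is_safe(grasp))                                    # True
print(is_safe(DualArmGrasp(0.0, 0.0, math.pi, 0.4)))     # False: arms would cross
print(is_safe(DualArmGrasp(0.0, 0.0, 0.0, 0.05)))        # False: grippers too close
```

The appeal of this design is that both safety conditions become simple interval checks on two scalars, rather than a geometric test on a pair of independently chosen points.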

What were your main findings?

We found that dynamic actions have three desirable properties over quasi-static actions for the task of cloth unfolding. First, they are efficient – FlingBot achieves over 80% coverage within 3 actions on novel cloths. Second, they are generalizable – trained on only square cloths, FlingBot also generalizes to T-shirts. Third, they expand the system’s effective reach range – even when FlingBot can’t fully lift or stretch a cloth larger than the system’s physical reach range, it’s able to use high-velocity flings to unfold the cloth.

After training and evaluating our model in simulation, we deployed and fine-tuned it on a real-world dual-arm system, which achieves above 80% coverage for all cloth categories. Meanwhile, the quasi-static pick-and-place baseline was only able to achieve around 40% coverage.

What further work are you planning in this area?

Although we motivated cloth unfolding as a precursor for downstream modules such as cloth state estimation, unfolding could also benefit from state estimation. For instance, if the system is confident it has identified the shoulders of the shirt in its state estimation, the unfolding policy could directly grasp the shoulders and unfold the shirt in one step. Based on this observation, we are currently working on a cloth unfolding and state estimation approach which can learn in a self-supervised manner in the real world.

About the authors

Huy Ha is a Ph.D. student in Computer Science at Columbia University. He is advised by Professor Shuran Song and is a member of the Columbia Artificial Intelligence and Robotics (CAIR) lab.

Shuran Song is an assistant professor in the computer science department at Columbia University, where she directs the Columbia Artificial Intelligence and Robotics (CAIR) Lab. Her research focuses on computer vision and robotics. She’s interested in developing algorithms that enable intelligent systems to learn from their interactions with the physical world, and autonomously acquire the perception and manipulation skills necessary to execute complex tasks and assist people.

Find out more

- Read the paper on arXiv.

- The videos of the real-world experiments and code are available here, as is a video of the authors’ presentation at CoRL.

- Read more about the winning and shortlisted papers for the CoRL awards here.
