Robohub.org

LIDAR (lasers) and cameras together: Which is more important?

Recently we’ve seen a series of startups arise hoping to make robocars with just computer vision, along with radar. That includes the recently unstealthed AutoX, the off-again, on-again efforts of comma.ai and, at the non-startup end, Tesla’s commitment to avoid LIDAR because it wants to sell cars today, before LIDARs can be bought in automotive quantities and at automotive prices.

Their optimism is based on the huge progress being made in the use of machine learning, most notably convolutional neural networks (CNNs), at solving the problems of computer vision. Milestones are falling quickly in AI, particularly in pattern matching and computer vision. (CNNs can also be applied to radar and LIDAR data.)

There are reasons pushing some teams this way. First of all, the big boys, including Google, have already made tons of progress with LIDAR. The right niche for a startup can be the place that the big boys are ignoring. It might not work, but if it does, the payoff is huge. I fully understand the VCs investing in companies of this sort; that’s how VCs work. There is also the cost and, for Tesla and some others, the non-availability of LIDAR. The highest capability LIDARs today come from Velodyne, but they are expensive and in short supply: Velodyne can’t make them fast enough to keep up with the demand just from research teams!

Note, for more detailed analysis on this, read my article on cameras vs. lasers.

For the three key technologies, these trends seem assured:

- LIDAR will improve price/performance, eventually costing just hundreds of dollars for high-resolution units, and less for low-res units.

- Computer vision will improve until it reaches the needed levels of reliability, and the high-end processors for it will drop in cost and electrical power requirements.

- Radar will drop in cost to tens of dollars and software to analyse radar returns will improve.

In addition, there are some more speculative technologies whose trends are harder to predict, such as long-range LWIR LIDAR, new types of radar, and even a claimed lidar alternative that treats the photons like radio waves.

These trends are very likely. As a result, the likely winner continues to be a combination of all these technologies, and the question becomes which combination.

LIDAR’s problem is that it’s low resolution, medium in range and expensive today. Computer Vision (CV)’s problem is that it’s insufficiently reliable, depends on external lighting and needs expensive computers today. Radar’s problem is super low resolution.

Option one — high-end LIDAR with computer vision assist

High end LIDARs, like the 32 and 64 laser units favoured by the vast majority of teams, are extremely reliable at detecting potential obstacles on the road. They never fail (within their range) to differentiate something on the road from the background. But they often can’t tell you just what it is, especially at a distance. A LIDAR won’t know a car from a pickup truck or 2 pedestrians from 3. It won’t read facial expressions or body language. It can read signs but only when they are close. It can’t see colours, such as those of traffic signals.

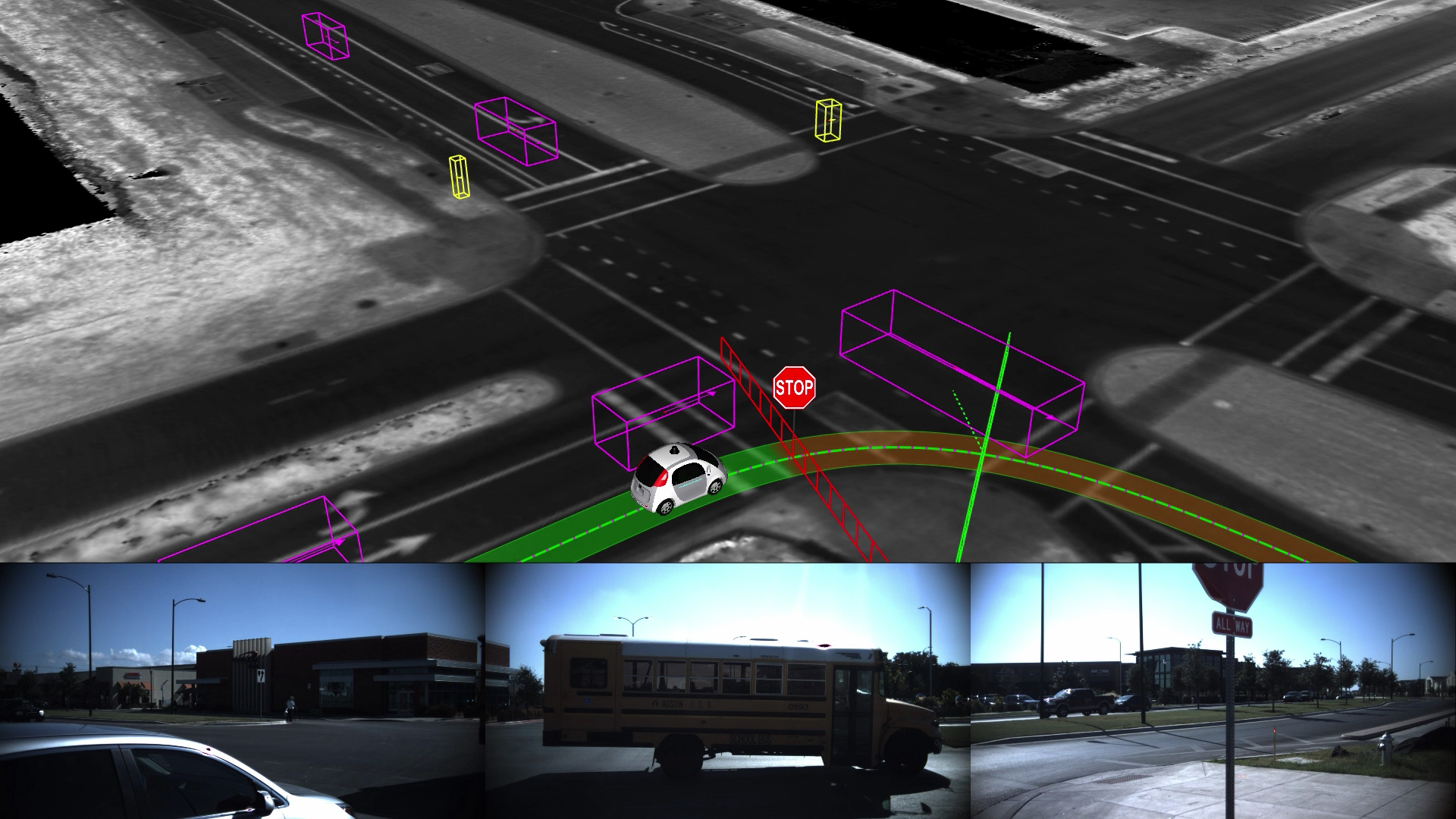

The fusion of the depth map of LIDAR with the scene understanding of neural net based vision systems is powerful. The LIDAR can pull the pedestrian image away from the background, and then make it much easier for the computer vision to reliably figure out what it is. The CV is not 100% reliable, but it doesn’t have to be. Instead, it can ideally just improve the result. LIDAR alone is good enough if you take the very simple approach of “If there’s something in the way, don’t hit it.” But that’s a pretty primitive result that may brake too much for things you should not brake for.
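As a rough illustration of the difference between the simple LIDAR-only rule and a fused decision, here is a minimal sketch. The `Detection` type, the `IGNORABLE` set and the 0.99 confidence threshold are all hypothetical, invented for this example; no real system is being described. The point is only that vision is allowed to cancel a brake, never to cause a collision by overriding the LIDAR when it is unsure.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    in_path: bool      # LIDAR: something solid occupies the path ahead
    label: str         # vision: best-guess class, e.g. "pedestrian", "bird"
    confidence: float  # vision: confidence in that label, 0.0 to 1.0


# Hypothetical list of things the car should not brake for.
IGNORABLE = {"bird", "trash_bag"}


def lidar_only_should_brake(d: Detection) -> bool:
    """The primitive rule: if something is in the way, don't hit it."""
    return d.in_path


def fused_should_brake(d: Detection, min_confidence: float = 0.99) -> bool:
    """Brake for anything in the path unless vision is very sure it is harmless."""
    if not d.in_path:
        return False
    harmless = d.label in IGNORABLE and d.confidence >= min_confidence
    return not harmless


if __name__ == "__main__":
    bird = Detection(in_path=True, label="bird", confidence=0.999)
    unsure = Detection(in_path=True, label="bird", confidence=0.60)
    print(lidar_only_should_brake(bird), fused_should_brake(bird))
    print(lidar_only_should_brake(unsure), fused_should_brake(unsure))
```

With this structure, a vision mistake in the uncertain case costs only an unnecessary brake, because the fallback is always the LIDAR-only rule.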

Consider a bird on the road or a blowing trash bag. It’s a lot harder for the LIDAR system to reliably identify those things. On the other hand, the vision systems will do a very good job at recognizing the birds. A vision system that makes errors 1 time every 10,000 is not adequate for driving. That’s too high an error rate as you encounter thousands of obstacles every hour. But missing 1 bird out of 10,000 means that you brake unnecessarily for a bird perhaps once every year or two, which is quite acceptable.
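The arithmetic behind that comparison can be sketched in a few lines. The 1-in-10,000 error rate comes from the text; the figures of 2,000 obstacles per hour and 7,000 birds on the road per year are assumptions picked only to match "thousands every hour" and "once every year or two".

```python
def hours_between_errors(error_rate: float, obstacles_per_hour: float) -> float:
    """Expected driving hours between errors, if every obstacle is classified."""
    return 1.0 / (error_rate * obstacles_per_hour)


def years_between_errors(error_rate: float, events_per_year: float) -> float:
    """Expected years between errors for a rarer class of event."""
    return 1.0 / (error_rate * events_per_year)


ERROR_RATE = 1 / 10_000      # from the text
OBSTACLES_PER_HOUR = 2_000   # assumption: "thousands of obstacles every hour"
BIRDS_PER_YEAR = 7_000       # assumption: birds met on the road in a year

if __name__ == "__main__":
    h = hours_between_errors(ERROR_RATE, OBSTACLES_PER_HOUR)
    y = years_between_errors(ERROR_RATE, BIRDS_PER_YEAR)
    print(f"All obstacles: one error every {h:.1f} hours of driving")
    print(f"Birds only:    one missed bird every {y:.1f} years")
```

Under these assumed numbers the same error rate gives a mistake roughly every five hours when vision must get every obstacle right, but only one needless brake every year or so when it is asked only to recognize birds.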

Option two — lower end LIDAR with more dependence on vision

Low end lidars, with just 4 or so scanning planes, cost a lot less. Today’s LIDAR designs basically need to have an independent laser, lens and sensor for each plane, and so the more planes, the more cost. But that’s not enough to identify a lot of objects, and will be pretty deficient on things low to the ground or high up, or very small objects.
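To see why a handful of planes leaves gaps, a little geometry helps. The sketch below assumes the planes are evenly spaced across the vertical field of view and centred on the horizontal. The 3.2 degree field of view is an illustrative figure for a 4-plane unit, not a specification of any particular product.

```python
import math


def vertical_coverage_m(distance_m: float, vertical_fov_deg: float) -> float:
    """Height of the band covered at a given distance, FOV centred on horizontal."""
    return 2 * distance_m * math.tan(math.radians(vertical_fov_deg) / 2)


def gap_between_planes_m(distance_m: float, vertical_fov_deg: float, planes: int) -> float:
    """Vertical distance between adjacent scanning planes at a given distance."""
    step_rad = math.radians(vertical_fov_deg) / (planes - 1)
    return distance_m * math.tan(step_rad)


if __name__ == "__main__":
    FOV_DEG = 3.2  # assumption: illustrative vertical field of view
    PLANES = 4
    print(f"Band covered at 10 m: {vertical_coverage_m(10, FOV_DEG):.2f} m tall")
    print(f"Gap between planes at 50 m: {gap_between_planes_m(50, FOV_DEG, PLANES):.2f} m")
```

With these assumed figures the unit sees a band only about half a metre tall at 10 m, so close objects low to the ground or high up fall outside it, and at 50 m adjacent planes are nearly a metre apart, so a small object can sit between them.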

The interesting question is, can the flaws of current computer vision systems be made up for by a lower-end, lower cost LIDAR? Those flaws, of course, include not always discerning things in their field of view. They also include needing illumination at night. This is a particular issue when you want a 360-degree view: you can project headlights forward and see as far as they reach, but you can’t project headlights backwards or to the side without distracting other drivers.

It’s possible one could use infrared headlights in the other directions (or forward, for that matter). After all, the LIDAR sends out infrared laser beams. There are eye safety limits (your iris does not contract and you don’t blink in response to IR light) but the heat output is also not very high.

Once again, the low end lidar will eliminate most of the highly feared false negatives (when the sensor doesn’t see something that’s there) but may generate additional false positives (ghosts that make the vehicle brake for nothing.) False negatives are almost entirely unacceptable. False positives can be tolerated but if there are too many, the system does not satisfy the customer.
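This trade-off can be made concrete with a simple model. The sketch assumes the two sensors fail independently and that the car treats an object as present if either sensor reports it (OR fusion). Both the independence assumption and the example rates are illustrative, not measured values.

```python
def or_fusion(miss_a: float, miss_b: float,
              false_alarm_a: float, false_alarm_b: float) -> tuple[float, float]:
    """Combine two independent detectors by reacting if either one fires.

    Returns (fused miss rate, fused false alarm rate).
    """
    # A real object is missed only if both sensors miss it.
    fused_miss = miss_a * miss_b
    # A ghost appears if at least one sensor raises a false alarm.
    fused_false_alarm = 1 - (1 - false_alarm_a) * (1 - false_alarm_b)
    return fused_miss, fused_false_alarm


if __name__ == "__main__":
    # Assumed per-object rates, chosen only for illustration.
    vision_miss, lidar_miss = 1e-3, 1e-2
    vision_ghost, lidar_ghost = 1e-3, 5e-3
    miss, ghost = or_fusion(vision_miss, lidar_miss, vision_ghost, lidar_ghost)
    print(f"Fused false negative rate: {miss:.1e}")
    print(f"Fused false positive rate: {ghost:.1e}")
```

Under these assumptions the false negative rate drops by orders of magnitude while the false positive rate rises to slightly less than the sum of the two individual rates.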

This option is cheaper but still demands computer vision even better than we have today. But not much better, which makes it interesting.

Other options

Tesla has said they are researching what they can do with radar to supplement cameras. Radar is good for obstacles in front of you, especially moving ones. Better radar is coming that does better with stationary objects and pulls out more resolution. Advanced tricks (including with neural networks) can look at radar signals over time to identify things like walking pedestrians.

Radar sees cars very well (especially licence plates) but is not great on pedestrians. On the other hand, for close objects like pedestrians, stereo vision can help the computer vision systems a lot. You mostly need long range for higher speeds, such as the highways, where vehicles are your only concern.

Who wins?

Cost will eventually be a driver of robocar choices, but not today. Today, safety is the only driver. Get it safe, before your competitors do, at almost any cost. Later, make it cheap. That’s why most teams have chosen higher end LIDAR and are supplementing it with vision.

There is an easy mistake to make, though, and sometimes the press and perhaps some teams are making it. It’s “easy” on the grand scale to make a car that can do basic driving and have a nice demo. You can do it with just LIDAR or just vision. The hard part is the last 1%, which takes 99% of the time, if not more. Google had a car drive 1,000 miles of different roads and 100,000 total miles in the first 2 years of their project back in 2010, and even in 2017, with by far the largest and most skilled team, they do not feel their car is ready. It gets easier every day, as tech advances, to get the demo working, but that should not be mistaken for the real success that is required.

This post originally appeared on robocars.com.
