Robohub.org

Robot know thyself

How can we measure self-awareness in artificial systems?

This was a question that came up during a meeting of the Awareness project advisory board two weeks ago at Edinburgh Napier University. Awareness is a project bringing together researchers and projects interested in self-awareness in autonomic systems. In philosophy and psychology, self-awareness refers to the ability of an animal to recognise itself as an individual, separate from other individuals and the environment. Self-awareness in humans is, arguably, synonymous with sentience. A few other animals, notably elephants, dolphins and some apes, appear to demonstrate self-awareness. I think far more species may well experience self-awareness – but in ways that are impossible for us to discern.

In artificial systems it seems we need a new and broader definition of self-awareness – but what that definition is remains an open question. Defining artificial self-awareness as self-recognition assumes a very high level of cognition, equivalent to sentience perhaps. But we have no idea how to build sentient systems, which suggests we should not set the bar so high. And lower levels of self-awareness may be hugely useful* and interesting – as well as more achievable in the near term.

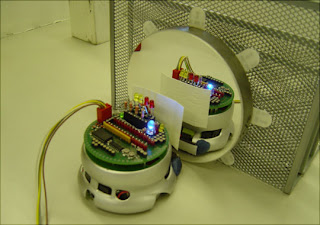

Let’s start by thinking about what a minimally self-aware system would be like. Think of a robot able to monitor its own battery level. One could argue that, technically, that robot has some minimal self-awareness, but I think that to qualify as ‘self-aware’ the robot would also need some mechanism to react appropriately when its battery level falls below a certain level. In other words, a behaviour linked to its internal self-sensing. It could be as simple as switching on a battery-low warning LED, or as complex as suspending its current activity to go and find a battery charging station.

So this suggests a definition for minimal self-awareness:

A self-aware system is one that can monitor some internal property and react, with an appropriate behaviour, when that property changes.
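As a rough illustration of that definition, here is a minimal sketch in Python. Everything in it is an assumption made for the example: the class name `MinimalSelfAwareRobot`, the 20% threshold and the behaviour labels are all hypothetical, and a real robot would read its battery level from a sensor rather than from a stored number.

```python
from dataclasses import dataclass


@dataclass
class MinimalSelfAwareRobot:
    """Hypothetical robot that senses one internal property and reacts to it."""

    battery_level: float         # fraction of full charge, 0.0 to 1.0
    low_threshold: float = 0.2   # assumed trigger level, chosen arbitrarily

    def sense_battery(self) -> float:
        # Internal self-sensing; a real robot would query hardware here.
        return self.battery_level

    def select_behaviour(self) -> str:
        # The behaviour is linked to the internal sensing, as in the definition.
        if self.sense_battery() < self.low_threshold:
            return "seek_charger"
        return "continue_task"


robot = MinimalSelfAwareRobot(battery_level=0.9)
print(robot.select_behaviour())  # continue_task
robot.battery_level = 0.1
print(robot.select_behaviour())  # seek_charger
```

The point of the sketch is how little is needed: one sensed internal property and one conditional behaviour.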

So how would we measure this kind of self-awareness? Well, if we know the internal mechanism (because we designed it), then it’s trivial to declare the system as (minimally) self-aware. But what if we don’t? Then we have to observe the system’s behaviour and deduce that it must be self-aware. It is, for example, reasonably safe to assume that an animal visits the watering hole to drink because of some internal sensing of ‘thirst’.

But it seems to me that we cannot invent some universal test for self-awareness that encompasses all self-aware systems, from the minimal to the sentient; a kind of universal mirror test. Of course the mirror test is itself unsatisfactory. For a start it only works for animals (or robots) with vision and – in the case of animals – with a reasonably unambiguous behavioural response that suggests “it’s me!” recognition.

And it would be trivially easy to equip a robot with a camera and image processing software that compares the camera image with a (mirror) image of itself, then lights an LED, or makes a sound (or something) to indicate “that’s me!” if there’s a match. Put the robot in front of a mirror and the robot will signal “that’s me!”. Does that make the robot self-aware? This thought experiment shows why we should be sceptical about claims of robots that pass the mirror test (although some work in this direction is certainly interesting). It also demonstrates that, just as in the minimally self-aware robot case, we need to examine the internal mechanisms.
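To show just how shallow such a mechanism could be, here is a toy sketch of the thought experiment. It assumes tiny binary images held as nested lists, a stored picture called `SELF_TEMPLATE`, and a 0.9 match threshold; all of these are hypothetical stand-ins for a real camera and image-processing pipeline.

```python
def mirror_image(image):
    """Flip an image left to right, as a mirror would."""
    return [list(reversed(row)) for row in image]


def similarity(image, template):
    """Fraction of pixels that agree between two equal-sized binary images."""
    pairs = [(a, b)
             for row_a, row_b in zip(image, template)
             for a, b in zip(row_a, row_b)]
    return sum(a == b for a, b in pairs) / len(pairs)


# Hypothetical stored picture of the robot itself (3x3 binary pixels).
SELF_TEMPLATE = [[0, 1, 1],
                 [0, 1, 0],
                 [1, 1, 0]]


def thats_me(camera_image, threshold=0.9):
    # Compare the camera view with the mirrored self-picture; nothing more.
    return similarity(camera_image, mirror_image(SELF_TEMPLATE)) >= threshold


in_front_of_mirror = mirror_image(SELF_TEMPLATE)
something_else = [[1, 0, 0],
                  [1, 0, 1],
                  [0, 0, 1]]
print(thats_me(in_front_of_mirror))  # True
print(thats_me(something_else))      # False
```

The robot in this sketch would signal "that's me!" in front of a mirror, yet the mechanism is nothing more than a template comparison.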

So where does this leave us? It seems to me that self-awareness is, like intelligence, not one thing that animals or robots have more or less of. And it follows, again like intelligence, that there cannot be one test for self-awareness, either at the minimal or the sentient ends of the self-awareness spectrum.

Related posts:

Machine Intelligence: fake or real?

How Intelligent are Intelligent Robots?

Could a robot have feelings?

* In the comments below Andrey Pozhogin asks the question: What are the benefits of being a self-aware robot? Will it do its job better for selfish reasons?

A minimal level of self-awareness, illustrated by my example of a robot able to sense its own battery level and stop what it’s doing to go and find a recharging station when the battery level drops below a certain level, has obvious utility. But what about higher levels of self-awareness? A robot that is able to sense that parts of itself are failing and either adapt its behaviour to compensate, or fail safely, is clearly a robot we’re likely to trust more than a robot with no such internal fault detection. In short, it’s a safer robot because of this self-awareness.

But these robots, able to respond appropriately to internal changes (to battery level, or faults) are still essentially reactive. A higher level of artificial self-awareness can be achieved by providing a robot with an internal model of itself. Having an internal model (which mirrors the status of the real robot as self-sensed, i.e. it’s a continuously updating self-model) allows a level of predictive control. By running its self-model inside a simulation of its environment the robot can then try out different actions and test the likely outcomes of alternative actions. (As an aside, this robot would be a Popperian creature of Dennett’s Tower of Generate and Test – see my blog post here.) By assessing the outcomes of each possible action for its safety the robot would be able to choose the action most likely to be the safest. A self-model represents, I think, a higher level of self-awareness with significant potential for greater safety and trustworthiness in autonomous robots.
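The generate-and-test loop described above can be sketched in a few lines of Python. This is only a toy under stated assumptions: the one-dimensional corridor, the `HAZARDS` set, the `MOVE_COST` battery drain and the distance-based `safety` score are all invented for illustration, and a real robot would need a far richer self-model and simulator.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class SelfModel:
    """Hypothetical self-model, kept in step with the robot's self-sensing."""
    position: int    # cell index along a 1-D corridor
    battery: float   # fraction of full charge


# Assumed toy model of the environment and of the robot's actions.
HAZARDS = {3}                                  # cells the robot must avoid
ACTIONS = {"left": -1, "stay": 0, "right": 1}  # change in position per action
MOVE_COST = 0.1                                # assumed battery cost of a move


def simulate(model: SelfModel, action: str) -> SelfModel:
    """Predict the robot's state after an action, without acting for real."""
    step = ACTIONS[action]
    cost = MOVE_COST if step else 0.0
    return replace(model,
                   position=model.position + step,
                   battery=model.battery - cost)


def safety(model: SelfModel) -> float:
    """Score a predicted state: 0 if unsafe, else distance to nearest hazard."""
    if model.position in HAZARDS or model.battery <= 0.0:
        return 0.0
    return float(min(abs(model.position - h) for h in HAZARDS))


def choose_safest_action(model: SelfModel) -> str:
    # Generate every candidate action, test it in simulation, keep the safest.
    return max(ACTIONS, key=lambda action: safety(simulate(model, action)))


print(choose_safest_action(SelfModel(position=2, battery=1.0)))   # left
print(choose_safest_action(SelfModel(position=2, battery=0.05)))  # stay
```

With a nearly flat battery the same robot prefers to stay put, because its self-model predicts that moving away from the hazard would leave it stranded. That choice depends on the robot's own internal state, not only on the world outside it.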

To answer the second part of Andrey’s question, the robot would do its job better, not for selfish reasons – but for self-aware reasons.

(postscript added 4 July 2012)

tags: Alan Winfield, EU perspectives