Robohub.org

See and feel virtual water with this immersive crossmodal perception system from Solidray

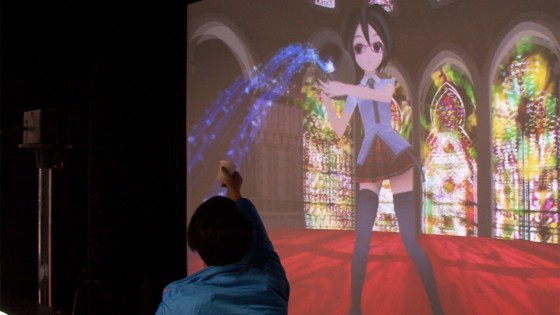

Solidray, a virtual reality production company, has released an immersive crossmodal system that combines visual and tactile feedback, letting the user see and feel flowing water in a virtual space.

“When you put on the 3D glasses, the scene appears to be coming towards you. You’re looking at a virtual world created in the computer. The most important thing is, things appear life-sized, so the female character appears life-sized before the user’s eyes. So, it looks as if she is really in front of you. Also, water is flowing out of the 3D scene. When the user takes a cup, and places it against the water, vibration is transmitted to the cup, making it feel as if water is pouring into the cup.”

The glasses have a magnetic sensor, which precisely measures the user’s line of sight in 3D. This enables the system to dynamically change the viewpoint in 3D, in line with the viewing position, so the user can look into the scene from all directions.
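The article does not say how Solidray renders the tracked view. One common way to make a fixed screen behave like a window onto a scene is an off-axis (asymmetric) projection recomputed from the tracked eye position each frame. The sketch below is a minimal illustration under that assumption; the function name, the `Frustum` type, and the coordinate convention (screen centred at the origin in the z = 0 plane, viewer at z > 0, units in metres) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Frustum:
    """Extents of the view frustum on the near plane."""
    left: float
    right: float
    bottom: float
    top: float
    near: float


def off_axis_frustum(eye, screen_w, screen_h, near=0.1):
    """Return the asymmetric frustum for an eye looking at a fixed screen.

    Assumed setup (not from the article): the screen is a rectangle of
    size screen_w x screen_h centred at the origin in the z = 0 plane,
    and `eye` is the tracked (x, y, z) position with z > 0.
    """
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("eye must be in front of the screen (z > 0)")
    # Project the screen edges, as seen from the eye, onto the near plane.
    scale = near / ez
    return Frustum(
        left=(-screen_w / 2 - ex) * scale,
        right=(screen_w / 2 - ex) * scale,
        bottom=(-screen_h / 2 - ey) * scale,
        top=(screen_h / 2 - ey) * scale,
        near=near,
    )


# A viewer standing 1 m back and 0.5 m to the right of centre.
frustum = off_axis_frustum((0.5, 0.0, 1.0), screen_w=2.0, screen_h=1.0)
print(frustum)
```

When the eye is centred the frustum is symmetric; as the viewer moves sideways it skews in the opposite direction, which is what lets the scene appear fixed in space while the user looks around it.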

The tactile element uses the TECHTILE toolkit, a haptic recording and playback tool developed by a research group at Keio University. The sensation of water being poured is recorded using a microphone in advance, and when the position of the cup overlaps the parabolic line of the water, the sensation is reproduced. The position of the cup is measured using an infrared camera.
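The trigger described above, playing the recorded sensation while the cup overlaps the water's parabolic path, can be sketched roughly as follows. This is a simplified 2D illustration, not Solidray's implementation and not the TECHTILE toolkit's API: the `WaterStream` and `HapticPlayback` classes, the parameter values, and the assumption that the water leaves the spout horizontally are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class WaterStream:
    """Water leaving a spout horizontally and falling under gravity."""
    spout_x: float        # metres
    spout_y: float        # metres
    speed_x: float        # horizontal speed in m/s
    gravity: float = 9.81

    def x_at_height(self, y):
        """Horizontal position where the stream passes height y, or None."""
        drop = self.spout_y - y
        if drop < 0:
            return None  # that height is above the spout
        fall_time = math.sqrt(2 * drop / self.gravity)
        return self.spout_x + self.speed_x * fall_time


def cup_in_stream(stream, cup_x, cup_y, cup_radius):
    """True if the stream crosses the cup's rim height inside the opening."""
    hit_x = stream.x_at_height(cup_y)
    return hit_x is not None and abs(hit_x - cup_x) <= cup_radius


class HapticPlayback:
    """Tracks whether the pre-recorded vibration should be playing."""

    def __init__(self):
        self.playing = False

    def update(self, overlapping):
        """Return the action to take for this tracking frame."""
        if overlapping and not self.playing:
            self.playing = True
            return "start"
        if not overlapping and self.playing:
            self.playing = False
            return "stop"
        return "hold" if self.playing else "idle"


# Example frame: the cup rim is 0.5 m below the spout.
stream = WaterStream(spout_x=0.0, spout_y=1.0, speed_x=0.5)
playback = HapticPlayback()
overlapping = cup_in_stream(stream, cup_x=0.16, cup_y=0.5, cup_radius=0.04)
print(playback.update(overlapping))
```

In a real system the cup position would come from the infrared camera on every frame, and the "start" and "stop" actions would drive the vibration actuator attached to the cup.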

“Here, we’ve added tactile as well as visual sensations. Taking things that far makes other sensations arise in the brain. You can really feel that you’ve gone into a virtual space. All we’re doing is making the cup vibrate, but some users even say it feels cold or heavy.”

“We’re researching how to make users feel sensations that aren’t being delivered. We’d like to use that in promotions. For example, this system uses a cute character. Cute characters are said to be two-dimensional, but they can become three-dimensional. We think it’s more fun to look at a life-sized character than a little figure. So, we think business utilizing that may emerge.”
