Robohub.org

Tricking LIDAR and robocars

image: Steve Jurvetson/flickr

Security researcher Jonathan Petit claims to be able to hack the multi-thousand-dollar laser ranging – LIDAR – systems that self-driving cars rely on. How does he do it and is it as dangerous as it sounds?

Much has been made of Petit’s recent disclosure of an attack on some of the LIDAR systems used in robocars. I saw his presentation on this in July, but he asked me for confidentiality until his team released their paper in October. Now that he has decided to disclose it early, there’s been a lot of press, mixing truth with misconceptions.

There are many security aspects to robocars. By far the greatest concern would be compromise of the control computers by malicious software, and great efforts will be taken to prevent that. Many of those efforts will involve having the cars not talk to any untrusted sources of code or data that might be malicious. The car’s sensors, however, must take in information from outside the vehicle, so they are another source of concern.

There are ways to compromise many of the sensors on a robocar. GPS can be easily spoofed and there are tools out there to do that (fortunately real robocars will only use GPS as one source to gauge their location). Radar is also very easy to compromise — far easier than LIDAR, agrees Petit — but his team’s goal was to see if LIDAR is vulnerable.

The attack was a real one, but not a particularly frightening one, in spite of the press it received. This type of attack may cause a well designed vehicle to believe there are “ghost” objects that don’t actually exist, so that it might brake for something that’s not there or even swerve around it. It might also overwhelm the sensor, so that it feels the sensor has failed and thus the car would go into failure mode, stopping or pulling off the road. This is not a good thing, of course, and it has some safety consequences, but it’s also fairly unlikely to happen. Essentially, there are far easier ways to do these things that don’t involve LIDAR, so it’s not too likely anybody would want to mount such an attack.

Indeed, to carry out this type of attack you need to be physically present either in front of the car (or perhaps to the side of the road) and you need a solid object that’s already in front of the car, such as the back of a truck that it’s following. This is a higher bar than with an attack that might be done remotely (such as a computer intrusion) or via a radio signal (such as with hypothetical vehicle-to-vehicle radio, should cars decide to use that tech).

Here’s how it works: LIDAR sends out a very short pulse of laser light and then waits for it to reflect back. The pulse is a small dot and the reflection is seen through a lens aimed tightly at the place it was sent to. The time it takes for the light to come back tells you how far away the target is and the brightness tells you how reflective it is, like a black-and-white photo.
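The time-of-flight arithmetic is simple enough to sketch in a few lines of Python. The function names are my own, purely for illustration; the only thing assumed is that the pulse travels out and back at the speed of light, so the one-way distance is half the round trip.

```python
C = 299_792_458.0  # speed of light in m/s


def range_from_round_trip(t_seconds: float) -> float:
    """Distance to the target, given the pulse's round-trip time."""
    return C * t_seconds / 2.0


def round_trip_from_range(distance_m: float) -> float:
    """Round-trip time for a pulse reflecting off a target at distance_m."""
    return 2.0 * distance_m / C


# One microsecond of round trip is about 150 m of range;
# one nanosecond is about 15 cm.
print(range_from_round_trip(1e-6))
print(range_from_round_trip(1e-9))
```

That 15 cm per nanosecond figure is why timing precision matters so much in everything that follows.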

To fool LIDAR, you must send another pulse that comes from, or appears to come from, the target spot, and it has to come in at just the right time, before the real pulse from what’s really in front of the LIDAR does.

The attack requires knowing the characteristics of the target LIDAR very well. You must know exactly when it is going to send its pulses before it does so, and thus precisely (to the nanosecond) when a return reflection will arrive from a hypothetical object in front of it. Many LIDARs are quite predictable. They scan a scene with a rotating drum and you can see the pulses coming out and know when they will be sent.

In the simplest version of the attack, the LIDAR is scanning something like a wall in front of it. There aren’t such walls on a highway, but there are things like signs, bridges and the backs of trucks and some cars.

The attack laser sends a pulse of light at the wall or other object, but does it so that it will hit the wall in the right place, earlier than the real pulse from the target LIDAR. This pulse will then bounce back, go in the lens of the LIDAR and make it appear that there is something closer than the wall.

The attack pulse does not have to be bright, but it needs to be similar to the pulse from the LIDAR so that the reflection looks the same. The attack pulse must also be at the very same wavelength and launched at just the right time, to the nanosecond.
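To see why the attacker has to predict the LIDAR’s pulses rather than simply react to them, here is a rough sketch of the timing geometry. It assumes an idealized setup that is mine, not the researchers’: everything travels in straight lines, the attack pulse goes from the attacker to the wall and then to the LIDAR’s lens, and the function and parameter names are hypothetical.

```python
C = 299_792_458.0  # speed of light in m/s


def attack_fire_offset(wall_m: float, ghost_m: float, attacker_to_wall_m: float) -> float:
    """Seconds after the LIDAR fires at which the attack pulse must be fired.

    A negative result means the attack pulse has to leave *before*
    the LIDAR's own pulse does.
    """
    if not 0 < ghost_m < wall_m:
        raise ValueError("ghost must sit between the LIDAR and the wall")
    # When an echo from a real object at the ghost range would arrive.
    ghost_arrival = 2.0 * ghost_m / C
    # Flight time of the attack pulse: attacker -> wall -> LIDAR lens.
    attack_flight = (attacker_to_wall_m + wall_m) / C
    return ghost_arrival - attack_flight


offset = attack_fire_offset(wall_m=30.0, ghost_m=20.0, attacker_to_wall_m=30.0)
print(f"fire {offset * 1e9:.1f} ns relative to the LIDAR pulse")
```

With these illustrative numbers the offset is negative, roughly 67 nanoseconds before the LIDAR fires, so an attacker who waits to see the LIDAR’s pulse is already too late.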

If you send out lots of pulses without good timing, you won’t create a fake object, but you can make noise. That noise would blind the LIDAR about the wall in front of it, but it would be very obviously noise. Petit and crew did tests of this type of noise attack, and they were a success.

The fancier type of attack knows the timing of the target LIDAR perfectly and paints a careful series of pulses so it looks like a complex object is present, closer than the wall. Petit’s team were able to do this on a small scale.

This kind of attack can only make the ghost object appear in front of another object, like another vehicle or perhaps a road sign or bridge. It could also be reflected off the road itself. The ghost object, being closer than the road in question, would appear to be higher than the road surface.

There are LIDAR designs which favour the strongest or the last pulse, or even report multiple ones. The latter would probably be able to detect a spoof.

Petit also described an attack from in front of the target LIDAR, such as in a vehicle further along the road. Such an attack, if I recall correctly, was not actually produced but is possible in theory. In this attack, you would shine a laser directly at the LIDAR. This is a much brighter pulse and, in theory, it might shine on the LIDAR’s lens from an angle, but be bright enough to generate reflections inside it that would be mistaken for the otherwise much dimmer return pulse (similar to the bright spots known as “lens flare” in a typical camera shooting close to the sun.) In this case, you could create spots where there is nothing behind the ghost object, though it is unknown just how far an angle from the attack laser (which will appear as a real object) needs to be. The flare pulse would have to be aimed quite precisely to hit the lens, if you want a complex object.

image: Steve Jurvetson/flickr

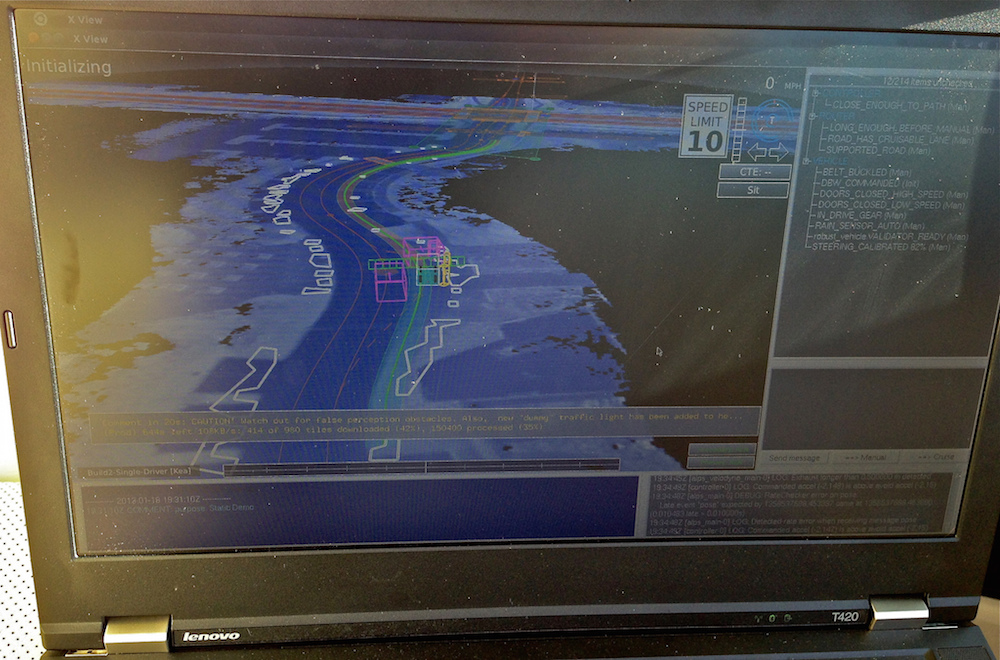

As noted, all this does is create a ghost object, or noise, that temporarily blinds the LIDAR. The researchers also demonstrated a scarier (but less real) “denial of service” attack against the LIDAR processing software. This software’s job is to take the cloud of 3D points returned by the LIDAR and turn it into a list of objects perceived by it. Cars like Google’s, and most others, don’t use the standard software from the manufacturer.

The software they tested here was limited, and could only track a fixed number of objects – around 64, I believe. If there are 65 objects (which is actually quite a lot) it will miss one. This is a far more serious error, and is called a ‘false negative’ — there might be something right in front of you and your LIDAR would see it but the software would discard it and so it would be invisible to you. Thus, you might hit it, if things go badly.

The attack involves creating lots of ghost objects — so many that they overload the software and go over the limit. The researchers demonstrated this but, again, only on fairly basic software that was not designed to be robust. Even software that can only track a fixed number of objects should detect when it has reached its limit and report that, so the system can mark the result as faulty and take appropriate action.
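Reporting that the limit was reached is not hard. The sketch below is a hypothetical tracker interface of my own, not the software the researchers tested; it only shows the idea of returning a saturation flag alongside the object list, with the limit of 64 taken from my recollection above.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Detection = Tuple[float, float]  # hypothetical (x, y) position in metres


@dataclass
class TrackingResult:
    objects: List[Detection]
    saturated: bool  # True when detections were dropped at the limit


def track_objects(detections: Sequence[Detection], max_objects: int = 64) -> TrackingResult:
    """Keep at most max_objects detections and say so when some were dropped."""
    kept = list(detections[:max_objects])
    return TrackingResult(objects=kept, saturated=len(detections) > max_objects)


result = track_objects([(float(i), 0.0) for i in range(65)])
if result.saturated:
    print("tracker at capacity: treat this frame as unreliable")
```

The point of the flag is that a silent false negative becomes a visible fault the rest of the system can react to.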

As noted, most attacks, even with an overwhelming number of ghost objects, would only cause the car to decide to brake for no reason. This is not that hard to do. Humans do this all the time actually, braking by mistake or for lightweight objects like tumbleweeds or animals dashing over the road. You can make a perfectly functioning robocar brake by throwing a ball on the road. You can also blind humans temporarily with a bright flash of light or a cloud of dust or water. This attack is much more high tech and complex but amounts to the same thing.

It is risky to suddenly brake on the road, as the car behind you may be following too closely and hit you. This happens even if you brake for something real. Swerving is a bit more dangerous, but can normally only be done when there is a very high likelihood the path being swerved into is clear. Still, it’s always a good idea to avoid swerving. But again, you would also do this for the low-tech example of a ball thrown onto the road.

It is possible to design a LIDAR to report the distance of the first return it sees (the closest object), or the last, or perhaps the most solid. Some equipment may report the strongest or multiple returns. The Velodyne used by many teams reports only one return.

If a LIDAR reports the first, you can fake objects in front of the background one. More frighteningly, if it reports the last you can fake an object behind the background and possibly hide it (though that would be quite difficult and require you making your fake object very large). If it reports the strongest, you can put your fake object in either place.
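These three policies can be compared with a toy example. The representation of a return as a `(range, intensity)` pair and the function name are assumptions for illustration only; a real sensor’s firmware will look nothing like this.

```python
from typing import List, Optional, Tuple

Return = Tuple[float, float]  # (range in metres, relative intensity)


def select_return(returns: List[Return], mode: str) -> Optional[Return]:
    """Pick the single return a one-return LIDAR would report."""
    if not returns:
        return None
    if mode == "first":
        return min(returns, key=lambda r: r[0])  # closest echo
    if mode == "last":
        return max(returns, key=lambda r: r[0])  # farthest echo
    if mode == "strongest":
        return max(returns, key=lambda r: r[1])  # brightest echo
    raise ValueError(f"unknown mode: {mode}")


wall = (30.0, 0.6)
ghost = (20.0, 0.9)  # spoofed echo, closer and brighter than the wall
for mode in ("first", "last", "strongest"):
    print(mode, select_return([wall, ghost], mode))
```

Here the ghost wins under "first" and "strongest" but loses under "last", which matches the cases described above.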

Because this attack is not that dangerous, makers of LIDARs and software may not bother to protect against it. If they do take action, there are a number of potential fixes:

If their LIDAR is not predictable about when it sends pulses, the attack can only create noise, not coherent ghost objects. The noise will be seen as noise, or an attack, and responded to directly. For example, even a few nanoseconds of random variation in when pulses are sent would cause any attempted ghost object to explode into a cloud of noise.

LIDARs can detect if they get two returns from the same pulse. If they see that over a region, it is likely there is an attack going on, depending on the pattern. Two returns can happen naturally, but this pattern should be quite distinctive.

LIDARs that scan rather than spin have been designed. They could scan in an unpredictable way, again reducing any ghost objects to noise.

In theory, a LIDAR could send out pulses with encoded data and expect to see the same encoded data return. This requires fancier electronics, but would have many side benefits — a LIDAR would never see interference from other LIDARs, for instance, and might even be able to pull out information away from the background illumination (i.e. the sun) with a better signal to noise ratio, meaning more range. However, total power output in the pulses remains fixed so this may not be doable.

Of course, software should be robust against attacks and detect their patterns, should they occur. That’s a relatively cheap and easy thing to do, as it’s just software.
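The first of these fixes, unpredictable pulse timing, is easy to illustrate with a small simulation. This is a sketch under my own simplifying assumptions: the attacker times every fake echo perfectly against the schedule it predicted, the LIDAR fires with a uniformly random offset from that schedule, and the function name and the 5 ns figure are illustrative.

```python
import random

C = 299_792_458.0  # speed of light in m/s


def apparent_ghost_ranges(ghost_m: float, jitter_ns: float, pulses: int = 1000, seed: int = 0):
    """Ranges the LIDAR would measure for a ghost aimed at ghost_m."""
    rng = random.Random(seed)
    ranges = []
    for _ in range(pulses):
        # The LIDAR actually fires `jitter` seconds later than predicted.
        jitter = rng.uniform(-jitter_ns, jitter_ns) * 1e-9
        # The fake echo arrives at the predicted instant, but the LIDAR
        # measures elapsed time from its actual firing time.
        measured_round_trip = 2.0 * ghost_m / C - jitter
        ranges.append(C * measured_round_trip / 2.0)
    return ranges


steady = apparent_ghost_ranges(20.0, jitter_ns=0.0)
jittered = apparent_ghost_ranges(20.0, jitter_ns=5.0)
print("spread without jitter:", max(steady) - min(steady))
print("spread with +/-5 ns jitter:", max(jittered) - min(jittered))
```

Without jitter every fake point lands at the same range and forms a coherent surface. With plus or minus 5 ns the points scatter over as much as a metre and a half, while echoes from real objects, which are timed from the actual firing, stay put.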

This topic brings up another common question about LIDAR: whether they might interfere with one another when every car has one. The answer is that they can interfere, but only minimally. A LIDAR sends out a pulse of light and waits for about a microsecond to see the bounce back (a microsecond means the target is 150 meters away). In order to interfere, another LIDAR (or attack laser) has to shine on the tight spot being looked at with the LIDAR’s return lens during the exact same microsecond. Because any given LIDAR might be sending out a million pulses every second, this will happen, but rarely and mainly in isolated spots. As such, it’s not that hard to tune it out as noise. To our eyes, the world would get painted with lots of laser light on a street full of LIDARs. But, to the LIDARs, which are looking for a small spot to be illuminated for less than a nanosecond during a specific microsecond, the interference is much smaller.

Radar is a different story. Radar today is very low resolution. It’s quite possible for somebody else’s radar beam to bounce off your very wide radar target at a similar time. But auto radars are not like the old “pinging” radars used for aviation; they send out a constantly changing frequency of radiation and compare it with the frequency of the return to figure out how long the signal took to come back. They must also allow for how much that frequency changed due to the Doppler effect. This gives them some advantage against interference, as two radars using this technique should differ in their patterns over time. Attack is the bigger problem, though: it is much easier against radar than against LIDAR, because you don’t need to be nearly so accurate and you can often predict a pattern. Radars that randomize their pattern could, however, be robust against both interference and attack.
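The changing-frequency technique is commonly called FMCW (frequency-modulated continuous wave). As a rough sketch of how it turns a frequency difference into a range, assume a simple linear sweep and a stationary target, so the Doppler shift mentioned above is ignored. The function names and the sweep numbers are illustrative, not taken from any particular automotive radar.

```python
C = 299_792_458.0  # speed of light in m/s


def beat_frequency(range_m: float, bandwidth_hz: float, sweep_s: float) -> float:
    """Difference between transmitted and returned frequency for a static target."""
    slope = bandwidth_hz / sweep_s  # how fast the frequency is swept, in Hz per second
    return slope * (2.0 * range_m / C)  # the sweep advances during the round trip


def range_from_beat(beat_hz: float, bandwidth_hz: float, sweep_s: float) -> float:
    """Invert beat_frequency: recover range from the measured frequency difference."""
    slope = bandwidth_hz / sweep_s
    return C * beat_hz / (2.0 * slope)


fb = beat_frequency(150.0, bandwidth_hz=300e6, sweep_s=1e-3)
print(f"beat frequency: {fb / 1e3:.1f} kHz")
print(f"recovered range: {range_from_beat(fb, 300e6, 1e-3):.1f} m")
```

Because the measurement depends on the sweep pattern, an attacker who can predict that pattern can fake a return, and a radar that randomizes it is much harder to fool.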

This post originally appeared on Robocars.com.
