Robohub.org

Video: Giving robots and prostheses a sense of touch

The UCLA Biomechatronics Lab develops a language of touch that can be “felt” by computers and humans alike.

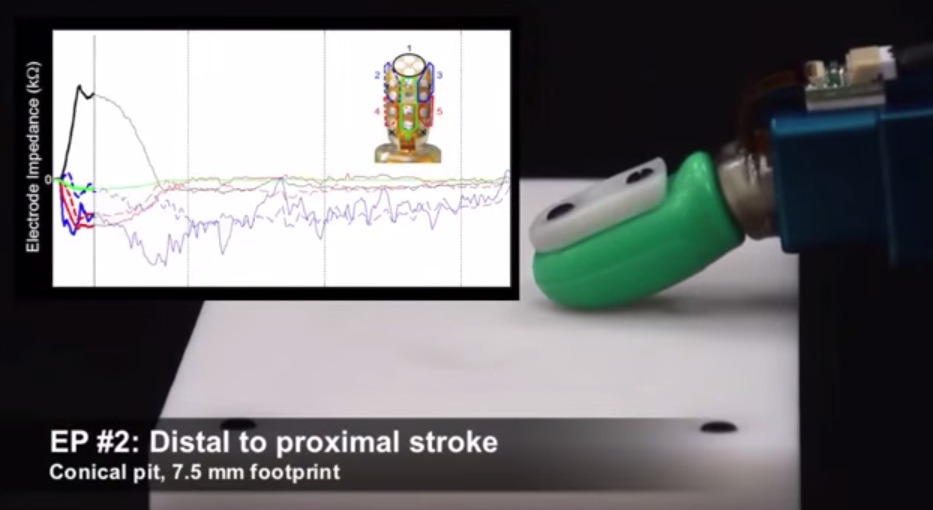

Research engineers and students in the University of California, Los Angeles (UCLA) Biomechatronics Lab are designing artificial limbs to be more sensational, with the emphasis on sensation.

With support from the National Science Foundation (NSF), the team, led by mechanical engineer Veronica J. Santos, is constructing a language of touch that both a computer and a human can understand. The researchers quantify touch using mechanical sensors that interact with objects of various shapes, sizes and textures. Using an array of instrumentation, Santos’ team translates each interaction into data a computer can process.

The data is used to create an algorithm that lets the computer find patterns shared between the items in its library of experiences and an object it has never felt before. This research will help the team develop artificial haptic intelligence, which essentially means giving robots and prostheses the “human touch.”
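As a rough illustration of comparing a new touch reading against a library of past experiences, here is a minimal Python sketch using a nearest-neighbor lookup. It is not the lab's algorithm, which the article does not describe; the feature names (stiffness, roughness, curvature), the object labels, the numeric values and the function `closest_match` are all assumptions made up for this example.

```python
import math

# Hypothetical "library of experiences": object label -> tactile feature vector.
# The features (stiffness, roughness, curvature) and their values are invented
# for illustration; real sensor data would look different.
LIBRARY = {
    "sponge": (0.1, 0.6, 0.2),
    "steel ball": (0.9, 0.1, 0.8),
    "sandpaper": (0.7, 0.9, 0.0),
}


def closest_match(reading, library=LIBRARY):
    """Return (label, distance) for the stored object nearest to the new reading."""
    return min(
        ((label, math.dist(reading, features)) for label, features in library.items()),
        key=lambda pair: pair[1],
    )


# A reading from an object the system has not felt before.
label, distance = closest_match((0.15, 0.55, 0.25))
print(f"Feels most like: {label} (distance {distance:.3f})")
```

A small distance suggests the new object resembles something already in the library, while a large one suggests the object is genuinely unfamiliar.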

tags: Algorithm AI-Cognition, c-Research-Innovation, cx-Health-Medicine, human-robot interaction, robotic prosthetics