Robohub.org

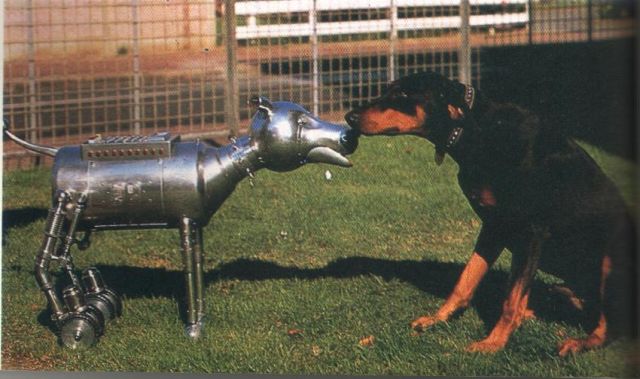

Your robot doggie could really be pleased to see you

There have been several stories in the last few weeks about emotional robots – robots that feel. Some are suggesting that this is the next big thing in robotics. It’s something I wrote about in a blog post seven years ago: could a robot have feelings?

My position on this question has always been pretty straightforward: It’s easy to make robots that behave as if they have feelings, but quite a different matter to make robots that really have feelings.

But now I’m not so sure. There are, I think, two major problems with this apparently clear distinction between ‘as if’ and ‘really have’.

The first is what we mean by ‘really have feelings’. I’m reminded that I once said to a radio interviewer who asked me if a robot could have feelings: if you can tell me what feelings are, I’ll tell you whether a robot can have them or not. Our instinct (feeling, even) is that feelings are something to do with hormones, the messy and complicated chemistry that too often seems to get in the way of our lives. Thinking, on the other hand, we feel to be quite different: the cool, clean process of neurons firing, brains working smoothly. Like computers. Of course this instinct, this dualism, is quite wrong. We now know, for instance, that damage to the emotional centre of the brain can lead to an inability to make decisions. This false dualism has led, I think, to the trope of the cold, calculating, unfeeling robot.

I think there is also some unhelpful biological essentialism at work here. We prefer it to be true that only biological things can have feelings. But which biological things? Single-celled organisms? No, they don’t have feelings. Why not? Because they are too simple. Ah, so only complex biological things have feelings. OK, what about sharks or crocodiles; they’re complex biological things; do they have feelings? Well, basic feelings like hunger, but not sophisticated feelings like love or regret. Ah, mammals then. But which ones? Well, elephants seem to mourn their dead. And dogs, of course. They have a rich spectrum of emotions. OK, but how do we know? Well, because of the way they behave: your dog behaves as if he’s pleased to see you because he really is pleased to see you. And of course they have the same body chemistry as us, and since our feelings are real* so must theirs be.

And this brings me to the second problem: the question of ‘as if’. I’ve written before that when we (roboticists) talk about a robot being intelligent, what we mean is a robot that behaves ‘as if’ it is intelligent. In other words, an intelligent robot is not really intelligent; it is an imitation of intelligence. But for a moment let’s not think about artificial intelligence; instead, think of artificial flight. Aircraft are, in some sense, an imitation of bird flight. And some recent flapping-wing flying robots are clearly a better imitation – a higher-fidelity simulation – than fixed-wing aircraft. But it would be absurd to argue that an aircraft, or a flapping-wing robot, is not really flying. So how do we escape this logical fix? It’s simple. We just have to accept that an artefact, in this case an aircraft or flying robot, is both an emulation of bird flight and really flying. In other words, an artificial thing can be both behaving as if it has some property of natural systems and really demonstrating that property. A robot can be behaving as if it is intelligent and – at the same time – really be intelligent. Are there categories of properties for which this would not be true? Like feelings? I used to think so, but I’ve changed my mind.

I’m now convinced that we could, eventually, build a robot that has feelings. But not by simply programming behaviours so that the robot behaves as if it has feelings. Nor by having to invent some exotic chemistry that emulates biochemical hormonal systems. I think the key is robots with self-models: robots that have simulations of themselves inside themselves. If a robot is capable of internally modelling the consequences of its (or others’) actions on itself, then it seems to me it could demonstrate something akin to regret (about being switched off, for instance). A robot with a self-model has the computational machinery to also model the consequences of actions on conspecifics – other robots. It would have an artificial Theory of Mind and that, I think, is a prerequisite for empathy. Importantly, we would also program the robot to model heterospecifics, in particular humans, because we absolutely require empathic robots to be empathic towards humans (and, I would argue, animals in general).

So, how would this robot have feelings? It would, I believe, have feelings by virtue of being able to model the consequences of actions, both its own and others’, on itself and others. This would lead to it making decisions about how to act and behave, which would demonstrate feelings like regret, guilt, pleasure or even love with an authenticity that would make it impossible to argue that it doesn’t really have feelings. So your robot doggie could really be pleased to see you.

*except when they’re not.

Postscript. A colleague has tweeted that I am confusing feelings and emotion here. Mea culpa. I’m using the word feelings in the everyday, pop-psychology sense of feeling hungry, or tired, or a sense of regret. According to Wikipedia, in psychology the word feelings is ‘usually reserved for the conscious subjective experience of emotion’. The same colleague asserts that what I’ve outlined could lead to artificial empathy, but not artificial emotion (or, presumably, feelings). I’m not sure I understand what emotions are well enough to argue. But I guess the idea I’m really trying to explore here is artificial subjectivity. Surely a robot with artificial subjectivity whose behaviour expresses and reflects that subjective experience could be said to be behaving emotionally?

tags: Alan Winfield, Algorithm AI-Cognition, c-Research-Innovation, EU perspectives