Robohub.org

“A Paro walks into a club…” (or, Notes on the design of automated body language)

This article discusses personality design and how proper natural language interface design includes body language. The article is about the design of hearts and minds for robots. It argues that psychology must be graphically represented, that body language is a means to do that, and points out why this is kind of funny.

Comrades, we live in a bleak and humourless world. Here we are thirteen years into the twenty-first century, and we all carry around Star Trek-style tricorders, we have access to almost all human opinions via this awesome global computer network, we have thousands and thousands of channels we can flip through on television, we have something like 48,000 people signed up to go colonize Mars, and we even have robots roaming around up there, taking samples of that planet. But we still don’t have robots that can tell a good joke.

Even the new bartender robot, Makr Shakr, was ripe for a little humour, like “A robot walks into a bar and asks for a screwdriver…”

But seriously, folks, in my travels I have met Honda’s ASIMO, Aldebaran’s NAO, Toyota’s Partner Robot and many others, and each of these presumably world-class robots was, in the most critical sense of the word, robotic. They were weirdly shaped computers with an appendage. If you give me a choice between a robot that can walk and one that rolls around on wheels but can talk, I’ll take the one that can talk. Simply put, talking is more important than walking. Design priorities in robotics should focus first on the human interface and second on locomotion.

We live at a moment in which natural language interface is replacing the GUI just as the GUI replaced the command line.

Natural language interface development is being led by Google and Apple, with some strong work being done by IBM. Last week Google’s Chrome browser appeared with a little microphone just to the right of the input bar, and Google Now has been out for a year or so. Apple has had Siri, of course, and is now rumoured to be building a wristwatch with a natural language interface, probably building on the lessons learned from Siri.

Natural language interface makes sense anywhere there is big data or little screen. It makes sense for robots for these same reasons. It provides access to more data and reduces the need for a screen. But most of all, building a natural language interface also gives the robot personality. And this makes the robot more valuable to the human because it turns a robot into an emotion machine.

Giving a robot a personality includes two primary components. First is psychology.

Last month, in The Uncanny Valet, I wrote a bit about the design of psychology, specifically for android-style robots. That piece wasn’t so much about human-shaped walking-talking robots (androids) as it was an effort to point out that human interaction seeks human likeness. For us to bond emotionally with a robot, it needs to be like us. The more it is like us, the more we can bond with it. This matters most in the field of automated psychology. This isn’t a new field. If you don’t believe me, dial an American 800 number. Those horrid little automated voices that corporations use so widely these days are reproducing faster than feral rabbits (may we name them robbits?). I would love statistics on how many automated answering systems are out there, but I would boldly bet that there are more software robots on the market today than hardware robots. What I can tell you is that their number is increasing and their quality is improving.

Each month we see advances in what amounts to software robots, or virtual knowledge workers. Last week Infosys (a Bangalore-based provider of IT services) announced it is working with IPsoft (a New York-based provider of autonomic IT services) to build autonomous tools designed for help desks, operations, and other management needs. An article about the new partnership notes, “IPSoft has focused on autonomics for IT services, and Slaby estimates in his report that nearly all Level 1 support issues can be solved by IPsoft’s ‘virtual engineers’.”

We are past the primitive dawn of automated psychology. It is becoming a branch of the robotics industry.

As graphic design for the GUI was to software design for the personal computer industry, personality design for natural language is to the software of the personal robotics industry.

At Geppetto Labs we build conversational systems. We take the natural language input from a user, pipe it through an analytics system that measures affective, syntactic, and semantic values, and then feed the resulting data into an NLP system. We author personalities with simple server-side tools that script what non-technical authors (generally film screenwriters) have written into formatted rulesets. We also bend ChatScript and other tools toward non-personality behaviour, such as enhancing Amazon search with natural language understanding, or using specific phrases or moments to trigger specific animations. After that we stir in some scraping, automated ontology creation, and even tools that automate first-person interviews and film scripts, to craft a personality that can be managed. This is the foundation for natural language interface, at least as we do it.
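The pipeline described above (analyze the input for affect and structure, then match author-written rules that can also fire animations) can be sketched in a few lines. This is a minimal, hypothetical stand-in, not Geppetto Labs’ system and not ChatScript syntax: the affect lexicon, the `Rule` fields, and animation names such as `wave` are assumptions made for illustration.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical toy affect lexicon: word -> score in [-1, 1].
AFFECT_LEXICON = {"love": 1.0, "great": 0.8, "thanks": 0.5,
                  "hate": -1.0, "awful": -0.8, "boring": -0.6}


@dataclass
class Analysis:
    text: str
    tokens: List[str]
    affect: float       # mean score of the words found in the lexicon (0.0 if none)
    is_question: bool   # a crude syntactic feature


@dataclass
class Rule:
    pattern: str                     # regex matched against the lowercased input
    response: str                    # line written by a (non-technical) author
    animation: Optional[str] = None  # hypothetical animation clip fired with the line
    min_affect: float = -1.0         # rule only fires if the user's affect is at least this


def analyze(text: str) -> Analysis:
    """Analytics stage: tokenize and score affect before any rule matching."""
    tokens = re.findall(r"[a-z']+", text.lower())
    scores = [AFFECT_LEXICON[t] for t in tokens if t in AFFECT_LEXICON]
    affect = sum(scores) / len(scores) if scores else 0.0
    return Analysis(text, tokens, affect, text.strip().endswith("?"))


def respond(analysis: Analysis, rules: List[Rule]) -> Tuple[str, Optional[str]]:
    """Rule stage: return (response, animation) from the first matching rule."""
    lowered = analysis.text.lower()
    for rule in rules:
        if re.search(rule.pattern, lowered) and analysis.affect >= rule.min_affect:
            return rule.response, rule.animation
    return "Tell me more.", None  # fallback when no authored rule applies


RULES = [
    Rule(r"\b(hi|hello)\b", "Hi there!", animation="wave"),
    Rule(r"\bjokes?\b", "A robot walks into a bar...", animation="lean_in", min_affect=0.0),
    Rule(r"\bjokes?\b", "Tough crowd. Maybe later.", animation="shrug"),
]
```

For example, `respond(analyze("Hello robot"), RULES)` returns `("Hi there!", "wave")`, while “I hate jokes” scores negative affect, skips the upbeat joke rule, and falls through to the `shrug` line. Ordering rules from most to least specific is one simple way to let affect change the response without the author writing any code.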

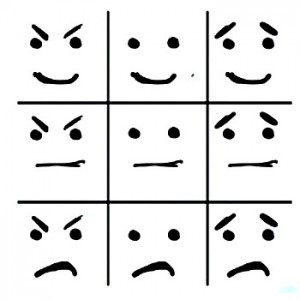

And there’s an entire second level to natural language interfaces that has nothing to do with text. In more advanced projects, we at Geppetto Labs need to add animation that fires when the NL output does. The simplest example I can think of is the word “Hi” tied to a raised hand. Hand gestures are easy and clear, but they are only one part of the body language we build. Body language might also be a facial expression, the position of the arms, or the tilt of the head. What this means is that we have to think not only about the psychology of the system but also about how to tie it to the activation of non-verbal forms of communication. We have to walk the talk. That means we have to design body language into the personality. It is linked with the psychology and frames the text.
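Tying a gesture to a word, as in the “Hi” example, amounts to building a timeline of animation cues alongside the spoken line. The sketch below assumes a fixed speaking rate and a hypothetical gesture vocabulary (`raise_hand`, `nod`, and so on); it is an illustration of the idea, not a description of any particular engine.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical gesture vocabulary: trigger word -> animation clip name.
GESTURES: Dict[str, str] = {
    "hi": "raise_hand", "hello": "raise_hand",
    "yes": "nod", "no": "head_shake", "you": "open_palm",
}

# Assumed average speaking rate: 0.4 s per word is about 150 words per minute.
SECONDS_PER_WORD = 0.4


def schedule_gestures(utterance: str,
                      gestures: Dict[str, str] = GESTURES,
                      seconds_per_word: float = SECONDS_PER_WORD) -> List[Tuple[float, str]]:
    """Return (start_time_s, clip) pairs so each gesture starts with its trigger word."""
    words = re.findall(r"[a-z']+", utterance.lower())
    timeline = []
    for index, word in enumerate(words):
        clip = gestures.get(word)
        if clip is not None:
            timeline.append((round(index * seconds_per_word, 2), clip))
    return timeline


if __name__ == "__main__":
    # "Hi" is word 0 and "you" is word 2, so the clips start at 0.0 s and 0.8 s.
    print(schedule_gestures("Hi! Did you sleep well?"))
```

Where the text-to-speech engine can report word boundaries (for example through SSML `<mark>` elements), those timestamps could replace the fixed-rate estimate without changing the rest of the timeline logic.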

Psychology needs to be graphically represented, just like a book needs a cover, and body language is the best means of achieving that. Well, at least that’s where evolution has taken us.

Body language coordinates the psychology of the personality with the visual presentation. Coordinating what is said with what is done is core to the personality; this very coordination might be what we commonly call personality. If you don’t believe me, consider this: watch television for a day and note what the “personalities” (newscasters, politicians, actors, singers, and dancers) do. They accompany their words with the broadest-stroke gestures and body language humans convey.

This is to say that body language may be more important to natural language interface than the words used.

Many famous studies claim that body language accounts for the majority of our communication (the most famous is probably Dr. Albert Mehrabian’s 7-38-55 rule: 7% words, 38% tone of voice, 55% facial expression and body language, a split Mehrabian himself applied only to the communication of feelings and attitudes). Or, more precisely, that the words we use transmit the minority of what we communicate. So how we move, and what we visually communicate while we verbally blather on, is pretty key to how others understand us.

Consider the eyes. We blink more when we’re stressed out. Blink rate has been linked to dopamine levels, and to either pain or creativity in the person we’re talking with. The timing of a blink can mark the punchline of a joke, a response to that joke, confusion at the punchline, or frustration at how unfunny it is. Eyes pinched and held tight can mean something else entirely. We don’t like to hold eye contact too long or it starts to feel threatening. The person who is listening should initiate eye contact, and if they don’t, it might mean they just don’t care what you’re talking about. But don’t break it too soon, or the person listening will feel as if they’re not important. Don’t look at the ceiling when you talk (or you might come off as a snob) and don’t look at the floor, either (or you might come off as a bummer).

If you’re talking with someone and they look up and to your right, it generally means they’re remembering something visually. If they look up and to your left, it means they’re visually creating something. Looking horizontally while talking indicates the person is most likely accessing auditory cues: to your right is, again, remembering, and to your left is, again, creating. And if they look down, maybe they’re depressed. Or maybe they’re just looking at the carpet. Or maybe they’re remembering how something feels. That applies to most right-handed people around the world (according to Richard Bandler, John Grinder, and scads of other proponents of neuro-linguistic programming), but the point is that there’s a lot happening when we speak with someone. A lot more than words.
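For a system that reads body language, the gaze readings described above could be encoded as a lookup table. This sketch covers only those readings, keyed in the observer’s frame (“your” right or left), and treats them as the neuro-linguistic programming folk model they are rather than as validated measurements; the constant and function names are hypothetical.

```python
# Readings from the text for most right-handed people, keyed by the direction
# of the glance as seen by the observer ("your" right or left).
OBSERVER_FRAME_CUES = {
    ("up", "right"): "remembering visually",
    ("up", "left"): "creating visually",
    ("level", "right"): "remembering sounds",
    ("level", "left"): "creating sounds",
}

# The text gives no left/right distinction for looking down, only possibilities.
DOWN_READING = "ambiguous: low mood, the carpet, or recalling a feeling"


def mirror(side: str) -> str:
    """Flip left/right to convert between the speaker's and the observer's frame."""
    return {"left": "right", "right": "left"}[side]


def read_gaze(vertical: str, side: str, *, speaker_frame: bool = False,
              right_handed: bool = True) -> str:
    """Map a tracked glance to the folk reading described in the text.

    vertical is "up", "level" or "down"; side is "left" or "right".
    Set speaker_frame=True if the tracker reports directions from the
    speaker's point of view rather than the observer's.
    """
    if not right_handed:
        return "no reading (the pattern is described for right-handed people)"
    if vertical == "down":
        return DOWN_READING
    if speaker_frame:
        side = mirror(side)
    return OBSERVER_FRAME_CUES[(vertical, side)]
```

The `mirror` step matters in practice: a camera-based tracker may report the speaker’s own left and right, which is the reverse of the observer-frame directions the text uses.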

But the eyes do this while other things are going on. The eyebrows and cheeks are wiggling, the mouth opening and closing, the head is tilting and nodding, the shoulders are going up and down, the arms are waving around, the hands are flapping … and that’s all above the belt-line.

Please remember that human interaction seeks human likeness, so the body language of the robot needs to mirror the body language of the conversant. Think back to one of the early successful chatbots, ELIZA. This system was designed simply to mirror what a person said to it and reflect that back, much as a Rogerian psychotherapist would. Several people evidently fell in love with the system.
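ELIZA’s mirroring worked largely by matching a pattern in the user’s sentence and reflecting its pronouns back. The sketch below reproduces that idea in miniature; the two patterns and the pronoun table are illustrative assumptions, not Weizenbaum’s original script.

```python
import re

# Pronoun swaps in the spirit of ELIZA's reflection step (an illustrative subset).
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are", "i'm": "you're",
    "you": "I", "your": "my", "you're": "I'm", "myself": "yourself",
}


def reflect(fragment: str) -> str:
    """Swap first- and second-person words so a statement can be echoed back."""
    words = re.findall(r"[\w']+", fragment.lower())
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def eliza_reply(statement: str) -> str:
    """Mirror a statement back as a question, Rogerian-therapist style."""
    match = re.match(r"\s*i feel (.*)", statement, re.IGNORECASE)
    if match:
        return f"Why do you feel {reflect(match.group(1))}?"
    match = re.match(r"\s*i am (.*)", statement, re.IGNORECASE)
    if match:
        return f"How long have you been {reflect(match.group(1))}?"
    return f"You say {reflect(statement)}?"  # fallback: echo the whole statement
```

For instance, `eliza_reply("I feel ignored by my robot")` returns “Why do you feel ignored by your robot?”. The system understands nothing; the sense of being listened to comes almost entirely from the mirroring.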


The corollary is that a well-designed natural language system will move like we do.
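Mirroring can be carried over from words to posture. The sketch below assumes a tracker that delivers head and torso angles at a fixed frame rate, and echoes the conversant’s pose back as a delayed, damped mirror image; `Pose`, `PostureMirror`, and the default delay and gain are hypothetical choices, not a published method.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    head_roll: float   # degrees; positive = tilt toward the body's own right
    head_pitch: float  # degrees; positive = nod or lean forward
    torso_lean: float  # degrees; positive = lean toward the other person


NEUTRAL = Pose(0.0, 0.0, 0.0)


class PostureMirror:
    """Echo a conversant's pose back as a delayed, damped mirror image.

    delay_frames and gain are hypothetical tuning values: the delay keeps the
    echo from reading as instant copying, and a gain below 1 keeps it subtle.
    """

    def __init__(self, delay_frames: int = 60, gain: float = 0.5):
        self.gain = gain
        # Holds the current frame plus delay_frames of history.
        self.history = deque(maxlen=delay_frames + 1)

    def update(self, observed: Pose) -> Pose:
        self.history.append(observed)
        if len(self.history) < self.history.maxlen:
            return NEUTRAL  # not enough history yet, so hold a neutral pose
        past = self.history[0]  # the pose observed delay_frames ago
        return Pose(
            head_roll=-self.gain * past.head_roll,  # a mirror image flips side-to-side tilt
            head_pitch=self.gain * past.head_pitch,
            torso_lean=self.gain * past.torso_lean,
        )
```

At an assumed 30 frames per second, the default delay of 60 frames is two seconds. Whether a robot should mirror (flip left and right, as above) or mimic (copy directly) is a design choice worth testing with real users.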

Today, we have a new graphical version of that, designed to note and reflect the body language of the conversant. Its name is Ellie.

A webcam and a game controller track eye direction, facial recognition software charts expressions, and body positions are charted the same way. The system is still in its early days and is being trained in real time; that training goes toward mapping the video input to the system’s output, much as NLP systems such as Siri and Watson were designed (and exactly as we do our work at Geppetto Labs). It’s being trained on how to read people.
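Learning to read people can be illustrated, very roughly, with a nearest-centroid classifier over features a face and body tracker might emit. Nothing here describes how Ellie actually works: the three features, the labels, and the training values are made up for illustration.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

# Made-up training examples for illustration only. Each feature vector is a
# hypothetical (smile_intensity, brow_raise, gaze_aversion), scaled to 0..1.
EXAMPLES: List[Tuple[Tuple[float, float, float], str]] = [
    ((0.9, 0.3, 0.1), "engaged"),
    ((0.8, 0.4, 0.2), "engaged"),
    ((0.1, 0.1, 0.8), "withdrawn"),
    ((0.2, 0.0, 0.9), "withdrawn"),
]


def train_centroids(examples) -> Dict[str, List[float]]:
    """Average the feature vectors for each label (a nearest-centroid model)."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = defaultdict(int)
    for features, label in examples:
        totals = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            totals[i] += value
        counts[label] += 1
    return {label: [t / counts[label] for t in totals] for label, totals in sums.items()}


def classify(centroids: Dict[str, List[float]], features: Sequence[float]) -> str:
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))
```

With these toy values, `classify(train_centroids(EXAMPLES), (0.7, 0.2, 0.3))` returns `"engaged"`. Because each centroid is just a running average, a model like this can be updated cheaply as newly labelled frames arrive, which is one simple reading of “trained in real time.”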

But there’s a missing piece here, one I would guess the good Dr. Skip Rizzo is already considering: generating responsive movement in real time.

How the character moves, how the robot moves, is a key component. Just as natural language has the component parts of understanding and generation (NLU and NLG, respectively), body language has the same input and output cues. At Geppetto Labs we have this running in several characters we’ve been working on, using a process I’ll outline next month.

A robot walks into a bar and asks for a screwdriver, and the bartender says, “What is this, some kinda joke?” Personality, like a joke, synchronizes words and body language, linking them together and leaving us. “A Paro walks into a club” is about as bad as I get, and I promise I will not repeat the joke, but I bring it up as an icon of how to build personality: combine the mind and the body.

Natural language interface, when properly designed, is far more than just text.

tags: AI, Design, Geppetto Labs, Mark Stephen Meadows, Natural Language Processing, Personality Design, robot, Uncanny Valley, virtual robot