Robohub.org

Mapping in the Cloud

UPDATE: New video of a collaborative, cloud-based mapping experiment.

Mapping is essential for mobile robots and a cornerstone of many other robotics applications that require a robot to interact with its physical environment. It is widely considered the most difficult perceptual problem in robotics, from both an algorithmic and a computational perspective. Mapping essentially requires solving a huge optimization problem over a large number of images and the features extracted from them. This requires beefy computers and high-end graphics cards – resulting in power-hungry and expensive robots.
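To make the "huge optimization problem" a little more concrete, here is a toy sketch of pose-graph optimization, a common way of formulating the back end of a mapping system. It is heavily simplified and is not Rapyuta's implementation: poses are assumed to be 1D positions, every constraint is assumed to be a linear relative measurement, and the function name `optimize_pose_graph` is hypothetical. Real systems estimate full 3D poses from image features with nonlinear solvers.

```python
def optimize_pose_graph(num_poses, edges):
    """Least-squares fit of 1D poses to relative measurements (toy example).

    edges: list of (i, j, z, w) meaning "x_j - x_i was measured as z, with weight w".
    Pose 0 is anchored at 0.0 to remove the global offset (gauge freedom).
    Returns the optimized positions x_0 .. x_{num_poses-1}.
    """
    n = num_poses - 1  # unknowns: x_1 .. x_{num_poses-1}
    H = [[0.0] * n for _ in range(n)]  # normal matrix J^T W J
    g = [0.0] * n                      # right-hand side J^T W z
    for i, j, z, w in edges:
        # Residual x_j - x_i - z has derivative -1 w.r.t. x_i and +1 w.r.t. x_j.
        terms = [(i, -1.0), (j, 1.0)]
        for a, sa in terms:
            if a == 0:
                continue  # the anchored pose is not a variable
            g[a - 1] += w * sa * z
            for c, sc in terms:
                if c != 0:
                    H[a - 1][c - 1] += w * sa * sc

    # Solve H x = g by Gaussian elimination with partial pivoting.
    m = [row + [rhs] for row, rhs in zip(H, g)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return [0.0] + x


# Four odometry steps of 1.0 each, plus a second measurement of the total
# displacement x_4 - x_0 = 3.6 that plays the role of a loop closure.
edges = [(k, k + 1, 1.0, 1.0) for k in range(4)] + [(0, 4, 3.6, 1.0)]
print([round(p, 2) for p in optimize_pose_graph(5, edges)])
# -> [0.0, 0.92, 1.84, 2.76, 3.68]
```

Odometry says the robot moved 4.0 units while the loop-closure measurement says 3.6; least squares spreads the 0.4 discrepancy evenly over all five constraints. Scaling this up to full 3D poses and thousands of image-based constraints is what makes mapping so computationally demanding.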

Update

Here is a video of a collaborative mapping experiment using Rapyuta, the open source cloud robotics framework. Instead of just compressing each frame of the stream, we now run a dense visual odometry algorithm to produce keyframes, and these keyframes are then sent to the cloud for optimization. This is a very natural form of compression, since keyframes are produced only when the robot has moved a certain distance or turned by a certain angle. In addition to optimization, the cloud now also merges the maps produced by multiple robots. The code is open source under the Apache 2.0 license at https://github.com/rapyuta/rapyuta-mapping.
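The keyframe rule described above (a new keyframe once the robot has moved far enough or turned far enough) can be sketched as follows. This is a minimal illustration, not the rapyuta-mapping code: it assumes planar 2D poses coming from some odometry source, and the class name `KeyframeSelector` and its default thresholds (0.3 m, 15°) are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float      # metres
    y: float      # metres
    theta: float  # heading in radians


def angle_diff(a, b):
    """Smallest signed angle a - b, wrapped to [-pi, pi)."""
    return (a - b + math.pi) % (2 * math.pi) - math.pi


class KeyframeSelector:
    """Flag a frame as a keyframe once the robot has moved or turned enough.

    The default thresholds are hypothetical, not values used by Rapyuta.
    """

    def __init__(self, min_distance=0.3, min_angle=math.radians(15)):
        self.min_distance = min_distance
        self.min_angle = min_angle
        self.last_keyframe = None

    def is_keyframe(self, pose):
        if self.last_keyframe is None:
            self.last_keyframe = pose  # the first frame always becomes a keyframe
            return True
        moved = math.hypot(pose.x - self.last_keyframe.x, pose.y - self.last_keyframe.y)
        turned = abs(angle_diff(pose.theta, self.last_keyframe.theta))
        if moved >= self.min_distance or turned >= self.min_angle:
            self.last_keyframe = pose
            return True
        return False


# Robot driving straight ahead, 0.1 m per frame.
selector = KeyframeSelector(min_distance=0.25)
trajectory = [Pose2D(0.1 * i, 0.0, 0.0) for i in range(10)]
print([i for i, p in enumerate(trajectory) if selector.is_keyframe(p)])
# -> [0, 3, 6, 9]
```

Because a stationary or slowly moving robot produces few keyframes, the amount of data sent to the cloud follows how much new ground the robot is actually covering rather than the camera frame rate.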

Together with our RoboEarth colleagues Dominique Hunziker, Dhananjay Sathe, and Mayank Singh, we have set up an inexpensive, lightweight robot that can perform full 3D mapping in real time by offloading heavy computation to the RoboEarth Cloud Engine (nickname: Rapyuta) (see our previous Robohub article). In the video below, Master's student Vlad Usenko shows how robots can sidestep the need for powerful, expensive onboard computers.

How it works

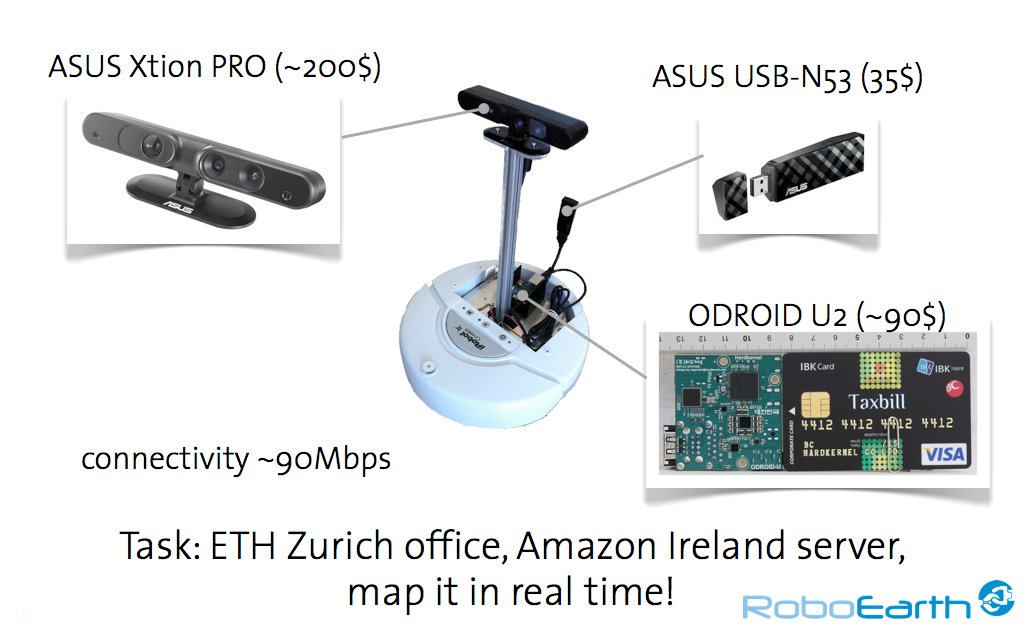

What actually happens here? As you can see from the video and the figure below, the robot is equipped with an RGBD sensor (ASUS Xtion PRO), an ARM-based single-board computer (ODROID-U2), and an off-the-shelf wireless dongle. The robot transmits RGB and depth images, both at QVGA resolution and 30 FPS, to Rapyuta using frame-by-frame compression (JPEG for RGB images, PNG for depth images). The single-board computer is used only for compression, communication, and low-level control of the robot; all heavy computation happens off-board. The optimized map shown at the end of the video was built by manually triggering the optimization process in the cloud after the demo (i.e., not in real time).

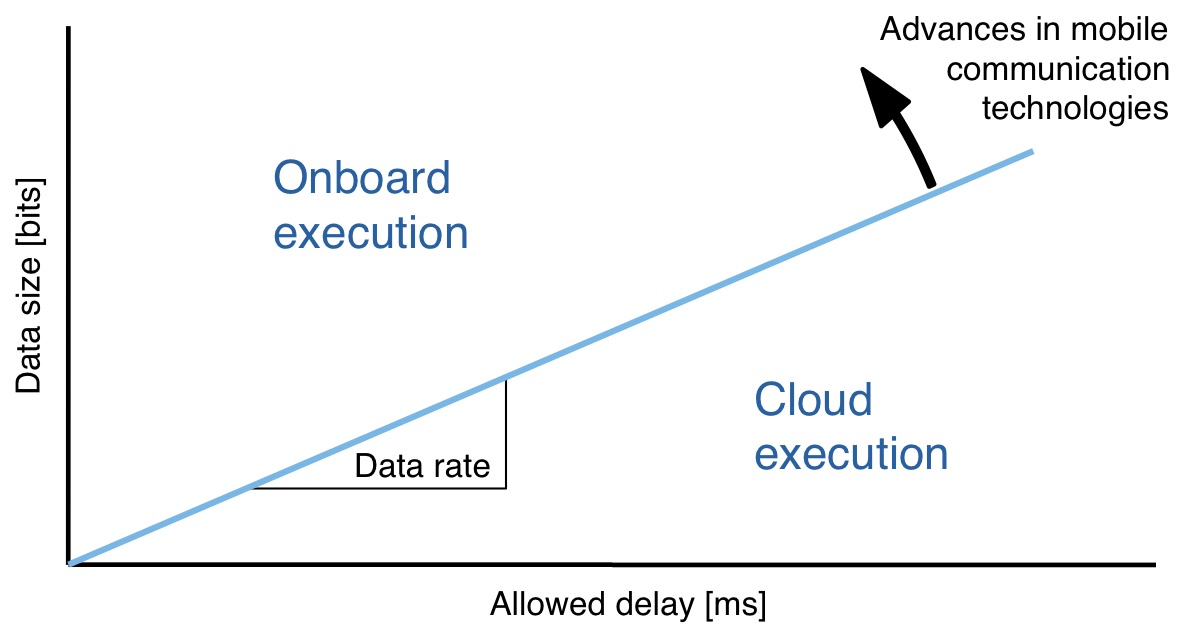
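A quick back-of-the-envelope calculation shows why the stream is compressed before transmission. The byte counts per pixel are assumptions, not figures from the demo: 3 bytes per pixel for 8-bit RGB and 2 bytes per pixel for 16-bit depth, a common depth encoding. The helper name `raw_stream_mbit_per_s` is hypothetical.

```python
# Raw (uncompressed) uplink bandwidth for two QVGA streams at 30 FPS.
# Assumed encodings: 8-bit RGB (3 bytes/pixel), 16-bit depth (2 bytes/pixel).
QVGA_WIDTH, QVGA_HEIGHT = 320, 240
FPS = 30


def raw_stream_mbit_per_s(width, height, bytes_per_pixel, fps):
    """Uncompressed bit rate of one image stream, in megabits per second."""
    return width * height * bytes_per_pixel * fps * 8 / 1e6


rgb = raw_stream_mbit_per_s(QVGA_WIDTH, QVGA_HEIGHT, 3, FPS)
depth = raw_stream_mbit_per_s(QVGA_WIDTH, QVGA_HEIGHT, 2, FPS)
print(f"RGB:   {rgb:.1f} Mbit/s")          # RGB:   55.3 Mbit/s
print(f"Depth: {depth:.1f} Mbit/s")        # Depth: 36.9 Mbit/s
print(f"Total: {rgb + depth:.1f} Mbit/s")  # Total: 92.2 Mbit/s
```

Roughly 92 Mbit/s of raw data is a lot to push over a shared wireless link, which is why per-frame JPEG and PNG compression, or sending only keyframes, matters so much for this setup.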

The main purpose of this demonstrator is to showcase that today’s wireless technologies allow robots to outsource computationally heavy data processing to the cloud. As shown in the figure below, and as I’ve laid out in an earlier article on Cloud Robotics, bandwidth is a key driver for cloud-based robot services and applications.

Most real-world mapping scenarios call for a slightly different split in workload: you would want to perform visual odometry locally and send only keyframes to Rapyuta for global optimization. The good news is that we already support this setup: Rapyuta provides the corresponding software packages to run on ODROID boards, along with the necessary cloud computing infrastructure.

What’s next?

This demonstration stops short of actually using the data returned from the cloud: a map is created and available in real time, but it is not actively used to control the robot. Using the map to control the robot, e.g., to improve exploration, is one logical extension.
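One standard way to use a map for exploration is frontier-based exploration: the robot repeatedly heads toward frontier cells, i.e., known-free cells that border unexplored space. The article does not say which exploration strategy would be used; the sketch below assumes a 2D occupancy grid with a hypothetical encoding (0 free, 1 occupied, -1 unknown) and shows only the frontier-detection step.

```python
# Hypothetical occupancy-grid encoding.
FREE, OCCUPIED, UNKNOWN = 0, 1, -1


def find_frontiers(grid):
    """Return (row, col) of every free cell with at least one unknown 4-neighbour."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break  # one unknown neighbour is enough
    return frontiers


grid = [
    [0, 0, -1],
    [0, 1, -1],
    [0, 0, 0],
]
print(find_frontiers(grid))  # [(0, 1), (2, 2)]
```

A planner would then pick one of these cells (for example the closest reachable one) as the next goal; that choice is left out here.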

Another natural next step would be to use the computational resources in the cloud for localizing in an existing map. Imagine a robot entering a new building and trying to get its bearings. Such localization in an existing map requires performing a search over the entire map – essentially a very large set of images and their features – to find the best match between what the robot is seeing now and a part of the map.
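The exhaustive search described above can be sketched as a nearest-neighbour lookup over per-keyframe descriptors. This is an illustrative stand-in, not RoboEarth's or Rapyuta's localization method: it assumes each map keyframe is summarized by a fixed-length global descriptor (for example a bag-of-visual-words histogram) and scores candidates with cosine similarity. The function names and the toy map are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two descriptors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def localize(query, map_descriptors):
    """Exhaustively compare the query against every map keyframe.

    map_descriptors: dict mapping keyframe id -> descriptor vector.
    Returns (best_keyframe_id, best_score); cost is linear in map size.
    """
    best_id, best_score = None, -1.0
    for keyframe_id, descriptor in map_descriptors.items():
        score = cosine_similarity(query, descriptor)
        if score > best_score:
            best_id, best_score = keyframe_id, score
    return best_id, best_score


# Toy map with three keyframes, each summarized by a 4-bin histogram.
toy_map = {"kf_0": [5, 0, 1, 0], "kf_1": [0, 3, 0, 4], "kf_2": [1, 1, 1, 1]}
best, score = localize([0, 2, 0, 3], toy_map)
print(best)  # kf_1
```

The cost of this brute-force search grows linearly with the number of keyframes, which is the kind of workload that scales more easily on cloud hardware than on a small onboard computer. A real system would also verify the best candidate geometrically before accepting it.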

But the cloud can be used to do much more interesting things than improve single robot navigation. Multiple robots can instantaneously share their maps, so applications like multi-robot mapping become much easier. This implies using the cloud as more than a pure computational resource – it becomes a medium to store, share, and collectively improve data. This is interesting for static scenes, but things get even more interesting for dynamic scenes, for example when multiple robots track dynamic objects in the map. With WIRE, some of my RoboEarth colleagues have developed algorithms to do just that. We are currently working on integrating this functionality with Rapyuta and the RoboEarth database and will make it available as a Rapyuta cloud service later this year.
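Once the relative pose between two robots' maps is known, merging them amounts to expressing one map in the other's coordinate frame. The sketch below is a simplified illustration under stated assumptions: maps are 2D point sets, the rigid transform between them (`b_to_a`) is already known, and `merge_maps` is a hypothetical helper, not part of Rapyuta. A real multi-robot system would also have to estimate that transform, remove duplicate points in overlapping areas, and jointly re-optimize the combined map.

```python
import math


def transform_points(points, tx, ty, theta):
    """Rotate 2D points by theta (radians) about the origin, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def merge_maps(map_a, map_b, b_to_a):
    """Return map_a plus map_b re-expressed in map_a's frame.

    b_to_a: (tx, ty, theta), the assumed-known pose of map B's origin in map A's frame.
    No deduplication or joint re-optimization is done here.
    """
    return map_a + transform_points(map_b, *b_to_a)


map_a = [(0.0, 0.0), (1.0, 0.0)]
map_b = [(1.0, 0.0)]  # one landmark at (1, 0) in robot B's map frame
merged = merge_maps(map_a, map_b, (2.0, 0.0, math.pi / 2))
print([(round(x, 6), round(y, 6)) for x, y in merged])
# -> [(0.0, 0.0), (1.0, 0.0), (2.0, 1.0)]
```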

Many of the key advantages of this Cloud Robotics approach to mapping will only become apparent when maps are brought together with the vast amounts of knowledge required to understand environments. Performing mapping in the cloud allows robots not only to create maps but also to understand them: by bringing computation close to the knowledge required to make sense of all of a robot’s sensor information, Cloud Robotics offers robots a very powerful way to understand the world around them. Working together, cloud computing frameworks like Rapyuta and online knowledge bases like RoboEarth can allow us to build robots that are both smart and inexpensive.

Additional information

For further information, see:

- Technical details and tutorial for this setup (available early July)

- Video of recent live Cloud Robotics Mapping demo at ROSCon 2013

- Cloud Robotics Workshop at IROS 2013 in November, Tokyo

- Homepage of the RoboEarth Cloud Engine (codename “Rapyuta”)

- RoboEarth homepage

Questions?

Feel free to post in the comments below.
