Robohub.org

People and Robots: UC’s new multidisciplinary CITRIS initiative wants humans in the loop

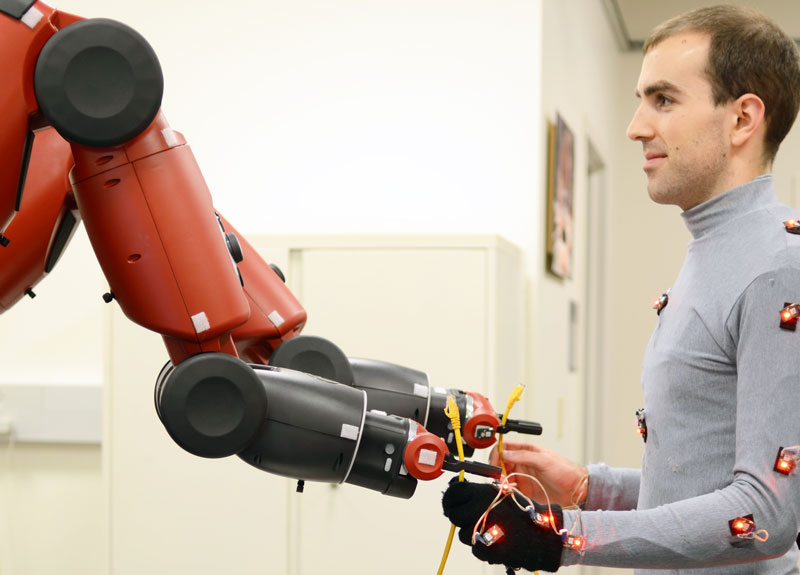

The Baxter robot hands off a cable to a human collaborator – an example of a co-robot in action. Photo credit: Aaron Bestick, UC Berkeley.

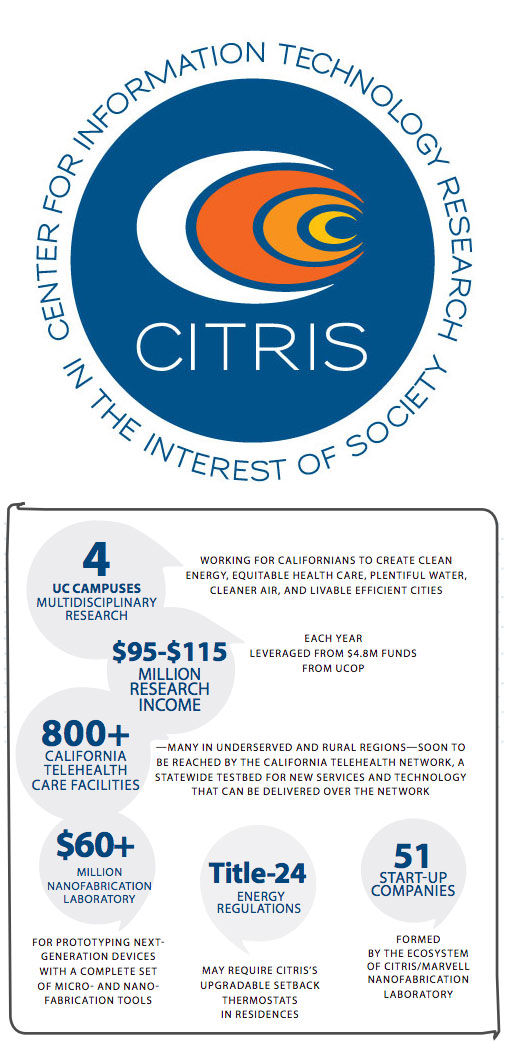

Since 2001, the University of California’s Center for Information Technology Research in the Interest of Society (CITRIS) has leveraged multi-disciplinary research to tackle large societal problems like IT for healthcare, and intelligent infrastructure for public safety, water management and environmental sustainability. Now CITRIS is launching a new initiative that will focus on robotics research that benefits humans and involves humans in the loop.

Sensitivity to human issues is a key aspect of the new robotics initiative, which has been created under the premise that for robotics to reach its potential, humans – and human needs – will have to be integrated into the picture. Ken Goldberg, one of the CITRIS faculty, explained in a phone interview that while there have been many exciting developments in robotics in recent years thanks to the confluence of a thriving research community, new software packages, open source systems, and the availability of low cost components, fearful public sentiment has also been growing in parallel.

“There has been a lot of press about the issue of jobs, loss of privacy, and the idea that as robots advance they will become threatening to humans,” he said. “We want to be able to focus on robotics applications that are beneficial to humans, and that have humans uppermost in mind. We firmly believe that humans are essential to the operation of robots – and that robots will be far more effective as tools that enhance humans rather than as something that replaces them.”

The program will draw from the expertise of over 60 faculty from across four UC campuses, covering the fields of control theory, optimization, computer vision, machine learning, statistics, law, philosophy and art. “It’s a very broad group,” said Goldberg. “We can be blinded when we are all thinking along similar lines and we all have similar assumptions and experiences. It’s helpful to bring people together from different backgrounds – that diversity often leads to insight.”

Adding robotics as a focus area may be an important step for CITRIS, but it is not a very large one given the background of some of the CITRIS faculty and emeritus directors. Ruzena Bajcsy, the founding Director of CITRIS, is known for her research in the fields of robotics, artificial intelligence and machine perception, but her credentials reach into neuroscience, applied mechanics and computer science as well. “She’s an expert in computer vision and perception, and she teaches and continues to do research on human-centered robots, which is one of the themes of this new initiative,” said Goldberg, who had Bajcsy as an undergrad advisor.

CITRIS was formed in 2001, when researchers within the UC system realized that the real opportunities lay not just in developing new and innovative technologies, but in applying them. It is one of four California Institutes for Science and Innovation that were created to leverage private and government resources and shorten the path to entrepreneurial investment. The CITRIS plan involves three main pillars: sensitivity to human issues; rigorous theory evaluated on standard benchmarks; and modular systems built on shared software toolkits. Goldberg’s interview (continued below) sheds light on how these pillars fit into the CITRIS model.

Excerpts from Ken Goldberg’s interview:

How does the CITRIS initiative define what is “robotic work” and what is “human work”?

In the past there was this idea that you put the robots in the cage in the assembly line and you had to keep humans away from them because they were doing something dangerous – like spot welding or machining. But with companies like Willow Garage and Baxter and others, we are starting to think about robots differently – as something that can work side by side with humans. So we have to design them differently, we have to think about the issues of safety, privacy and security. We are also looking at the potential that humans could be involved in teaching robots by going through demonstrations and having robots learn by observation. With robots in the home, for example, one project that we’re interested in is decluttering.

We’ve gotten used to the idea of robots doing dangerous jobs, but in that capacity they are very separate from us. Now that we want collaborative robots – robots that can work alongside humans – we seem to be OK giving them the boring jobs (the jobs that are “robotic”, to use the word as it is currently applied). But by definition those jobs are not creative, communicative or social. If we are working alongside robots, and even teaching them, will we be looking for robots that are able to be more than just “robotic”?

I think that creativity, and to a large degree, sociability, is something that humans are going to have a great advantage in (as we should), and I hope we continue to have the edge in those areas. But I think we can develop robots that are more responsive. Maybe they won’t be able to express or innovate themselves, but they can start to observe nuances of manipulation by observing and measuring human performance, for example. Essentially, we want them to assist us.

One example would be surgery, where the surgeons are performing a complex operation. Certain aspects of the operation are monotonous and tedious, but there are also many aspects of the surgery that require extremely specialized skills. So the key is to balance those two things out. You don’t want the robot to do the things that require skill, but you do want it to be able to perform something like suturing, or debridement – tasks that take up valuable time in surgery.

It’s the same analogy with what’s happening with driving. There’s been a lot of talk about the fully autonomous car, but I think what we are going to see is much more practical – the idea of driver assist. For example, when I’m driving to work, there’s a certain period when I’m on the freeway and it doesn’t require as much attention. Or when I’m stuck in traffic, it’s very tedious to be inching along. These things require attention, but really we could have an automated system do that.

One of the key pillars of the CITRIS initiative is modular systems built on shared software toolkits. Given the rapid advances in robotics over the past five years, where is that headed?

The major development has been with ROS – the Robot Operating System – which has been a tremendous benefit for the field. When I was a grad student, and for many years thereafter, when you wanted to build a robot system you started from scratch. Now you can go and get libraries and pull lots of components.

I think what’s exciting is the question of how to take that to the next level … how to have systems that use a software-as-a-service (SaaS) model where the entire software is maintained in the cloud. You could send out data to be processed in the cloud and you would get back results, such as motion plans or grasp plans. And just like with Google Docs, the benefit is that all the software maintenance, installation, and updates are done in the cloud so that the end user or the robot that is operating in the field doesn’t have to worry about all that, and can essentially just access the latest software.

Consolidating that is a major benefit and would certainly fast track things for the end user. But it can be a battle to find a group willing to undertake this kind of consolidation and support it. Are you seeing more interest from large companies in supporting software as a service for robotics?

A number of programs have had company and industry support for open source software at various levels of development. Because we are a public institution, Berkeley has always championed the idea of open source – of putting code out there and making it publicly available. We’d like to continue in that direction by having software that we can support and make available as packages. One thing that industry seems interested in is that, as a certain piece of software builds momentum, a startup might form around it to take it to the next level. We want to be open to that and put together software and data resources as part of the new initiative. Pieter Abbeel is going to be leading that aspect.

Many more startups are leveraging ROS now, but at the ultimate commercializing level there is still hesitancy from the larger companies, and sometimes it means that ROS is being used as a development environment rather than being seen as the final endpoint because of issues around maintaining its commercial viability. Is open source more likely to be part of the commercialization process if more public institutions are supporting it?

I think an example of this is the AMP lab at UC Berkeley – the Algorithms, Machines and People lab. They have been putting out software for systems in machine learning and they’ve been extremely successful. The Spark package has millions of users now and has been incorporated into commercial products, and they’ve created a spinoff – Databricks – that is taking that to a new level. So I think that a first step is to put out software that people can use. And then entrepreneurs and investors can see that there is a demand for it – that’s what takes it to the next step where it can be commercialized.

This example points out that robotics isn’t developing in isolation and is able to leverage so many of the things that have been able to roll out in software and mobile technologies. This brings me to another area that you’ve outlined for CITRIS: rigorous theory evaluated on standard benchmarks. I think that’s critically important. What’s leading towards this, and how will you follow through?

Let me give you an example. One of my areas of research is grasping, and I feel that this field has never had a very solid benchmark of objects to test grasping on. There are some datasets … for example, the field of machine vision has been doing this very effectively for many years, and there are beautiful datasets for testing machine vision algorithms. But grasping hasn’t had that yet, so this is something we are pursuing. For example, Pieter Abbeel is working with colleagues at CMU and Yale to develop a kit of parts that are common to home and medical environments, and he is going to make this available as a suitcase or box of parts. The goal is to get funding so that he can make it available at very low cost and send it out to research groups around the world so that they could test their algorithms on common parts and compare them.

We are excited because now we have a critical mass of faculty and students who can use these benchmarks and begin producing their own benchmarks.

Another example is the Amazon Picking Challenge that will be at ICRA this year. Interestingly, one of Pieter’s students did a very detailed scan of each of the objects from the picking challenge, using multiple cameras with 3D sensors on a nice white background, and made them available to the community. That kind of data set is very difficult for any individual lab to produce on their own. So our idea was: we will do that scanning and share it with everybody. And our hope is that it could be used again as a nice benchmark set.

Unfortunately those objects are not particularly interesting from a grasping perspective. In fact we were just lamenting that they are very interesting from a computer vision perspective, but they are all very similar in shape so they don’t really give us a lot to work with in terms of grasp algorithms.

They are being driven by a particular commercial area – logistics and warehousing – aren’t these a set of the most common objects in that environment?

They are common in that environment, but boxes and books are pretty rectilinear, and from a grasping perspective, we are interested in things that are a little more challenging.

So in terms of benchmarks you’d be calling for something like the agriculture industry – where the variety of shapes and the delicacy of the objects creates a lot of interesting challenges – to sponsor a grasping challenge?

Yes, that’s a great example. Or a company, like an automotive or electronics assembly company, could present us with a really complex set of objects that need to be grasped and manipulated. That would be really interesting. A lot of companies are nervous about doing that because for proprietary reasons they don’t want to share their objects, and so this is where a university lab can help out because we can actually do the dirty work of collecting all that. We’d like to work with industry and we see this as filling a gap that industry hasn’t set itself to do yet.

You have mentioned that when robotics research is confined to very small labs, the resources are simply not there to develop standardized benchmarks and problem sets. How are virtualized environments extending the reach of these benchmarks to a greater section of the research community?

There’s a need for standardized simulation environments and we have seen a lot of progress there in the last few years. There is indeed a bigger community of users sharing tools that can start to become more widely accepted. Again, the key advantage is that you can start comparing results across groups and labs. The key is having a diversity of ideas and perspectives brought together. I’m a firm believer that we can be blinded to insights because we are all thinking along similar lines and we all have similar assumptions and experiences. So it’s helpful to bring people together from different backgrounds and that diversity often leads to an insight. We’ve always assumed things are one way, and this encourages us to try things another way. That’s the benefit. There are so many researchers working in these areas. To some degree everybody is working in their own labs, but we want people to share, interact and communicate between the labs and students. That’s where I think we have enormous possibility.
