Robohub.org

Amazon challenges robotics’ hot topic: Perception

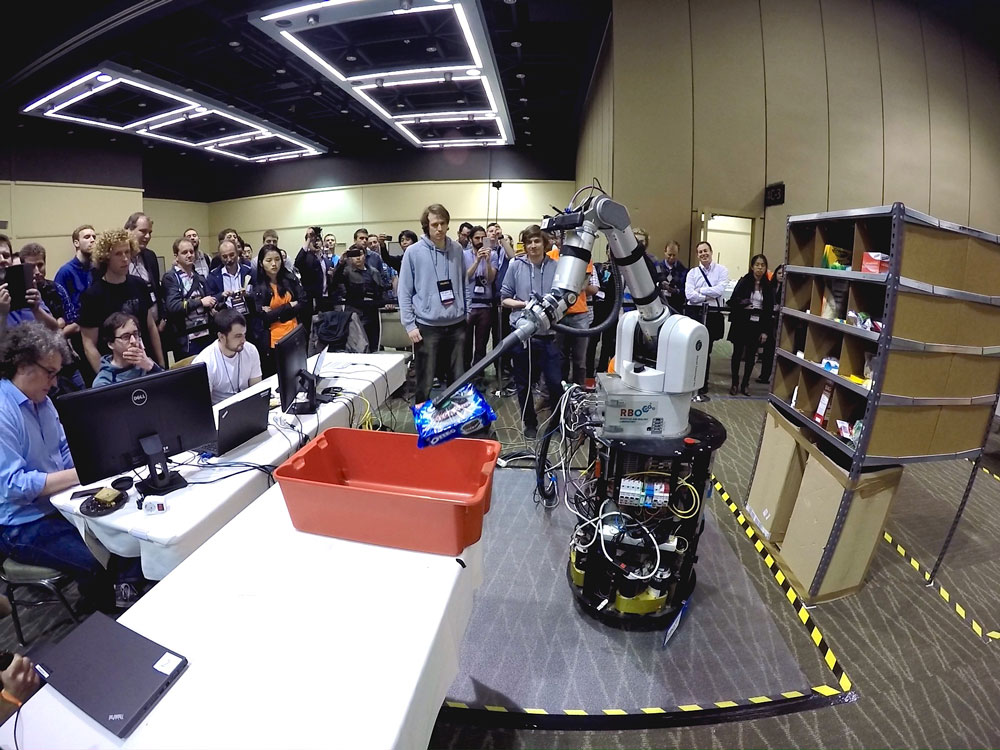

RBO Team from TU Berlin wins the Amazon Picking Challenge at ICRA 2015. Photo Credit: RBO.

Capturing and processing camera and sensor data, then recognizing various shapes to determine a set of robotic actions, is conceptually easy but hard to do in practice. Amazon challenged the industry to perform a selecting and picking task robotically, and 28 teams from around the world rose to the challenge.

Perception isn’t just about cameras and sensors. Software has to convert the data and infer what the robot “sees”. In the Amazon Picking Challenge, held last week at the IEEE International Conference on Robotics & Automation (ICRA), each team’s robot had to pick items from a shopping list of consumer goods of varying shapes and sizes (pencils, toys, tennis balls, cookies and cereal boxes) that were haphazardly stored on shelves, and then place the selected items in a bin. Teams could use any robot, mobile or not, and any arm and end-of-arm grasping tool or tools to accomplish the task.

It’s tricky for robots to use sensors to identify and locate objects on a shelf or in a storage area, especially when plastic packaging can confuse those sensors. Rodney Brooks, of iRobot, MIT and Rethink Robotics fame, often speaks of an industry-wide aspirational goal regarding perception in robotics: “If we were only able to provide the visual capabilities of a 2-year-old child, robots would quickly get a lot better.” That is what this contest is all about.

Software has to first identify the item to pick and then figure out the best way to grab it and move it out of the storage area. Amazon, through its acquisition of Kiva Systems, has mastered bringing goods to the picker/packer and now wants to automate the remaining process of picking the correct goods from the shelves and placing them in the packing box, hence the Amazon Picking Challenge.

The top three finishers were Technical University (TU) Berlin in 1st place with 148 points, MIT in 2nd place with 88 points, and a joint team from Oakland University and Dataspeed in 3rd place with only 35 points. Teams were scored on how many items were correctly selected, picked and placed.

Many commercial companies with proprietary software for just this type of application chose not to enter because the terms of the challenge required that the software be open sourced. These included Tennessee-based Universal Robotics, with its Neocortex Vision System, and Silicon Valley startup Fetch Robotics, which was premiering its new Fetch and Freight system at the same ICRA conference.

Team RBO from TU Berlin wrote its own vision system software and will soon be working on a paper on the subject. The team used a Barrett WAM arm because it was the most flexible device for this task among the arms available in their lab. As the end effector, they used a vacuum cleaner tool fitted with a suction cup, with a vacuum cleaner powering the suction. For a base, they decided they needed to go mobile and used an old Nomadic Technologies platform, which they upgraded to fit the needs of the contest. Nomadic no longer exists; it was acquired by 3Com in 2000. TU’s photo at right shows the robot’s camera view and the random placement of items in cubby holes. Watch a video of their winning run here:

Noriko Takiguchi, a Japanese reporter for RoboNews.net who was at the contest, observed the TU team and said that their approach combined torque-based and position control of the arm and the mobile base. As a result, they had good torque control, which gave them flexibility in choosing where to place the suction cup and how much suction to apply.

Team RBO received a 1st place prize of $20,000 plus travel costs for the equipment and team members.
