Robohub.org

Creating expressive robot swarms

As robot swarms leave the lab and enter our daily lives, it is important that we find ways to communicate effectively with them, especially when they contain a large number of robots. In our lab, we are thinking of ways to make swarms that are easy and intuitive for people to interact with. By making robots expressive, we will be able to understand their state and make decisions accordingly. To that end, we have created a system where humans can build a canvas with robots and create shapes with up to 300 real robots and up to 1000 simulated robots.

Painting with robots

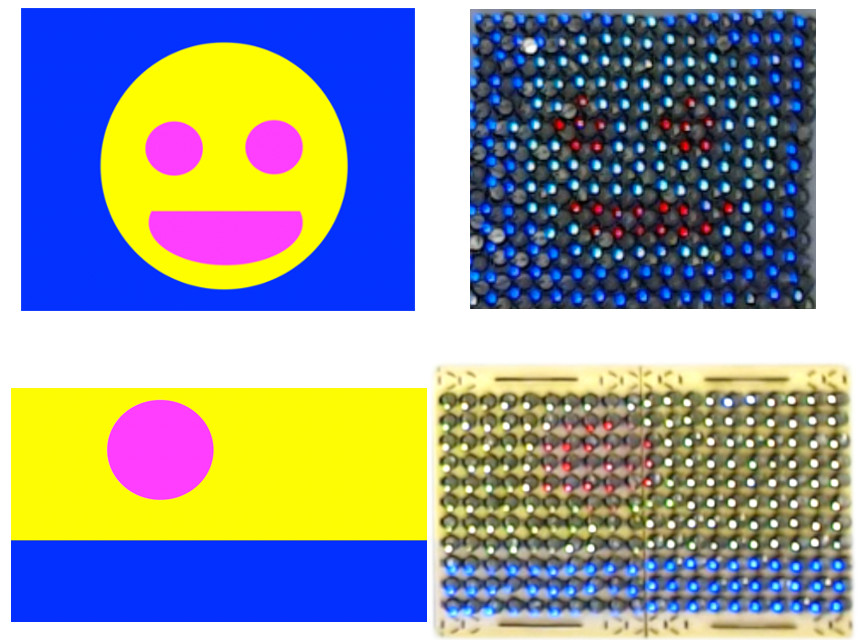

In a system we created called Robotic Canvas, we project an image onto a robot swarm via an overhead projector, and the robots replicate the image with their LEDs by sensing the colour of the projected light. Each robot, therefore, acts as a pixel on the canvas. Humans can then interact with the robot pixels by copying/pasting pixels (LED colour) onto different parts of the canvas, erasing them (turning off LEDs), changing their colour, or saving/retrieving paintings (by saving/retrieving the state of the LED). If a GIF or a video is projected onto the robots, the robots appear to be showing a video.

The robots are also decentralised, which means they have no central controller telling them what to do, avoiding a single point of failure. If one robot fails, the system carries on, and humans can still interact and create paintings with the rest of the robots. Without a central controller, the robots have to talk to their neighbours and sense their environment to obtain information on how to act next. Consequently, robot pixels use only local interactions (i.e. communications with their neighbouring robots) and environmental interactions (i.e. sensing the colour of light and shadows) to tell which interaction is taking place. Here are some images showing the robots recreating images projected onto them.

Here is a performance we did with the robots. This is a story of day and night: the sun sets on the ocean, permitting night to arrive. Then, stars begin to show in the night sky, and clouds form as well. Finally, the sun rises again.

Creating shapes with robots

In Robotic Canvas, the robots were stationary, and they needed to fill out the whole shape to be able to represent the image projected onto them properly. However, we were able to reduce the number of robots used by enabling them to move and aggregate around edges of images projected onto them. This way, a smaller number of robots can be used to represent an image while still preserving clear image representation. We were also able to produce videos with the robots by projecting a video onto them. We used up to 300 real robots (as can be seen from the line, circle and arrow shapes below) and up to 1000 simulated robots (as can be seen in the letters F, T and the blinking eye video).

The robots again aggregate around edges using only local and environmental interactions. Robots share with their neighbours the light colour they sense. They then combine their neighbours’ opinions with their own to reach a final decision on what colour is being projected. Robots move randomly as long as their opinions match their neighbours’ opinions. If there is a high conflict of opinions, the robots are standing on an edge, and they stop moving. They then broadcast a message to their neighbours to aggregate around them to represent the edge. We can increase the distance within which neighbours respond to the edge robots, giving us the ability to have thicker or thinner lines of robots on the edges.
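To make the decision rule above more concrete, here is a minimal Python sketch of what a single robot might compute on each step. It is an illustration under our own assumptions, not the code running on the robots: the function names, the majority vote used to combine opinions, the conflict threshold of 0.4 and the `response_distance` parameter are all hypothetical choices made for this example.

```python
from collections import Counter
from enum import Enum


class Action(Enum):
    RANDOM_WALK = "random_walk"      # opinions agree: keep exploring
    STOP_AND_CALL = "stop_and_call"  # on an edge: stop and broadcast an edge message
    JOIN_EDGE = "join_edge"          # heard an edge robot close enough: aggregate


def combined_colour(own, neighbours):
    """Combine the robot's own reading with its neighbours' by majority vote
    (an assumed combination rule)."""
    votes = Counter([own, *neighbours])
    return votes.most_common(1)[0][0]


def conflict(own, neighbours):
    """Fraction of neighbours whose sensed colour differs from the robot's own."""
    if not neighbours:
        return 0.0
    return sum(colour != own for colour in neighbours) / len(neighbours)


def decide(own, neighbours, nearest_edge_call=None,
           conflict_threshold=0.4, response_distance=1.0):
    """Pick the next action from local information only.

    nearest_edge_call: distance to the closest robot broadcasting an edge
    message, or None if no such message was heard (hypothetical input).
    response_distance: how far away a robot still responds to an edge
    message; larger values give thicker lines of robots on the edge.
    """
    if conflict(own, neighbours) >= conflict_threshold:
        return Action.STOP_AND_CALL
    if nearest_edge_call is not None and nearest_edge_call <= response_distance:
        return Action.JOIN_EDGE
    return Action.RANDOM_WALK
```

For example, `decide("white", ["black", "black", "white"])` makes the robot stop and call its neighbours, because two of its three neighbours disagree with it, while a robot surrounded by matching opinions keeps moving randomly unless it hears an edge message within `response_distance`.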

The robots do not need to have the image the user wishes for them to represent stored in their memory. That gives the system an important feature: adaptability. The user can change the image projected at any time and the robots will re-configure themselves to represent the new image.

The inspiration behind robot expressivity

Our research was inspired by the fact that robot swarms and human-swarm interaction are hot topics in robotics today. Finding ways to interact with swarm robots that neither break their decentralised nature by introducing a central controller, nor require humans to interact with each robot separately, is an interesting and challenging problem; with potentially thousands of robots, updating each one individually would be infeasible. Therefore, we created the Robotic Canvas to experiment with methods by which a user can relay messages to, and influence the behaviour of, hundreds of robots without needing to communicate with each separately. We researched how to do this using only environmental and/or local interactions. Furthermore, we decided to add mobility to the robots, which allows a smaller number of robots to represent an image.

Challenges along the way

While creating paintings and shapes looks interesting and fun, the road to creating this system was not without obstacles! Going from working in simulation to working with real-life robots proved challenging. This was due to motion noise in the real robots and errors in their sensor readings. Using a circular arena helped prevent robots from getting stuck at the boundaries of the arena. As for sensing errors, filtering noisy readings before broadcasting opinions to neighbours helped reduce errors in edge detection.
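As an illustration of what filtering noisy readings could look like, the sketch below smooths a robot's colour readings with a sliding-window majority vote before the result is shared with neighbours. This particular filter, the `ReadingFilter` name and the window size of 5 are assumptions made for the example, not a description of the filter used on the real robots.

```python
from collections import Counter, deque


class ReadingFilter:
    """Sliding-window majority vote over recent colour readings (assumed filter)."""

    def __init__(self, window=5):
        # Only the most recent `window` readings are kept.
        self.readings = deque(maxlen=window)

    def update(self, colour):
        """Add a new raw reading and return the most common recent colour."""
        self.readings.append(colour)
        return Counter(self.readings).most_common(1)[0][0]


# A single spurious "black" reading does not change the reported colour.
colour_filter = ReadingFilter(window=5)
raw = ["white", "white", "black", "white", "white"]
smoothed = [colour_filter.update(reading) for reading in raw]
```

The trade-off in a filter like this is responsiveness: a larger window suppresses more sensing errors, but the robot also takes longer to notice a genuine change in the projected colour.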

Limitations of our system

The resolution of the image is limited by the size of the robots, similar to how pixels work: the smaller the robots (and their LEDs), the clearer the picture representation will be. Therefore, there is a limit on how clearly very complex images can be represented with the robots currently used (the Kilobots).

Beyond painting

The emergent shape-formation behaviour of robot swarms has many potential applications in the real world. Its expressive nature serves it well as an artistic and interactive visual display. Also, the robots could be used as functional materials which respond to light projections by depositing themselves onto image edges, which could have applications in architecture and electronics. The system could also have applications in ocean clean-ups, where robot swarms could detect and surround pollutants in oceans such as oil spills.
