Robohub.org

DARPA’s HAPTIX project hopes to provide prosthetic hands with sense of touch

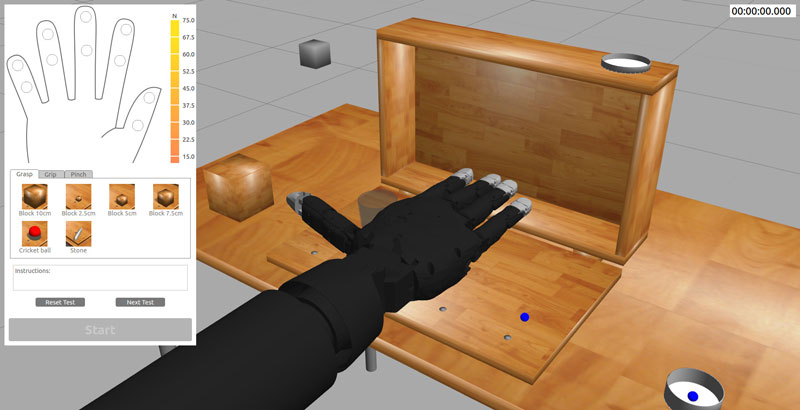

To help HAPTIX performers conduct their research quickly and effectively, DARPA is providing each team with open source simulation software in which to test their designs. The software includes a variant of the DARPA Robotics Challenge Simulator from the June 2013 Virtual Robotics Challenge, the first stage of the DARPA Robotics Challenge. Source: DARPA.

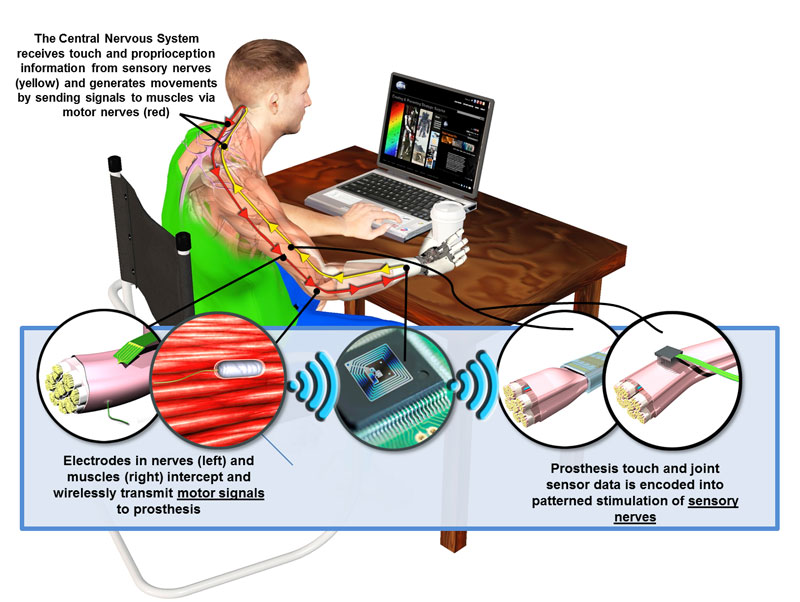

The Gazebo team has been hard at work setting up a simulation environment for the Hand Proprioception and Touch Interfaces (HAPTIX) program, run by the Defense Advanced Research Projects Agency (DARPA). The goal of the HAPTIX program is to provide amputees with prosthetic limb systems that feel and function like natural limbs, and to develop next-generation sensorimotor interfaces to drive and receive rich sensory content from these limbs. Managed by Dr. Doug Weber, HAPTIX is being run out of DARPA’s Biological Technologies Office (BTO).

As the organization maintaining Gazebo, the Open Source Robotics Foundation (OSRF) has been tasked with extending Gazebo to simulate prosthetic hands and test environments, and with developing both graphical and programming interfaces to the hands. OSRF is officially releasing a new version of Gazebo for use by HAPTIX participants. Highlights of the new release include support for the OptiTrack motion capture system and the NVIDIA 3D Vision system; numerous teleoperation options, including the Razer Hydra, SpaceNavigator, mouse, mixer board and keyboard; a high-dexterity prosthetic arm; and programmatic control of the simulated arm from Linux, Windows and MATLAB. More information and tutorials are available at the Gazebo website.

“Our track record of success in simulation as part of the DARPA Robotics Challenge makes OSRF a natural partner for the HAPTIX program,” according to John Hsu, Chief Scientist at Open Source Robotics Foundation. “Simulation of prosthetic hands and the accompanying GUI will significantly enhance the HAPTIX program’s ability to help restore more natural functionality to wounded service members.”

Gazebo is an open source simulator that makes it possible to rapidly test algorithms, design robots, and perform regression testing using realistic scenarios. Gazebo provides users with a robust physics engine, high-quality graphics, and convenient programmatic and graphical interfaces. Gazebo was the simulation environment for the VRC, the Virtual Robotics Challenge stage of the DARPA Robotics Challenge.

DARPA’s Hand Proprioception and Touch Interfaces (HAPTIX) program aims to develop fully implantable, modular and reconfigurable neural-interface systems that would enable intuitive, dexterous control of advanced upper-limb prosthetic devices. Source: DARPA.

This project also marks the first time Windows and MATLAB users can interact with Gazebo, thanks to our new cross-platform transport library. For now, Windows and MATLAB support is limited to the HAPTIX project; however, plans are in motion to bring the entire Gazebo package to Windows.

Teams participating in HAPTIX will have access to a customized version of Gazebo that includes the Johns Hopkins University Applied Physics Laboratory Modular Prosthetic Limb (MPL), developed under the DARPA Revolutionizing Prosthetics program, as well as representative physical therapy objects used in clinical research environments.

More details on HAPTIX can be found in the DARPA announcement.
