Robohub.org

David Robert on “Do robots need heads?”

As a robot animator I can attest to the fact that robots don’t “need” heads to be treated as social entities. Research has shown that people will befriend a stick as long as it moves properly [1].

We have a long-standing habit of anthropomorphizing things that aren’t human by attributing to them human-level personality traits or internal motivations based on cognitive-affective architectures that just aren’t there. Animators have relied on the audience’s willingness to suspend disbelief and, in essence, co-animate things into existence: from a sack of flour to a magic broom. It’s possible to incorporate the user’s willingness to bring a robot to life by appropriately setting expectations and being acutely aware of how the context of interaction affects possible outcomes.

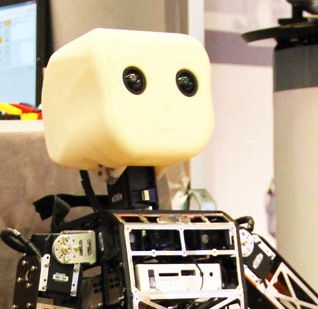

In human lifeforms, a head usually circumscribes a face, whereas in a robot a face can be placed anywhere. In robot heads with many degrees of freedom (DOF), the facial muscles are wonderfully complex but can be challenging to orchestrate with sufficient timing precision. If your robot design supports expression through careful control of the quality of its motion, a head isn’t necessary to communicate essential non-verbal cues. As long as you provide a means of revealing the robot’s internal state, a head simply isn’t needed. A robot’s intentions can be conveyed through expressive motion and sound regardless of its form or body configuration.

[1] Harris, J., & Sharlin, E. (2011, July). Exploring the affect of abstract motion in social human-robot interaction. In RO-MAN, 2011 IEEE (pp. 441-448). IEEE.
