Robohub.org

Engineers build brain-controlled music player

Imagine if playing music were as simple as looking at your laptop screen. Now it is, thanks to Kenneth Camilleri and his team of researchers from the Department of Systems and Control Engineering and the Centre for Biomedical Cybernetics at the University of Malta, who have developed a music player that can be controlled by the human brain.

Camilleri and his team have been studying brain responses for ten years. Now they have found one that is optimal for controlling a music player using eye movements. The system was originally developed to improve the quality of life of individuals with severely impaired motor abilities, such as those with motor neuron disease or cerebral palsy.

The technology works by reading two key features of the user’s nervous system: the nerves that trigger muscular movement in the eyes and the way that the brain processes vision. The user can control the music player simply by looking at a series of flickering boxes on a computer screen. Each box flickers at a certain frequency, and as the user looks at one, their brain activity synchronizes to the same rate. This brain pattern reading system, developed by Rosanne Zerafa, relies on Steady State Visually Evoked Potentials (SSVEPs).

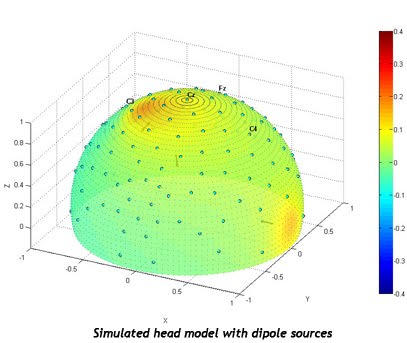

Electrical signals sent by the brain are then picked up by a series of electrodes placed at specific locations on the user’s scalp. This process, known as electroencephalography (EEG), records the brain responses and converts the brain activity into a series of computer commands.
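The article does not describe the team's signal-processing method, but the idea of matching brain activity to a flicker rate can be illustrated with a minimal Python sketch. It assumes a single EEG channel, a hypothetical 256 Hz sampling rate and four hypothetical flicker frequencies, and it simply picks the candidate frequency that carries the most signal power. The function names and the synthetic test signal are illustrative assumptions, not part of the Malta system.

```python
import math

def band_power(samples, sample_rate, freq):
    """Power of `samples` at `freq` Hz, computed as a single-bin Fourier sum."""
    re = 0.0
    im = 0.0
    for n, x in enumerate(samples):
        angle = 2.0 * math.pi * freq * n / sample_rate
        re += x * math.cos(angle)
        im -= x * math.sin(angle)
    return (re * re + im * im) / len(samples)

def detect_flicker(samples, sample_rate, candidate_freqs):
    """Return the candidate flicker frequency with the highest power."""
    return max(candidate_freqs,
               key=lambda f: band_power(samples, sample_rate, f))

# Assumed values: the article gives no sampling rate or flicker frequencies.
SAMPLE_RATE = 256                      # samples per second (hypothetical)
CANDIDATES = [8.0, 10.0, 12.0, 15.0]   # one per on-screen box (hypothetical)

# Synthetic stand-in for two seconds of EEG: a 10 Hz response to the
# attended box plus a weaker, unrelated 6 Hz component.
signal = [
    math.sin(2.0 * math.pi * 10.0 * n / SAMPLE_RATE)
    + 0.3 * math.sin(2.0 * math.pi * 6.0 * n / SAMPLE_RATE)
    for n in range(2 * SAMPLE_RATE)
]

detected = detect_flicker(signal, SAMPLE_RATE, CANDIDATES)
print(detected)
```

Real recordings are far noisier than this synthetic signal, so a practical system would likely combine several electrodes and more robust statistics than a single power comparison.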

As the user looks at the boxes on the screen, the computer program is able to figure out the commands, allowing the music player to be controlled without the need for any other physical movement. To adjust the volume or change the song, the user just has to look at the corresponding box. The command takes effect in just seconds.
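One way to picture the step from a detected flicker rate to a player command is a simple lookup with a confidence check. The sketch below is an assumption for illustration only: the frequency-to-command table, the command names and the `min_ratio` threshold are all hypothetical, and the article does not say how the actual system decides.

```python
# Hypothetical mapping from flicker frequency (Hz) to a player command.
COMMANDS = {
    8.0: "volume_down",
    10.0: "volume_up",
    12.0: "previous_song",
    15.0: "next_song",
}

def select_command(powers, min_ratio=2.0):
    """Pick the command for the strongest frequency in `powers`.

    `powers` maps each candidate frequency to its measured power.
    Returns None when the strongest frequency is less than `min_ratio`
    times the runner-up, so no command fires while the user looks away.
    """
    ranked = sorted(powers.items(), key=lambda item: item[1], reverse=True)
    best_freq, best_power = ranked[0]
    runner_up_power = ranked[1][1]
    if runner_up_power > 0 and best_power / runner_up_power < min_ratio:
        return None
    return COMMANDS.get(best_freq)

clear = select_command({8.0: 1.0, 10.0: 9.0, 12.0: 1.5, 15.0: 0.5})
ambiguous = select_command({8.0: 5.0, 10.0: 6.0, 12.0: 1.0, 15.0: 1.0})
print(clear, ambiguous)
```

Rejecting ambiguous readings trades a little speed for fewer accidental commands, which matters when the user cannot easily undo a mistake.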

For people who have become paralyzed due to a spinal injury, the normal flow of brain signals through the spine and to muscles is disrupted. However, the cranial nerves are separate and link directly from the brain to certain areas of the body, bypassing the spine altogether. This particular brain-computer interface exploits one of these: the oculomotor nerve, which is responsible for eye movements. This means that even an individual with complete body paralysis can still move their eyes over images on a screen.

This cutting-edge brain-computer interface system could pave the way for the development of similar user interfaces for tablets and smartphones. The concept could also be adapted for assisted living applications, for example.

The BCI system was presented at the 6th International IEEE/EMBS Neural Engineering Conference in San Diego, California by team member Dr. Owen Falzon.
