Robohub.org

Grasping unknown objects

This post is part of our ongoing efforts to make the latest papers in robotics accessible to a general audience.

To manipulate objects, robots often need to estimate each object’s position and orientation in space, known as its pose. The robot will behave differently if it’s grasping a glass that is standing up or one that has been tipped over. On the other hand, it shouldn’t make a difference if the robot is gripping two different glasses with similar poses. The challenge is to have robots learn how to grasp new objects based on previous experience.

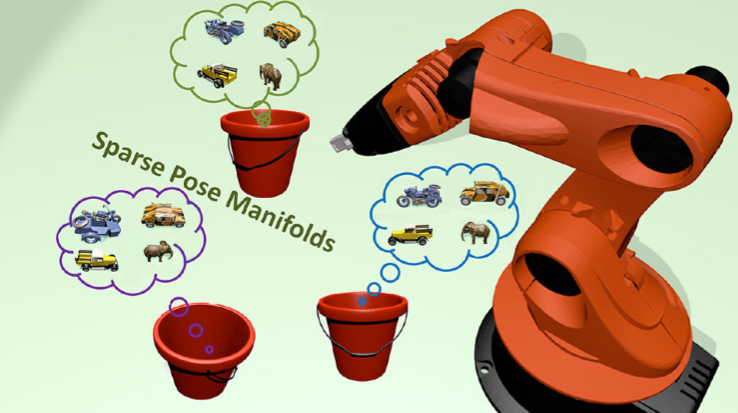

To this end, the latest paper in Autonomous Robots proposes a Sparse Pose Manifolds (SPM) method. As shown in the figure above, different objects viewed from the same perspective should share identical poses. All the objects facing right are in the same “pose-bucket”, which is different from the bucket for objects facing left, or forward. For each pose, the robot knows how to behave to guide the gripper to grasp the object. To grip an unknown object, the robot estimates what “bucket” the object falls into.
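
To make the “pose-bucket” idea concrete, here is a minimal sketch in Python. It is not the SPM method from the paper: it swaps in a plain nearest-prototype lookup, and the bucket names, three-number descriptors, and grasp approaches are all made-up values for illustration. It only shows the general flow described above, where an unknown object is assigned to a bucket and the bucket determines how the gripper approaches.

```python
import math

# Hypothetical pose buckets (illustrative values, not from the paper).
# Each bucket has a prototype appearance descriptor and a grasp approach.
POSE_BUCKETS = {
    "facing_left": {"prototype": (1.0, 0.0, 0.0), "approach": "from_right"},
    "facing_right": {"prototype": (0.0, 1.0, 0.0), "approach": "from_left"},
    "facing_forward": {"prototype": (0.0, 0.0, 1.0), "approach": "from_above"},
}


def estimate_pose_bucket(descriptor):
    """Return the label of the bucket whose prototype is closest to the descriptor."""
    return min(
        POSE_BUCKETS,
        key=lambda label: math.dist(descriptor, POSE_BUCKETS[label]["prototype"]),
    )


def plan_grasp(descriptor):
    """Pick a bucket for the object, then look up that bucket's grasp approach."""
    label = estimate_pose_bucket(descriptor)
    return label, POSE_BUCKETS[label]["approach"]


# Two different objects seen in similar poses (assumed descriptors).
mug = (0.1, 0.9, 0.2)
bottle = (0.2, 0.8, 0.1)

print(plan_grasp(mug))
print(plan_grasp(bottle))
```

In this toy setup the mug and the bottle land in the same bucket, so the robot would approach both the same way even though they are different objects. A real system would have to compute its descriptors from camera images, which is the hard part this sketch leaves out.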

The videos below show how this method can efficiently guide a robotic gripper to grasp an unknown object and illustrate the performance of the pose estimation module.
