Robohub.org

Self-supervised learning of visual appearance solves fundamental problems of optical flow

Flying insects as inspiration to AI for small drones

How do honeybees land on flowers or avoid obstacles? One would expect such questions to be mostly of interest to biologists. However, the rise of small electronics and robotic systems has also made them relevant to robotics and Artificial Intelligence (AI). For example, small flying robots are extremely restricted in terms of the sensors and processing that they can carry onboard. If these robots are to be as autonomous as the much larger self-driving cars, they will have to use an extremely efficient type of artificial intelligence – similar to the highly developed intelligence possessed by flying insects.

Optical flow

One of the main tricks up the insect’s sleeve is the extensive use of ‘optical flow’: the way in which objects move in their view. Insects use it to land on flowers and to avoid obstacles or predators, and they rely on surprisingly simple and elegant optical flow strategies to tackle these complex tasks. For example, when landing, honeybees keep the optical flow divergence (how quickly things get bigger in view) constant while descending. Since the divergence is proportional to the ratio of descent speed to height, keeping it constant means that the bee slows down in proportion to its remaining height. By following this simple rule, honeybees automatically make smooth, soft landings.
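
The constant-divergence rule can be sketched numerically. This minimal Python sketch assumes that the divergence is defined as D = v/h (descent speed over height, one common convention) and that the descent speed can be set directly, which neither a bee nor a drone can really do. The function name `constant_divergence_descent` is hypothetical. Holding D fixed gives dh/dt = -D·h, so the height decays exponentially and the speed shrinks along with it:

```python
import math

def constant_divergence_descent(h0, divergence, dt=0.01, t_end=5.0):
    """Toy model: descend while holding the divergence D = v / h fixed.

    Assumption: the descent speed v can be commanded directly and instantly
    (an idealisation; a real flyer controls thrust, not speed).
    Returns a list of (time, height, descent_speed) samples.
    """
    h = h0
    trajectory = []
    steps = int(round(t_end / dt))
    for k in range(steps + 1):
        v = divergence * h          # speed implied by the constant divergence
        trajectory.append((k * dt, h, v))
        h -= v * dt                 # forward-Euler step of dh/dt = -v
    return trajectory

traj = constant_divergence_descent(h0=4.0, divergence=0.5)
for t, h, v in traj[::100]:
    exact = 4.0 * math.exp(-0.5 * t)  # analytic solution h0 * exp(-D t)
    print(f"t={t:4.1f} s  h={h:5.2f} m  v={v:5.2f} m/s  exact h={exact:5.2f} m")
```

Because the speed is always proportional to the remaining height, the touchdown speed in this idealized model tends to zero.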

I started my work on optical flow control out of enthusiasm for such elegant, simple strategies. However, developing the control methods to actually implement these strategies in flying robots turned out to be far from trivial. For example, when I first worked on optical flow landing, my flying robots would not actually land; instead, they started to oscillate, continuously going up and down just above the landing surface.

Honeybees are a fertile source of inspiration for the AI of small drones. They are able to perform an impressive repertoire of complex behaviors with very limited processing (~960,000 neurons). In turn, drones are very interesting “models” for biology: testing hypotheses from biology on drones can bring novel insights into the problems faced and solved by flying insects like honeybees.

Fundamental problems

Optical flow has two fundamental problems that have been widely described in the growing literature on bio-inspired robotics. The first problem is that optical flow only provides mixed information on distances and velocities: it reflects the ratio of velocity to distance, but not distance or velocity separately. To illustrate, if there are two landing drones and one of them flies twice as high and descends twice as fast as the other, then they experience exactly the same optical flow. However, for good control these two drones should actually react differently to deviations in the optical flow divergence. If a drone does not adapt its reactions to its height when landing, it will never arrive; instead, it will start to oscillate above the landing surface.
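
The scale ambiguity, and why a fixed gain leads to oscillations close to the ground, can be illustrated with a toy model. This Python sketch assumes D = v/h, a controller that commands an upward acceleration of -K·D, a divergence measurement that reaches the controller one control step (dt = 0.02 s) late, and small motions around a fixed height. The function names, the delay, and the gain value are all hypothetical choices for illustration, not the controller used in the article:

```python
import cmath

def divergence(height, descent_speed):
    """Divergence for a downward-looking camera, using D = v / h (1/s)."""
    return descent_speed / height

# The white drone is twice as high and descends twice as fast as the red one.
red = divergence(height=2.0, descent_speed=0.5)
white = divergence(height=4.0, descent_speed=1.0)
print(red, white)  # identical: the optical flow alone cannot tell them apart

def max_pole_modulus(gain, height, dt=0.02):
    """Largest closed-loop pole modulus of the toy delayed loop
    v[k+1] = v[k] - c * v[k-1], with c = dt * gain / height.

    The characteristic polynomial is z**2 - z + c; disturbances die out
    only if every root lies strictly inside the unit circle.
    """
    c = dt * gain / height
    root = cmath.sqrt(1 - 4 * c)
    return max(abs((1 + root) / 2), abs((1 - root) / 2))

GAIN = 40.0  # one fixed gain used at every height (hypothetical value)
for h in (2.0, 1.0, 0.5):
    r = max_pole_modulus(GAIN, h)
    verdict = "stable" if r < 1 else "growing oscillation"
    print(f"h = {h:.1f} m: max |pole| = {r:.2f} -> {verdict}")
```

In this model the effective loop gain is K/h, so a gain that behaves well at 2 m becomes unstable at 0.5 m, in line with the up-and-down oscillation just above the landing surface.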

The second problem is that optical flow is very small, and therefore carries little information, in the direction in which a robot is moving. This is very unfortunate for obstacle avoidance, because it means that the obstacles straight ahead of the robot are the hardest ones to detect! Both problems are illustrated in the figures below.

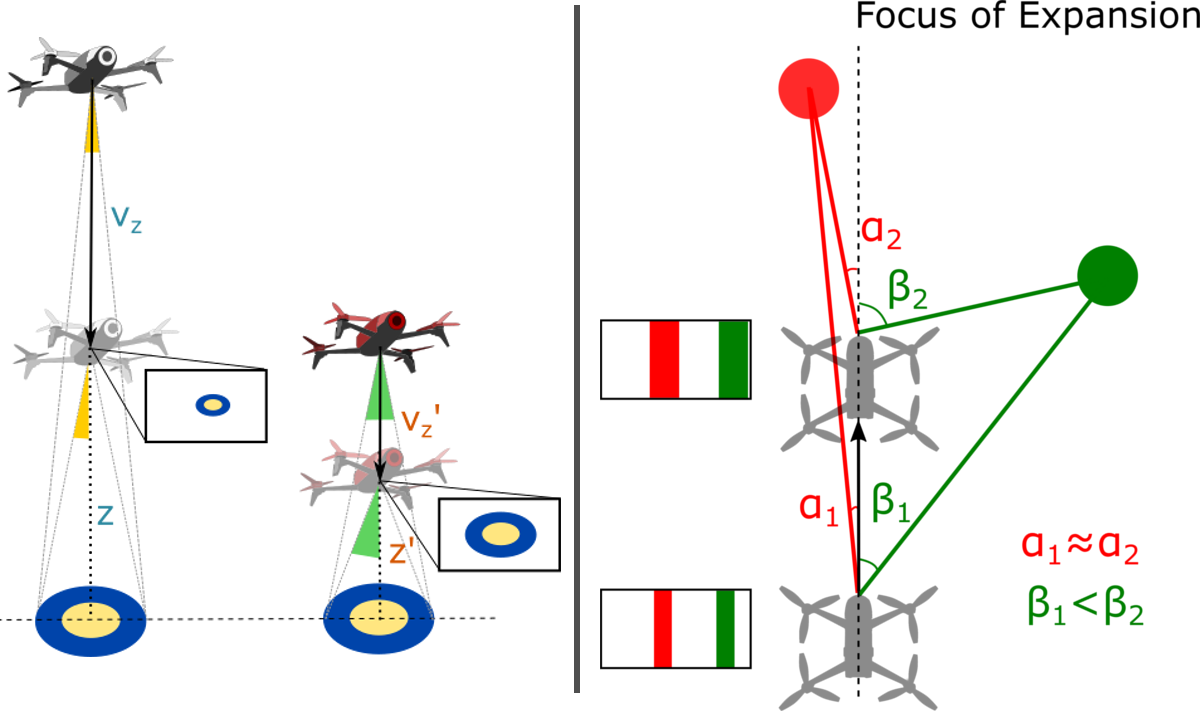

Left: Problem 1: The white drone is twice as high and descends twice as fast as the red drone. However, they both see the same optical flow divergence, as in both cases the object in view gets twice as big. This can be seen from the colored triangles: at the highest position the triangle spans the full landing platform, whereas at the lowest position only half of the landing platform falls within the field of view.

Right: Problem 2: The drone moves straight forward, in the direction of its forward-looking camera. Hence, the focus of expansion is straight ahead. Objects close to this direction, like the red obstacle, have very little flow. This is illustrated by the red lines in the figure: the angles of these lines with respect to the camera are very similar. Objects further from this direction, like the green obstacle, have considerable flow. Indeed, the green lines show that the angle quickly grows as the drone moves forward.
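
The weak flow near the focus of expansion follows from a standard relation for pure translation: a static point at distance d, seen at an angle θ from the direction of travel, moves across the image at an angular rate of (v/d)·sin θ. This Python sketch assumes the camera does not rotate; the speed, distance, and angles are made-up values chosen to mimic the red and green obstacles:

```python
import math

def translational_flow(speed, distance, angle_deg):
    """Angular image speed (rad/s) of a static point for pure translation.

    omega = (v / d) * sin(theta), where theta is the angle between the
    viewing direction and the direction of travel. Rotation is ignored.
    """
    return speed / distance * math.sin(math.radians(angle_deg))

speed = 2.0     # m/s, hypothetical forward speed
distance = 5.0  # m, both obstacles equally far away
for name, angle in (("red (near FoE)", 3.0), ("green (to the side)", 40.0)):
    flow = translational_flow(speed, distance, angle)
    print(f"{name:20s} flow = {math.degrees(flow):5.2f} deg/s")
```

At equal distance, the obstacle 3° from the flight direction produces less than a tenth of the flow of the one at 40°, and an obstacle exactly straight ahead produces no flow at all.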

Learning visual appearance as the solution

In an article published in Nature Machine Intelligence today [1], we propose a solution to both problems. The main idea is that both problems of optical flow would disappear if robots were able to interpret not only optical flow but also the visual appearance of objects in their environment. This solution becomes evident from the figures above, in which the rectangular insets show the images captured by the drones. For the first problem, the image perfectly captures the difference in height between the white and the red drone: the landing platform is simply larger in the red drone’s image. For the second problem, the red obstacle appears as large as the green one in the drone’s image; given that the obstacles have identical sizes, they are equally close to the drone.

Exploiting visual appearance as captured by an image would allow robots to see distances to objects in the scene, similarly to how we humans can estimate distances in a still picture. This would allow drones to immediately pick the right control gain for optical flow control, and it would allow them to see obstacles in the flight direction. The only question was: how can a flying robot learn to see distances like that?

The answer lay in a theory I devised a few years back [2], which showed that flying robots can actively induce optical flow oscillations to perceive distances to objects in the scene. In the approach proposed in the Nature Machine Intelligence article, the robots use such oscillations to learn what the objects in their environment look like at different distances. In this way, a robot can, for example, learn how fine the texture of grass looks from different heights during landing, or how thick tree trunks appear at different distances when navigating in a forest.
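
The idea of using self-induced oscillations to perceive distance can be sketched with a toy delayed control loop. This Python sketch is a simplified illustration under stated assumptions, not the algorithm of [2] or of the article: it assumes the divergence D = v/h reaches the controller one control step late and that the height stays roughly constant during the small motions. `oscillates`, `onset_gain`, and the control period `DT` are hypothetical. The robot raises its gain until a small disturbance starts to grow, and in this model the gain at which that happens is about h/DT, that is, proportional to height:

```python
DT = 0.02  # control period in seconds (hypothetical)

def oscillates(gain, height, dt=DT, steps=2000):
    """Return True if a small disturbance grows into an oscillation.

    Toy linearised loop around hover: upward acceleration -gain * D,
    with D = v / height measured one control step late.
    """
    v_prev, v = 0.0, 0.1  # initial descent-speed disturbance (m/s)
    for _ in range(steps):
        # delayed divergence feedback: uses the previous step's speed
        v_prev, v = v, v - dt * gain * (v_prev / height)
        if abs(v) > 1.0:  # disturbance grew tenfold: the loop is unstable
            return True
    return False

def onset_gain(height, gain_step=1.0, max_gain=1000.0):
    """Increase the gain step by step until oscillations appear."""
    gain = gain_step
    while gain <= max_gain:
        if oscillates(gain, height):
            return gain
        gain += gain_step
    return None

for h in (1.0, 2.0, 4.0):
    k = onset_gain(h)
    print(f"height {h:.1f} m: onset gain {k:.0f}, estimate k * DT = {k * DT:.2f} m")
```

Doubling the height roughly doubles the onset gain here; the gain step sets the resolution of the resulting height estimate.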

Relevance to robotics and applications

Implementing this learning process on flying robots led to much faster, smoother optical flow landings than we had ever achieved before. Moreover, for obstacle avoidance, the robots were now also able to see obstacles in the flight direction very clearly. This not only improved obstacle detection performance but also allowed our robots to fly faster. We believe that the proposed methods will be very relevant to resource-constrained flying robots, especially when they operate in rather confined environments, such as greenhouses, where they could monitor crops, or warehouses, where they could keep track of stock.

It is interesting to compare our way of distance learning with recent methods in the computer vision domain for single camera (monocular) distance perception. In the field of computer vision, self-supervised learning of monocular distance perception is done with the help of projective geometry and the reconstruction of images. This results in impressively accurate, dense distance maps. However, these maps are still “unscaled” – they can show that one object is twice as far as another one but cannot convey distances in an absolute sense.

In contrast, our proposed method provides “scaled” distance estimates. Interestingly, the scaling is not in terms of meters but in terms of the control gains that would lead the drone to oscillate, which makes it directly relevant for control. This feels very much like the way in which we humans perceive distances: for us, too, it may be more natural to reason in terms of actions (“Is an object within reach?”, “Roughly how many steps do I need to get to a place?”) than in terms of meters. It is hence strongly reminiscent of the perception of “affordances”, a concept put forward by Gibson, who also introduced the concept of optical flow [3].
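
The self-supervised step can be framed as a regression problem: the oscillation-onset gain serves as the label, and a visual appearance feature of the current image serves as the input. This Python sketch swaps in the simplest possible learner, an ordinary least-squares line on one hypothetical scalar feature (for instance, some measure of how fine the ground texture looks). The feature, the training pairs, `control_gain_from_appearance`, and the safety fraction are all made-up for illustration and are not data or methods from the article:

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Hypothetical self-supervised training pairs gathered during flight:
# appearance feature of the image -> gain at which oscillations started.
features = [10.0, 20.0, 30.0, 40.0]
onset_gains = [52.0, 101.0, 149.0, 200.0]
slope, intercept = fit_line(features, onset_gains)

SAFETY_FRACTION = 0.5  # hypothetical: fly at half the predicted onset gain

def control_gain_from_appearance(feature):
    """After learning, choose a gain from one image, without oscillating first."""
    return SAFETY_FRACTION * (slope * feature + intercept)

print(f"slope = {slope:.2f}, intercept = {intercept:.2f}")
print(f"gain for feature 25: {control_gain_from_appearance(25.0):.2f}")
```

Because the learned output is itself a gain rather than a distance in meters, the controller can use it directly, which is the sense in which such an estimate is “scaled”.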

Picture of our drone during obstacle avoidance experiments. At first, the drone flies forward while trying to keep its height constant by keeping the vertical optical flow equal to zero. When it gets close to an obstacle, it will start to oscillate. The increasing oscillation when approaching an obstacle is used to learn how to see distance by means of visual appearance. After learning, the drone can fly faster and more safely. For the experiments, we used a Parrot Bebop 2 drone, replacing its onboard software with the Paparazzi open-source autopilot and performing all computation onboard, on the drone’s native processor.

Relevance to biology

The findings are not only relevant to robotics, but also provide a new hypothesis for insect intelligence. Typical honeybee experiments start with a learning phase, in which honeybees exhibit various oscillatory behaviors while they get acquainted with a new environment and related novel cues, such as artificial flowers. The final measurements presented in articles typically take place after this learning phase has finished and focus predominantly on the role of optical flow. The learning process presented here forms a novel hypothesis on how flying insects improve their navigational skills over their lifetime. This suggests that more studies should be set up to investigate and report on this learning phase.

Guido de Croon, Christophe De Wagter, and Tobias Seidl.

References

[1] G.C.H.E. de Croon, C. De Wagter, and T. Seidl. Enhancing optical-flow-based control by learning visual appearance cues for flying robots. Nature Machine Intelligence, 3(1), 2021.

[2] G.C.H.E. de Croon. Monocular distance estimation with optical flow maneuvers and efference copies: a stability-based strategy. Bioinspiration & Biomimetics, 11(1), 016004.

[3] J.J. Gibson. The Ecological Approach to Visual Perception, 1979.
