Robohub.org

Towards building brain-like cognition and control for robots

Myon walking with human support. Source: Neurorobotics Research Lab

The idea of connecting brain-inspired models of computation to robots is probably as old as the discipline of robotics itself; as far back as 1950, neurophysiologist William Grey Walter had already conceived of an autonomous mobile platform that was controlled by brain-inspired analog circuitry[1]. Today, researchers are connecting robotics with neuroscience in order to both build intelligent robots and to better understand the brain. The workshop Advances in Biologically Inspired Brain-Like Cognition and Control for Learning Robots at IROS (Hamburg) brought together experts from diverse fields in brain-based robotics, neurorobotics, artificial neural networks and machine learning to discuss the state of the art.

Learning About Animal Locomotion Control using Robots

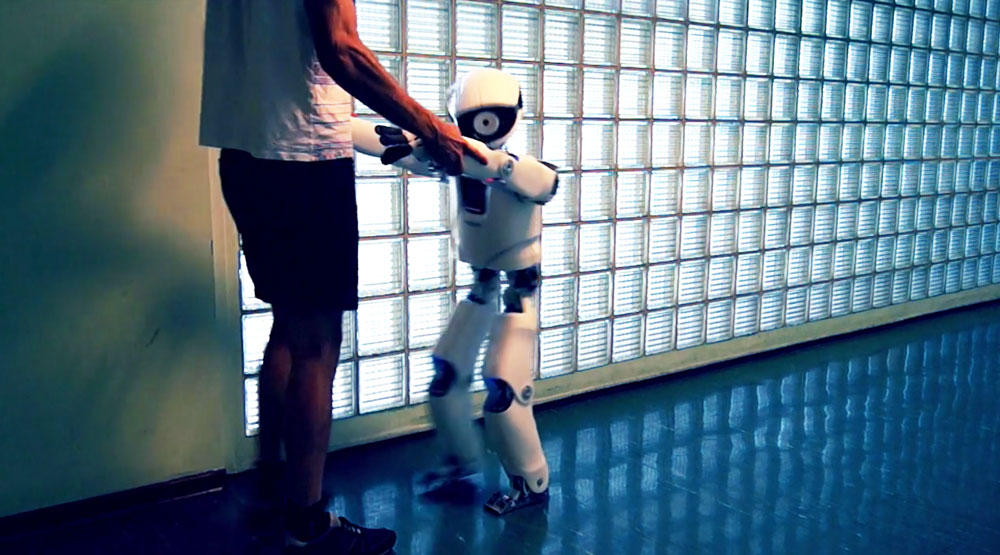

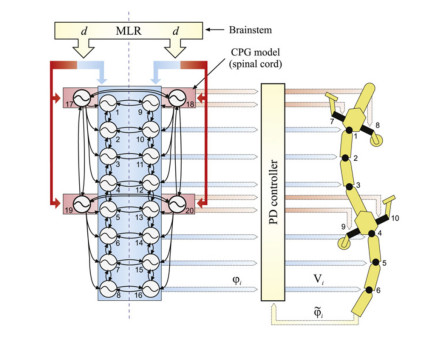

Salamander CPG model tested with an amphibious salamander-like robot (Ijspeert et al., 2007).

Unlike simulations of animal locomotion, biorobots allow researchers to observe physical interaction with the real world while avoiding the ethical issues of animal experimentation. In the first talk, Auke Ijspeert from EPFL in Switzerland introduced his research on animal locomotion and biorobotics and spoke about the advantages of using robots as an experimental tool.

After introducing the four essential ingredients of animal motor control (descending modulation from higher brain areas, reflexes, the musculoskeletal system, and central pattern generators, or CPGs), Ijspeert focused on his group's efforts to implement walking and swimming in a salamander-like robot using CPGs. CPGs are thought to be neural circuits located in the spinal cord of vertebrates; although clear experimental evidence is still lacking, researchers in the field suspect that they implement motion primitives that are controlled via modulation signals from the brain. In the salamander robot, such modulation enabled a CPG, modeled as a system of coupled oscillators, to switch between walking and swimming.
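To make drive-dependent gait switching more concrete, here is a minimal Python sketch of a chain of coupled phase oscillators modulated by a single descending drive signal. It is loosely inspired by the idea described above and does not reproduce the salamander model: the drive ranges, the linear drive-to-frequency map, the coupling weight, and all function names are illustrative assumptions. Limb oscillators are assumed to stop at a lower drive than body oscillators, so raising the drive switches the gait from walking to swimming, while phase biases between neighboring body segments produce a traveling wave.

```python
import math

# Illustrative drive ranges (assumed): limb oscillators saturate at a lower
# drive than body oscillators, so a strong drive leaves only the body active.
LIMB_RANGE = (1.0, 3.0)
BODY_RANGE = (1.0, 5.0)


def oscillator_frequency(drive, lo, hi, c0=0.3, c1=0.2):
    """Hypothetical linear drive-to-frequency map in Hz; 0 outside [lo, hi]."""
    if lo <= drive <= hi:
        return c0 + c1 * drive
    return 0.0


def gait_for_drive(drive):
    """Report which oscillator groups are active for a given drive."""
    limbs_on = oscillator_frequency(drive, *LIMB_RANGE) > 0.0
    body_on = oscillator_frequency(drive, *BODY_RANGE) > 0.0
    if limbs_on and body_on:
        return "walking"
    if body_on:
        return "swimming"
    return "rest"


def simulate_body_chain(drive, n=6, weight=4.0, dt=0.001, steps=20000):
    """Euler-integrate a chain of n phase oscillators:
        dtheta_i/dt = 2*pi*f + sum_j weight * sin(theta_j - theta_i - phi_ij)
    The phase biases phi_ij make each segment lag its head-side neighbor by
    2*pi/n, which produces a traveling wave from head to tail."""
    lag = 2.0 * math.pi / n
    omega = 2.0 * math.pi * oscillator_frequency(drive, *BODY_RANGE)
    theta = [0.0] * n  # start all segments in phase
    for _ in range(steps):
        rates = []
        for i in range(n):
            rate = omega
            if i > 0:  # head-side neighbor should lead by `lag`
                rate += weight * math.sin(theta[i - 1] - theta[i] - lag)
            if i < n - 1:  # tail-side neighbor should trail by `lag`
                rate += weight * math.sin(theta[i + 1] - theta[i] + lag)
            rates.append(rate)
        theta = [t + dt * r for t, r in zip(theta, rates)]
    return theta


def joint_setpoints(theta, amplitude=1.0):
    """Map oscillator phases to joint angle set-points."""
    return [amplitude * math.cos(t) for t in theta]


if __name__ == "__main__":
    for d in (0.5, 2.0, 4.0):
        print(d, gait_for_drive(d))
    phases = simulate_body_chain(drive=4.0)
    print(joint_setpoints(phases))
```

With these assumed parameters, `gait_for_drive(2.0)` returns `"walking"` and `gait_for_drive(4.0)` returns `"swimming"`, and after integration neighboring body segments settle into a constant phase lag of 2π/6.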

Further studies on the salamander robot include the incorporation of sensory feedback, which considerably improves performance in the presence of external perturbations. Ijspeert concluded by presenting recent research that showed how adding a CPG to a sensory-driven model of human bipedal locomotion can simplify the control of walking speed.

Employing Neural and Physical Dynamics for Robot Control

The second major theme of the workshop featured talks from three invited speakers. Manfred Hild from the Neurorobotics Research Laboratory at Beuth Hochschule für Technik Berlin, Germany, presented Self-Exploration of Autonomous Robots Using Attractor-Based Behavior Control. Rather than developing explicit models for his Myon [2] and Semni [3] robots, Hild aims to acquire implicit body representations through self-exploration.

The information gathered during this process is stored in sensorimotor manifolds that allow for behavior control. Learning these manifolds through naïve motor babbling is infeasible due to the complexity and size of the resulting data. In Hild’s approach, all joints are controlled independently by relatively simple cognitive sensorimotor loops. The embodied interplay of these individual loops enables attractor-based behavior control through transitions between different attractor states of the system formed by the robot and the environment. By varying the parameters of the individual cognitive sensorimotor loops, it is possible to learn sensorimotor manifolds in a highly efficient manner.

By contrast, Joni Dambre from the Reservoir Computing Lab at Ghent University in Belgium leverages dynamical systems for robot control. In her talk Practical approaches to exploiting body dynamics in robot motor control, she gave an overview of her group's research on applications of the reservoir computing paradigm in robotics, focusing on low-cost compliant robots whose dynamics are not analytically tractable and therefore cannot be controlled using standard methods from classical robotics.

The idea of reservoir computing was conceived independently by Jaeger[4] and Maass[5] in 2001 and 2002, respectively. In the original publications, the reservoir was modeled as a randomly generated recurrent network of artificial neurons or spiking neurons. Rather than training all connections of the network, reservoir computing adapts only a small set of linear readout units with a simple learning algorithm such as linear regression, while the internal weights of the complex reservoir network remain constant.

Based on this concept, Dambre's group has developed a method for embedding complex tunable CPGs into neural networks, as well as an approach for creating inverse models using reservoir computing. In another study, the reservoir network was replaced by the physical dynamics of a tensegrity robot, which was then able to learn CPGs. Combining reservoir computing with morphological computation therefore offers a simple way to exploit the robot's embodiment. If the body dynamics are not rich enough for the desired control task, they can be coupled with a neural reservoir. As an example, Dambre presented new results on learning to walk with the Oncilla robot.
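The reservoir computing recipe described above (a fixed random recurrent network plus a trained linear readout) fits in a few dozen lines of plain Python. This is an illustrative echo state network under stated assumptions, not code from Dambre's group: the reservoir size, the input scaling, the toy task (one-step-ahead prediction of a sine wave), and all function names are hypothetical. The recurrent weights are rescaled so that their maximum absolute row sum is below 1, which with tanh units is a simple sufficient condition for the fading-memory (echo state) property; only the readout is trained, here by ridge regression.

```python
import math
import random


def make_reservoir(n=30, input_scale=0.5, target_norm=0.9, seed=0):
    """Random recurrent weights W and input weights W_in (assumed sizes/scales).
    W is rescaled so its maximum absolute row sum is target_norm < 1; with tanh
    units this makes the state update a contraction (echo state property)."""
    rng = random.Random(seed)
    W = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    norm = max(sum(abs(w) for w in row) for row in W)
    W = [[w * target_norm / norm for w in row] for row in W]
    W_in = [rng.uniform(-input_scale, input_scale) for _ in range(n)]
    return W, W_in


def run_reservoir(W, W_in, inputs):
    """Drive the fixed reservoir and collect states (plus a constant bias)."""
    n = len(W_in)
    x = [0.0] * n
    states = []
    for u in inputs:
        x = [math.tanh(sum(W[i][j] * x[j] for j in range(n)) + W_in[i] * u)
             for i in range(n)]
        states.append(x + [1.0])
    return states


def solve(A, b):
    """Solve A w = b by Gaussian elimination with partial pivoting."""
    m = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for c in range(m):
        p = max(range(c, m), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, m):
            f = M[r][c] / M[c][c]
            for k in range(c, m + 1):
                M[r][k] -= f * M[c][k]
    w = [0.0] * m
    for r in range(m - 1, -1, -1):
        w[r] = (M[r][m] - sum(M[r][k] * w[k] for k in range(r + 1, m))) / M[r][r]
    return w


def train_readout(states, targets, ridge=1e-4):
    """Ridge regression for the linear readout: (S^T S + ridge*I) w = S^T y.
    This is the only trained part; W and W_in stay fixed."""
    d = len(states[0])
    A = [[sum(s[i] * s[j] for s in states) + (ridge if i == j else 0.0)
          for j in range(d)] for i in range(d)]
    b = [sum(s[i] * y for s, y in zip(states, targets)) for i in range(d)]
    return solve(A, b)


def readout(w, state):
    return sum(wi * si for wi, si in zip(w, state))


if __name__ == "__main__":
    # Toy task (assumed): predict the next sample of a sine wave.
    W, W_in = make_reservoir()
    u = [math.sin(0.2 * t) for t in range(501)]
    states = run_reservoir(W, W_in, u[:-1])  # states[t] has seen u[0..t]
    targets = u[1:]                          # target at step t is u[t+1]
    w = train_readout(states[100:400], targets[100:400])  # skip a washout
    test = list(zip(states[400:], targets[400:]))
    mse = sum((readout(w, s) - y) ** 2 for s, y in test) / len(test)
    print(f"test MSE: {mse:.2e}")
```

Only `train_readout` involves learning. Replacing the simulated reservoir states with measured body states would leave that training step unchanged, which is what makes the physical-reservoir variant described above possible.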

The last presentation on neural dynamics was given by Herbert Jaeger from the MINDS group at Jacobs University in Bremen, Germany. In his talk Generating and Modulating Complex Motion Patterns with Recurrent Neural Networks and Conceptors, Jaeger (one of the inventors of the reservoir computing approach) outlined a novel computational principle called conceptors. Conceptors are an extension of Jaeger's echo state network approach to reservoir computing and enable a recurrent network of artificial neurons to store and reproduce dynamical patterns. For each pattern stored in the network, a corresponding conceptor is generated. By later inserting this conceptor as a neural filter into the state update loop of the reservoir network, the corresponding pattern can be retrieved. Since a single reservoir can store several different motion patterns at the same time, patterns can also be morphed by linearly blending between different conceptors. Jaeger demonstrated this using recorded human motion patterns.
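The core conceptor computations are compact. In Jaeger's formulation, a conceptor is computed from the correlation matrix R of the reservoir states recorded while a pattern drives the network, as C = R (R + α^-2 I)^-1, where α is an "aperture" parameter; during retrieval the state update becomes x(n+1) = C tanh(W x(n) + b). The plain-Python sketch below implements these two formulas and the linear blend used for morphing. It assumes the reservoir W has already been "loaded" (trained to reproduce the patterns without external input), a step it omits, and its function names are hypothetical.

```python
import math


def correlation_matrix(states):
    """R = (1/N) * sum_n x(n) x(n)^T over recorded reservoir states."""
    n, N = len(states[0]), len(states)
    return [[sum(x[i] * x[j] for x in states) / N for j in range(n)]
            for i in range(n)]


def invert(A):
    """Matrix inverse by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [1.0 if i == j else 0.0 for j in range(n)]
         for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        piv = M[c][c]
        M[c] = [v / piv for v in M[c]]
        for r in range(n):
            if r != c:
                f = M[r][c]
                M[r] = [a - f * b for a, b in zip(M[r], M[c])]
    return [row[n:] for row in M]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def conceptor(states, aperture):
    """C = R (R + aperture^-2 I)^-1: passes directions the pattern uses."""
    R = correlation_matrix(states)
    n = len(R)
    shifted = [[R[i][j] + (aperture ** -2 if i == j else 0.0) for j in range(n)]
               for i in range(n)]
    return matmul(R, invert(shifted))


def blend(C1, C2, mu):
    """Linear morph between two conceptors: (1 - mu) * C1 + mu * C2."""
    return [[(1.0 - mu) * a + mu * b for a, b in zip(r1, r2)]
            for r1, r2 in zip(C1, C2)]


def conceptor_step(C, W, bias, x):
    """One autonomous update with the conceptor as a filter in the loop:
    x(n+1) = C tanh(W x(n) + b). W is assumed to be an already-loaded reservoir."""
    n = len(x)
    pre = [math.tanh(sum(W[i][j] * x[j] for j in range(n)) + bias[i])
           for i in range(n)]
    return [sum(C[i][j] * pre[j] for j in range(n)) for i in range(n)]


if __name__ == "__main__":
    # Toy state recordings (assumed) standing in for two driven patterns.
    states_a = [[math.sin(0.3 * t), 0.0] for t in range(100)]
    states_b = [[0.0, math.cos(0.5 * t)] for t in range(100)]
    C_a, C_b = conceptor(states_a, 4.0), conceptor(states_b, 4.0)
    print(blend(C_a, C_b, 0.3))  # 70% pattern a, 30% pattern b
```

For example, states that vary only along the first axis give `conceptor([[1.0, 0.0], [-1.0, 0.0]], 1.0) == [[0.5, 0.0], [0.0, 0.0]]`: the conceptor passes half of the used direction and suppresses the unused one, and a larger aperture lets more of the used direction through.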

Training and Understanding Deep Neural Networks

The recent successes of deep learning techniques have brought new momentum to the field of machine learning and heralded a renaissance in artificial neural networks. Deep neural networks can achieve superhuman performance in traffic sign recognition[6], learn to play computer games[7], and automatically generate appropriate responses to e-mail messages[8], making them highly attractive for commercial applications ranging from autonomous driving to automated image tagging. It is thus no surprise that companies like Facebook, Google and Microsoft have founded their own deep learning research laboratories.

In his talk on Training and Understanding Deep Neural Networks for Robotics, Design, and Perception, Jason Yosinski from the Creative Machines Lab at Cornell University shed light on some quite different applications and properties of deep neural networks. He began by demonstrating his work on compositional pattern producing networks (CPPNs) for generating 3D models, in which the shape of the generated forms is controlled by an evolutionary algorithm. On endlessforms.com, users can try out this algorithm live by selecting their preferred forms as a starting point and watching how they evolve over successive generations. Yosinski has also applied CPPNs to evolve gaits for the four-legged robot Aracna.
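A CPPN is a network of heterogeneous functions (sine, Gaussian, sigmoid and so on) applied to spatial coordinates; querying it at every point of a grid yields a shape, and evolution changes the network. The sketch below is a simplified illustration under stated assumptions, not the EndlessForms code: it uses a fixed topology with one hidden layer and only mutates weights (CPPN evolution as usually done with NEAT also adds nodes and connections), and the user's interactive choice is replaced by a placeholder rule. All names and parameters are hypothetical.

```python
import math
import random

# Heterogeneous activation functions distinguish a CPPN from an ordinary MLP:
# Gaussians tend to produce symmetry, sines tend to produce repetition.
ACTIVATIONS = {
    "sin": math.sin,
    "gauss": lambda v: math.exp(-v * v),
    "sigmoid": lambda v: 0.5 * (1.0 + math.tanh(0.5 * v)),
    "abs": abs,
}


def random_cppn(n_hidden=6, seed=0):
    """Fixed-topology CPPN: inputs (x, y, z, d, bias) -> hidden -> output."""
    rng = random.Random(seed)
    names = sorted(ACTIVATIONS)
    hidden = [(rng.choice(names), [rng.uniform(-2.0, 2.0) for _ in range(5)])
              for _ in range(n_hidden)]
    out = [rng.uniform(-2.0, 2.0) for _ in range(n_hidden + 1)]  # last = bias
    return {"hidden": hidden, "out": out}


def query(cppn, x, y, z):
    """Evaluate the CPPN at one point in space; the output lies in [-1, 1]."""
    d = math.sqrt(x * x + y * y + z * z)  # distance from the center
    inputs = (x, y, z, d, 1.0)
    h = [ACTIVATIONS[name](sum(w * v for w, v in zip(ws, inputs)))
         for name, ws in cppn["hidden"]]
    return math.tanh(sum(w * v for w, v in zip(cppn["out"], h + [1.0])))


def render(cppn, res=12):
    """Voxelize: keep grid cells in [-1, 1]^3 where the output is positive."""
    coords = [-1.0 + 2.0 * i / (res - 1) for i in range(res)]
    return {(i, j, k)
            for i, x in enumerate(coords)
            for j, y in enumerate(coords)
            for k, z in enumerate(coords)
            if query(cppn, x, y, z) > 0.0}


def mutate(cppn, rng, sigma=0.3):
    """Gaussian weight perturbation (topology mutations are omitted here)."""
    hidden = [(name, [w + rng.gauss(0.0, sigma) for w in ws])
              for name, ws in cppn["hidden"]]
    out = [w + rng.gauss(0.0, sigma) for w in cppn["out"]]
    return {"hidden": hidden, "out": out}


def next_generation(parent, n_children=8, seed=1):
    """Offspring of the shape a user picked; the user then picks again."""
    rng = random.Random(seed)
    return [mutate(parent, rng) for _ in range(n_children)]


if __name__ == "__main__":
    parent = random_cppn()
    for generation in range(3):
        children = next_generation(parent, seed=generation)
        sizes = [len(render(c)) for c in children]
        print(generation, sizes)
        # Placeholder for a human choice (assumed): keep the largest shape.
        parent = children[sizes.index(max(sizes))]
```

Because the rendered shape depends only on the network's weights (and, in full CPPN evolution, its topology), "evolving a form" here amounts to keeping the chosen parent and mutating it.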

The second part of Yosinski's talk was dedicated to methods for visualizing and understanding the inner workings of deep neural networks such as AlexNet[9]. He demonstrated how CPPNs can be used to generate artificial images that deep convolutional neural networks falsely recognize with high confidence. This approach was used in the Deep Visualization Toolbox[10], an open source tool for visualizing and analyzing deep neural networks.
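The fooling-image idea can be illustrated with toy stand-ins. This hedged sketch swaps in simpler components than those described in the talk: a direct pixel encoding instead of a CPPN, and a fixed random linear softmax classifier instead of a deep network such as AlexNet. A (1+1) evolution strategy repeatedly mutates a 16-pixel "image" and keeps the child only if the classifier's confidence in a chosen class does not decrease, so the image is optimized for the classifier's score rather than for resembling anything. All names and parameters are hypothetical.

```python
import math
import random


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    s = sum(exps)
    return [e / s for e in exps]


def make_toy_classifier(n_pixels=16, n_classes=3, seed=0):
    """Hypothetical fixed linear classifier standing in for a deep network."""
    rng = random.Random(seed)
    return [[rng.uniform(-3.0, 3.0) for _ in range(n_pixels)]
            for _ in range(n_classes)]


def confidence(weights, image, target):
    """Softmax probability the classifier assigns to class `target`."""
    logits = [sum(w * p for w, p in zip(row, image)) for row in weights]
    return softmax(logits)[target]


def evolve_fooling_image(weights, target, steps=2000, sigma=0.2, seed=1):
    """(1+1) evolution: mutate all pixels, keep the child if the confidence in
    `target` does not decrease. Pixels are clipped to [0, 1]."""
    rng = random.Random(seed)
    n = len(weights[0])
    image = [rng.random() for _ in range(n)]
    best = confidence(weights, image, target)
    history = [best]
    for _ in range(steps):
        child = [min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in image]
        score = confidence(weights, child, target)
        if score >= best:
            image, best = child, score
        history.append(best)
    return image, best, history


if __name__ == "__main__":
    W = make_toy_classifier()
    image, conf, history = evolve_fooling_image(W, target=0)
    print(f"confidence: {history[0]:.3f} -> {conf:.3f}")
```

The search never asks whether the image looks like anything; it only climbs the classifier's confidence, which is why the resulting images can be meaningless to a human yet scored highly by the network.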

The Neurorobotics Platform of the Human Brain Project

In the last talk of the workshop, Stefan Ulbrich from FZI Forschungszentrum Informatik in Karlsruhe, Germany, presented The Neurorobotics Platform[11] of the Human Brain Project[12]. This platform is developed within the Neurorobotics Subproject of the Human Brain Project and will provide both roboticists and neuroscientists with tools to connect robot models to large-scale brain simulations running on supercomputers or neuromorphic hardware. All functionality is accessible via a modern web-based user interface that displays a live visualization of the simulated robot, its environment, and live data such as brain activity or robot state parameters. A separate closed-loop engine connects and synchronizes the robot and brain simulation components, and the data exchanged between brain and robot is processed by user-definable transfer functions. Dedicated designer tools will enable users to define their own robots, environments and experiments; alternatively, ready-to-run setups will be made available in a catalogue.
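The closed-loop idea (two simulators advanced in lockstep, with user-defined transfer functions translating between them) can be illustrated with toy stand-ins. This sketch does not use the Neurorobotics Platform's actual API: `ToyRobot`, `ToyBrain`, the two transfer functions, and all parameters are hypothetical. A one-dimensional robot approaches a wall at position 1.0; a single rate neuron receives the distance reading, and its firing rate sets the robot's velocity, so the robot slows down as it nears the wall.

```python
from dataclasses import dataclass


@dataclass
class ToyRobot:
    """Hypothetical stand-in for a physics-simulated robot."""
    position: float = 0.0
    velocity: float = 0.0

    def step(self, dt):
        self.position += self.velocity * dt

    def distance_sensor(self, wall=1.0):
        return wall - self.position


@dataclass
class ToyBrain:
    """Hypothetical stand-in for a brain simulation: one leaky rate neuron."""
    rate: float = 0.0
    input_current: float = 0.0
    tau: float = 0.02  # time constant in seconds (assumed)

    def step(self, dt):
        drive = max(0.0, self.input_current)
        self.rate += dt / self.tau * (-self.rate + drive)


def robot_to_brain(robot, brain):
    """Transfer function (user-definable): sensor reading -> input current."""
    brain.input_current = robot.distance_sensor()


def brain_to_robot(brain, robot):
    """Transfer function (user-definable): firing rate -> motor command."""
    robot.velocity = 0.5 * brain.rate


def closed_loop(robot, brain, dt=0.001, steps=5000):
    """Advance both simulations by the same dt each cycle to keep them in sync."""
    for _ in range(steps):
        robot_to_brain(robot, brain)
        brain.step(dt)
        robot.step(dt)
        brain_to_robot(brain, robot)
    return robot, brain


if __name__ == "__main__":
    robot, brain = closed_loop(ToyRobot(), ToyBrain())
    print(f"position after 5 s: {robot.position:.3f}")
```

After five simulated seconds the robot has covered most of the distance to the wall without reaching it. Swapping in different transfer functions changes the behavior without touching either simulator, which is the separation of concerns the platform's design aims for.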

After outlining the roadmap for developing new features for the Neurorobotics Platform, Ulbrich closed his presentation with a video showing the platform in action. Interested users will be able to access and try out the Neurorobotics Platform after its public release in April 2016.

https://www.youtube.com/watch?v=b_RjzdlN0y4

Poster Session

The invited talks were complemented by a poster session that presented a range of topics, including a review of the role of time in neurobiological learning algorithms, an approach to cognitive walking, and a neural architecture for autonomous task recognition, learning and execution. Posters with a special focus on neurorobotics covered the cerebellar control of a compliant robot arm and selected experiments from the Neurorobotics Platform.

Final thoughts

The variety of topics in the workshop and the diverse backgrounds of the invited speakers demonstrate that a great deal of research is under way in machine learning and biologically inspired cognition, and that much of it goes beyond standard deep convolutional neural networks. Instead of asking 'which of the proposed methods will outperform all of its competitors?', the question should rather be 'how can these methods and their individual strengths be combined to build better robots?' This workshop was an important first step in that direction.

To foster future collaboration, a call for contributions to a special issue of the journal Frontiers in Neurorobotics on the topic of the workshop will soon be announced. Further information about the workshop and abstracts of all talks and posters are available at www.neurorobotics.net.

[1] http://www.nature.com/scientificamerican/journal/v182/n5/pdf/scientificamerican0550-42.pdf

[2] http://www.neurorobotik.de/robots/myon_de.php

[3] http://www.neurorobotik.de/robots_de.php

[4] Jaeger, H. (2001). The "echo state" approach to analysing and training recurrent neural networks. GMD Report.

[5] Maass, W., Natschläger, T., & Markram, H. (2002). Real-time computing without stable states: A new framework for neural computation based on perturbations. Neural Computation, 14(11), 2531-2560.

[6] http://people.idsia.ch/~juergen/superhumanpatternrecognition.html

[7] http://arxiv.org/abs/1312.5602

[8] http://googleresearch.blogspot.ch/2015/11/computer-respond-to-this-email.html

[9] Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). Imagenet classification with deep convolutional neural networks. In Advances in neural information processing systems (pp. 1097-1105).

[10] http://yosinski.com/deepvis

[11] http://www.neurorobotics.net

[12] http://www.humanbrainproject.eu
