Robohub.org

Where’s Susi? Airborne orangutan tracking with python and react.js

You are faced with a few thousand hectares of rainforest that you know harbours one or more orangutans that you need to track down. Where, how, and why do you start looking?

Background

About a year ago I was doing a lot of drone-related work and was presented with the following problem: Would it be possible to use a drone to fly above the Bornean jungle and search for tagged orangutans?

To understand the motivation behind the question we need some background.

The orangutan is one of the five great apes and is found only on the islands of Borneo and Sumatra. They split off from the main African ape line about 16-19 million years ago and are extremely intelligent, strong, and agile animals. Sadly, extreme pressure on its habitat from loggers, palm oil plantations, forest fires, and general human activity has led the orangutan to become endangered, or even critically endangered in the case of the Sumatran orangutan. Unfortunately for the species, its high intelligence and cuteness factor, particularly of the babies, has additionally led to their capture and sale as pets, often held in deplorable circumstances.

As a result a large number of NGOs, charities, and rescue centres have come into existence to try to save what little habitat there is left. As an aside, while this post will focus on the orangutan, it is important to note that the plight of the orangutan, albeit very visible, is just one symptom of the more fundamental issue of how humans manage and interact with the natural environment. There are hundreds of other plant and animal species that share a similar, if not worse, plight and have received just a fraction of the attention and resources orangutans have.

In my case my main point of contact has been International Animal Rescue and thus this post will reflect most closely their needs and workflows. There are of course other orangutan (rescue) centres such as BOS, SOCP, Project Orangutan, etc. and I was lucky to meet them all during a workshop in Indonesia last May. Each of them does wonderful and humbling work in its own right, and they face similar challenges. One thing I did learn throughout this is that every individual orangutan is different, and that every orangutan sanctuary is different. What works for one may not work for the other.

The main focus of an orangutan rescue centre is to work with the local community to protect habitat and rehabilitate orangutans that have been confiscated from owners or found in palm oil plantations. The ultimate goal is to release them back into the wild where they can live out a normal, healthy life. For some though this is no longer possible as they came in too old, too young (and thus did not spend enough time learning from their mother), or sustained too much trauma. For those that can be released back it is important to keep track of how well they survive in the wild so that the release program can be optimised to maximise chances of survival. This is the post-release monitoring phase and, depending on the protocols of the centre, can be very intensive, taking up to 2-3 years.

Post release monitoring

Anybody who has set foot in proper rainforest will know how hard it is to find and track anything, let alone an agile, intelligent, tree-climbing ape. As a result a small tracking beacon has been developed that is implanted in the back of the neck and transmits a regular pulse at a particular VHF frequency. Note that it is simply a keyed pulse: there is no modulation or data transmission of any sort. Given a receiver and a 3-element Yagi, it then becomes possible to pick up the signal and track down the animal. If you are within 200-400m of it that is… (on average).

The rescue centres are well aware that this is simple and relatively old technology. However it is the only thing available. There are some ideas brewing but that is a completely different story.

The first couple of months of post-release monitoring are very intense. A team of two people will follow an animal through the jungle and monitor its behaviour close to 24/7. As confidence in the animal grows this will then taper off over a period of 2-3 years. The problem then becomes this: if you have not seen the animal for 2 months and your radio tracking equipment only gives you 200-400m range, where on earth do you start looking? Searching manually (the current method) is extremely time consuming and frustrating. Again, something you only appreciate if you have stood there in the mud, heat and mosquitos.

A view from above

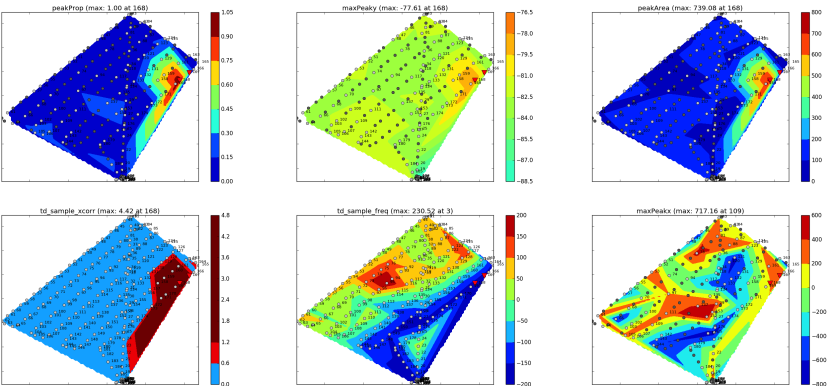

Hence the idea of using a drone to search an area from above and generate a heatmap of where it detected the implants. Manual search efforts could then be targeted accordingly. While the system is not perfect yet, a year later I’m quite confident that the answer is yes, it can be done.

This deserves another aside. Note that the fundamental problem lies with the poor range of the implants and the fact that all you get is a ping. The drone is just a band-aid to turn the needle in a haystack problem into a needle in a bale problem. The implants do work (though the failure rate is rather high), but there is no long term data about how safe they are over a period of 5-50 years. This is a trade-off every centre needs to make for itself and hence some centres refuse to use them altogether.

In the ideal world you have a transmitter that you can attach to the animal externally, that has GPS, and that talks straight to a satellite. However, orangutans are highly intelligent, and their physiology makes it difficult to attach anything externally. Even then, having something that does not harm or impede the animal, can adapt as the animal gains/loses weight, cannot be removed by the animal itself, and has a power source that runs for at least 3 years, is not trivial. Not impossible, I have done plenty of brainstorming around possible solutions, but definitely a longer term project that I know at least one group is exploring together with Serge from Conservation Drones. There are also other ideas floating about, around the use of sensor networks and completely passive sensors.

All this to say that I don’t see the drone as the be-all-end-all solution, but as a useful tool to satisfy a current need.

Implementation

Fundamentally the problem is actually very simple: detecting a keyed radio pulse against background noise and localising each detection in space and time. My take on these things is always a very pragmatic one. Start simple, test often, and most importantly, build something people will and can use sustainably. Else, why bother? I have seen far too many fancy one-off demos or academic papers that remain just that. This does make it harder. You have to take into account the human & operational element and be willing to walk away from the idea if it turns out to be the wrong approach.

Hence, while initial tests and brainstorming were done around directional antennas and closed-loop control, we decided to start off simple and use an omnidirectional antenna together with pre-defined automated search patterns. This would also allow for detecting multiple animals simultaneously. Definitely less efficient, but also fewer failure modes. For the same reason the choice was made to stick with fairly standard off-the-shelf hardware components: a common Software Defined Radio (SDR) module, Pixhawk autopilot, Odroid companion computer, and a versatile but compact & efficient multirotor frame (actually the same one as used for my metal detector and deep drone experiments). As for the antenna, different types were tried and in the end we settled for a lightweight loop.
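
To make the idea of a pre-defined search pattern concrete, here is a minimal sketch of a lawnmower (back-and-forth) pattern over a rectangular area, in local metre coordinates. The function names, parameters, and the example track spacing are assumptions for illustration only; this is not the flight planning code that was actually used.

```python
def lawnmower_waypoints(width_m, height_m, spacing_m):
    """Return (x, y) waypoints in metres covering a width x height rectangle.

    Tracks run along the x axis and sit spacing_m apart in y. In practice
    the spacing would be picked from the expected detection radius
    (the value used below is a made-up example).
    """
    if width_m <= 0 or height_m <= 0 or spacing_m <= 0:
        raise ValueError("all dimensions must be positive")
    n_tracks = int(height_m // spacing_m) + 1
    waypoints = []
    for i in range(n_tracks):
        y = i * float(spacing_m)
        # Alternate direction on every track so the end of one track
        # is next to the start of the following one.
        if i % 2 == 0:
            start_x, end_x = 0.0, float(width_m)
        else:
            start_x, end_x = float(width_m), 0.0
        waypoints.append((start_x, y))
        waypoints.append((end_x, y))
    return waypoints


def path_length_m(waypoints):
    """Total straight-line distance between consecutive waypoints."""
    return sum(
        ((x2 - x1) ** 2 + (y2 - y1) ** 2) ** 0.5
        for (x1, y1), (x2, y2) in zip(waypoints, waypoints[1:])
    )


# Example: a 400 m x 300 m box with tracks 100 m apart gives 4 tracks.
waypoints = lawnmower_waypoints(400, 300, 100)
print(len(waypoints), path_length_m(waypoints))  # 8 waypoints, 1900.0 m
```

Tighter spacing gives more overlap between passes at the cost of flight time, which matters when battery capacity is the limiting factor.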

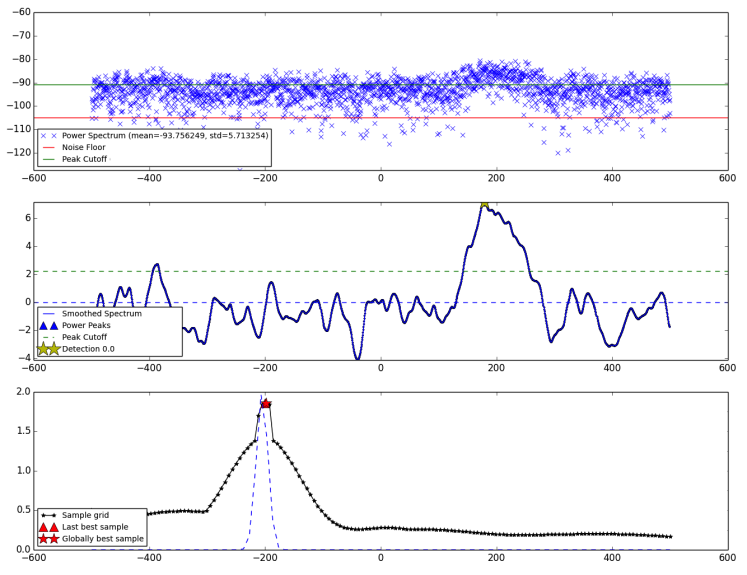

Detecting a pulse in frequency & time domain

On the software side, brain cycles were minimised through leveraging the python scientific computing stack. The core detection loop consists of collecting samples from the SDR, performing some signal processing in the time and frequency domain to detect the presence of a pulse, and logging this to persistent storage together with the current location, altitude, speed, and heading of the drone. A complication is that neither the radio equipment nor the transmitter operates at exactly the frequency you tell it to. So the frequency you actually detect the pulse at will be offset and can vary with the environmental conditions. The whole system operates completely offline with no communication link with the ground assumed.
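
As a rough illustration of that detection step, the sketch below looks for a short keyed pulse in a block of complex IQ samples. It splits the block into time windows, measures the power at a handful of candidate frequencies around the nominal one (to tolerate the frequency offset), and compares the strongest window against the median window as a noise floor. It uses only the standard library so that it runs anywhere; in practice numpy's FFT would be the natural choice. All names, the synthetic test signal, and the numeric values (sample rate, window length, 15 dB threshold) are assumptions, not the processing chain actually flown.

```python
import cmath
import math
import random
import statistics


def bin_power(window, sample_rate_hz, freq_hz):
    """Power of one window of IQ samples at a single frequency (one DFT bin)."""
    step = -2j * math.pi * freq_hz / sample_rate_hz
    acc = sum(s * cmath.exp(step * n) for n, s in enumerate(window))
    return abs(acc / len(window)) ** 2


def detect_pulse(samples, sample_rate_hz, nominal_hz, search_hz=50.0,
                 step_hz=10.0, window_len=200, threshold_db=15.0):
    """Return {'freq_hz', 'snr_db', 'window'} for the best pulse, or None."""
    windows = [samples[i:i + window_len]
               for i in range(0, len(samples) - window_len + 1, window_len)]
    if not windows:
        return None
    n_steps = int(search_hz // step_hz)
    best = None
    for k in range(-n_steps, n_steps + 1):
        # Scan candidate frequencies around the nominal one.
        freq = nominal_hz + k * step_hz
        powers = [bin_power(w, sample_rate_hz, freq) for w in windows]
        peak = max(powers)
        if best is None or peak > best["peak"]:
            # The median is a usable noise floor while the pulse occupies
            # fewer than half of the windows (short duty cycle assumed).
            best = {"freq_hz": freq, "peak": peak,
                    "window": powers.index(peak),
                    "floor": statistics.median(powers)}
    if best["floor"] <= 0.0:
        return None
    snr_db = 10.0 * math.log10(best["peak"] / best["floor"])
    if snr_db < threshold_db:
        return None
    return {"freq_hz": best["freq_hz"], "snr_db": snr_db,
            "window": best["window"]}


def synthetic_capture(n_samples, sample_rate_hz, pulse_hz=None,
                      pulse_start=0, pulse_len=0, noise_std=0.3, seed=0):
    """Gaussian noise with an optional unmodulated tone burst (test data)."""
    rng = random.Random(seed)
    out = []
    for n in range(n_samples):
        s = complex(rng.gauss(0.0, noise_std), rng.gauss(0.0, noise_std))
        if pulse_hz is not None and pulse_start <= n < pulse_start + pulse_len:
            s += cmath.exp(2j * math.pi * pulse_hz * n / sample_rate_hz)
        out.append(s)
    return out


# A pulse 30 Hz away from where we "expect" it, buried in noise.
capture = synthetic_capture(4000, 8000.0, pulse_hz=1030.0,
                            pulse_start=1600, pulse_len=400)
print(detect_pulse(capture, 8000.0, nominal_hz=1000.0))
```

In a real loop each returned detection would be written out together with the autopilot's position, altitude, speed, and heading at that moment.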

In some sense, this approach has been the sledgehammer one, given the relatively simple fundamental RF problem. But it also brings advantages in terms of flexibility.

The initial interaction with the system was through running a number of scripts over ssh, but this was of course not an option for a non-techie. Hence it was a great excuse to try out react.js to develop a browser based UI served through flask over wifi. That way, all that is needed to manage the tracking system is a tablet & browser from which to start / stop the tracking and review the results as an interactive heatmap using leaflet. Alternatively, the data can be downloaded as a CSV file or a series of geotiffs. If an internet connection is available, then Google satellite or Bing Aerial imagery can serve as basemap. Otherwise, a local tile layer can be used. For example, IAR had already created an orthophoto of the pre-release site, so that was then easily turned into tiles and hosted locally on the odroid.
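
The CSV export and the heatmap can be pictured as two views of the same detection log. The sketch below, using only the standard library, serialises a list of detections to CSV text and bins them into a latitude/longitude grid, keeping the strongest detection per cell. The column names, the cell size, and the sample coordinates are hypothetical; the real payload's log format is not described here.

```python
import csv
import io
import math

# Hypothetical column names for the detection log.
FIELDS = ["time_s", "lat", "lon", "alt_m", "snr_db"]


def detections_to_csv(rows):
    """Serialise detection dicts (keys matching FIELDS) to CSV text."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


def heatmap_cells(rows, cell_deg=0.001):
    """Bin detections into a lat/lon grid, keeping the max SNR per cell.

    Returns {(lat_index, lon_index): max_snr_db}. A cell of 0.001 degrees
    is roughly 110 m near the equator (an assumed, not a tuned, value).
    """
    cells = {}
    for r in rows:
        key = (math.floor(r["lat"] / cell_deg),
               math.floor(r["lon"] / cell_deg))
        cells[key] = max(cells.get(key, float("-inf")), r["snr_db"])
    return cells


# Made-up detections: two fall in the same grid cell, one in another.
detections = [
    {"time_s": 12.0, "lat": -1.2345, "lon": 110.0505, "alt_m": 55.0, "snr_db": 18.0},
    {"time_s": 13.0, "lat": -1.2348, "lon": 110.0502, "alt_m": 55.0, "snr_db": 22.0},
    {"time_s": 40.0, "lat": -1.2365, "lon": 110.0505, "alt_m": 55.0, "snr_db": 16.0},
]
print(detections_to_csv(detections))
print(heatmap_cells(detections))
```

Keeping the maximum per cell, rather than a count, avoids over-weighting areas the drone simply flew over more often; whether that is the right choice depends on the search pattern.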

Browser based UI for interacting with the payload

Tests: UK

Initial tests were done purely on the ground and many hours were spent trying antennas, changing parameters, and all round fiddling. One of the first things that was tested was interference from the drone. Surprisingly, this was not found to be a big issue with the platform I was using. I did notice during later tests that when we mounted the tracking payload on IAR’s DJI Inspire, the noise level was noticeably higher.

Once reasonably happy with results on the ground, I started testing from the air. This again involved many iterations and frustrations, with knees in the mud on a Sunday morning, trying to type with frozen hands, and frantically covering up equipment as it started raining. The joy of UK winters. During this time I have seen pretty much every single component fail and luckily the copter made it all the way through in one piece.

The workflow slowly streamlined and tests were also done with a Maja fixed wing with Bristol Robotics Lab to confirm things worked on a different platform and at higher speeds. The highest detection seen so far was from 120m. Still a long way from the performance of the dedicated handheld hardware, but good enough. Note you actually don’t want too large a detection range as then you lose specificity.

At that point, plans also started to form for doing field tests on location in Borneo. As much as you can test and anticipate problems, UK heathland is not exactly representative of the Bornean jungle. Many spares and lots of logistical planning later, I arrived at the IAR site in West Kalimantan. Albeit without my two largest batteries as they had, incorrectly in my view, been confiscated at Schiphol airport.

Tests: Borneo

The first couple of days in Kalimantan were spent liaising with the local team and doing verification tests with the same implant I had used in the UK. This turned out to be more cumbersome than expected. The signal seemed weaker and the heat meant my MacBook was cooking itself, the odroid was almost cooking itself, and the voltage regulator was the first thing to completely die. Luckily I had a spare. Glitches were ironed out and the first implanted test was done by briefly flying over a young orangutan called Susi as she was walked to the pre-release site. Strong detections were recorded at 60m and showed that the act of implanting did indeed strengthen the signal. The keepers did say that Susi was wary of the drone hovering above. Another point to take into account.

Then followed flights over the pre-release site at different heights and speeds to see if we could reliably locate Susi as she moved about in the forest. This worked, though the forest canopy was quite low (~25-30m) and was relatively young, but dense, secondary forest. Highest detections were recorded at 55m at 10m/s while Susi was playing on the ground. So not quite as high as I’d like yet, but already a useful range and it proved the concept. The higher the animal is in the canopy, the easier of course.

Animal localisation from the detections

During the tests we also had some fun trying to get IAR’s DJI Inspire to film the drone as it was doing its thing. Turned out harder than we thought but thanks to IAR pilot Heru, we managed to get some shots.

The plan had also been to test the tracking payload on a fixed wing for increased coverage. For this Frank Sedlar (who I knew from a past World Bank Citisense Conference) was kind enough to come down from Jakarta with his Skywalker. Together we came to be known as “Drine” and “Drone” by the local team as that was much easier to remember and pronounce than “Dirk” and “Frank”. Unfortunately though, the aircraft flew away in a series of erratic loops during the very first test flight. Luckily we were able to retrieve it in almost perfect condition. A failed / overheated ESC or locked control surfaces are suspected to be the main culprit.

Given that the Inspire is such a wonderfully engineered system, and that they already knew how to fly, we also mounted the payload on it to see how that would work. Turned out it worked quite well with good detections, though I noticed a clear 10dB increase in the baseline noise level.

The final milestone was to go out to one of IAR’s two release sites and test the system there over a wider area and with a higher canopy. A long and muddy drive later brought us to the edge of the national park, followed by a two hour hike through the swampy forest to the camp: a small clearing by a river with a wooden cabin that is used as base of operations for tracking and monitoring the released animals. It was obvious throughout the hike that takeoff and, more importantly, landing space is at an absolute premium, even for a copter. Let alone some flat, dry space to set up and launch from. Hence it was decided to take off and land from camp and try to confirm the location of Prima, the closest animal at around 2 km.

Getting reliable height data proved a challenge due to the poor GPS signal under the canopy. So the first flight plan that was put together was conservative and relatively short: dash out, do a small search, dash back. Due to a bug in Dronekit, and the fact that the long range telemetry modules I had specially bought for this did not work, we would have to fly totally blind. Even the Taranis Mavlink Telemetry module I had bought as a backup from Airborne Projects did not work due to buggy firmware (fixed now). I had also planned to integrate the Lightware SF11/C range finder to enable accurate altitude hold but that failed to arrive on time due to lost shipments and UPS bureaucracy. The gods weren’t with me on this one.

It was a tense first mission as we waited for the copter to come back which, to our relief, it did and I managed to land it safely.

No detections from Prima were recorded (it turned out later she had moved quite a bit from where we were flying). After reviewing the recorded video from the onboard Mobius camera, we decided we had some extra margin. The altitude was dropped and the search area extended.

Take-off and search went fine and after a while the Tx signal reconnected, which meant the aircraft was doing the last 1000m home. Then suddenly everything went dark. We waited some more, but it soon became clear that we had lost the drone. A post mortem pointed at a hill around 1km away, whose canopy may have been just high enough to snag the drone on its way back. Unfortunate, but it had been a calculated risk. We tried to locate the drone, hoping for a Tx signal or low battery alarm, but failed. I’m sure it will pop up someday. Most likely an orangutan will find it first and be spotted sitting in a tree chewing the propeller.

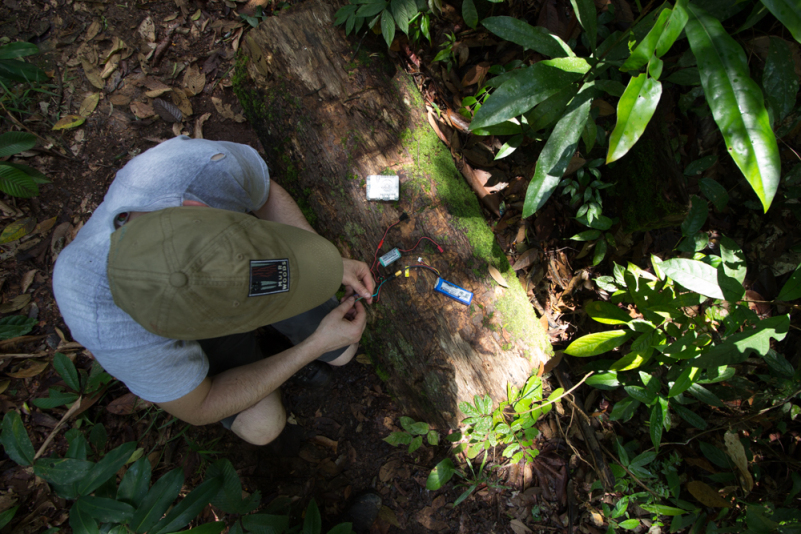

Not wanting to completely give up the search for Prima the plan was to mount the spare payload on the Inspire and use it to search. The range would be rather limited but worth a shot. Unfortunately though, the spare voltage regulator was lost with the copter and the original one had burned up on the first day in Borneo. Hence I then spent some time fiddling with jumper wires, Sugru, duct tape, and other bits of electronics in true MacGyver style. I was thwarted at every turn by the pickiness of the odroid power requirements. I could not get the voltage close enough to the 5V it needed with the components I had.

Losing a drone like that is of course not fun but overall I’m still happy with the way things went. Lots was learned.

Lessons

Looking back at the past year this has been a really interesting and rewarding project. Though I have to say it has cost me quite a few of my, already scarce, hair follicles. Performance is not quite where I want it yet but the core concept has definitely been proven. The plan now is to take a step back, review the different decisions, and modify / improve the system to increase the efficiency, detection range, and usability. In particular I would like to avoid the need for a separate GCS. This will also involve a review of the configuration and it’s unlikely we will stick with just a multirotor. On the software side, the geek in me would love to open my book on Bayesian inference and put together a lovely, elegant, and interpretable PGM. However, given the KISS philosophy that’s probably not a good idea, and I will instead explore some custom electronics.

Lots of things have been learned on the way, the three key ones for me:

- Getting LiPos across country borders by air is a lottery.

- If it can break, it will. If you did not test that last change, it most likely will not work (in the best case) or break something else (more likely).

- Checklists! They are boring and tedious but go without and it will bite you.

Acknowledgements

While I have poured a lot of time and effort into this project it would not have happened without the support and help from many people. First of all a massive thank you to IAR for access to the problem and financial support. IAR does great work and their ground team in Borneo is fantastic. An honour to work alongside Karmele, Heru and the others. You can help support what they do here. Thanks to Frank for coming down from Jakarta and thanks also to my family for putting up with me disappearing on weekends or working late on evenings to fiddle with drones and wave antennas about. Thank you also to Skycap for donating the copter (sorry I lost it!) and supporting the project through its inception.

Thanks also go out to Tom Richardson, Sabine Hauert, & Ken Stevens from Bristol Robotics Lab for advice and access to the Maja and to Badland & Marc Gunderson for the help on antennas. Further thanks to Joe Greenwood, Pere Molines, Paul Tapper, and Serge, Chuck & Richard for the discussions and calls. I hope I did not forget anybody but do let me know if I did.

In Closing

If anything you read here sparks your interest or raises some questions please get in touch.

In any case I hope at the very least you take a step back and reflect on how you interact with the natural world. For if current trends continue for much longer, future generations will have to make do with books, YouTube videos, and zoos rather than the real thing.

Or as Leo Biddle from Project Orangutan put it:

Conservation is about creating hope where there is none.

So how about you help create some hope?

PS: as an aside, for the data scientists out there, there are also some interesting datasets to work on if interested.
