Robohub.org

Firmer footing for robots with smart walking sticks

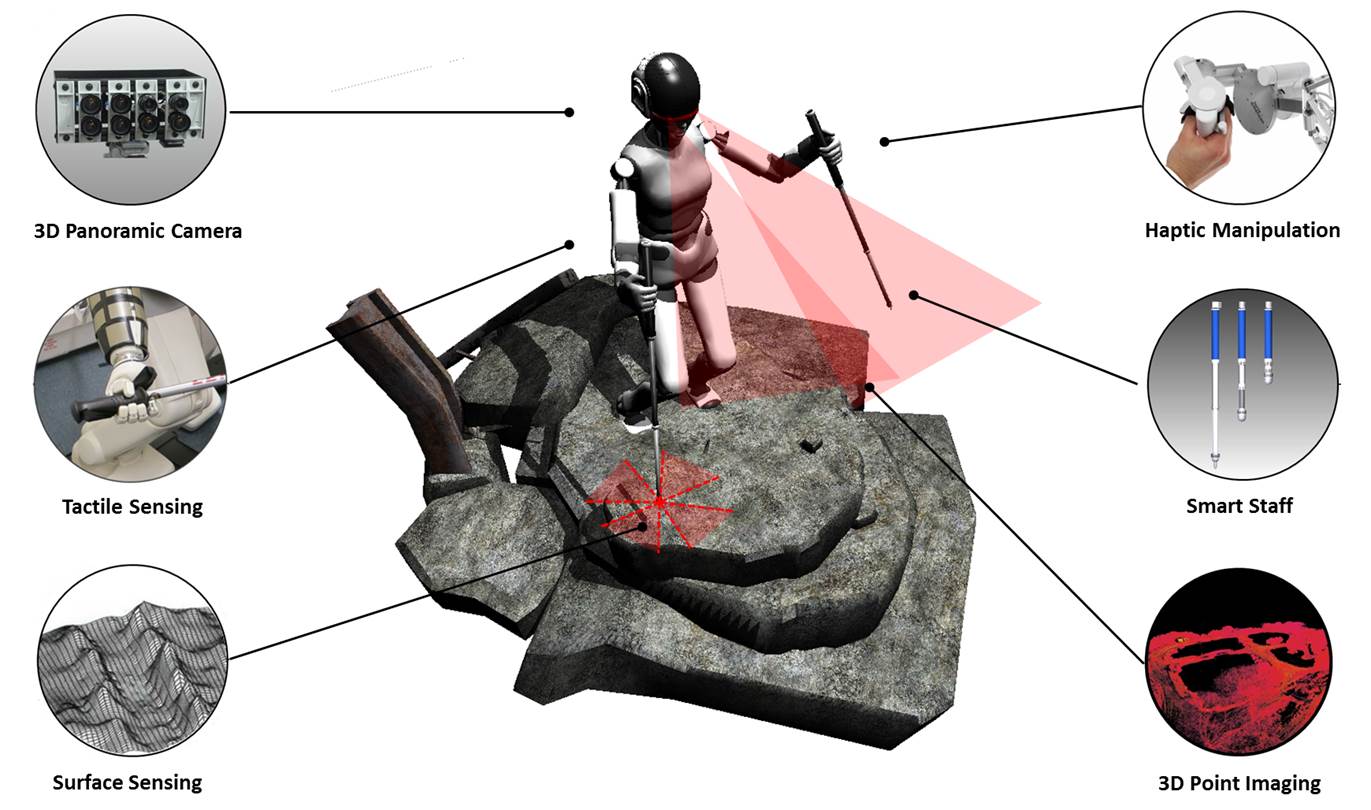

SupraPeds aim to increase locomotion stability by adding a pair of actuated smart staffs, equipped with vision and force sensing, that transform biped humanoids into multi-legged robots. Credit: Oussama Khatib, Stanford University; Shuyun Chung, Stanford University.

Anyone who has ever watched a humanoid robot move around in the real world (an “unstructured environment,” in research parlance) knows how hard it is for a machine to plan complex movements, balance on uneven surfaces or move between levels.

Quadruped robots, like Boston Dynamics’ BigDog, seem more adept at these tasks, but they lack hands with which to open doors or pull people from rubble.

Humans sometimes have trouble balancing too, for example when climbing a mountain or walking on ice. In many cases, people turn to a trusted and time-tested tool: the walking staff.

“Efforts to make robots emulate humans have ignored an important fact: whenever humans approach their limits, they augment their capabilities with a diverse variety of assistive tools,” said Shuyun Chung, a postdoc working with Oussama Khatib in the Stanford Artificial Intelligence Laboratory.

“Perhaps the most useful tool is the walking staff, which improves support, enables load redistribution and can also be used as a sensor to probe the stability of planned footsteps.”

Researchers at Stanford University have been developing a robotic platform they call SupraPed that uses “smart staffs,” inspired by walking sticks, to balance better and traverse difficult terrain.

In disaster-recovery situations, SupraPed can use its staffs to explore the surrounding terrain, expand the range of movements it can make and even relay a sense of touch to a human operator at a remote site, who can use that data to plan the robot’s movements.

Their work is supported by a grant from the National Science Foundation through the National Robotics Initiative, an effort to develop the next generation of robots that work beside or cooperatively with people.

A diagram showing some of the technologies incorporated into SupraPeds’ actuated smart staffs. Credit: Oussama Khatib, Stanford University; Shuyun Chung, Stanford University.

The smart staff is no simple stick. It’s equipped with 3-D vision capabilities and tactile sensors that can assess the topography of the surface, the friction of the material, its ability to sustain weight and other information relevant to planning the robot’s next move.

Just as we might poke a rock to see how stable it is before we jump on it to cross a creek, SupraPed can use its smart staffs to test the ground before it takes a step.
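The article doesn't say how SupraPed turns probe data into a go/no-go decision. As a minimal sketch, assuming each staff probe is summarized as a surface slope, a friction coefficient, the load the surface held and how far it sank, a foothold check might look like the following. The field names, thresholds and the `foothold_problems` helper are all hypothetical; the only established physics in it is that a contact on an incline of angle θ resists sliding only if the friction coefficient μ ≥ tan θ.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ProbeReading:
    """Hypothetical summary of one staff probe of a candidate foothold."""
    slope_deg: float       # surface inclination estimated from 3-D vision
    friction_coeff: float  # estimated Coulomb friction coefficient (mu)
    max_load_n: float      # largest load the surface held during the probe, newtons
    deflection_mm: float   # how far the surface sank under that load, millimetres


@dataclass(frozen=True)
class Limits:
    """Illustrative acceptance thresholds, not values from the SupraPed work."""
    max_slope_deg: float = 20.0
    min_friction: float = 0.5        # margin for sideways push-off forces
    max_deflection_mm: float = 10.0
    load_safety_factor: float = 1.5


def foothold_problems(reading: ProbeReading, expected_load_n: float,
                      limits: Limits = Limits()) -> List[str]:
    """Return the reasons to reject a foothold; an empty list means it looks safe."""
    problems = []
    if reading.slope_deg > limits.max_slope_deg:
        problems.append("too steep")
    # A contact on an incline of angle theta stays put only if mu >= tan(theta).
    required_mu = max(limits.min_friction, math.tan(math.radians(reading.slope_deg)))
    if reading.friction_coeff < required_mu:
        problems.append("too slippery")
    if reading.max_load_n < expected_load_n * limits.load_safety_factor:
        problems.append("may not bear the load")
    if reading.deflection_mm > limits.max_deflection_mm:
        problems.append("too soft")
    return problems


rock = ProbeReading(slope_deg=10.0, friction_coeff=0.7, max_load_n=900.0, deflection_mm=2.0)
ice = ProbeReading(slope_deg=5.0, friction_coeff=0.1, max_load_n=900.0, deflection_mm=0.5)
print(foothold_problems(rock, expected_load_n=500.0))  # []
print(foothold_problems(ice, expected_load_n=500.0))   # ['too slippery']
```

Returning a list of reasons rather than a bare boolean lets a remote operator see why a step was rejected, which fits the article's picture of a human using the staff's sensing to plan the robot's moves.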

But endowing SupraPed or other future robots with increased mobility isn’t simply a question of handing a robot a computerized stick. The Stanford team needed to create a suite of new algorithms and control mechanisms to allow the robot to incorporate and control the staff.

One challenge was keeping the robot balanced as its center of mass shifts during a movement. This is difficult on rough, irregular terrain, and harder still for a system that balances and moves using four independent contacts with the ground (two feet and two staffs).
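A common textbook way to state this balance problem is static stability: a slowly moving robot stays upright while the ground projection of its center of mass lies inside the support polygon, the convex hull of its contact points. The sketch below is that generic criterion, not the Stanford team's method. It treats each foot and staff tip as a point contact, and the contact coordinates are made up for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) on the ground plane, metres


def cross(o: Point, a: Point, b: Point) -> float:
    """z-component of (a - o) x (b - o); positive when o -> a -> b turns left."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points: List[Point]) -> List[Point]:
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower: List[Point] = []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper: List[Point] = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def stability_margin(contacts: List[Point], com_xy: Point) -> float:
    """Signed margin of the CoM ground projection relative to the support polygon.

    Positive: inside, and equal to the distance to the nearest edge.
    Negative: outside, so a slowly moving robot would tip over.
    """
    hull = convex_hull(contacts)
    if len(hull) < 3:
        raise ValueError("need at least three non-collinear point contacts")
    margin = math.inf
    for i in range(len(hull)):
        a, b = hull[i], hull[(i + 1) % len(hull)]
        # Signed distance from the CoM to the line through edge a -> b
        # (positive on the inner, left-hand side of a counter-clockwise edge).
        edge_len = math.hypot(b[0] - a[0], b[1] - a[1])
        margin = min(margin, cross(a, b, com_xy) / edge_len)
    return margin


# Hypothetical layout: feet side by side, staffs planted ahead and outward.
feet = [(0.0, 0.1), (0.0, -0.1)]
staffs = [(0.5, 0.3), (0.5, -0.3)]
print(round(stability_margin(feet + staffs, (0.2, 0.0)), 3))   # 0.167: balanced
print(round(stability_margin(feet + staffs, (-0.1, 0.0)), 3))  # -0.1: would tip backward
```

With the feet alone, modeled as two points, there is no polygon at all and the function raises an error, one way to see why the extra staff contacts help. Real feet have area, so this point-contact model is deliberately pessimistic.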

The Stanford research team developed new mathematical formulations of the relationship between internal forces and movement control, allowing them to overcome limitations of existing approaches and improve SupraPed’s stability.
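The article doesn't give the team's formulation. A much simpler quasi-static illustration of the underlying idea: with four contacts, force and moment balance fix only two combinations of the contact forces, so the robot is free to shift load between feet and staffs without disturbing its balance. In multi-contact control, this free component (the null space of the map from contact forces to net force and moment) is loosely what is meant by internal forces. The sketch below assumes vertical forces only, with contacts placed along the walking direction; the layout, weight and function names are hypothetical.

```python
from typing import List, Sequence


def min_norm_vertical_loads(xs: Sequence[float], weight_n: float,
                            com_x: float) -> List[float]:
    """Smallest-norm vertical contact forces that keep the robot in static balance.

    Balance along the walking direction requires
        sum(f_i) = W            (vertical force)
        sum(f_i * x_i) = W * c  (moment about the origin)
    i.e. A f = b with A = [[1, ..., 1], [x_1, ..., x_n]] and b = [W, W * c].
    The minimum-norm solution is f = A^T (A A^T)^-1 b, written out below for 2x2.
    """
    n = len(xs)
    sx = sum(xs)
    sxx = sum(x * x for x in xs)
    det = n * sxx - sx * sx  # determinant of A A^T
    if abs(det) < 1e-12:
        raise ValueError("contacts need at least two distinct x positions")
    lam0 = (sxx * weight_n - sx * weight_n * com_x) / det
    lam1 = (n * weight_n * com_x - sx * weight_n) / det
    return [lam0 + lam1 * x for x in xs]


def is_internal(xs: Sequence[float], z: Sequence[float], tol: float = 1e-9) -> bool:
    """True if adding z to the contact forces leaves net force and moment unchanged."""
    net_force = sum(z)
    net_moment = sum(zi * x for zi, x in zip(z, xs))
    return abs(net_force) < tol and abs(net_moment) < tol


# Hypothetical layout along the walking direction (metres):
# rear staff, rear foot, front foot, front staff. Weight 600 N, CoM 5 cm ahead.
xs = [-0.3, -0.1, 0.1, 0.3]
forces = min_norm_vertical_loads(xs, weight_n=600.0, com_x=0.05)
print([round(f, 1) for f in forces])    # [105.0, 135.0, 165.0, 195.0]

# Move 100 N off the feet and onto the staffs without upsetting balance.
shift = [50.0, -50.0, -50.0, 50.0]
print(is_internal(xs, shift))           # True
shifted = [f + z for f, z in zip(forces, shift)]
print([round(f, 1) for f in shifted])   # [155.0, 85.0, 115.0, 245.0]
print(all(f >= 0.0 for f in shifted))   # True: contacts can only push, never pull
```

The minimum-norm choice is only one convenient pick from that free space; a real controller would also enforce friction limits and non-negative contact forces as constraints rather than checking them afterwards.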

Before developing a physical prototype of their system, the researchers first ran SupraPed through a series of simulated tests using the Simulation and Active Interface framework developed in their lab. The simulations placed SupraPed in a series of challenging terrains, such as outcroppings of rubble, and tested how the robotic platform reacted when it encountered obstacles.

In each simulation, the robot plants its smart staffs, testing the ground’s firmness just as an expert hiker might, and then swings a foot forward, moving from one balanced position to the next.

Using the algorithms developed by the Stanford team, it almost never falls.
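Moving “from one balanced position to the next” can be illustrated with simple static reasoning: before lifting any foot or staff, check that the center of mass still projects inside the triangle formed by the three contacts that stay on the ground. The sketch below is a hypothetical illustration under a point-contact, quasi-static assumption, not the planner used in the simulations; the contact names and coordinates are invented.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y) on the ground plane, metres


def inside_triangle(p: Point, a: Point, b: Point, c: Point) -> bool:
    """True if p lies strictly inside triangle abc (either winding order)."""
    def side(o: Point, u: Point, v: Point) -> float:
        # z-component of (u - o) x (v - o): which side of line o -> u the point v is on
        return (u[0] - o[0]) * (v[1] - o[1]) - (u[1] - o[1]) * (v[0] - o[0])
    d1, d2, d3 = side(a, b, p), side(b, c, p), side(c, a, p)
    return (d1 > 0 and d2 > 0 and d3 > 0) or (d1 < 0 and d2 < 0 and d3 < 0)


def liftable_contacts(contacts: Dict[str, Point], com_xy: Point) -> List[str]:
    """Names of contacts that can be lifted while the other three still enclose
    the ground projection of the center of mass (static, point-contact model)."""
    if len(contacts) != 4:
        raise ValueError("this sketch assumes exactly four contacts")
    names = list(contacts)
    safe = []
    for name in names:
        a, b, c = (contacts[other] for other in names if other != name)
        if inside_triangle(com_xy, a, b, c):
            safe.append(name)
    return safe


contacts = {
    "left_foot": (0.0, 0.1),
    "right_foot": (0.0, -0.1),
    "left_staff": (0.5, 0.3),
    "right_staff": (0.5, -0.3),
}
# CoM shifted forward between the planted staffs: either foot may swing.
print(liftable_contacts(contacts, (0.25, 0.0)))   # ['left_foot', 'right_foot']
# CoM shifted toward the left side: only right-side contacts may lift.
print(liftable_contacts(contacts, (0.15, 0.05)))  # ['right_foot', 'right_staff']
```

With the center of mass moved forward between the planted staffs, either foot can swing, which matches the plant-then-step pattern described above; a contact whose removal would leave the center of mass outside the remaining triangle must stay down until the body shifts.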

The team presented its work at the 2014 IEEE International Conference on Robotics and Automation (ICRA). The first smart-staff prototype has been designed and fabricated and is being tested in the lab. The researchers hope that the telescopic staff can one day be easily integrated with any human-size humanoid robot.

“Although research on rescue robots working in hazardous environments has made tremendous progress over the past decade,” Chung said, “we believe that SupraPeds will bring a whole new level of mobility and manipulation for humanoid robots.”

The SupraPeds also herald a future where robots, like humans, use tools to augment their abilities.

Investigators

Oussama Khatib

Shuyun Chung

Related Institutions/Organizations

Stanford University

Related Programs

National Robotics Initiative

tags: Actuation, c-Research-Innovation, humanoid, ICRA 2014, Oussama Khatib, Shuyun Chung, Stanford University, SupraPed, US