Robohub.org

From precision farming to autonomous farming: How commodity technologies enable revolutionary impact

The popular conception of farming as low-tech is woefully out of date. Modern farmers are high-tech operators: They use GIS software to plan their fields, GPS to guide field operations, and auto-steer systems to make tractors follow that GPS guidance without human hands. Given this technology foundation, the transition to full autonomy is already in progress, leveraging commodity parts and advanced software to get there more quickly than is possible in many other domains.

This article outlines some of the key technologies that enable autonomous farming, using the Kinze Autonomous Grain Harvesting System as a case study.

Jaybridge Robotics automates vehicles for driverless operation in industrial domains including agriculture and mining. We do this using commercial, off-the-shelf (COTS) components and our software. We believe software is the greatest challenge in making vehicular robotics cost-effective and reliable. For the last few years, we have been working with Kinze Manufacturing to automate their line of agricultural equipment (see video above). In this article, I’m going to explain why farmers are technologically well-positioned to take advantage of automated farm vehicles. I’ll also provide a case study of the technology behind the Kinze Autonomous Grain Harvesting System.

Satellite-guided farming

Although urbanites may still think of farming as a low-tech, backward profession, a great deal of professional farming has gone high-tech in the US and other developed nations. The last decade, in particular, has seen the rapid embrace of high-tech under the general label of Precision Agriculture.

Farmers collect and act on copious amounts of data. Global Positioning System (GPS) data from satellites (see Figure 1) lies at the heart of Precision Agriculture. Farmers use farm management software (e.g., FarmWorks) and GPS receivers to map their fields, and to track the yield (amount of crop) that they get from every square meter. They can augment this yield data with a variety of other information. For instance, they may also perform a detailed soil sampling survey to determine the soil’s nutrient mix in different areas of the field. Plant health can be assessed in part by plant coloration, so some farmers will purchase satellite or aircraft flyover imagery enabling them to determine the health of their plants at various times during the year. Of course, all of this additional information goes into the computer mapping software.

Based on their collected and mapped (georeferenced) data, farmers can generate prescription maps which specify how much fertilizer to apply in each region of the field, how densely to plant seed in that region, and so on, in order to optimize yield and minimize unnecessary chemical applications.
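As a rough illustration of what a prescription map can look like in software, the sketch below stores seeding rates on a regular grid and looks up the rate for a given position. The class name, the grid layout, the local east/north coordinates in meters, and the rate values are all assumptions made for this example; real farm management software uses its own formats.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrescriptionMap:
    """Hypothetical grid of seeding rates (seeds per acre) over a field."""
    origin_east_m: float   # east coordinate of the grid's south-west corner
    origin_north_m: float  # north coordinate of the grid's south-west corner
    cell_size_m: float     # edge length of one square grid cell
    rates: tuple           # rows of rates; row 0 is the southernmost row

    def rate_at(self, east_m, north_m, default=0.0):
        """Return the prescribed rate at a position, or `default` off the map."""
        col = int((east_m - self.origin_east_m) // self.cell_size_m)
        row = int((north_m - self.origin_north_m) // self.cell_size_m)
        if 0 <= row < len(self.rates) and 0 <= col < len(self.rates[row]):
            return self.rates[row][col]
        return default


# Illustrative values only: a 2 x 2 grid of 10 m cells.
field = PrescriptionMap(0.0, 0.0, 10.0, ((30000, 32000), (34000, 36000)))
print(field.rate_at(15.0, 5.0))
```

Returning a default of zero outside the grid means that nothing is applied where nothing was prescribed, which matches the goal of minimizing unnecessary applications.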

To take optimal advantage of prescription maps, many modern farming implements are computer-controlled. Planters, as the name suggests, put seed in the ground. A planter such as the one shown in Figure 2 may feature independently controllable row units, enabling each unit to be turned on and off, or have its planting rate adjusted, independently.

Given this sort of planter, the prescription map is loaded into the computer on the tractor, and the tractor driver simply drives. The driver steers the tractor, the tractor pulls the planter, and the onboard computer controls the seeding rate based on where the planter is in the field. The computer also tracks where seed has already been applied, so if the driver has to drive through already-seeded territory, it doesn’t get double-seeded.
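The double-seeding check can be pictured as a coverage map. The sketch below is one minimal way to do it, assuming the field is divided into small square cells and that a row unit plants only in cells it has not visited before. The class, its method names, and the cell size are hypothetical and are not taken from any planter's actual controller.

```python
class CoverageTracker:
    """Hypothetical record of which grid cells have already been seeded."""

    def __init__(self, cell_size_m=0.5):
        self.cell_size_m = cell_size_m
        self._seeded = set()  # set of (column, row) cell indices

    def _cell(self, east_m, north_m):
        # Map a position in meters to the index of the cell that contains it.
        return (int(east_m // self.cell_size_m),
                int(north_m // self.cell_size_m))

    def seed_if_new(self, east_m, north_m):
        """Return True (and mark the cell) if this cell has not been seeded yet."""
        cell = self._cell(east_m, north_m)
        if cell in self._seeded:
            return False  # already planted here: keep the row unit off
        self._seeded.add(cell)
        return True


tracker = CoverageTracker(cell_size_m=1.0)
print(tracker.seed_if_new(0.2, 0.2))  # first pass over this cell
print(tracker.seed_if_new(0.8, 0.9))  # same cell, second pass
```

With independently controllable row units, a check like this would run once per unit, using that unit's own position.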

The same strategy is applied in other crop maintenance activities such as fertilizing and other chemical applications. The computer monitors the vehicle location and ensures chemicals are applied only where prescribed, in customized doses tailored to the specific area. This has cost benefits to the farmer, since fewer chemicals used means dollars saved, and it also has environmental benefits, since fewer chemicals used means fewer chemicals at risk of leaching into the surrounding ecosystem.

Cooperative autonomy

When putting seed in the ground, and later coming back to harvest it, it’s important that the harvester follows the same path as the planter did months earlier. And of course, the harvester and planter know where they are the same way that your smartphone knows where you are: GPS.

You may have noticed, however, that your cell phone can be off by quite a bit. The difference with farming vehicles is that while the GPS unit you have in your phone or your car knows where you are to within a few yards, a high-precision augmented GPS in a modern tractor knows where it is to within a couple of inches. This accuracy has profound consequences.

First, it allows a tractor driver to reproduce a route over and over. At planting, seed goes in the ground. When treating, fertilizer is applied directly to the seeded area — without being applied to the unseeded territory between the rows. Finally, at harvest, the driver reaps with high efficiency by being just as accurate coming along the rows in the harvester.

Historically, the precision-guided driver was assisted by a “lightbar” — a line of LEDs that indicate in real-time whether the vehicle is on track or whether a steering correction is needed. Nowadays, an advanced tractor just drives itself along the route.
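To make the lightbar idea concrete, here is a small sketch that computes how far a vehicle has drifted from a straight guidance line and renders that as a row of LEDs. The function names, the number of LEDs, the distance represented by each LED, and the convention that the lit LED shows the side the vehicle has drifted to are all assumptions for illustration; commercial lightbars differ in their conventions.

```python
import math


def cross_track_error(ax, ay, bx, by, px, py):
    """Signed distance (m) of point P from the line A->B.

    Positive means P lies to the left of the direction of travel A->B.
    """
    dx, dy = bx - ax, by - ay
    length = math.hypot(dx, dy)
    if length == 0:
        raise ValueError("A and B must be distinct points")
    return (dx * (py - ay) - dy * (px - ax)) / length


def lightbar(error_m, num_leds=11, meters_per_led=0.05):
    """Render the error as text: '#' is the lit LED, '.' an unlit one."""
    center = num_leds // 2
    offset = round(error_m / meters_per_led)
    # Drift to the left lights an LED left of center; clamp to the bar's ends.
    index = max(0, min(num_leds - 1, center - offset))
    return "".join("#" if i == index else "." for i in range(num_leds))


# Vehicle heading north along the line x = 0, currently 0.1 m west of it.
error = cross_track_error(0.0, 0.0, 0.0, 10.0, -0.1, 5.0)
print(lightbar(error))
```

An auto-steer system consumes the same kind of error value, but feeds it to a steering controller instead of displaying it to the driver.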

Auto-steer systems are available for a variety of tractor models, both as built-in and as after-market additions. Most current auto-steer systems can only drive the rows, requiring driver intervention at the end of each row, but advanced systems from some vendors can now handle certain simple turns. Even with auto-steer, a driver is still required to watch for obstacles and monitor the equipment, although the work is a lot less fatiguing when the driver can go “hands-off” for long rows. While the farmer is still setting the throttle and looking out for collisions, the tractor is inarguably steering itself autonomously.

Prepared for full automation

This says a lot about farmers as future users of more completely automated systems. On farms that have embraced precision agriculture:

• The farmers are tech-savvy computer users.

• They survey their fields with precision.

• Their tractors are already partially drive-by-wire, meaning that a computer can already control key functions such as steering.

• Their tractors are equipped with high-precision GPS systems.

Given this baseline, transitioning to full autonomy is relatively straightforward, using off-the-shelf parts and advanced software.

Case study: Kinze Autonomous Grain Harvesting System

Kinze Manufacturing makes grain carts and planters for row crops. For the last few years, Jaybridge Robotics has been working with Kinze to automate tractors pulling their grain carts to produce the Kinze Autonomous Grain Harvesting System, shown in the opening video and in Figure 3. In this case study, we’ll take a closer look at how the Kinze system builds on existing technologies and Jaybridge’s software.

A high-level overview of Jaybridge Robotics’ software is shown in Figure 4. Our software takes advantage of commodity components to perform key vehicle automation tasks including:

• User interface enabling the user to perform the workflow.

• Vehicle path planning.

• Vehicle control, including steering, brakes, throttle, etc.

• Navigation

• Obstacle detection

• Inter-vehicle communications

Let’s look at how these elements are realized in the Kinze Autonomous Grain Harvesting System.

The user interface, shown in Figure 5, runs on a touch-screen Android tablet. Working with Kinze, we identified four primary workflow elements. In Offload, a grain cart drives in tandem with a combine while the combine simultaneously harvests and dumps crop into the grain cart. In Follow, a grain cart follows along behind a combine, for instance when the combine transits a narrow area. In Park, a grain cart drives back to a designated parking area, where it meets up with a semi which will transport the grain onward. And in Idle, of course, the grain cart idles awaiting further instruction. Those key workflow elements are realized in the major buttons down the right-hand side of the screen, while additional capabilities such as manual crop editing and obstacle denotation are provided along the bottom.

Path planning, vehicle control, navigation, and obstacle detection all take place in real-time on the embedded computer onboard the tractor towing the grain cart.

The path plan adapts in real-time as the combine moves. Keep in mind that combine motion not only moves the grain cart’s destination (in Follow or Offload modes), but also clears crop, creating additional drivable area. The plan may have to feature complex maneuvers, as in Figure 6, where harvesting is taking place in a terraced field. The path planner relies on the navigation system identifying the grain cart’s position, orientation, and velocity. When tandem-driving with the combine for offload, it also relies on high-speed communications between the vehicles to exchange position information. The path planner must continuously consider vehicle position and the drivable area map, as well as the vehicle’s physical capabilities.
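One small piece of this problem, the moving destination, can be sketched in a few lines. The example below computes where a grain cart would aim to be relative to a combine's reported pose, which is why the target shifts every time the combine moves. The function name, the offsets, and the convention that heading is measured counter-clockwise from east are assumptions for illustration; this is not Jaybridge's planner, which must also account for the drivable area and the vehicle's physical limits.

```python
import math


def offload_target(combine_east_m, combine_north_m, combine_heading_rad,
                   lateral_offset_m, longitudinal_offset_m=0.0):
    """Return the (east, north) point the cart should aim for beside the combine.

    lateral_offset_m: positive places the cart to the combine's left.
    longitudinal_offset_m: positive places the cart ahead of the combine.
    Heading is measured counter-clockwise from east (an assumed convention).
    """
    forward = (math.cos(combine_heading_rad), math.sin(combine_heading_rad))
    left = (-math.sin(combine_heading_rad), math.cos(combine_heading_rad))
    east = (combine_east_m
            + longitudinal_offset_m * forward[0]
            + lateral_offset_m * left[0])
    north = (combine_north_m
             + longitudinal_offset_m * forward[1]
             + lateral_offset_m * left[1])
    return east, north


# Combine heading east; the cart should sit 6 m to its right and 2 m ahead.
print(offload_target(100.0, 50.0, 0.0, lateral_offset_m=-6.0,
                     longitudinal_offset_m=2.0))
```

Because the combine's pose arrives over the inter-vehicle link, the quality of that link directly limits how quickly a target like this can be refreshed.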

Vehicle control also takes place in real-time, ensuring that the vehicle follows the planned paths. Like path planning, control runs on the onboard embedded computer, synchronizing throttle, brakes, and steering to achieve the desired path.
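For readers who want a feel for path-following control, the sketch below shows a simple proportional steering law. It is a generic textbook-style approach and not a description of the controller used in the Kinze system; the gains, the steering limit, and the sign conventions (positive means left) are assumptions chosen for the example, and throttle and brake control are left out entirely.

```python
def steering_command(cross_track_m, heading_error_rad,
                     k_cross_track=0.5, k_heading=1.0, max_steer_rad=0.6):
    """Return a steering angle (rad, positive = steer left).

    cross_track_m: positive when the vehicle is to the left of the path.
    heading_error_rad: positive when the vehicle points to the left of
        the path direction.
    The command steers back toward the path and is clamped to the
    assumed mechanical limit of the steering system.
    """
    raw = -(k_cross_track * cross_track_m + k_heading * heading_error_rad)
    return max(-max_steer_rad, min(max_steer_rad, raw))


# Vehicle is 0.4 m left of the path and pointing slightly further left.
print(steering_command(0.4, 0.05))
```

Clamping the output is one simple way to respect the vehicle's physical capabilities, a constraint the planner has to consider as well.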

The navigation system fuses data from the factory-standard high-precision GPS system with other vehicle information to provide an extremely accurate estimate of vehicle state.
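The article does not say which fusion method is used, so the following is only a generic illustration of the idea: a one-dimensional Kalman filter that predicts position from vehicle speed and then corrects the prediction with a GPS reading. All names and noise values are assumptions for the example.

```python
def predict(position_m, variance, speed_mps, dt_s, process_noise):
    """Advance the estimate using vehicle speed; uncertainty grows."""
    return position_m + speed_mps * dt_s, variance + process_noise


def update(position_m, variance, gps_position_m, gps_variance):
    """Blend the prediction with a GPS reading; uncertainty shrinks."""
    gain = variance / (variance + gps_variance)
    new_position = position_m + gain * (gps_position_m - position_m)
    new_variance = (1.0 - gain) * variance
    return new_position, new_variance


# One cycle: dead-reckon for one second, then correct with a GPS fix.
position, variance = 0.0, 1.0
position, variance = predict(position, variance, speed_mps=2.0,
                             dt_s=1.0, process_noise=0.0)
position, variance = update(position, variance,
                            gps_position_m=3.0, gps_variance=1.0)
print(position, variance)
```

The gain weights each source by its uncertainty, so a high-precision GPS fix (small variance) pulls the estimate strongly toward the measurement, while the speed-based prediction carries the estimate between fixes.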

The obstacle detection system relies on a spinning laser range finder (LIDAR) and automotive RADAR more typically used for adaptive cruise control. Data from both sensors are fused for enhanced detection capability.
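As one simplified picture of what fusing two sensors can mean, the sketch below pairs LIDAR and RADAR detections that fall close together and labels each fused obstacle by which sensors saw it. The function, the gating distance, and the nearest-first matching rule are assumptions for illustration only and do not describe the actual detection pipeline.

```python
import math


def fuse_detections(lidar_points, radar_points, gate_m=1.0):
    """Merge (x, y) detections from two sensors.

    Returns a list of (x, y, source) tuples where source is 'both',
    'lidar', or 'radar'. Detections within gate_m of each other are
    treated as the same obstacle and averaged.
    """
    fused = []
    unmatched_radar = list(radar_points)
    for lx, ly in lidar_points:
        match = None
        for candidate in unmatched_radar:
            if math.hypot(lx - candidate[0], ly - candidate[1]) <= gate_m:
                match = candidate
                break
        if match is not None:
            unmatched_radar.remove(match)
            fused.append(((lx + match[0]) / 2, (ly + match[1]) / 2, "both"))
        else:
            fused.append((lx, ly, "lidar"))
    # Anything only the RADAR saw is still reported as an obstacle.
    fused.extend((rx, ry, "radar") for rx, ry in unmatched_radar)
    return fused


print(fuse_detections([(10.0, 0.0), (20.0, 5.0)],
                      [(10.5, 0.0), (40.0, -3.0)]))
```

Reporting obstacles seen by either sensor, instead of requiring agreement, favors caution: each sensor can cover cases where the other performs poorly.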

Inter-vehicle communications take place via two different channels. At longer ranges, grain carts and combines communicate via cell data, taking advantage of pervasive cell coverage extending ever deeper into the heart of farm country. At close range, and especially when tandem driving, a short-range high-bandwidth radio is used to exchange data to coordinate driving.
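The two-channel arrangement can be summarized as a simple selection rule, sketched below. The function name, the channel labels, and the 200 m radio range are hypothetical values chosen for the example, not specifications of the Kinze system.

```python
def choose_channel(distance_m, tandem_driving, radio_range_m=200.0):
    """Pick the link used to talk to the other vehicle.

    The short-range radio is preferred whenever the vehicles are driving
    in tandem or are within the assumed radio range; otherwise the
    cellular data link is used.
    """
    if tandem_driving or distance_m <= radio_range_m:
        return "short_range_radio"
    return "cellular"


print(choose_channel(distance_m=1500.0, tandem_driving=False))
print(choose_channel(distance_m=8.0, tandem_driving=True))
```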

It’s important to note that the hardware components are commercial-off-the-shelf (COTS) parts: from the embedded computer to the LIDAR to the cell modem, the technology exists today at very reasonable price points. Jaybridge’s software transforms them from a collection of parts to a fully automated grain harvesting system.

Reliability

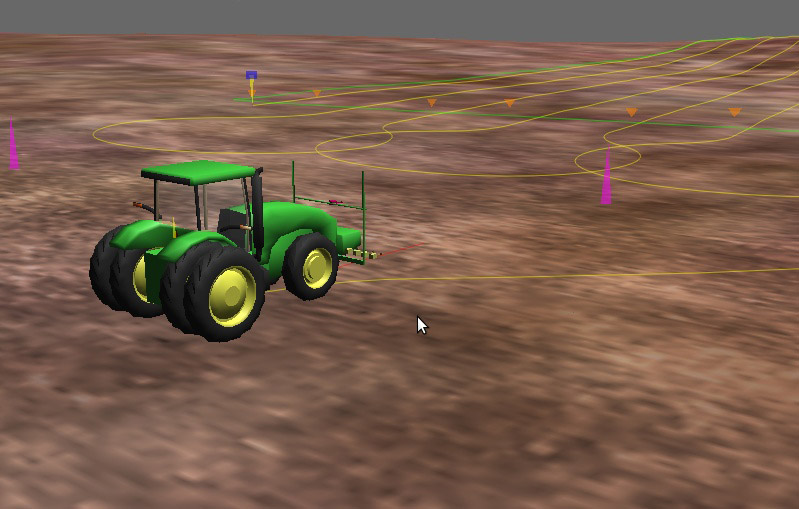

Industrial machinery has to be reliable. Farming machinery is no exception. So a key aspect of Jaybridge Robotics’ work is ensuring that automated vehicles, and the software controlling them, are reliable. Jaybridge relies on a number of techniques including formal code inspection, unit testing, regression testing, and large-scale simulation (see Figure 7) to validate software before it goes onto real hardware. Simulation, in particular, is a potent tool in our arsenal: it gives every Jaybridge engineer a complete system to work with, without having to find parking for a bunch of tractors.

Ongoing development

The Kinze Autonomous Grain Harvesting System was unveiled to the public in 2011. In 2012, multiple systems were put into the hands of real Illinois farmers for the fall corn and soybean harvest. In 2013, systems are once again working the harvest, with increased capabilities and ever-greater robustness. As we continue along the technology roadmap, we are looking forward to further enhancing the capabilities and robustness of the Kinze Autonomous Grain Harvesting System.
