Robohub.org

233

Geometric Methods in Computer Vision with Kostas Daniilidis

Transcript Below.

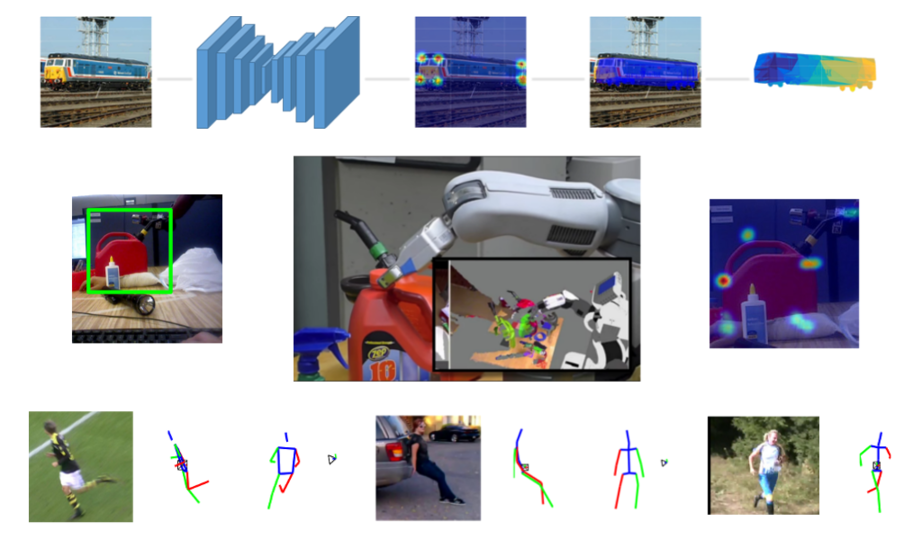

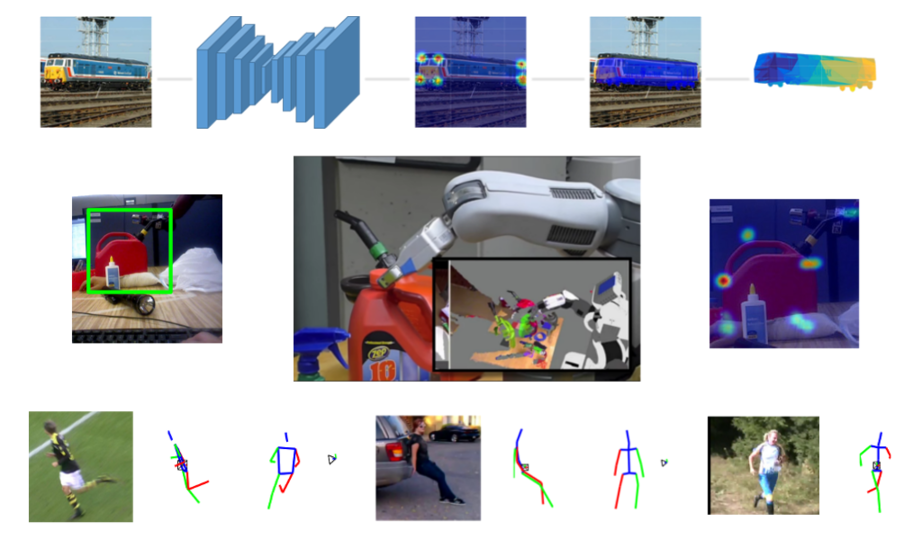

In this episode, Jack Rasiel speaks with Kostas Daniilidis, Professor of Computer and Information at the University of Pennsylvania, about new developments in computer vision and robotics. Daniilidis’ research team is pioneering new approaches to understanding the 3D structure of the world from simple and ubiquitous 2D images. They are also investigating how these techniques can be used to improve robots’ ability to understand and manipulate objects in their environment. Daniilidis puts this in the context of current trends in robot learning and perception, and speculates how it will help bring more robots from the lab to the “real world”. How does bleeding edge research become a viable product? Daniilidis speaks to this from personal experience, as an advisor to startups spun out from the GRASP Lab and Penn’s Pennovation incubator.

Kostas Daniilidis

Kostas Daniilidis is the Ruth Yalom Stone Professor of Computer and Information Science at the University of Pennsylvania where he has been faculty since 1998. He is an IEEE Fellow.

Kostas Daniilidis is the Ruth Yalom Stone Professor of Computer and Information Science at the University of Pennsylvania where he has been faculty since 1998. He is an IEEE Fellow.

He was the director of the interdisciplinary GRASP laboratory from 2008 to 2013, Associate Dean for Graduate Education from 2012-2016, and Director of Online Learning since 2016. He obtained his undergraduate degree in Electrical Engineering from the National Technical University of Athens, 1986, and his PhD in Computer Science from the University of Karlsruhe, 1992, under the supervision of Hans-Hellmut Nagel. He was Associate Editor of IEEE Transactions on Pattern Analysis and Machine Intelligence from 2003 to 2007. He co-chaired with Pollefeys IEEE 3DPVT 2006, and he was Program co-chair of ECCV 2010. His most cited works have been on visual odometry, omnidirectional vision, 3D pose estimation, 3D registration, hand-eye calibration, structure from motion, and image matching. Kostas’ main interest today is in deep learning of 3D representations, data association, event-based cameras, semantic localization and mapping, and vision based manipulation. He initiated and directed the production of the Penn Coursera Robotics Specialization and the Penn EdX MicroMasters in Robotics.

Links:

- Download mp3 (13.3 MB)

- Subscribe to Robots using iTunes

- Subscribe to Robots using RSS

- Research from Daniilidis Group

Transcript has been edited for clarity.

Jack:

Hi, Welcome to Robots Podcast. Could you please introduce yourself?

Kostas D.:

Yes. I’m Kostas Daniilidis. I am a professor of Computer Information Science at the University of Pennsylvania. I work at the GRASP laboratory, one of the top robotics laboratories in the world.

Jack:

Very nice. Your work spans a lot of different fields grounded in geometric methods in computer vision. Could you describe what you’re working on these days?

Kostas D.:

Right. My passion has been always to extract the 3D from two-dimensional data, like single images or a video. I have been working since 1990, when I published my first paper on the error analysis of 3D motion estimation, and since then I have addressed all problems. How to estimate your own 3D motion as a robot. How to estimate the three-dimensional pose of objects. If you want to grasp them, how you do a 3D model of environment, and how do you estimate the shape of objects and even humans.

Jack:

Some of your most recent research has been on, as you said, given a 2D image of an object, to find out where that object is in 3D relative to the camera.

Kostas D.:

Right. This is a very interesting problem. First, because we want to know where objects are to detect them in environment. But then, when we wanted to interact with these objects, we really need to know their six-dimensional pose, both rotation translation and their shape because we might not have seen this object. Detecting the objects, if you know, somehow, the class of objects, can be done these days with classification with deep learning.

Jack:

Mm-hmm (affirmative)

Kostas D.:

And estimating the 3D pose and shape is a much more recent area. And the way we address it is not by using a depth sensor to get the shape and the pose. Not by using multiple view. But by using, really, the information about the contiguity the object. So if we want grasp a specific drill we know, from many examples how, approximately, drills look like and still we can detect a new drill and we can interpolate the shape of the new drill, just based on previous examples. The challenge there is really to find some anchor points or the silhouette of the object so that you can really relate colors – the appearance of the objects with whatever you have in your databases’, 3D models that may be CAD models or data you have acquired with the connect sensor, for example.

This is really the main challenge, to match the three-dimensional information, something like the point cloud you have in your database and the color information which might be key points or edges and the two objects, separately, there are many approaches. You have point clouds and the CAD model database, somehow you can estimate the shape of the object from the pose. You have only 2D images and 2D objects and somehow you can find their scaling and rotation in the image. But connecting the two, you need both, you need learning and during real time testing is challenging.

Jack:

Right. So you mentioned grasping as a potential application of pose-estimation, you also apply this to human pose-estimation – both for objects and humans, what are the other potential applications?

Kostas D.:

For three-dimensional pose … As you said, humans is a very challenging application, also very useful. We can use it in science like in oceanology – to study motion models and the mechanics, not only of humans but also of animals. We have another project where we observe birds and their posture and their flying. We can really study very fast motions in sports. But for the 3D pose of objects, even like the pose of other cars – with respect to you – in a scene, is very important, as well as in many interactive applications. I can imagine that there is a combination of Photoshop and AutoCAD, where somebody gives you a picture and out of the picture directly, you can get a 3D model and rotate it and modify this picture. So this is what we call an image popup.

Jack:

And that was a recent paper of yours?

Kostas D.:

That was a recent paper at ICC 2015, yeah.

Jack:

Wow, that’s interesting. So how are these systems implemented, especially when you’re estimating, simultaneously, the variations in shape of the objects and where they are? It seems like you’d have to have one before you could do the other.

Kostas D.:

That’s true, like a chicken and egg problem. So, methods have changed dramatically in the last two, three years because of the introduction of deep learning architectures, which really revolutionize not only classification tasks, but many other tasks like segmentation, detection, localisation of objects and images and now, even 3D geometry.

At the beginning, I can tell you, thirty years ago, somebody would use something like – what we call a toy world. You would have specific instances of objects, you would identify edges and corners, you would try to find correspondences between these edges and corners to specific objects in the world and then find the 3D position using something that is called Perspective-n-Point problem, which was known for photogrammetry.

Now we are dealing with unknown instances of objects and that they’re much more complicated illuminations, so really identifying the anchor points like the landmarks, or key points, in the objects which correspond to salient 3D information, is something that we do by training with these points. In the case of humans these points are really, very easily identifiable. These are like the seventeen joints of the human body and you can really train and work with him, put in an image and the output are seventeen channels, each of them with one joint, and for the objects we define joints by hand – by manual annotation – and this is really, I think, a bottleneck in our research and we really have to change.

One of the ways to change it is to introduce some … People call it self supervision, or robot-assisted supervision, where somebody does not go by hand and annotates stuff but you could possibly try to grasp points and then grasp specific points and you are grasping the fine, salient points of an object. So there are other cases where people are training objects during just general grasping, this is the one paper by CMU – the paper with fort-six – if I remember correctly – manipulators from Google, where you build intermediate representations and some of these representations have salient 3D information that you could use to characterize 2D images and somehow then, match them to intermediate objects.

Jack:

When you say intermediate representations …

Kostas D.:

Intermediate representation is something where you’re given an image and you are getting like a few key points on the image detected, still in 2D. From these 2D images then we have to select … We have a selection mechanism, which of them correspond to 3D point we know on the CAD model and then we run what is called a sparse combination of CAD models. Sparse combination means that you are really … From a database of thousands of models, you need only the nearest neighbors as a linear combination and this gives you, at the end, simultaneously, both the pose and the 3D shape of the object.

Jack:

So what you’re saying is that you have a large database of different instances of the same class of object?

Kostas D.:

Right.

Jack:

A bunch of different drills that look similar and you find what are the most representative instances of how drill shapes can be different. Some might have much longer handles, some might …

Kostas D.:

Right, usually we even have much larger databases for cars. We have databases for all possible, like cars, like Sedans and SUVs, etc. Then we selected among them, very few … this is a mathematical optimization, where you try to minimize the number of coefficients in the linear combination. We have published a paper with this method, it’s called ‘sparseness meets deepness’ which is really the sparse optimization for shape meets the results of the deep architecture for the landmarks, for the key points.

Jack:

Right. So you’re not over-complicating the parameter space of the …

Kostas D.:

Exactly. We try to not over-complicate the parameter space of the shape and to do it more explicitly. Now, we have more recent papers which is running now only for humans and we’ll try also on objects, where we have trained the network to learn end to end, given an image to learn the three-dimensional pose and shape and we start this intermediate step of to-the-point and it seems that it’s giving better results than the two-step approach.

Jack:

So once you can directly detect the 3D pose of these key points, rather than going for 2D to 3D, what’s the motivation to do that and what are the advantages?

Kostas D.:

Detecting the 3D joints of a human is already what is the final outcome and usually when we get the 3D position of points of the object, then we already pretty much have constrained the shape of the three-dimensional objects and then computing the pose is just a straightforward transformation.

Jack:

Right. Interesting.

Kostas D.:

There are other approaches which have also directly the pose as an outcome, as a regression variable. Where you have a fully connected layer or multiple fully connected layers and you get at the end, just three parameters for translations and three parameters for rotation. Many approaches in general, which are end to end in 3D and they need to estimate the pose or even detect where there is an object. They struggle with the three-dimensional rotations and these networks have to be trained with many poses.

That’s not a big problem because we can produce these poses artificially in 3D if we want, by rotating the object virtually, but scientifically, we would like to understand how to build a network that will be like rotational variant.

Jack:

Interesting. You were discussing that one of the potential applications is to grasping.

Kostas D.:

Right. This is still something like a classic approach. Although we use deep learning, end to end, it’s till giving us at the end a 3D model of it’s pose and we have to decide where to grasp. There is software to that, like GraspIt.

Jack:

Which is a simulator. If you have a 3D model, you can just estimate roughly, where a grasp will be successful.

Kostas D.:

Yeah, or you can precompute such positions and then apply them.

Jack:

But you need to have that 3D model.

Kostas D.:

You need to have that 3D model. So this is not really the way to go in long term, in the future and there are already groups working more, first in directly recognizing where to grasp but also not having as a target function in the learning where to grasp, but just good grasp or bad grasp, meaning I have grasped and the object has fallen down or I have grasped and the grasp is very stable like the object is not moving in the hand.

So this can be just … You can have as an input image, you can have as any output, the grasp pose and you can train it basically on positive examples, like as a generative model or you can have an input image in motions and you can have as an output grasp positive, grasp negative, bad grasp, and you can train it and obviously, you need hours and hours in order to do that, of robot operation, like in the grasp paper. But I think this is a really self supervised approach. You don’t need any manual labor link or anything like building correspondences between salient CAD models the way we do it now for objects.

Jack:

Using the unsupervised learning approach?

Kostas D.:

Yes, the unsupervised learning approach – we just have robots around them for thousands of hours and we just let them grasp many objects and I think the key idea behind there is that again, you have some compression of the object inside the network and this compression of the object and, as a matter of fact, if you see these representations many time, they have salient 2D points, okay? And these salient 2D points are somehow correlated with your motion, your joint position and velocities and you find which of these correlations are good and which correlations are bad. I think that’s really the way to go.

The main point there is not for every new object class to have to run a robot for a week, or to run seven robots for a week. The key point there is whether you can keep the model and refine it, or whether you can transfer the model to some other situation, but definitely, if you are let’s say, in a factory where all the parts are automobile parts – you need to grasp them, and then you go to the supermarket where you have to grasp products that you don’t have to retrain it completely.

Jack:

Right. Although, at the same time, with the current state of artificial intelligence and deep learning, current approaches to unsupervised learning for grasping – you don’t get that higher level inference about how to transfer … You’re just learning it based on the geometry, the object, right. So this is in some ways, your approach.

Kostas D.:

Right. Exactly. Even with our approach, where we split the problem and somehow we understand it better, I think in detecting the 2D points would still have separate training like for products in the supermarket and separate training for parts in an automobile factory. I think the inference will be exactly the same, just some different CAD models in each case. The grasping would be much trickier in our case with having to extract, every time, a separate 2D model and really, depend on the valuation. I think it would be trivial for products where everything is in packages and will do much more complicating on like lifting a tool or, let’s say, taking a whole body part of car and trying to move it somewhere. These are very challenging situations, but this is the general thread of artificial intelligence of robotics. I think is first, how to train things by doing things and not by ladling.

This is one and second is not to train a separate network for every separate situation. I think right now, we have many networks for many situations. Like a separate network, for example, to detect where is the lane while driving, separate network to detect the pedestrians and I think these have to be somehow unified.

Jack:

And so you mentioned that you would like in the future for optic post-detection to be more robust for different classes and not have to retrain for different classes, is that a research direction that you intend to pursue?

Kostas D.:

I think for object detection, for the sake of grasping, I think definitely we should be blind … It will be more blind to the semantics and really non-blind to the data set we are using for the actual robot that is grasping. It will really depend what we present to the robot. If we present small model cars and drills it will learn all of them together, without separating the way we do now – A separate model for the drills, a separate model for the cars – because we need manual characterization of the key points, which is separating the drills and separating the cars.

Jack:

Right. But that would be an entirely different … The systems wouldn’t share very much in common at that point, it seems like you’d have to …

Kostas D.:

If we just let the robot work for hours and try to learn how to lift toy cars and hold the drill then probably, would learn all the drills and toy cars and nothing else.

Jack:

Yeah.

Kostas D.:

But, definitely, the semantic they finish will be through what the robot has learned to grasp and their shape. If there are other things that look like toy cars, the robot will be able to grasp them as well.

Jack:

Right. So you mentioned a bit, the practical challenges of implementing these systems, what directions are you heading in right now to put these sorts of post-detection systems in the real world.

Kostas D.:

That’s a great … And this question is easy to answer in 2016. Ten years ago, there were very few systems that somebody would read about in the newspaper or the person in the street would know about. Right now, everybody knows about driverless cars, which use everything that we have talked about – 3D motion and detection of humans and basically, all parts of robot perception …

Jack:

Delivery drones, to the age of robotics now.

Kostas D.:

… Delivery drones and we do, here in the grasp laboratory, we try to also launch spinoff companies, which I think the time is mature that some of these things come to the market. The one spinoff that I have co-founded with my student Jonas Cleveland, is COSY Robotics and this is about mapping retail spaces and establishing a mapping of all the products in the retail space – what is called a planogram. This includes both robot mapping and is building the map of the store and very fine-grained recognition of product categories …

Jack:

Right.

Kostas D.:

… And even more challenging things which we are learning that are extremely important for bigger suppliers – bigger retail stores – like fresh food. What situation is the fresh food on the shelves and when should it be removed, when it should bring fresher food there and this would allow better optimization of the shelf space and at the end, also, of the whole inventory.

Jack:

What’s the process for launching a startup, for taking it from the lab to Pentovation and then beyond? Pentovation is the lab [crosstalk 00:27:21]

Kostas D.:

Pentovation is the new space in the south of the university of Pennsylvania pen campus, which hosts many companies which are commercializing ideas from the academic club.

Jack:

Yeah.

Kostas D.:

The process is usually that you think that some of these ideas can indeed be realized in the near future and you can have a very good technology around them and in legal terms, there is some licensing of the technology to the startup because the intellectual property belongs to the university – this is exclusively licensed to a startup – and then you have to build a very good team. I think the main way to do that is by having students starting the startup. Students can attract, much more easily, other students and so attracting talent is the number one challenge and I think number two – in terms of technology – is really keeping it simple. Not doing the technology with the motivation of writing a paper like we do in academia, but with the motivation of making it work in a specific environment and then, obviously, you have to also sell this. This is a completely different world, a completely different universe for us researchers and you need to have good people who will promote this idea, both for customers and also for fundraising.

Jack:

But of course, being at a research institution that is part of a larger university, you have access to all those people and you can build a good team.

Kostas D.:

Definitely Penn is a very good name, both in terms of engineering and in business, and there is a lot of talent that we can attract also from outside Philadelphia.

Jack:

Yeah and as robotics has become more commercially viable, it’s good that academia has found a place there and students still know that they can gain business acumen skills that can take them into the workforce, or they can pivot and go further into academia. The option’s still there for them.

Kostas D.:

Right. Both, indeed with the rise of AI and robotics these years, many PHD students and even faculty are moving to industry. I still recommend students to follow graduate studies. You can work in industry any time of you life you want these days, but doing a PHD while you are out of undergraduate school, with all the motivation and energy you have, it will give you the right way to address problems in a more foundational way and it will definitely make you think more abstractly, it will make you think more in longterm vision and you will definitely learn to work on bigger projects, rather than being in an industry directly out of your undergraduate school. If you see the startups that are built from students directly out of the undergraduate schools, they are startups with extremely smart ideas but not the highest technology.

Jack:

Right.

Kostas D.:

All the startups in AI and deep learning have some PHD positions.

Jack:

Of course. You hear about when Google acquires a new startup that what each PHD cost.

Kostas D.:

Exactly, yeah.

Jack:

Also, for a student who’s interested in a academia and research, what advice would you give them?

Kostas D.:

Right. What I really recommend entering PHD students is to first still take graduate courses – they still have to learn a lot – and they’re given courses which are either in math or in probability, so that they understand better, how things work and I really recommend them to work on more fundamental problems rather than build just a system. You need to build a system to experiment, but you have to think about it as a physicist, that the system itself is not your final goal. Your final goal is to build a system so that you study it and see if it works or not and when it doesn’t work. When my student comes and says “I’m so happy it works” I really always want to know when it doesn’t work and explain it.

Jack:

Right. All right, very interesting stuff. Thank you so much Kostas.

Kostas D.:

Thank you very much. It was a very exciting conversation.

tags: c-Research-Innovation, cx-Research-Innovation