Robohub.org

Parrot AR.Drone app harnesses crowd power to fast-track vision learning in robotic spacecraft

AstroDrone is both a simulation game app for the Parrot AR.Drone and a scientific crowd-sourcing experiment that aims to improve landing, obstacle avoidance and docking capabilities in autonomous space probes.

As researchers at the European Space Agency’s Advanced Concepts Team, we wanted to study how visual cues could be used by robotic spacecraft to help them navigate unknown, extraterrestrial environments. One of our main research goals was to explore how robots can share knowledge about their environments and behaviors to speed up this visual learning process.

© European Space Agency, Anneke Le Floc’h

KEY INFO

Project name

AstroDrone

Research team

- Paul K. Gerke (European Space Agency/Radboud University Nijmegen)

- Ida Sprinkhuizen-Kuyper (Radboud University Nijmegen)

- Guido de Croon (European Space Agency/Delft University of Technology)

Links

AstroDrone/ESA website

AstroDrone iPhone App

Status

Ongoing research project

Android App in development

Similar to the RoboEarth project, the central idea is that a group of robots sharing visual information such as raw camera images or abstracted mathematical image features would have a much broader visual experience to learn from than a single robot operating on its own.

We had already studied the use of motion-based cues in navigation, such as optic flow and the size changes of visually salient features like SURF, but we wanted to verify our findings in this area and at the same time investigate whether the appearance of visual features could be used to aid navigation as well. To this end, we needed a very large robotic data set of visually salient image features paired with corresponding robotic state estimates, and it was simply infeasible to gather all this data by ourselves.

That’s where the Parrot AR.Drone came into play. The AR stands for Augmented Reality, and the drone is indeed a toy quadrotor meant for playing games. We thought we could develop a game for the Parrot that would serve as a means for crowd-sourcing the data we needed. And because the Parrot was designed to be used in augmented reality games and can be controlled by an iOS device, data-gathering could be done in a gaming context, making it fun for people to participate in the experiment.

To this end, we designed a mission-based game where players simulate docking the Parrot with the International Space Station as quickly as possible while maintaining good control. Bonus points are given for correct orientation and low speed on the final approach.

At the end of the mission, players can log their high scores on a scoreboard, and at the same time contribute to the experiment by anonymously sharing abstract mathematical image features and velocity readings.

The Parrot AR.Drone is uniquely suitable for this purpose because:

- It is widely available to the public, with more than half a million units sold worldwide.

- It is a real robot with many useful sensors, including two cameras (at the front and on the bottom), sonar, accelerometers, gyroscopes, and (on the Parrot AR.Drone 2.0) a pressure sensor and magnetometer. Further, it already uses these sensors to achieve accurate onboard state estimation and (consequently) stable hovering.

- It can be controlled by smart devices such as the iPhone or Android devices, which can be used to send data over the internet.

- The code for communicating with the Parrot is open source.

Methodology

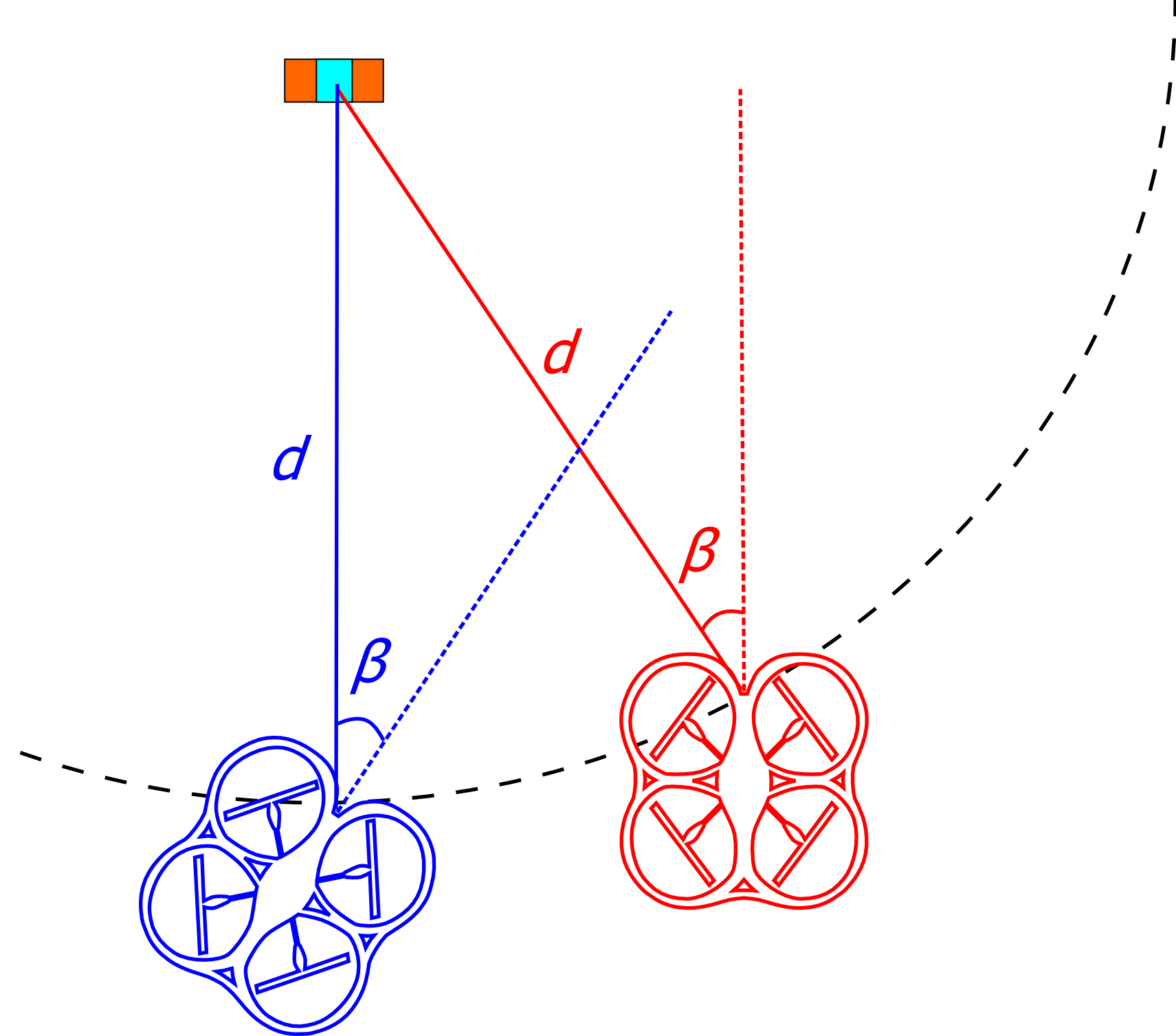

The question we faced experimentally was how to couple the real-world object to the virtual space in which the drone would be flying. We wanted a reference object of known size, since it would provide some form of ground-truth measurement. As it happens, the Parrot AR.Drone is delivered with a marker that is recognized in the images by the onboard firmware. The recognition gives an image position (x,y) and a distance to the marker. Note, however, that this information is not enough to determine both the position and the orientation of the drone with respect to the marker. Figure 1 shows two configurations that give exactly the same marker detection data. In the ‘red’ case, the marker is in the left part of the image because the drone is located to the right of the marker. In the ‘blue’ case, the marker is in the left part of the image because the drone has turned to the right. Note that the distances to the marker are the same.

Figure 1: Ambiguity of retrieved marker data. The angle β and distance d are the same for the red and the blue case, but the drone’s position and heading differ.
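The ambiguity can be reproduced numerically. The following is a minimal top-view sketch, not code from the app: it assumes the marker sits at the origin, treats the horizontal image position as a bearing angle β relative to the camera axis, and uses a hypothetical helper `marker_observation`. The numeric values are illustrative only.

```python
import math

def marker_observation(x, y, heading, marker=(0.0, 0.0)):
    """Return (bearing, distance) of the marker as seen from pose (x, y, heading).

    Hypothetical helper: heading is the camera axis angle in the world frame,
    and a positive bearing means the marker appears to the left of that axis.
    """
    dx, dy = marker[0] - x, marker[1] - y
    distance = math.hypot(dx, dy)
    bearing = math.atan2(dy, dx) - heading
    # Wrap the angle into (-pi, pi]
    bearing = math.atan2(math.sin(bearing), math.cos(bearing))
    return bearing, distance

d = 2.0                     # assumed distance to the marker, in metres
beta = math.radians(15.0)   # assumed bearing of the marker in the image

# 'Red' case: drone shifted to the right of the marker, looking straight ahead.
red = (d * math.sin(beta), -d * math.cos(beta), math.pi / 2)
# 'Blue' case: drone directly in front of the marker, turned to the right.
blue = (0.0, -d, math.pi / 2 - beta)

obs_red = marker_observation(*red)
obs_blue = marker_observation(*blue)
print(obs_red, obs_blue)
```

Both poses produce the same bearing and distance, so the marker reading alone cannot tell them apart. An independent heading estimate is what breaks the tie.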

In order to render the virtual space environment, we perform state estimation on the basis of the drone’s estimates of its height, speeds, angles, and its detection of the marker. The docking port of the ISS (the marker) is assumed to be at location (0,0,0). The state estimation uses an Extended Kalman Filter (EKF), and in light of the marker’s ambiguity discussed above, we decided to update only the position on the basis of the marker’s readings, and not the heading. The heading is estimated quite well on board the drone, and the Parrot AR.Drone 2.0 additionally uses a magnetometer for this.

Figure 2 shows which computational processes run on which device during the game. The marker detection and the estimation of the drone’s attitude, height, and speeds are performed by the firmware on board the drone. The app runs the drone’s control interface, sends data to and receives data from the drone, performs state estimation (X,Y,Z) with respect to the marker, and renders the 3D world.
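To illustrate the idea of correcting only the position from the marker while trusting the onboard heading, here is a simplified sketch. It is not the app’s EKF: it assumes a planar state (x, y) with independent axes, so each axis reduces to a scalar Kalman filter, and the class name, noise values, and body-frame convention (forward and left velocities) are all hypothetical.

```python
import math

class PositionFilter:
    """Per-axis Kalman filter for planar position; heading is an input, not a state."""

    def __init__(self, x, y, var=1.0, process_var=0.01, marker_var=0.05):
        self.pos = [x, y]
        self.var = [var, var]           # position variance per axis
        self.process_var = process_var  # assumed growth of uncertainty per second
        self.marker_var = marker_var    # assumed marker measurement variance

    def predict(self, v_forward, v_left, heading, dt):
        """Integrate the drone's body-frame speeds using the onboard heading."""
        c, s = math.cos(heading), math.sin(heading)
        self.pos[0] += (c * v_forward - s * v_left) * dt
        self.pos[1] += (s * v_forward + c * v_left) * dt
        for i in range(2):
            self.var[i] += self.process_var * dt

    def update_from_marker(self, bearing, distance, heading):
        """Correct position only; the marker (at the origin) never changes the heading."""
        angle = heading + bearing
        measured = (-distance * math.cos(angle), -distance * math.sin(angle))
        for i in range(2):
            gain = self.var[i] / (self.var[i] + self.marker_var)
            self.pos[i] += gain * (measured[i] - self.pos[i])
            self.var[i] *= 1.0 - gain

# Start one metre too far back, fly forward, then see the marker 2 m straight ahead.
f = PositionFilter(0.0, -3.0)
f.predict(0.5, 0.0, math.pi / 2, 1.0)
f.update_from_marker(0.0, 2.0, math.pi / 2)
print(f.pos, f.var)
```

Because the heading is taken as given, the marker reading converts directly into a position measurement, which keeps the update linear in this sketch. A full EKF would also track height and the correlations between axes.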

Figure 2: Distribution of processes over the devices involved in the game.

Of course, for the experiment we also need to process the drone’s onboard images. Because of the iPhone’s computational constraints, we decided to save five consecutive images while the player is trying to dock and to process the images after the flight. This processing now happens when the player visits the high-score table and agrees to join the experiment. After the vision data is extracted and concatenated with the state estimates, the data is encrypted for transmission. Finally, since some players may use 3G to send their data, the data should be as compact as possible. Therefore, it is compressed on the iPhone before being sent. The data from 5 textured images takes around 77 kb on average.
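The concatenate-and-compress step can be sketched as follows. This is an assumption-laden illustration rather than the app’s actual format: the binary layout, the function names `pack_frame` and `pack_flight`, the six-value state, and the 64-value descriptors are all hypothetical, and the encryption step is left out because the article does not specify the scheme.

```python
import struct
import zlib

def pack_frame(state, features):
    """Serialize one image's data: a 6-float state estimate plus its features.

    Each feature is (x, y, descriptor); all descriptors share one length.
    """
    desc_len = len(features[0][2]) if features else 0
    # Header: state (6 floats), feature count, descriptor length
    chunks = [struct.pack("<6fHH", *state, len(features), desc_len)]
    for x, y, desc in features:
        chunks.append(struct.pack("<2f%df" % desc_len, x, y, *desc))
    return b"".join(chunks)

def pack_flight(frames):
    """Concatenate all frames of one docking attempt and compress them."""
    raw = b"".join(pack_frame(state, feats) for state, feats in frames)
    return raw, zlib.compress(raw, 9)

# Made-up example: 5 frames, 100 features each, 64-value descriptors.
state = (0.0, -2.0, 1.0, 0.1, 0.0, 0.0)        # x, y, z, vx, vy, vz
features = [(10.0, 20.0, [0.0] * 64) for _ in range(100)]
frames = [(state, features) for _ in range(5)]

raw, payload = pack_flight(frames)
print(len(raw), len(payload))
```

The example data here is highly repetitive, so it compresses far better than real image features would. The point is only the order of operations: extract, concatenate with the state estimates, then compress before sending.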


PROJECT TIMELINE

- Feb – Nov 2012: Game development

- Nov 2012 – Jan 2013: Game testing

- Jan 2013: App review @ Apple

- March 15, 2013: App launch

- March 2013 – ongoing: Data collection, analysis

Testing and Launch

Our original plan was to work on the game from February to April 2012, launch it in May or June, and analyze the data from August to September. In reality, we didn’t converge on the final code until November 2012.

Of course, when you are developing the app, you know the program inside out and by habit you may avoid playing the game in a way that would make the program malfunction. So in November we invited other people to try out and rate the app. The ratings and comments were used to make some final adjustments, and we sent the app to the Apple store for review in January 2013. At the same time, we began to execute our plans for the launch and related PR activities. We saw this as an essential part of the project, as the “crowd” first has to know about a project in order to contribute to it.

The app was launched on the 15th of March 2013, accompanied by a press release about AstroDrone from the European Space Agency and the video shown below. Parrot also promoted our app on their site. The news was soon picked up by BBC Technology, Wired, and the Verge, as well as newspapers and television shows such as ARD’s NachtMagazin. This (ongoing) media attention has helped us to reach the public: just one week after the launch, some 4,000 people had downloaded the AstroDrone app, and 458 of them had already contributed to our experiment by sending their data. This is a nice start, but of course we hope there is more to come.

Next Steps

We have not arrived at the end of this project, but rather at the beginning. We are first solving some small issues by means of app updates. For example, an iPad or iPod that is connected to the drone’s WiFi cannot send its data, and the app originally showed no message about the missing internet connection (solved in version 1.2). At the same time, we have started analyzing all the data that is coming in.

In the future, we may also improve our crowd-sourcing game in various ways, in order to make it as fun and easy as possible for players, and as useful as possible for our research. In any case, it’s an adventure to work with this exciting new way of gathering robotic data!

[Ed. Hallie Siegel]
