Robohub.org

Robotic assistive devices for independent living

Autonomy is the soul of independent daily living, and a variety of assistive devices already exist to help people with severe physical disabilities achieve this. But many of them are designed to be used by people who have at least some upper extremity strength, requiring users to push buttons on a hand-held remote control, for example. This makes such devices both inaccessible and unsafe for persons with reduced hand strength.

Our immediate goal is to develop novel, integrated technologies to support independent limb repositioning, which is particularly valuable for those with reduced muscular strength and helps minimize the occurrence of contractures and pressure ulcers. Our longer-term goal is to develop devices that can also assist such individuals with personal care tasks and with transfers, for example from a bed to a commode.

System requirements

The most prominent challenges include accessible and multimodal interaction, autonomy, safety, privacy, affordability, real-time performance, and intelligence.

There are a variety of assistive technologies for input control, and depending on the nature of their disability, some users may prefer a trackball mouse while others prefer speech recognition or an eye-tracking system. Another important design consideration is to determine when it is permissible for robots to override user commands or not follow instructions. Since the capabilities of robotic devices exceed the speed and force of human movement, safety is of great concern; a user may not be able to respond quickly and effectively when the robot behaves unexpectedly.

Because personal care robots are equipped with the ability to sense, process and record information, their use raises concerns about client privacy. Solutions must also be affordable, and strike a balance between price and capability if they are to be successfully marketed in the long run.

In addition, being able to provide immediate assistance is critical when urgent medical care is needed, so the assistive robot must be able to perform tasks in real time. It must be sensitive and intelligent, delivering services while ensuring that it does not inadvertently cause harm or injury to its users. Such a device must be able to learn and intuitively make decisions in order to safely trade off user comfort and speed when required.

UMBC Research

At the University of Maryland, Baltimore County (UMBC), we recently began developing a solution to support the movement and repositioning of an individual’s arms. We first conducted a survey of people with severely limited arm strength. The aim was to examine their attitudes toward using brain-computer interface (BCI) and speech recognition tools, such as the Emotiv Epoc headset pictured below, for controlling a robotic aid to assist with arm repositioning. The Emotiv headset is an off-the-shelf electroencephalography (EEG) system that provides a non-invasive means of recording electrical activity of the brain along the scalp, and can detect emotions, facial expressions, and conscious thoughts.

Emotiv Epoc BCI Headset. Source: Emotiv

From our study we learned that participants were most interested in multimodal interaction since it provides the most options for effective control. The preferred interaction mode was speech recognition for the overall tasks. However, all the participants said that a BCI device was also useful when speech recognition was not possible, such as when brushing teeth or when breathing difficulties make speech-input solutions challenging. We therefore decided to integrate BCI and speech recognition.

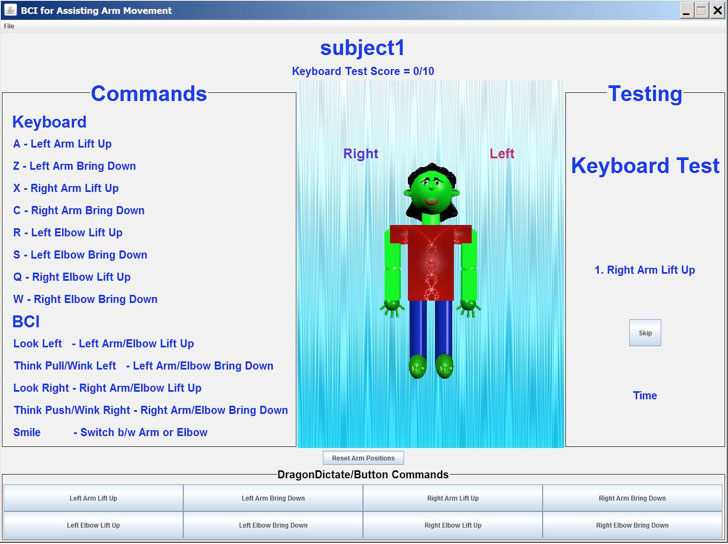

To show the feasibility of integrating BCI with speech recognition for self-directed arm repositioning tasks, we designed a robotic interface for repositioning the simulated arm of an avatar using the Emotiv Epoc headset and Dragon NaturallySpeaking voice recognition software. The system incorporated an accessible GUI.

BCI robot interface with female avatar. Source: UMBC

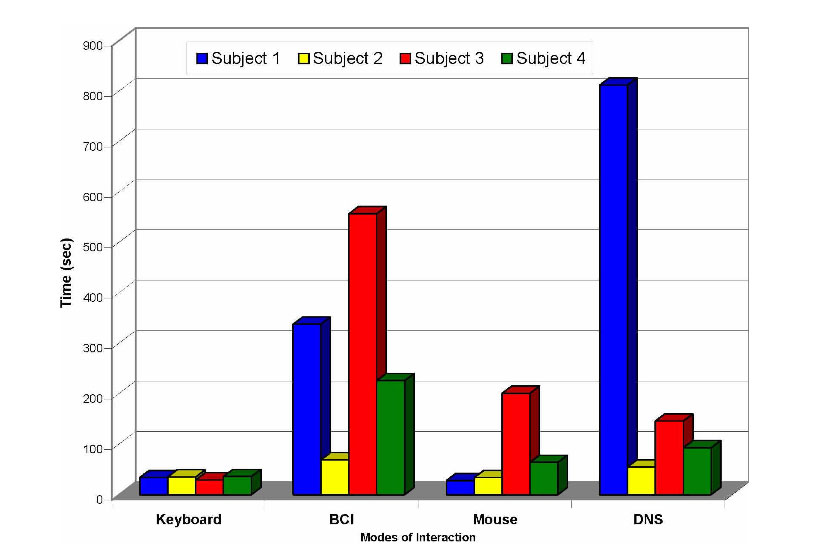

In an exploratory pilot study, four participants without disabilities performed ten arm movement tasks using four inputs (the keyboard, Emotiv Epoc headset, mouse, and Dragon NaturallySpeaking) in sequential order to move the avatar’s shoulder and elbow joints up and down. Participants used a motion imagery paradigm to control the avatar, for example looking towards the left to raise the avatar’s left elbow, looking towards the right to raise the avatar’s right elbow, and so on.
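As a rough illustration of how several input modalities could drive the same avatar joints, the sketch below routes events from each modality through a shared command table. It is not the UMBC implementation: the joint names, angle limits, step size, key bindings, and event labels such as `look_left` are all assumptions made for this example, and no Emotiv or Dragon NaturallySpeaking API is called.

```python
from dataclasses import dataclass, field

# Hypothetical joints and limits; the study only states that shoulder and
# elbow joints were moved up and down.
JOINTS = ("left_shoulder", "right_shoulder", "left_elbow", "right_elbow")
MIN_ANGLE, MAX_ANGLE, STEP = 0.0, 90.0, 10.0


@dataclass
class Avatar:
    """Holds one angle (in degrees) per simulated joint."""
    angles: dict = field(default_factory=lambda: {joint: 0.0 for joint in JOINTS})

    def move(self, joint: str, direction: str) -> float:
        """Step a joint up or down, clamped to the allowed range."""
        if direction not in ("up", "down"):
            raise ValueError(f"unknown direction: {direction}")
        delta = STEP if direction == "up" else -STEP
        clamped = min(MAX_ANGLE, max(MIN_ANGLE, self.angles[joint] + delta))
        self.angles[joint] = clamped
        return clamped


# Each modality translates its own events into the same (joint, direction)
# commands. All event labels here are illustrative placeholders.
BINDINGS = {
    "keyboard": {"q": ("left_elbow", "up"), "a": ("left_elbow", "down")},
    "mouse": {"click_left_elbow_up": ("left_elbow", "up")},
    "speech": {
        "raise left elbow": ("left_elbow", "up"),
        "lower left elbow": ("left_elbow", "down"),
    },
    "bci": {
        "look_left": ("left_elbow", "up"),
        "look_right": ("right_elbow", "up"),
    },
}


def dispatch(avatar: Avatar, modality: str, event: str):
    """Apply an input event to the avatar; return the new angle or None."""
    command = BINDINGS.get(modality, {}).get(event)
    if command is None:
        return None  # unrecognized input is ignored rather than guessed at
    joint, direction = command
    return avatar.move(joint, direction)
```

Keeping the modality-specific tables separate from the joint logic means a user could, in principle, switch between speech and BCI mid-task without any change to the avatar code.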

Total time spent using all four interaction methods. Source: UMBC

Preliminary results suggest that, while all participants were able to interact with the avatar using all four input methods, BCI and speech were the most challenging to use. However, time spent training with Dragon NaturallySpeaking and the Emotiv Epoc can improve performance, and it should be noted that individuals with severely limited hand strength may find voice control and BCI easier to use than a keyboard and mouse.

Further work at UMBC has focused on developing designs for three robotic transferring prototypes, a powered commode wheelchair, a toileting aid, a robotic toothbrush system, and a universal gripper for feeding. Video simulations of these prototypes can be watched in the playlist below. Kavita Krishnaswamy’s PhD proposal defense, “Increased Autonomy with Robotics for Daily Living,” further discusses effective assistive prototypes.

Outlook

One of the most craved aspects of the human experience is to be independent: the ability to take care of oneself establishes a sense of dignity, inherent freedom, and profound independence. Our goal is to bring robotic assistive devices into the real world where they can support individuals with severe disabilities and alleviate the workload of caregivers, with the ultimate vision of helping people with severe physical disabilities to achieve physical independence without relying on others. As robotic assistive devices become ubiquitous, they will enable people with severe physical disabilities to confidently use technology in their daily lives, not just to survive, but to flourish.
