Robohub.org

170

Mobility Transformation Facility with Edwin Olson

NEW: Full transcript below.

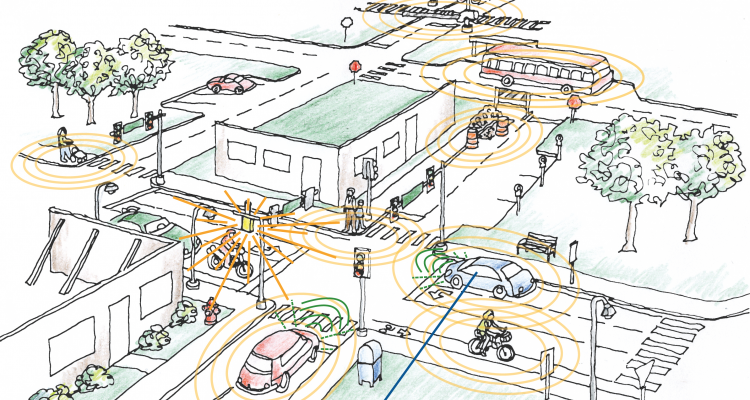

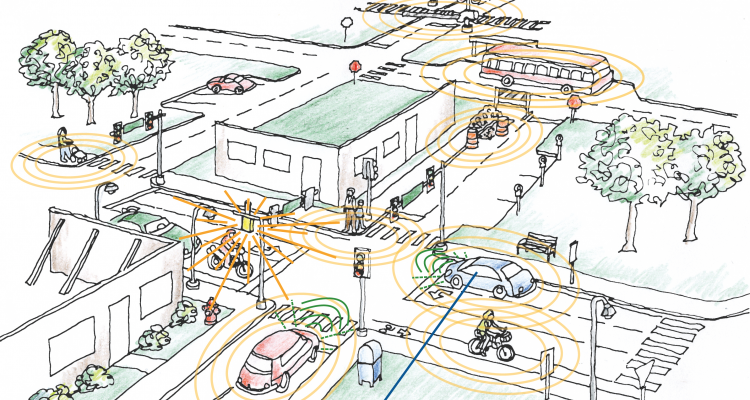

In this episode, Audrow Nash speaks with Edwin Olson, an Associate Professor at the University of Michigan, about the University’s 32-acre testing environment for autonomous cars and the future of driverless vehicles.

The testing environment, called the “Mobility Transformation Facility,” is designed to simulate the circumstances that an autonomous car would encounter on real-world streets. The facility features “one of everything,” says Edwin Olson, including a four-lane highway, road signs, stoplights, intersections, roundabouts, a railroad crossing, building facades, and even mechanical cyclists and pedestrians.

Edwin Olson

Edwin Olson is an Associate Professor of Computer Science and Engineering at the University of Michigan. He is the director of the APRIL robotics lab, which studies Autonomy, Perception, Robotics, Interfaces, and Learning. His active research projects include applications to explosive ordnance disposal, search and rescue, multi-robot communication, railway safety, and automobile autonomy and safety.

In 2010, he led the winning team in the MAGIC 2010 competition by developing a collective of 14 robots that semi-autonomously explored and mapped a large-scale urban environment. For the win, the U.S. Department of Defense awarded him $750,000. He was named one of Popular Science’s “Brilliant Ten” in September 2012. In 2013, he was awarded a DARPA Young Faculty Award.

He received a PhD from the Massachusetts Institute of Technology in 2008 for his work in robust robot mapping. During his time as a PhD student, he was a core member of MIT’s DARPA Urban Challenge team, which finished the race in fourth place. His work on autonomous cars continues in cooperation with Ford Motor Company on the Next Generation Vehicle project.

Links:

- Download mp3 (16.4MB)

- Subscribe to Robots using iTunes

- Subscribe to Robots using RSS

- Report on the Mobility Transformation Facility

- Edwin Olson’s website
