Robohub.org

173

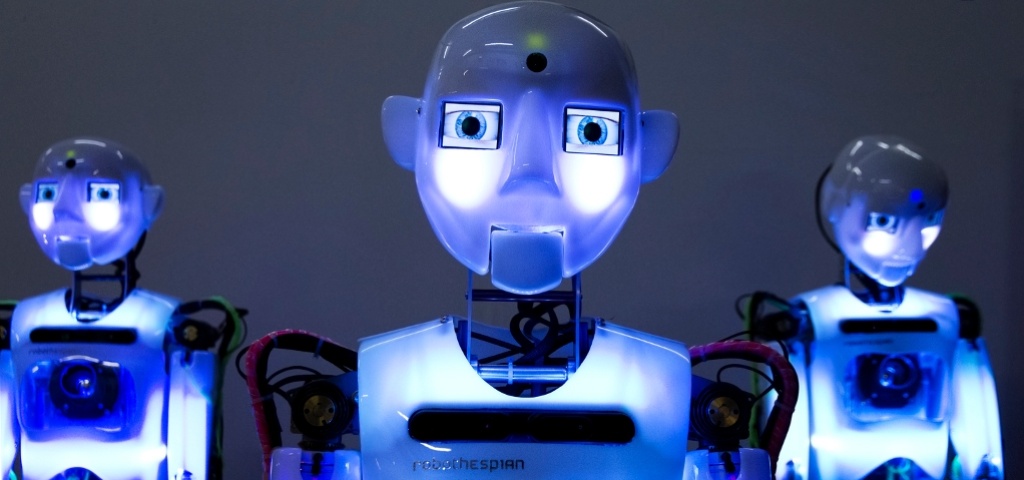

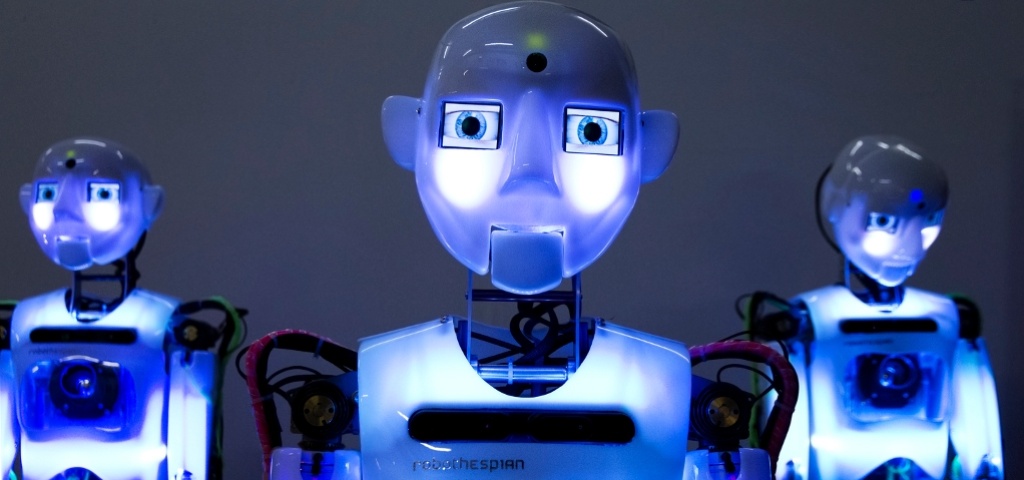

RoboThespian with Will Jackson

Transcript below

In today’s podcast, Ron Vanderkley speaks with Will Jackson from Engineered Arts Limited about his team’s work making robot actors.

Engineered Arts was founded in October 2004 by Will Jackson to produce mixed-media installations for UK science centres and museums, many of which involved simple mechanical figures animated by standard industrial controllers.

In early 2005, the company began work on the Mechanical Theatre for the Eden Project. This involved three figures, with storylines focused on genetic modification. Rather than designing another set of figures for this new commission, Engineered Arts decided to develop a generic programmable figure that would be used for the Mechanical Theatre and for the succession of similar commissions that would hopefully follow. The result was RoboThespian Mark 1 (RT1).

From then on, Engineered Arts changed direction, and it now concentrates entirely on the development and sale of an ever-expanding range of humanoid and semi-humanoid robots featuring natural human-like movement and advanced social behaviours.

RoboThespian, now in its third version, is a life-sized humanoid robot designed for human interaction in a public environment. It is fully interactive, multilingual, and user-friendly. Clients range from NASA’s Kennedy Space Centre to Questacon, the National Science and Technology Centre in Australia. You can watch it in action in the video below.

Will Jackson

Will Jackson has a BA in 3D design from the University of Brighton, UK, and is the founder of Engineered Arts Ltd.

Links:

- Download mp3 (13.5MB)

- Subscribe to Robots using iTunes

- Subscribe to Robots using RSS

- Engineered Arts

tags: c-Arts-Entertainment, EU