Robohub.org

Drone flight through narrow gaps using onboard sensing and computing

by Davide Falanga, Elias Müggler, Davide Scaramuzza and Matthias Fässler

In this work, we address one of the main challenges on the way to autonomous drone flight in complex environments: flight through narrow gaps. One day, micro drones will be used to search for and rescue people in the aftermath of an earthquake. In these situations, collapsed buildings cannot be accessed through conventional windows, so small gaps may be the only way to get inside. The problem is challenging because a gap can be very small, which requires precise trajectory tracking, and can have an arbitrary orientation, which means the quadrotor cannot fly through it in near-hover conditions. It is therefore necessary to execute an agile trajectory (i.e., one with high velocities and angular accelerations) to align the vehicle with the gap orientation.

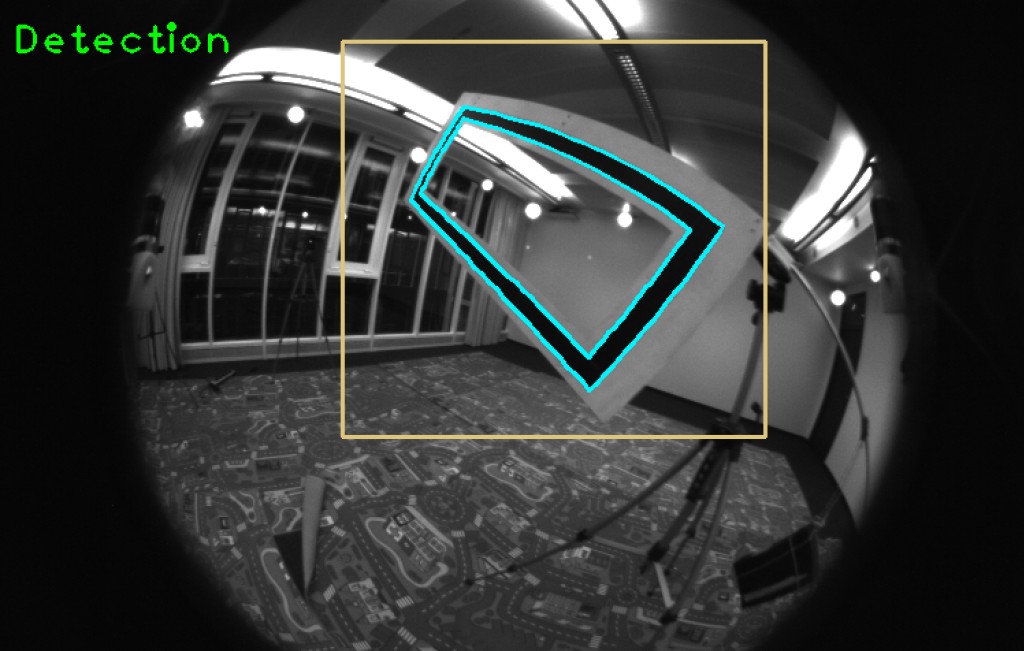

Previous work on aggressive flight through narrow gaps has focused solely on the control and planning problem and has therefore relied on motion-capture systems for state estimation and on external computing. In contrast, we use only onboard sensing and computing. More specifically, we address the case where state estimation is done by detecting the gap with a single forward-facing camera, and we show that this raises an interesting problem of coupled perception and planning. For the robot to localize with respect to the gap, it must select a trajectory that keeps the quadrotor facing the gap at all times (perception constraint), and this trajectory has to be replanned multiple times during its execution to cope with the varying uncertainty of the state estimate. Furthermore, during the traverse, the quadrotor has to maximize its distance from the edges of the gap (geometric constraint) to avoid collisions, and it has to do so without relying on any visual feedback, because when the robot is very close to the gap, the gap leaves the camera's field of view. Finally, the trajectory has to be feasible with respect to the dynamic constraints of the vehicle. To recover and lock into stable hover after passing through the gap, we used the recovery procedure described in our earlier ICRA’15 paper and in a previous Robohub article.
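The perception and geometric constraints described above can be made concrete with a small geometric sketch. This is not the authors' planner: it assumes a conical camera field of view with the optical axis given as a world-frame vector, models the vehicle as a rectangle aligned with the gap plane at the moment of crossing, and uses hypothetical helper names (`gap_in_fov`, `edge_clearance`) and made-up dimensions.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _norm(a):
    return math.sqrt(_dot(a, a))


def gap_in_fov(cam_pos, cam_axis, gap_corners, half_fov_deg):
    """Perception constraint (sketch): True if every gap corner lies inside a
    cone of half-angle half_fov_deg around the camera's optical axis.

    cam_axis is the optical axis in the world frame. A conical field of view is
    a simplifying assumption; real cameras have a rectangular one."""
    cos_limit = math.cos(math.radians(half_fov_deg))
    axis_len = _norm(cam_axis)
    for corner in gap_corners:
        d = _sub(corner, cam_pos)
        dist = _norm(d)
        if dist == 0.0:
            return False  # camera sits on the corner: degenerate case
        # Cosine of the angle between the optical axis and the corner direction.
        if _dot(d, cam_axis) / (dist * axis_len) < cos_limit:
            return False
    return True


def edge_clearance(offset_u, offset_v, gap_w, gap_h, quad_w, quad_h):
    """Geometric constraint (sketch): smallest distance between a rectangular
    vehicle footprint and the gap edges, measured in the gap plane with the
    vehicle aligned to the gap. (offset_u, offset_v) is the crossing point
    relative to the gap center; a negative result means a collision."""
    margin_u = gap_w / 2 - (abs(offset_u) + quad_w / 2)
    margin_v = gap_h / 2 - (abs(offset_v) + quad_h / 2)
    return min(margin_u, margin_v)


if __name__ == "__main__":
    # Hypothetical 0.8 m x 0.5 m gap, 2 m in front of a camera looking along +x.
    corners = [(2.0, -0.4, 0.25), (2.0, 0.4, 0.25),
               (2.0, 0.4, -0.25), (2.0, -0.4, -0.25)]
    print(gap_in_fov((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), corners, 45.0))  # True
    # Hypothetical 0.55 m x 0.25 m vehicle footprint crossing at the center.
    print(round(edge_clearance(0.0, 0.0, 0.8, 0.5, 0.55, 0.25), 3))  # 0.125
```

In this simplified model, clearance is largest when the vehicle crosses at the gap center; any offset along either axis reduces the margin on that side, which is the intuition behind maximizing the distance from the edges.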

Our proposed trajectory generation approach is independent of the gap-detection algorithm; therefore, to simplify the perception task, we used a gap marked with a simple black-and-white rectangular pattern. One technical detail worth pointing out is that the propellers had to be tilted by 15 degrees so that the actuators could change the vehicle's orientation quickly enough to keep it facing the gap throughout the approach maneuver. Tilting the propellers provided three times more yaw-control authority at the cost of only 3% of the collective thrust.
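The trade-off quoted above can be checked with simple trigonometry. This is a back-of-the-envelope sketch, not the authors' model: it assumes every rotor is tilted by the same angle so that its tangential thrust component adds to its drag torque about the body z-axis, and it introduces a hypothetical ratio κ/l between the rotor drag-torque coefficient κ and the arm length l, which the article does not report.

```python
import math


def collective_thrust_fraction(tilt_deg):
    """Fraction of a rotor's thrust that still acts along the body z-axis when
    the rotor is tilted by tilt_deg: the vertical component scales with cos."""
    return math.cos(math.radians(tilt_deg))


def yaw_torque_gain(tilt_deg, kappa_over_arm):
    """Yaw torque per unit rotor thrust, tilted vs. untilted (sketch).

    Untilted: yaw comes only from the rotor drag torque, kappa * f.
    Tilted by a: the drag torque's projection on body z, kappa * f * cos(a),
    plus the tangential thrust component acting on the arm, l * f * sin(a).
    Dividing by kappa * f gives cos(a) + sin(a) / (kappa / l).
    kappa_over_arm = kappa / l is a HYPOTHETICAL vehicle parameter."""
    a = math.radians(tilt_deg)
    return math.cos(a) + math.sin(a) / kappa_over_arm


if __name__ == "__main__":
    loss = 1.0 - collective_thrust_fraction(15.0)
    print(f"thrust loss at 15 deg: {loss:.1%}")  # thrust loss at 15 deg: 3.4%
    print(f"yaw gain: {yaw_torque_gain(15.0, 0.13):.2f}")  # yaw gain: 2.96
```

The vertical component of each rotor's thrust scales with cos 15° ≈ 0.966, a loss of about 3.4%, consistent with the roughly 3% quoted above. The yaw gain depends on κ/l; a hypothetical κ/l of 0.13 gives a gain of about 2.96, in line with the threefold figure, but the real value depends on the vehicle's rotors and geometry.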

We successfully evaluated our approach with gap orientations of up to 45 degrees vertically and up to 30 degrees horizontally. Our vehicle weighs 830 grams and has a thrust-to-weight ratio of 2.5. Our trajectory generation formulation can handle gap orientations of up to 90 degrees, but the quadrotor used in these experiments is too heavy, and its motors saturate for gap orientations above 45 degrees. The vehicle reaches speeds of up to 3 meters per second, angular velocities of up to 400 degrees per second, and accelerations of up to 1.5 g. It can pass through gaps only 1.5 times the size of the quadrotor, with just 10 centimeters of tolerance. Our method requires no prior knowledge of the position and orientation of the gap, and no external infrastructure, such as a motion-capture system, is needed. This is the first time that such an aggressive maneuver through narrow gaps has been performed by fusing gap detection from a single onboard camera with an IMU.

Passing through narrow gaps is challenging even for human pilots! We invited two professional Swiss FPV drone-racing pilots to our lab to fly through the same gap we used in our experiments, using FPV goggles. It was not easy at all, and they only managed it after several attempts.

This work has been submitted to ICRA 2017: Aggressive Quadrotor Flight through Narrow Gaps with Onboard Sensing and Computing, by Davide Falanga, Elias Mueggler, Matthias Faessler, and Davide Scaramuzza.
