Robohub.org

New insect-inspired vision strategy could hasten development of mini-drones

New research published today in the journal Bioinspiration & Biomimetics by the Micro Air Vehicle laboratory of TU Delft shows that an insect-inspired vision strategy can help indoor flying drones perceive distances with a single camera – a key requirement for controlled and safe landing. With indoor drones no longer needing to bear the weight of additional sonar equipment, the strategy should hasten the miniaturization of indoor autonomous drones.

The research found that drones with an insect-inspired vision strategy become unstable as they approach an object, and that this happens at a specific distance. Turning this weakness into a strength, drones can use the timely detection of that emerging instability to estimate distance.

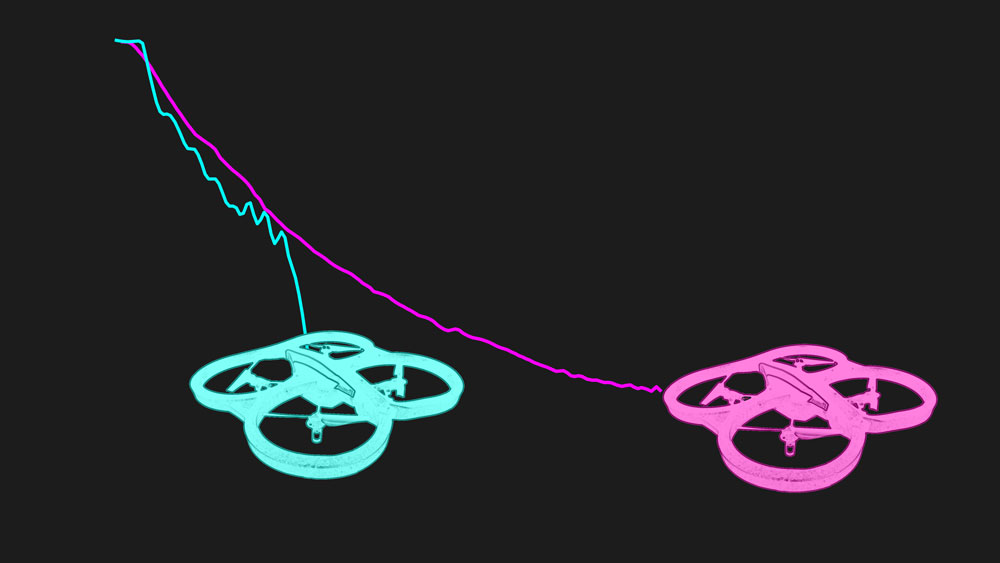

Two landing trajectories over time, starting from 4 meters. The light blue drone has stronger reactions to optical flow deviations than the magenta drone, and hence starts to oscillate further away from the landing surface.

In an effort to make ever smaller drones navigate by themselves, researchers increasingly turn to flying insects for inspiration. For example, current consumer drones for indoor flight use an insect-inspired strategy for estimating their velocity. They use a downward-looking camera that determines optical flow – the speed at which objects move through the camera’s field of view. However, optical flow only provides information on the ratio of velocity to distance. Hence, an additional sonar is generally added to indoor flying drones. The sonar provides the distance to the ground, after which the velocity can be calculated from the optical flow. With the new strategy it becomes possible to get rid of the sonar, allowing indoor flying drones to become even smaller.
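To make the ambiguity concrete, here is a minimal sketch of why optical flow alone cannot give a velocity. It assumes a downward-looking camera over flat ground, for which the flow at the image centre is roughly velocity divided by height; the function names and numbers are illustrative and are not taken from any drone's software.

```python
def optical_flow(velocity_m_s: float, height_m: float) -> float:
    """Approximate ventral optical flow (rad/s) for a downward camera over flat ground."""
    return velocity_m_s / height_m


def velocity_from_flow(flow_rad_s: float, sonar_height_m: float) -> float:
    """Recover metric velocity once an independent height measurement is available."""
    return flow_rad_s * sonar_height_m


# Two different flights produce the same optical flow...
slow_and_low = optical_flow(velocity_m_s=1.0, height_m=2.0)
fast_and_high = optical_flow(velocity_m_s=2.0, height_m=4.0)

# ...and only the height reported by the sonar tells them apart.
v_low = velocity_from_flow(slow_and_low, sonar_height_m=2.0)
v_high = velocity_from_flow(fast_and_high, sonar_height_m=4.0)
```

Without the sonar reading, the camera cannot tell whether the drone is flying slowly near the floor or quickly far above it.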

Soft landings

Although flying insects such as honeybees have two compound eyes, these are placed so close together that they cannot use stereo vision to estimate distances useful for navigation. They rely heavily on the distance-less cue of optical flow, and yet they lack the sensors to retrieve the actual distance to objects in their environment. So how do insects navigate successfully? Previous research has shown that simple optical flow control laws ensure safe navigation. For instance, keeping the optical flow constant during descent ensures that a drone makes a soft landing.
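Why a constant optical flow gives a soft landing can be sketched in a few lines. The sketch assumes the controlled cue is the ratio of vertical velocity to height, held perfectly at a constant value; the height then decays exponentially and the velocity shrinks in step with it. The function name and the values (4 meters, 0.3 per second) are illustrative assumptions.

```python
import math


def constant_flow_descent(start_height_m: float, flow_rate_per_s: float, t_s: float):
    """Height and vertical velocity at time t when velocity / height is held at -flow_rate."""
    height = start_height_m * math.exp(-flow_rate_per_s * t_s)
    velocity = -flow_rate_per_s * height  # negative means descending
    return height, velocity


for t in (0.0, 5.0, 10.0, 20.0):
    z, v = constant_flow_descent(start_height_m=4.0, flow_rate_per_s=0.3, t_s=t)
    print(f"t={t:4.1f} s  height={z:6.3f} m  velocity={v:6.3f} m/s")
```

In this idealized picture the descent speed falls toward zero as the height does, which is what makes the touchdown soft without the drone ever knowing its height.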

However, implementing this strategy on a real drone turned out to be very difficult: fast, smooth optical flow landings are hard to achieve because drones tend to oscillate up and down as they approach the ground.

While I initially thought that this was due to the computer vision algorithms not working well enough when close to the ground, I eventually determined that the effect is still there even when the drone has perfect vision.

Using instability

In fact, theoretical analysis of the drone’s control laws showed that a robot that is trying to keep the optical flow constant will start to oscillate at a specific distance from the landing surface. The oscillations are induced by the robot itself, because a movement that occurs close to the ground has a much quicker and larger effect on the optical flow than a movement that occurs further away from the ground.
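A simplified model illustrates how a fixed control gain can become too strong at a specific height. It is a sketch under stated assumptions, not the analysis from the paper: the controller is purely proportional, it commands vertical acceleration once per time step, the flow setpoint is zero, and the height is treated as frozen over the few steps considered. Under those assumptions a velocity error is multiplied by 1 - gain * dt / height at every step, so it alternates in sign and grows once the height drops below gain * dt / 2. All names and values are hypothetical.

```python
def error_multiplier(gain: float, dt_s: float, height_m: float) -> float:
    """Factor applied to a velocity error at each control step (frozen-height model)."""
    return 1.0 - gain * dt_s / height_m


def critical_height(gain: float, dt_s: float) -> float:
    """Height below which |error_multiplier| > 1, so errors alternate and grow."""
    return gain * dt_s / 2.0


def propagate_error(gain, dt_s, height_m, initial_error=0.01, steps=20):
    """Apply the error recursion for a number of control steps."""
    error = initial_error
    history = [error]
    for _ in range(steps):
        error *= error_multiplier(gain, dt_s, height_m)
        history.append(error)
    return history


gain, dt = 20.0, 0.05                  # illustrative values only
z_crit = critical_height(gain, dt)
above = propagate_error(gain, dt, height_m=1.5 * z_crit)  # error dies out
below = propagate_error(gain, dt, height_m=0.8 * z_crit)  # error grows
```

In this model a larger gain raises the critical height, which is consistent with the drone that reacts more strongly starting to oscillate further from the landing surface.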

The core idea is that timely detection of such oscillations allows the drone to know how far it is from the landing surface. In other words, the robot exploits the impending instability of its control system to perceive distances. This could be used to determine when to switch off its propellers during landing, for instance.
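The detection step could look roughly like the sketch below. Both parts are assumptions made for illustration: the detector is a hypothetical stand-in that only checks whether recent optical flow errors alternate in sign with growing size, and the height estimate uses a simplified rule in which oscillation begins where gain * dt / height equals 2. The paper's own detection method is not reproduced here.

```python
from typing import Optional, Sequence


def growing_oscillation(errors: Sequence[float], window: int = 4) -> bool:
    """True if the last `window` errors alternate in sign with growing size."""
    if len(errors) < window:
        return False
    recent = list(errors[-window:])
    pairs = list(zip(recent, recent[1:]))
    alternating = all(a * b < 0 for a, b in pairs)
    growing = all(abs(b) > abs(a) for a, b in pairs)
    return alternating and growing


def height_at_onset(errors: Sequence[float], gain: float, dt_s: float) -> Optional[float]:
    """Height estimate gain * dt / 2, returned only once oscillation is detected."""
    if growing_oscillation(errors):
        return gain * dt_s / 2.0
    return None


steady = [0.02, 0.01, 0.005, 0.002, 0.001]        # errors dying out: no estimate
unstable = [0.01, -0.012, 0.015, -0.019, 0.024]   # errors alternating and growing

no_estimate = height_at_onset(steady, gain=20.0, dt_s=0.05)
estimate_m = height_at_onset(unstable, gain=20.0, dt_s=0.05)
```

Because the controller knows its own gain and update interval, spotting the onset of oscillation is enough to produce a height estimate, which a landing routine could compare against a threshold before switching off the propellers.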

Of course, I got many strange looks in the flight arena when I was cheering on a flying robot that seemed to be on the verge of going out of control!

A Parrot AR drone making a constant optical flow landing outdoors.

Biological hypothesis

A closer look at the biological literature shows that flying insects do have some behaviors that are triggered at specific distances. For example, both honeybees and fruit flies extend their legs at a fairly consistent distance from the landing surface [1,2]. The new theory provides a hypothesis on how insects may perceive the distances necessary for such behaviors.

More info

‘Monocular distance estimation with optical flow maneuvers and efference copies: a stability-based strategy’ in Bioinspiration & Biomimetics, article published online on 7 January 2016.

http://mavlab.tudelft.nl/

http://www.bene-guido.eu/

Contact

Guido de Croon: +31 (0)15 278 1402, G.C.H.E.deCroon@tudelft.nl

References

[1] Van Breugel F and Dickinson M H 2012 The visual control of landing and obstacle avoidance in the fruit fly Drosophila melanogaster J. Exp. Biol. 215 1783–98

[2] Evangelista C, Kraft P, Dacke M, Reinhard J and Srinivasan M V 2010 The moment before touchdown: landing manoeuvres of the honeybee Apis mellifera J. Exp. Biol. 213 262–70
