Robohub.org

No, a Tesla didn’t predict an accident and brake for it

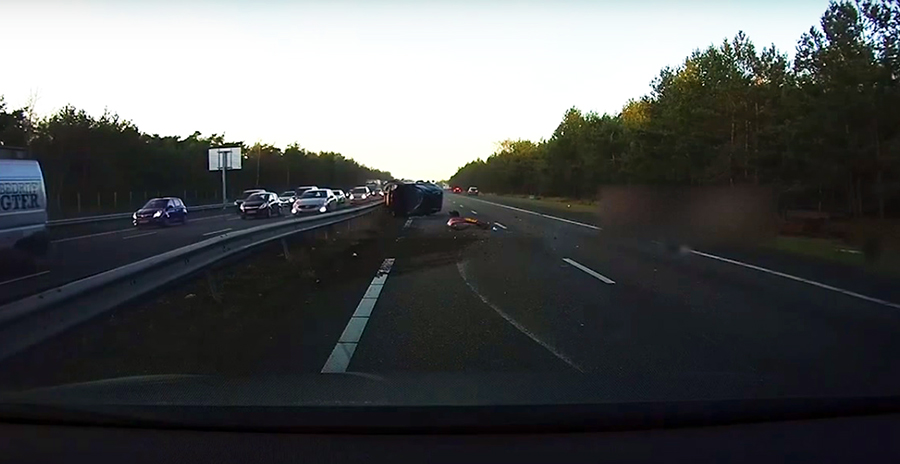

You may have seen a lot of press around a dashcam video of a car accident in the Netherlands. It shows a Tesla on Autopilot hitting the brakes about 1.4 seconds before a red car crashes hard into a black SUV that isn't visible from the dashcam's viewpoint. Many outlets reported that the Tesla predicted the two cars would collide and, because of the imminent accident, hit the brakes to protect its occupants.

The accident is brutal but apparently nobody was hurt.

https://www.youtube.com/watch?v=lqqN5iRrAiM

The press speculation is incorrect. It gained some traction because Elon Musk himself retweeted one of these reports, but Tesla has in fact confirmed the alternative, more probable explanation, which involves no prediction of the accident at all. The red car played little to no role in what took place.

Tesla's Autopilot uses radar as a key sensor. One great thing about radar is that it tells you how fast every radar target is going, as well as how far away it is. Automotive radar doesn't tell you very accurately where the target is; roughly, it can tell you what lane a target is in. Radar beams bounce off many things, including the road. That means a radar beam can bounce off the road under the car in front of you and then hit the car in front of it, even though that car is hidden from view. When the radar tells you "I see something in your lane 40m ahead going 20mph and something else 30m ahead going 60mph," you know they are two different things.
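To make the "two different things" reasoning concrete, here is a minimal sketch of how radar returns might be grouped into distinct objects by lane, range and speed. It is not Tesla's method; the `RadarReturn` type, the `group_targets` helper and the tolerances are all hypothetical, and it assumes each return's absolute speed has already been computed from the Doppler measurement plus the car's own speed.

```python
from dataclasses import dataclass

MPH_TO_MPS = 0.44704


@dataclass
class RadarReturn:
    range_m: float    # distance to the reflector
    speed_mps: float  # absolute target speed (assumed: ego speed + Doppler relative speed)
    lane: int         # coarse lane estimate, 0 = ego lane


def group_targets(returns, range_tol_m=3.0, speed_tol_mps=1.5):
    """Greedy clustering: returns that agree in lane, range and speed are
    treated as one object; a mismatch in range or speed starts a new object."""
    clusters = []
    for r in sorted(returns, key=lambda x: x.range_m):
        for cluster in clusters:
            last = cluster[-1]
            if (last.lane == r.lane
                    and abs(last.range_m - r.range_m) <= range_tol_m
                    and abs(last.speed_mps - r.speed_mps) <= speed_tol_mps):
                cluster.append(r)
                break
        else:
            clusters.append([r])
    return clusters


returns = [
    RadarReturn(30.0, 60 * MPH_TO_MPS, 0),  # the car directly ahead
    RadarReturn(31.0, 60 * MPH_TO_MPS, 0),  # another reflection off the same car
    RadarReturn(40.0, 20 * MPH_TO_MPS, 0),  # hidden car, seen via a bounce off the road
]
targets = group_targets(returns)
print(len(targets))  # 2
```

The speed check is what does the work here: even if the range estimates were noisy, a 40 mph difference in speed is a strong sign that the returns come from separate vehicles.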

The Tesla's radar saw just that: the black SUV was braking (possibly for a dirt patch that appears in the video) and the red car wasn't. Regardless of the red car being there, the Autopilot knew that if a car further ahead was braking hard, it should also brake hard, and it did. Yes, it's possible that it could also calculate that the red car, if it kept going, would hit the black SUV, but that's not really relevant; the Tesla should clearly stop, regardless of what the red car is going to do. Tesla has described on its blog how it is doing more with radar, including tracking hidden vehicles with it. The ability of automotive radar to do this has been known for some time, and I have always presumed most teams take advantage of it. You don't always get returns from hidden cars, but it's worth using them when you do.
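The decision described above does not need any prediction of a crash. A minimal sketch, assuming each tracked vehicle keeps a short history of radar speed readings: brake if any vehicle in our lane, visible or hidden, is decelerating hard. The `TrackedVehicle` class, `should_brake` function and the -4 m/s² threshold are hypothetical illustrations, and the speeds are made-up values, not data from the video.

```python
from collections import deque

# Hypothetical threshold, roughly -0.4 g; real systems tune this carefully.
HARD_BRAKE_MPS2 = -4.0


class TrackedVehicle:
    """A radar track holding the most recent (time_s, speed_mps) samples."""

    def __init__(self, lane, history=5):
        self.lane = lane  # coarse lane estimate, 0 = ego lane
        self.samples = deque(maxlen=history)

    def update(self, t, speed):
        self.samples.append((t, speed))

    def acceleration(self):
        """Average acceleration over the stored window (negative = braking)."""
        if len(self.samples) < 2:
            return 0.0
        (t0, v0), (t1, v1) = self.samples[0], self.samples[-1]
        return (v1 - v0) / (t1 - t0)


def should_brake(tracks, ego_lane=0):
    """Brake if ANY vehicle ahead in our lane is braking hard, visible or not."""
    return any(
        tr.lane == ego_lane and tr.acceleration() <= HARD_BRAKE_MPS2
        for tr in tracks
    )


red_car = TrackedVehicle(lane=0)
black_suv = TrackedVehicle(lane=0)  # hidden; seen only via returns under the red car
speeds = [(26.8, 20.0), (26.8, 18.5), (26.8, 17.0), (26.8, 15.5), (26.8, 14.0)]
for i, (v_red, v_suv) in enumerate(speeds):
    t = i * 0.25
    red_car.update(t, v_red)
    black_suv.update(t, v_suv)

print(should_brake([red_car]))             # False: the red car alone is steady
print(should_brake([red_car, black_suv]))  # True: the SUV decelerates at -6 m/s^2
```

Note that the red car never enters the decision: its track is steady, and the braking is triggered entirely by the hidden SUV's track.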

In the future, we will see robocar systems predicting accidents, but I am not aware of any team announcing this. All robocars track every object ahead of them, for position and velocity, and extrapolate that motion to predict where each object will go. Those predictions could also detect that two vehicles will collide if they continue on their current course, and even the point at which the collision can no longer be avoided. If an imminent accident is predicted, it makes sense to know that and react to it in advance. A car might even be able to predict a bit of what will happen after the accident, though that is chaotic.
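The kind of prediction described above can be sketched with simple constant-velocity extrapolation along one lane. This is a simplified illustration, not any team's actual predictor: the function names, the 8 m/s² maximum braking figure and the assumption that the front vehicle holds its speed are all hypothetical, and the numbers are illustrative rather than measured from the video.

```python
def time_to_collision(gap_m, rear_speed_mps, front_speed_mps):
    """Constant-velocity extrapolation: seconds until the rear vehicle closes
    the gap to the front vehicle, or None if it is not closing."""
    closing = rear_speed_mps - front_speed_mps
    if closing <= 0:
        return None
    return gap_m / closing


def collision_unavoidable(gap_m, rear_speed_mps, front_speed_mps, max_decel_mps2=8.0):
    """True if even maximum braking by the rear vehicle cannot shed the closing
    speed before the gap is gone, assuming the front vehicle holds its speed."""
    closing = rear_speed_mps - front_speed_mps
    if closing <= 0:
        return False
    # Distance consumed while the closing speed is braked down to zero.
    stopping_gap = closing ** 2 / (2 * max_decel_mps2)
    return stopping_gap > gap_m


# Illustrative: a car at ~60 mph, 20 m behind a vehicle that has slowed to 6 m/s.
ttc = time_to_collision(20.0, 26.8, 6.0)
print(round(ttc, 2))                           # 0.96
print(collision_unavoidable(20.0, 26.8, 6.0))  # True
```

If the front vehicle is still braking, the real situation is worse than this estimate, so a production predictor would model acceleration as well as velocity.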

A system like that would outperform Autopilot or any automatic emergency braking system. Today, those systems largely track objects in their own lane. They don't brake for cars stopped in adjacent lanes, because then they could not work in traffic jams or carpool lanes where neighboring lanes move at different speeds, and they could not cope with stalled cars on the side of the road.
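The lane filtering described above can be sketched as a target-selection step that discards everything outside the ego lane before any braking decision is made. This is a deliberately simplified, hypothetical illustration: the `Target` type, the lane-offset convention and the 80 m range cap are assumptions, and real systems use far richer path estimates.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Target:
    lane_offset: int   # coarse lane estimate: 0 = ego lane, -1 = left, +1 = right
    range_m: float
    speed_mps: float


def in_path_targets(targets, max_range_m=80.0):
    """Keep only targets in the ego lane. Slow or stopped cars in adjacent
    lanes (traffic jam, carpool lane, stalled car on the shoulder) are ignored."""
    return [t for t in targets if t.lane_offset == 0 and t.range_m <= max_range_m]


def closest_in_path(targets) -> Optional[Target]:
    """The nearest in-lane target, or None if the lane ahead is clear."""
    return min(in_path_targets(targets), key=lambda t: t.range_m, default=None)


scene = [
    Target(lane_offset=+1, range_m=25.0, speed_mps=0.0),   # stalled car on the right
    Target(lane_offset=-1, range_m=15.0, speed_mps=3.0),   # crawling adjacent lane
    Target(lane_offset=0, range_m=50.0, speed_mps=25.0),   # car ahead in our lane
]
lead = closest_in_path(scene)
print(lead.range_m)  # 50.0
```

The same filter that keeps the car from braking for the stalled vehicle is also what would keep it from reacting to a crash unfolding one lane over.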

However, if you had seen what the Tesla saw from the lane to the right, it would still have been very smart to brake. Tesla has not commented on this, but I presume its system would not have braked had it been in that lane, at least not before the accident. It might have braked afterward, because other cars, such as the red car, immediately moved into the right lane.
