Robohub.org

UAV-based crop and weed classification for future farming

by Philipp Lottes, Raghav Khanna, Johannes Pfeifer, Cyrill Stachniss and Roland Siegwart

Crops are key to sustainable food production, and we face several challenges in crop production. First, we need to feed a growing world population. Second, our society demands high-quality food. Third, we have to reduce the amount of agrochemicals that we apply to our fields, as they directly affect our ecosystem. Precision farming techniques offer great potential to address these challenges, but we have to acquire the relevant information about the field status and provide it to the farmers so that specific actions can be taken.

This paper won the IEEE Robotics & Automation Best Automation Paper Award at ICRA 2017.

Modern approaches such as agricultural system management and smart farming typically require detailed knowledge about the current field status. For successful on-field intervention, it is key to know the type and spatial distribution of the crops and weeds on the field at an early stage. The earlier mechanical or chemical weeding actions are executed, the higher the chances of obtaining a high yield. A prerequisite for triggering weeding and intervention tasks is detailed knowledge about the spread of weeds. Therefore, it is important to have a perception pipeline that automatically monitors and analyzes the crops and reports on the status of the field.

Fig. 1 illustrates the input and output of our approach. We use a comparatively cheap, out-of-the-box UAV system to capture images of a field and assign a class label to each pixel, i.e., determine whether that pixel belongs to a crop or a weed. Through the use of GNSS and bundle adjustment procedures, we can obtain the spatial distribution of the detected crops and weeds in a global reference frame.
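
As a rough illustration of how a per-pixel label can be placed on the ground, the sketch below maps a pixel to metric east/north coordinates. It is not the GNSS plus bundle adjustment procedure used in this work; it assumes a nadir-looking pinhole camera over flat ground, an image-centre position already expressed in a metric frame (e.g., from GNSS), and a known heading. The function names and the camera values in the example are hypothetical.

```python
import math


def ground_sampling_distance(altitude_m, focal_length_mm, sensor_width_mm, image_width_px):
    """Ground distance (m) covered by one pixel for a nadir-looking pinhole camera."""
    return altitude_m * sensor_width_mm / (focal_length_mm * image_width_px)


def pixel_to_ground(u, v, image_size, center_east, center_north, gsd_m, yaw_rad=0.0):
    """Map pixel (u, v) to (east, north) in metres, assuming flat ground and a nadir view.

    u grows to the right and v grows downward; yaw_rad is the heading of the image's
    "up" direction, measured clockwise from north (0 means image up points north).
    """
    width, height = image_size
    # Offset from the image centre in metres, with x to the right and y up.
    dx = (u - (width - 1) / 2.0) * gsd_m
    dy = ((height - 1) / 2.0 - v) * gsd_m
    # Rotate the image-aligned offset into the east/north frame.
    east = center_east + dx * math.cos(yaw_rad) + dy * math.sin(yaw_rad)
    north = center_north - dx * math.sin(yaw_rad) + dy * math.cos(yaw_rad)
    return east, north


# Example with hypothetical values: 10 m altitude, 8.8 mm lens, 13.2 mm sensor, 4000 px wide.
gsd = ground_sampling_distance(10.0, 8.8, 13.2, 4000)  # 0.00375 m per pixel
weed_east, weed_north = pixel_to_ground(3200, 1000, (4000, 3000), 500000.0, 5600000.0, gsd)
```

Bundle adjustment replaces the flat-ground and known-pose assumptions of this sketch with camera poses and geometry estimated jointly from many overlapping images.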

Use Case 1: Farmer Support: To reduce spending on herbicides, pesticides, and fertilizers while maintaining or even increasing the yield, farmers must answer two key questions: Where are the weeds in the field? And where do the crops grow abnormally? Given knowledge of the plant-specific spatial distribution, as illustrated in Fig. 2, farmers can decide which agrochemicals, and how much of them, they need for their specific situation. Furthermore, they can perform individual actions at specific locations in the field.
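
To show how such a map can feed a decision, the sketch below aggregates a classified label image into a grid of weed-cover fractions and sums the area of the cells that exceed a threshold. The label codes (0 soil, 1 crop, 2 weed), the cell size, and the threshold rule are hypothetical choices for illustration, not part of the described system.

```python
import numpy as np

# Hypothetical label codes for a classified image or orthomosaic.
SOIL, CROP, WEED = 0, 1, 2


def weed_fraction_grid(labels, cell_px):
    """Fraction of WEED pixels in each cell_px x cell_px block of a 2-D label map.

    Trailing rows/columns that do not fill a whole cell are ignored in this sketch.
    """
    h, w = labels.shape
    rows, cols = h // cell_px, w // cell_px
    cropped = labels[: rows * cell_px, : cols * cell_px]
    # Split into (rows, cell_px, cols, cell_px) blocks and average inside each block.
    blocks = cropped.reshape(rows, cell_px, cols, cell_px)
    return (blocks == WEED).mean(axis=(1, 3))


def area_to_treat(fractions, threshold, cell_area_m2):
    """Total area (m^2) of cells whose weed fraction exceeds the threshold."""
    return int(np.count_nonzero(fractions > threshold)) * cell_area_m2


# Toy 4 x 4 label map with 2 x 2 cells.
labels = np.full((4, 4), SOIL)
labels[0:2, 0:2] = WEED   # top-left cell fully covered by weeds
labels[2, 3] = WEED       # one weed pixel in the bottom-right cell
fractions = weed_fraction_grid(labels, 2)     # [[1.0, 0.0], [0.0, 0.25]]
sprayed = area_to_treat(fractions, 0.1, 2.0)  # 2 cells x 2.0 m^2 = 4.0
```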

Figure 1: Low-cost DJI Phantom 4 UAV used for field monitoring (left) and an example image analyzed by our approach (right). Here, green refers to detected sugar beets, which we consider the crop, and purple refers to weeds.

Use Case 2: Autonomous Robots: In the future, autonomous robotic systems are likely to be used to manage arable farmland. Robots have the potential to survey the field status continuously and to perform specific tasks, such as per-plant weed control, only in those regions of the field where an action is actually needed. This work is part of the FLOURISH project (flourishproject.eu), where we are developing teams of UAVs and UGVs that operate autonomously and collaboratively. Here, the UAVs offer excellent survey capabilities at low cost and can be used to analyze the field status. The analysis of crop and weed growth on the field is an essential building block for inferring target locations for targeted ground intervention. The UAV can then send a UGV to those locations to execute intervention tasks.
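
A minimal sketch of turning a weed map into UGV target locations, assuming the map has already been aggregated into a grid of weed fractions: cells above a threshold become targets, and a greedy nearest-neighbour heuristic orders the visits. The threshold, the grid representation, and the planner are hypothetical placeholders; the article does not specify how interventions are planned in FLOURISH.

```python
import math


def target_cells(fractions, threshold):
    """(row, col) of every grid cell whose weed fraction exceeds the threshold."""
    return [
        (r, c)
        for r, row in enumerate(fractions)
        for c, f in enumerate(row)
        if f > threshold
    ]


def nearest_neighbour_order(start, targets):
    """Greedy visiting order: always move to the closest unvisited target.

    A cheap heuristic, not an optimal tour; distances are Euclidean in grid units.
    """
    remaining = list(targets)
    route, here = [], start
    while remaining:
        nxt = min(remaining, key=lambda t: math.dist(here, t))
        remaining.remove(nxt)
        route.append(nxt)
        here = nxt
    return route


weed_map = [[0.0, 0.5, 0.0],
            [0.3, 0.0, 0.0],
            [0.0, 0.0, 0.9]]
targets = target_cells(weed_map, 0.2)             # [(0, 1), (1, 0), (2, 2)]
route = nearest_neighbour_order((2, 0), targets)  # [(1, 0), (0, 1), (2, 2)]
```

A real system would also account for the UGV's motion constraints and for driving between crop rows rather than across them.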

Approach

We address the problem of analyzing UAV imagery to inspect the status of a field in terms of weed types and the spatial distribution of crops and weeds. We focus on per-plant detection to estimate the amount of crops as well as of various weed species, and to provide this information to the farmers or to an autonomous robotic perception system. In our work, we propose a vision-based classification system for identifying crops and weeds in both RGB-only imagery and RGB combined with near-infrared (NIR) imagery of agricultural fields captured by a UAV. Our perception pipeline is capable of detecting plants in such images based solely on their appearance. Our approach is also able to exploit the geometry of the plant arrangement without requiring a known spatial distribution to be specified explicitly. Optionally, we can utilize prior information about the crop arrangement, such as crop rows. In addition, the approach can handle vegetation located in the intra-row space.

Figure 2: Field status as analyzed by our approach. Based on this information, farmers can schedule their next individual actions and estimate the amount of agrochemicals needed for their field.

Processing Pipeline

Our system consists of three key steps. First, we apply preprocessing to normalize intensity values on a global scale and to detect the vegetation in each image. Second, we extract features only for regions that correspond to vegetation, exploiting a combination of an object-based and a keypoint-based approach. We compute around 550 visual features containing intensity statistics, texture, and shape information. In addition, we exploit geometric features, which encode spatial relationships among the vegetation, as well as optional crop row features, which consider the distance from the vegetation to the estimated crop rows. Third, we apply a multi-class Random Forest classifier and obtain a probability distribution over the predicted class labels.
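
The vegetation detection in the first step can be sketched with the Excess Green index (ExG) that appears in Fig. 3. The snippet below computes ExG = 2g - r - b on chromatic coordinates (each channel divided by R + G + B), a common formulation, and then thresholds it with Otsu's method. Otsu thresholding is an assumption made here for illustration; the article does not state how the vegetation mask is thresholded, and the global intensity normalization is not reproduced.

```python
import numpy as np


def excess_green(rgb):
    """ExG = 2g - r - b on chromatic coordinates (each channel divided by R + G + B)."""
    rgb = rgb.astype(np.float64)
    total = rgb.sum(axis=2, keepdims=True)
    total[total == 0] = 1.0  # black pixels: avoid division by zero
    r, g, b = np.moveaxis(rgb / total, 2, 0)
    return 2.0 * g - r - b


def otsu_threshold(values, bins=256):
    """Otsu's method: the threshold that maximises the between-class variance."""
    hist, edges = np.histogram(values, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    w0 = np.cumsum(hist).astype(np.float64)  # samples in bins 0..k
    w1 = w0[-1] - w0                         # samples in bins k+1..end
    m = np.cumsum(hist * centers)            # cumulative first moment
    mu0 = m / np.maximum(w0, 1.0)
    mu1 = (m[-1] - m) / np.maximum(w1, 1.0)
    between = w0 * w1 * (mu0 - mu1) ** 2
    # Upper edge of the last bin assigned to the background class.
    return edges[np.argmax(between) + 1]


def vegetation_mask(rgb):
    """Boolean mask that is True where a pixel is classified as vegetation."""
    exg = excess_green(rgb)
    return exg >= otsu_threshold(exg)
```

On an RGB+NIR camera, a NIR-based index could take the place of ExG in the same structure.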

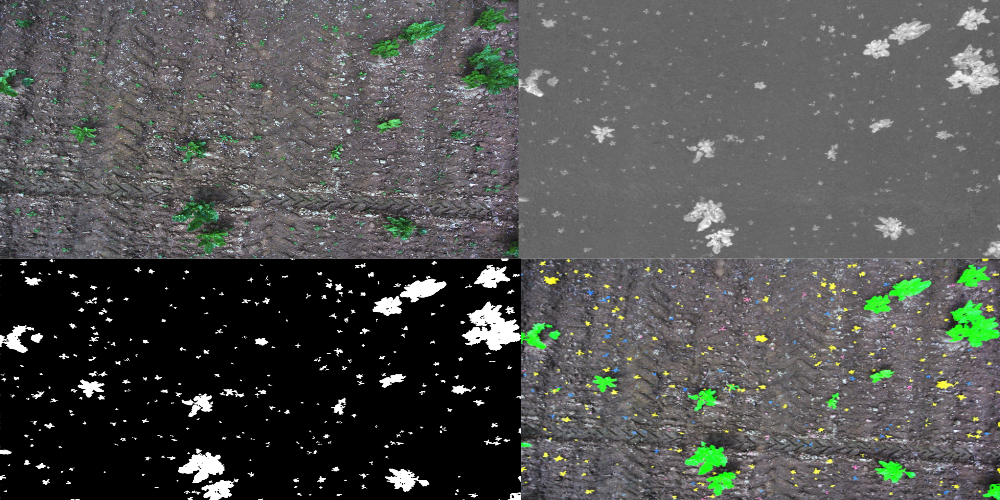

Figure 3: Example image captured by a DJI Phantom 4 at low altitude, shown at different stages of the classification pipeline. From left to right: normalized RGB image, computed Excess Green Index (ExG), vegetation mask that reflects the spatial distribution of the vegetation, and multi-class classification output of our plant/weed classification system.

Fig. 3 illustrates the key steps of our pipeline and Fig. 4 depicts an example of the detected crop rows. The papers [1] and [2] provide a detailed description of the pipeline as well as the features we are using for the classification.
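
The object-based part of the feature extraction can be illustrated by splitting the vegetation mask into connected components and computing a few features per object. The sketch below uses 4-connectivity and just four hypothetical features (area, mean and standard deviation of an intensity channel such as ExG, and bounding-box fill ratio as a crude shape cue); it is a stand-in for, and far smaller than, the roughly 550 features described in [1] and [2].

```python
from collections import deque

import numpy as np


def label_components(mask):
    """4-connected component labelling of a boolean mask; 0 marks background."""
    labels = np.zeros(mask.shape, dtype=np.int32)
    h, w = mask.shape
    n = 0
    for y, x in zip(*np.nonzero(mask)):
        if labels[y, x]:
            continue  # already part of an earlier object
        n += 1
        labels[y, x] = n
        queue = deque([(y, x)])
        while queue:  # breadth-first flood fill
            cy, cx = queue.popleft()
            for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not labels[ny, nx]:
                    labels[ny, nx] = n
                    queue.append((ny, nx))
    return labels, n


def object_features(labels, n_objects, intensity):
    """Per-object [area, mean intensity, std intensity, bounding-box fill ratio]."""
    feats = []
    for k in range(1, n_objects + 1):
        ys, xs = np.nonzero(labels == k)
        vals = intensity[ys, xs]
        box_area = (ys.max() - ys.min() + 1) * (xs.max() - xs.min() + 1)
        feats.append([ys.size, vals.mean(), vals.std(), ys.size / box_area])
    return np.array(feats, dtype=np.float64)
```

In practice, `scipy.ndimage.label` performs the same labelling much faster; the explicit flood fill is kept here only to make the step visible.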

Results and Conclusion

Figure 4: Detected set of crop rows (red), estimated using a Hough transform followed by a search for the set of parallel lines best supported by the vegetation. Example result from a sugar beet field with high weed pressure.
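
The row detection in Fig. 4 can be approximated as follows. Each vegetation pixel votes in a Hough accumulator over (θ, ρ) with ρ = x cos θ + y sin θ; because crop rows are parallel, the sketch picks the single orientation whose votes are most concentrated and then takes the strongest, well-separated ρ peaks as rows. This peak search is a simplified stand-in, not the search algorithm used in the paper, and the parameters (angle resolution, vote fraction, minimum spacing) are hypothetical. The last function computes a crop row feature of the kind mentioned in the pipeline description: each point's distance to its nearest row.

```python
import numpy as np


def hough_accumulator(xs, ys, thetas, rho_res=1.0):
    """Vote every point into (rho, theta) bins with rho = x cos(theta) + y sin(theta)."""
    rhos = np.outer(xs, np.cos(thetas)) + np.outer(ys, np.sin(thetas))  # shape (N, T)
    rho_min = rhos.min()
    idx = np.round((rhos - rho_min) / rho_res).astype(int)
    acc = np.zeros((idx.max() + 1, len(thetas)), dtype=np.int64)
    for t in range(len(thetas)):
        np.add.at(acc, (idx[:, t], t), 1)  # unbuffered add handles repeated bins
    return acc, rho_min


def detect_parallel_rows(xs, ys, n_thetas=180, rho_res=1.0,
                         min_votes_frac=0.5, min_spacing=5.0):
    """Return (theta, [rho, ...]) for a set of parallel lines supported by the points."""
    thetas = np.linspace(0.0, np.pi, n_thetas, endpoint=False)
    acc, rho_min = hough_accumulator(xs, ys, thetas, rho_res)
    # Parallel rows share one orientation: prefer the theta whose votes are most
    # concentrated in a few rho bins (largest sum of squared votes).
    t_best = int(np.argmax((acc.astype(np.float64) ** 2).sum(axis=0)))
    column = acc[:, t_best]
    peak = column.max()
    rows = []
    for i in np.argsort(column)[::-1]:  # strongest rho bins first
        if column[i] < min_votes_frac * peak:
            break
        rho = rho_min + i * rho_res
        if all(abs(rho - r) >= min_spacing for r in rows):  # skip near-duplicates
            rows.append(rho)
    return thetas[t_best], sorted(rows)


def distance_to_nearest_row(xs, ys, theta, rhos):
    """Perpendicular distance from each point to the closest detected row."""
    proj = xs * np.cos(theta) + ys * np.sin(theta)
    return np.abs(proj[:, None] - np.asarray(rhos)[None, :]).min(axis=1)
```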

We implemented and tested our approach on real sugar beet fields using different types of cameras and UAVs. Our experiments suggest that the proposed system is able to classify sugar beets and different weed types in RGB images captured by a low-cost commercial UAV system. We show that the classification system achieves an overall accuracy of up to 96%, even in images where neither a crop row structure nor a regular plantation pattern can be exploited for geometry-based detection. Furthermore, we compare results on RGB+NIR images captured with a comparatively expensive camera system to those obtained with the standard RGB camera of a consumer quadcopter (DJI Phantom 4 and camera for around $1,500). Fig. 5 depicts example results for each of the datasets.
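
Overall accuracy is the fraction of correctly classified samples, i.e., the trace of the confusion matrix divided by its sum. The sketch below computes it together with per-class recall. The class codes and the toy labels are made up and do not reproduce the reported results, and whether a sample is a pixel or a plant object is left open here.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """cm[i, j] counts samples of true class i predicted as class j."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def overall_accuracy(cm):
    """Fraction of correctly classified samples (diagonal over total)."""
    return np.trace(cm) / cm.sum()


def per_class_recall(cm):
    """Share of each true class that was labelled correctly."""
    return np.diag(cm) / np.maximum(cm.sum(axis=1), 1)


# Toy labels with hypothetical codes: 0 = soil, 1 = sugar beet, 2 = weed.
cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 0, 1, 2, 2, 2], n_classes=3)
oa = overall_accuracy(cm)  # 5 / 6
```

Per-class recall is worth reporting alongside overall accuracy, because soil or crop pixels can dominate a field image and hide poor performance on rarer weed species.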

With our approach, farmers can analyze the spatial distribution of the crops and weeds on their fields using a low-cost UAV system that works out of the box. Such systems are typically easy to operate and require no expertise in flying or aerial dynamics. Farmers only have to contribute their expertise by labeling data for the perception pipeline. They are ideally suited to this task, because nobody knows better which plants and weed types commonly appear on their specific fields. Relative to the area of the field, only a small amount of data has to be labeled to analyze the whole field. Within autonomous robotic systems in agriculture, this approach can serve as part of the system's perception pipeline. Based on the classification results for the images taken by the UAV, a decision-making or planning program can execute different tasks to achieve the intended goals, e.g., to send the UGV to a target location.

Figure 5: Zoomed view of images analyzed by our plant/weed classification system (left column) and corresponding ground truth images (right column). Top row: Multi-class results for the dataset captured by the DJI Phantom 4 UAV. Here, the crops do not follow a row structure, so the results are based on the visual and geometric features only. Center row: Results for the dataset captured with a 4-channel RGB+NIR camera system. Bottom row: Results for the dataset captured with a DJI Matrice 100 UAV. Arrows point to weeds detected in the intra-row space. Sugar beet (green), chamomile (blue), saltbush (yellow), and other weeds (red).
