Robohub.org

The next era of conversational technology

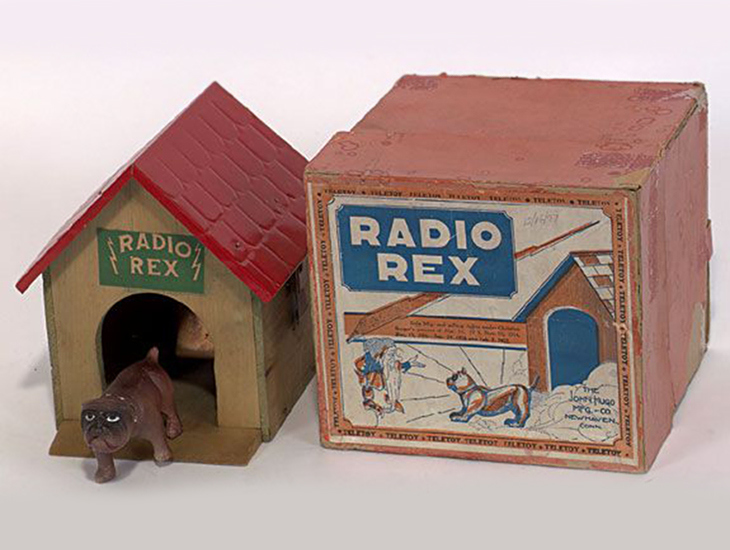

In 1922, the Elmwood Button Co. commercialized a toy that could respond to voice commands. The toy, called Radio Rex, was a dog made of celluloid that would leap out of its house when you called its name: “Rex!” That was two decades before the first digital computer was even conceived, and at least three generations before the Internet would jet our lives from the real world into the virtual realms of e-commerce, social networks, and cloud computing. Radio Rex’s voice recognition technology, so to speak, was quite crude. Practically speaking, the vibration produced by the “r” in its name, when spoken loudly, would cause a thin metal blade to open a circuit that powered the electromagnet that held Rex in its house, causing Rex to jump out. A few years ago, I watched in awe as one of the few remaining original Radio Rex toys went into action. It was still working.

Some people recognize Radio Rex as the first speech recognition technology applied to a commercial product. Whether that could be called speech recognition or not, we have to agree that the little celluloid dog was probably one of the first examples of a voice-controlled consumer robot. While it was rudimentary, I can imagine the delight and wonder that people felt when Rex responded to their own voice. Magic!

Indeed, speech recognition is something magical. That’s why I have spent more than 30 years of my life building machines that understand speech, and many of my colleagues have done the same. The machines we have built “understand” speech so well that there is a billion-dollar industry based on this specific technology. Millions of people talk to machines every day, whether it is to get customer support, find a neighborhood restaurant, make an appointment in their calendar, or search the Web.

But these speech-understanding machines do not have what Radio Rex had, even in its own primitive simplicity: an animated body that would respond to voice. Today, social robots do. And even more: they can talk back to you, see you, recognize who you are and where you are, respond to your touch, display text or graphics, and express emotions that can resonate with your own.

When we consider voice interaction for a social robot, what type of speech and language capabilities should it have? What are the challenges? Yes, of course Siri and Google Now have paved the way to speech-based personal assistants, but despite their perceived popularity, is speech recognition technology ready for a consumer social robot? The answer is yes.

First, let me explain the differences among the speech recognition technologies used for telephone applications (à la “Please say: account, payments, or technical support”), for personal assistants (like Siri, Google Now, and, most recently, Cortana), and for a social robot.

In telephone-based applications, the speech recognition protocol is synchronous and typically initiated by the machine. The user is expected to speak only during well-determined intervals of time in response to a system prompt (“Please say …”). If the user does not speak during that interval, or if the user says something that is not encoded in the system, the speech recognition machine times out, and the system speaks a new prompt. This continues until something relevant is said and recognized, or until either the machine or the user loses patience and bails out. We have all experienced this frustration at some point.
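The prompted, turn-taking protocol described above can be sketched as a simple loop. This is only an illustration, not how any particular telephone system is implemented: the function name `run_prompted_dialog`, the three-attempt limit, and the wording of the reprompts are assumptions, and the caller's speech is simulated by a list in which `None` stands for silence during the listening window.

```python
from typing import Iterable, List, Optional, Tuple

# Hypothetical grammar: the only phrases "encoded in the system",
# borrowed from the example prompt quoted in the article.
GRAMMAR = {"account", "payments", "technical support"}


def run_prompted_dialog(
    utterances: Iterable[Optional[str]], max_attempts: int = 3
) -> Tuple[Optional[str], List[str]]:
    """Simulate a machine-initiated, synchronous dialog.

    Each item of `utterances` is what the caller said in one listening
    window; None means the caller stayed silent and the window timed out.
    Returns the recognized phrase (or None) and the list of system prompts.
    """
    prompts: List[str] = []
    turns = iter(utterances)
    for _ in range(max_attempts):
        # The system always speaks first; the caller may only answer afterwards.
        prompts.append("Please say: account, payments, or technical support.")
        heard = next(turns, None)
        if heard is None:
            prompts.append("Sorry, I did not hear anything.")
            continue
        phrase = heard.strip().lower()
        if phrase in GRAMMAR:
            return phrase, prompts
        prompts.append("Sorry, I did not understand.")
    # The machine loses its patience and bails out.
    prompts.append("Transferring you to an agent.")
    return None, prompts


result, prompts = run_prompted_dialog([None, "billing", "Payments"])
print(result)        # the phrase recognized on the third attempt
print(len(prompts))  # how many times the system had to speak
```

Even in this toy version, every failure costs the caller another full prompt, which is where much of the "clunky" feel comes from.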

In telephone-based systems, any type of information has to be conveyed through the audio channel, including instructions for users on what to say, the information requested, and the possible clarification of any misunderstanding between the human and the machine (including the infamous “I think you said …”). Anything spoken has to be limited in time, in a rigid, turn-taking fashion between the system and the user. That is why telephone-based systems are so “clunky”, to the point where, more often than not, they are annoyingly mechanical and repetitive. Although I have built some of the most sophisticated and well-crafted telephone-based speech recognition systems for over a decade, and these systems are used by millions of people every week, I have never heard anyone say: “I love my provider’s automated customer care service. It is so much fun to use it that I call it every day!”

Then came the smartphone. The fact that a smartphone includes a telephone is marginal. A smartphone is a computer, a camera, a media appliance, an Internet device, a computer game box, a chatterbox, a way to escape from boring meetings, and yes, also a telephone, for those who insist on making calls. Although it hasn’t happened yet, smartphones have the potential to make clunky, telephone-based speech understanding systems obsolete. Why? Because with a smartphone, you are not forced to communicate only through the narrow audio channel. In addition to speech, you can enter text and use touch. A smartphone can talk back to you, and display text, images, and videos. The smartphone speech recognition revolution started with Siri and Google voice search. Today, more and more smartphone services are adopting speech as an alternative interface blended with text and touch modalities.

The distinction between telephone-based, audio-only services and smartphone speech understanding is not just in the multi-modality of the communication protocol. The advent of fast broadband Internet has freed us from the necessity of having all the software on one device. Speech recognition can run in the cloud with the almost unlimited power of server farms and can truly recognize pretty much everything that you say.

Wait a minute. Just recognize? How about understanding the meaning of what you say?

There is a distinction between recognition, which is the mere transcription of the spoken words, and understanding, which is the extraction and representation of the meaning. The latter is the domain of natural language processing. While progress in speech recognition has been substantial and persistent over the past decades, natural language has moved forward more slowly. Siri and its cloud-based colleagues are capable of understanding more and more, and even some of the nuances of our languages. The time for speech recognition and natural language understanding is finally ripe for natural machine and human communication.

But…is that all? Is talking to our phones and tablets or open rooms — as in the upcoming home automation applications — the ultimate goal of spoken communication with machines?

No. Social robots are the next revolution.

It has been predicted by the visionary work of pioneers like Cynthia Breazeal, and corroborated and validated by the recent announcements of social robot products coming to market. This is made possible by the confluence of the necessary technologies that are available today.

Social robots have everything that personal assistants have—speech, display, touch—but also a body that can move, a vision system that can recognize local environments, and microphones that can locate and focus on where sounds and speech are coming from. How will a social robot interact with speech among all the other modalities?

We went from prompted speech, like in the telephone systems, to personal assistants that can come to life on your smartphone. For a social robot, there should neither be a “speak now” timeout window, nor the antiquated “speak after the beep” protocol, nor a button to push to start speaking. Instead, a social robot should be there for you all the time and respond when you talk to it. You should be able to naturally cue your robot that you are addressing it by speaking its name (like Star Trek’s “Hello Computer!”) or simply by directing your gaze toward it while you talk to it. Fortunately, the technology is ripe for this capability, too. Highly accurate hot-word speech recognition—like in Google Now—and phrase spotting (a technology that allows the system to ignore everything that is spoken except for defined key-phrases) are available today.
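To make the idea of phrase spotting concrete, here is a minimal sketch. It is a deliberate simplification: real hot-word and phrase-spotting engines work on the audio signal, whereas this toy version assumes a text transcript is already available and simply ignores every word that is not part of a defined key phrase. The function name `spot_phrases` and the example phrases are hypothetical.

```python
import re
from typing import List, Sequence


def spot_phrases(transcript: str, key_phrases: Sequence[str]) -> List[str]:
    """Return the key phrases found in `transcript`, in spoken order.

    Assumption: we operate on text, not audio. Everything outside the
    key phrases is ignored, which is the essence of phrase spotting.
    """
    words = re.findall(r"[a-z']+", transcript.lower())
    # Each key phrase becomes a tuple of words; empty phrases are dropped.
    targets = [tuple(p.lower().split()) for p in key_phrases]
    targets = [t for t in targets if t]
    hits: List[str] = []
    i = 0
    while i < len(words):
        for target in targets:
            if tuple(words[i:i + len(target)]) == target:
                hits.append(" ".join(target))
                i += len(target) - 1  # skip the rest of the matched phrase
                break
        i += 1
    return hits


heard = "so anyway, hello computer, what a day, play music please"
print(spot_phrases(heard, ["hello computer", "play music"]))
```

In this example the chatter around the key phrases never reaches the application at all; only the robot's name cue and the command survive.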

While speech recognition accuracy is always important, we should always consider that social robots can have multiple communication channels: speech, touch, vision, and possibly others. In many cases, the different channels can reinforce each other, either simultaneously or sequentially, in case one of them fails. Think about speech in a noisy situation. When speech is the only channel available, the only recourse is to repeat (ad nauseam) the endless “I did not understand what you said, please say that again?” For social robots, however, touch and gesture are powerful alternatives to get your intent across when placed in situations where speech is difficult to process.
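The sequential case, where one channel takes over when another fails, might look like the sketch below. The channel names, the `get_intent` helper, and the convention that a channel returns `None` when it cannot produce an intent are all assumptions made for illustration.

```python
from typing import Callable, List, Optional, Tuple

# A channel is a name plus a function that returns an intent, or None
# when that modality could not make sense of the input (hypothetical API).
Channel = Tuple[str, Callable[[], Optional[str]]]


def get_intent(channels: List[Channel]) -> Tuple[Optional[str], Optional[str]]:
    """Try each channel in order and return (intent, channel name)
    from the first one that succeeds, or (None, None) if all fail."""
    for name, read in channels:
        intent = read()
        if intent is not None:
            return intent, name
    return None, None


# Noisy room: speech fails, so a tap on the robot carries the intent instead.
channels = [
    ("speech", lambda: None),
    ("touch", lambda: "stop_music"),
]
print(get_intent(channels))
```

The point of the design is that the robot never has to ask the user to repeat the same sentence just because one modality is temporarily unusable.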

In certain situations, two alternative modalities can also complement each other. Consider the problem of identifying a user. This can be done either via voice, with a technology called speaker ID, or through machine vision, using face recognition. These two technologies can be active at the same time and cooperate, or they can be used independently: speaker ID when the light is too low, or face ID when the noise is too high.
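One simple way to picture this cooperation is score fusion. The sketch below is an assumption-laden illustration rather than a description of any real product: it supposes that speaker ID and face ID each return a confidence score between 0 and 1 per known user, that the robot knows whether lighting and noise conditions are acceptable, and that averaging the available scores against a fixed threshold is good enough. All names and numbers are hypothetical.

```python
from typing import Dict, Optional


def identify_user(
    speaker_scores: Dict[str, float],
    face_scores: Dict[str, float],
    light_ok: bool,
    noise_ok: bool,
    threshold: float = 0.6,
) -> Optional[str]:
    """Fuse speaker ID and face ID scores, using only the modalities
    whose sensing conditions are acceptable. Returns a user name or None."""
    active = []
    if noise_ok:   # speaker ID is unreliable when the noise is too high
        active.append(speaker_scores)
    if light_ok:   # face ID is unreliable when the light is too low
        active.append(face_scores)
    if not active:
        return None
    candidates = sorted(set().union(*active))
    if not candidates:
        return None
    # Average the scores of the active modalities for each candidate.
    fused = {
        name: sum(scores.get(name, 0.0) for scores in active) / len(active)
        for name in candidates
    }
    best = max(candidates, key=lambda name: fused[name])
    return best if fused[best] >= threshold else None


speaker = {"alice": 0.9, "bob": 0.3}
face = {"alice": 0.5, "bob": 0.8}
print(identify_user(speaker, face, light_ok=True, noise_ok=True))   # both cooperate
print(identify_user(speaker, face, light_ok=False, noise_ok=True))  # dark room
print(identify_user(speaker, face, light_ok=True, noise_ok=False))  # noisy room
```

With the made-up scores above, the answer changes depending on which sensor can be trusted, which is exactly why it helps to have both.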

Looking ahead, speech, touch, and image processing can also cooperate to recognize a person’s emotion, locate the speaker in the room, understand the speaker’s gestures, and more. Imagine the possibilities when social robots can understand natural human behavior, rather than the stilted conventions we need to follow today for machines to understand what we mean.

I have spent my career working at the forefront of human-machine communication to make it ever more natural, robust, textured – and, yes, magical. Now, we are at the dawn of the era of social robots. I am thrilled to help bring this new experience to the world. Just wait and see.


tags: c-Consumer-Household, conversational technology, cx-Research-Innovation, human-robot interaction, Natural Language Processing, social robotics