Robohub.org

Real-time appearance-based mapping in action at the Microsoft Kinect Challenge, IROS 2014

Last month at IROS the SV-ROS team Maxed-Out won the Microsoft Kinect Challenge. In this post Mathieu Labbé describes the mapping approach the team used to help them win.

One week before the IROS 2014 conference, I received an email from the Maxed-Out team telling me that they were using my open source code RTAB-Map for the Kinect Robot Navigation Contest that would be held during the conference. I am always glad to know when someone is using what I have done, so I said I would answer whatever questions they had before the contest. Since I was already in Chicago to present a paper, I met them in person at the conference and helped them with the algorithm on site before the contest … and good surprise, they won the contest! See their official press release here.

This contest featured robots (equipped only with a Kinect) navigating autonomously across multiple waypoints in a typical office environment. The first part of the contest was to map the environment so that the robot could plan trajectories in it. The robot was driven through the environment to incrementally create a 2D map (like a top view map of an office), using only its sensors. Sensors are not perfect, so maps can become misaligned over time. These errors can be minimized when the robot detects when it comes back to a previously visited area, a process called loop closure detection. This mapping approach is known as Simultaneous Localization And Mapping (SLAM). SLAM is used when the robot doesn’t have a map already built of the environment, and when it doesn’t have access to external global localization sensors (like a GPS) to localize itself. The mapping solution chosen by the SV-ROS team was my SLAM approach called RTAB-Map (Real-Time Appearance-Based Mapping).

In this article I share some of the technical details of the RTAB-Map.

The robot

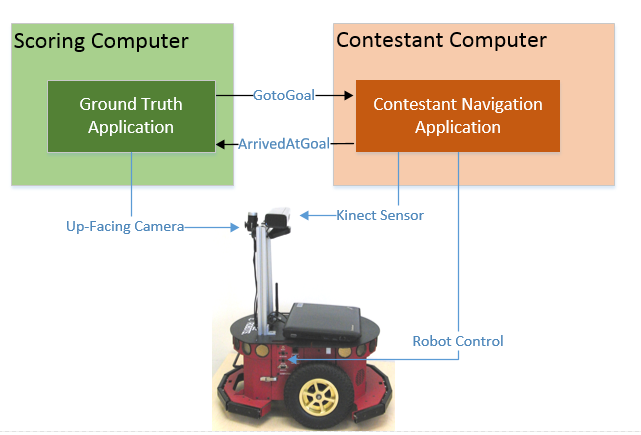

The robot was an Adept MobileRobots Pioneer 3 DX equipped with a Kinect sensor. The up-facing camera was only used by the scoring computer (detecting patterns on the ceiling to localize the robot). The RTAB-Map ROS setup was based on the “Kinect + Odometry + Fake 2D laser from Kinect” configuration. The “2D laser” was simulated using the Kinect and the depthimage_to_laserscan ros-pkg. In this configuration, the robot is constrained to a plane (x,y and yaw).

Source: IROS 2014

Contest environment

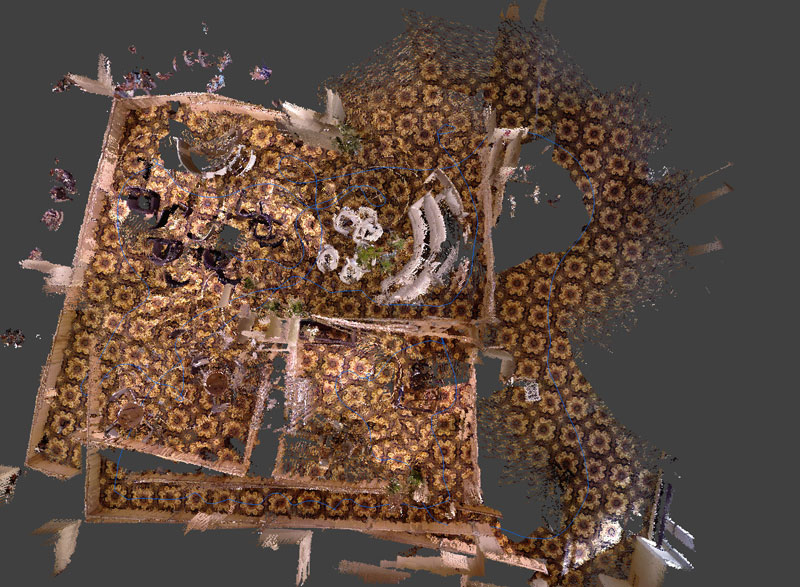

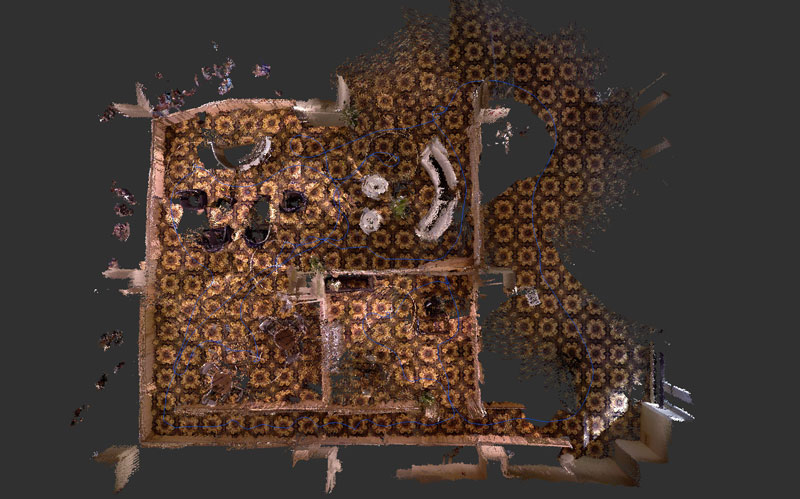

The challenge was held in the exhibit room in the Palmer House Hotel. Since RTAB-Map’s loop closure detection is based on visual appearance of the environment, the repetitive texture on the carpet made it very challenging. When there were only discriminative visual features on the floor (like a long hall), loop closures could be found with images from different locations, thus causing big errors in the map. To avoid this problem, just two minutes before the official mapping run, we set a parameter to ignore 30% of the bottom image for features extraction. We didn’t have the chance to test it before the official mapping run – all we could do was to cross our fingers and hope that no wrong loop closures would be found!

Contest environment

Filtering visual features of the floor

Results

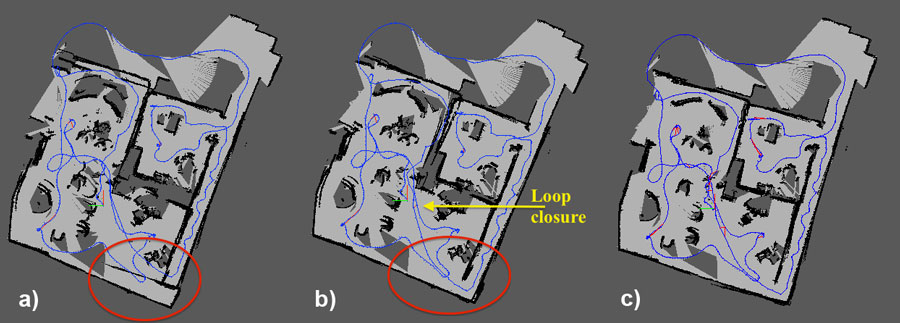

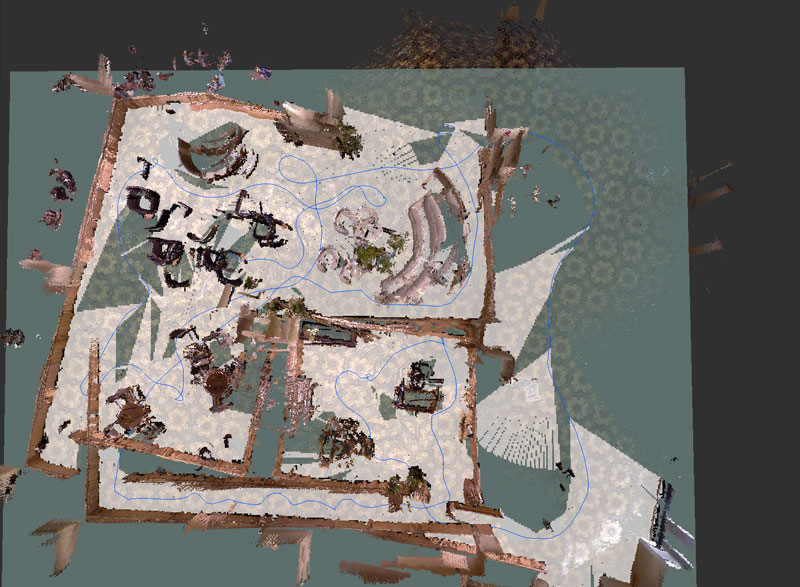

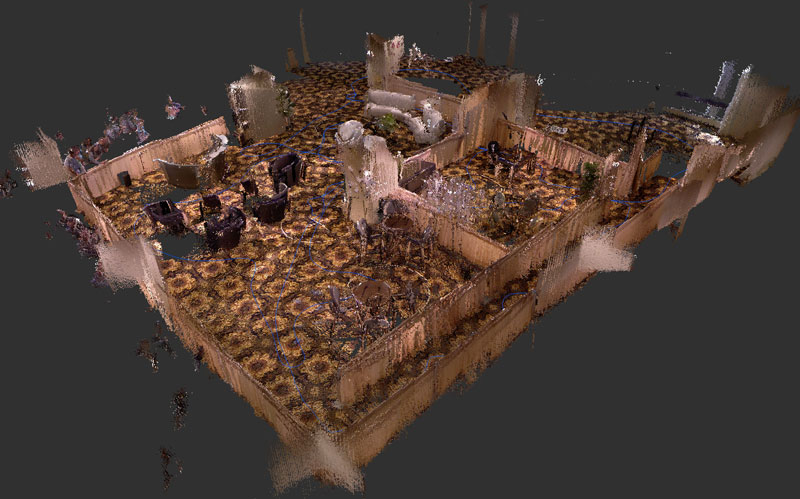

After mapping, no wrong loop closures were found. However, the robot missed a loop closure on the bottom of the map (because the camera was not facing the same direction), causing a wall at the end of the hall. This was one case where, when exploring an unknown environment, it would be better to occasionally do a 360° turn. This would enable the system to see more images at different angles, and would result in more loop closures. However, by using rtabmap-databaseViewer and reprocessing data included in the database, we could detect a loop closure between locations before and after exploring the hall. The result was an aligned bottom corner. There was still a misalignment on the middle-top, but given the time we had, the map was good enough for the navigation part of the contest. Below are results with these remaining errors corrected. The loop closures are shown in red.

During the autonomous navigation phase, RTAB-Map was set in localization mode with the pre-built map. By detecting “loop closures” with the previous map, the robot is relocalized so it can efficiently plan its trajectories through the environment.

Updated Results

In this section, I will show a parametrization of RTAB-Map that I did after the challenge to automatically find more loop closures. The video above can be reproduced using the data here:

- RTAB-Map database: IROS14-kinect-challenge.db

- RTAB-Map parameters: IROS14-kinect-challenge.ini

You can open RTAB-Map (standalone, not the ros-pkg) using the configuration file above, then set “Source from database” and select the database above. Set Source rate to 2 Hz and press start; this should reproduce the video above. Here the principal parameters modified from the defaults:

// -Filter 30% bottom of the image // -Maximum depth of the features is 4 meters Kp/RoiRatios=0 0 0 0.3 Kp/MaxDepth=4 // -Loop closure constraint, computed visually LccBow/Force2D=true LccBow/InlierDistance=0.05 LccBow/MaxDepth=4 LccBow/MinInliers=3 // -Refining visual loop closure constraint using // 2D icp on laser scans LccIcp/Type=2 LccIcp2/CorrespondenceRatio=0.5 LccIcp2/VoxelSize=0 // -Disabled local loop closure detection based on time. // -Optimize graph from the graph beginning, to // have same referential when relocalizing to this // map in localization mode. RGBD/LocalLoopDetectionTime=false RGBD/OptimizeFromGraphEnd=false // -Extract more SURF features SURF/HessianThreshold=60 // Run this for a description of the parameters: $ rosrun rtabmap rtabmap --params

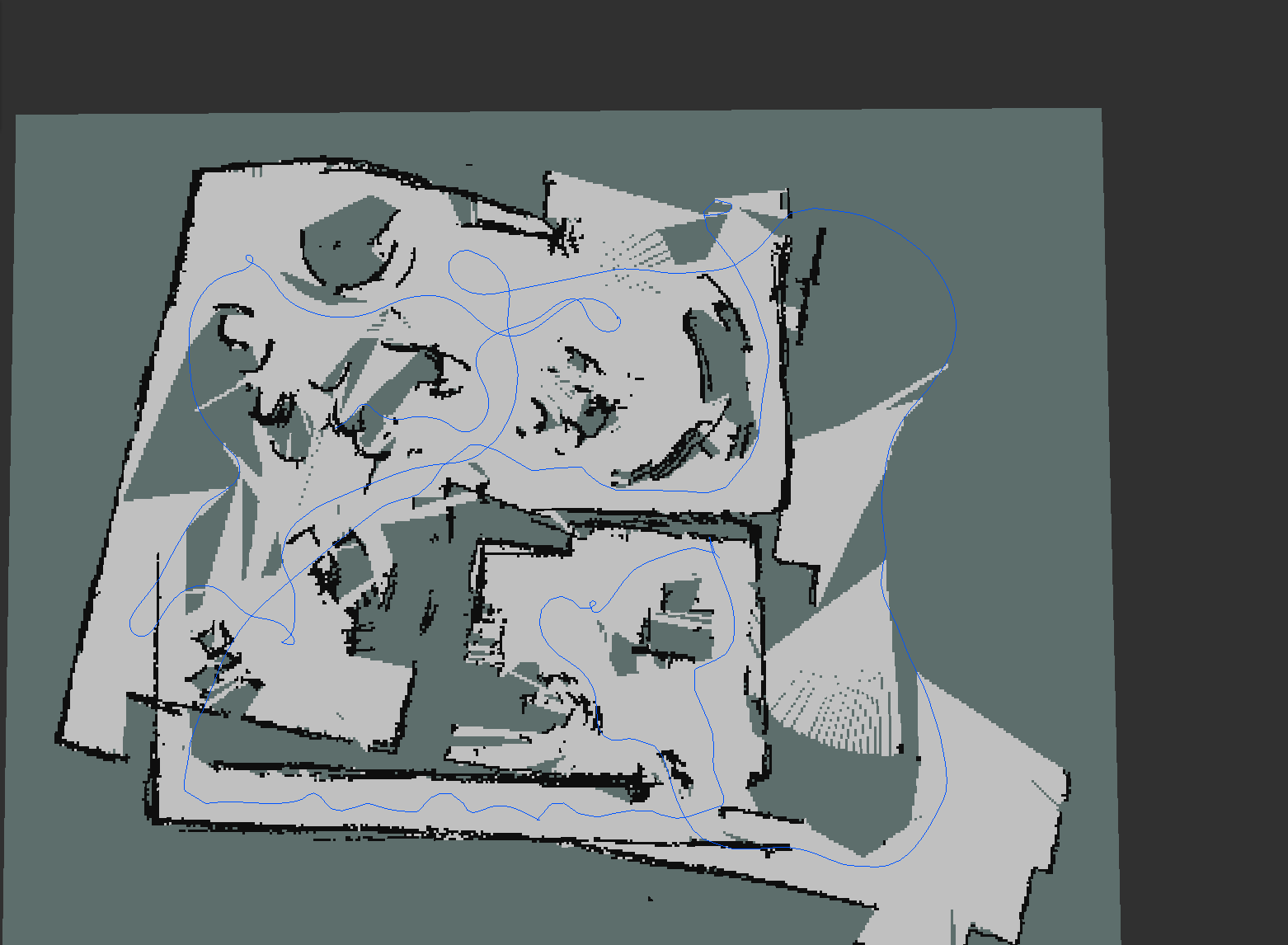

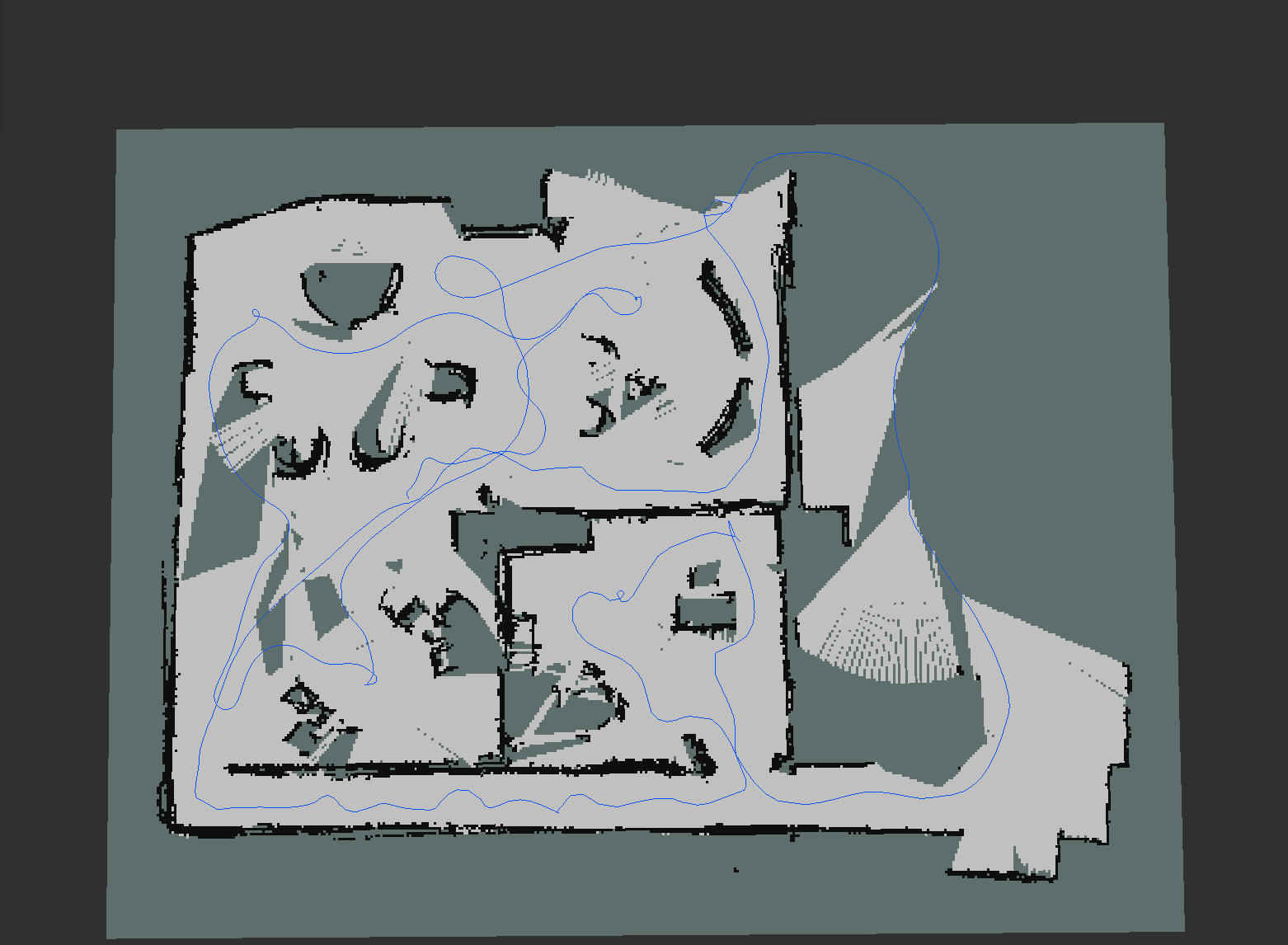

The following pictures show a comparison of the results with and without loop closures:

| Odometry Only | With Loop Closures | |

|---|---|---|

| 2D Map |  |

|

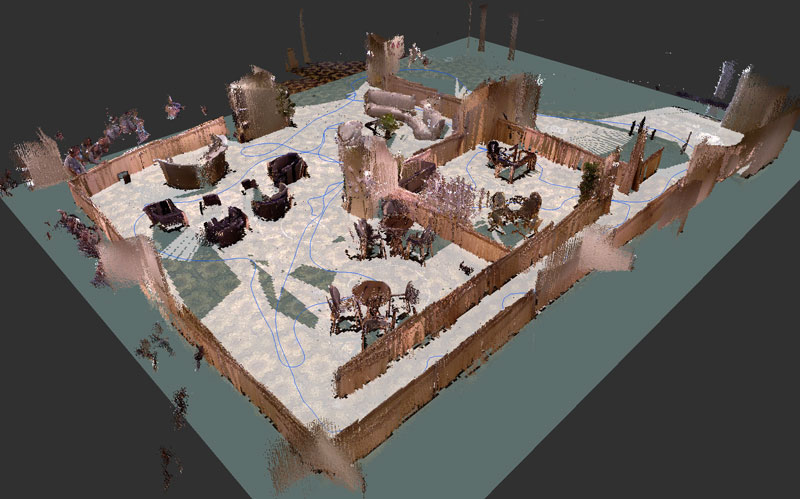

| 3D Cloud |  |

|

| 2D Map + 3D Cloud |

|

|

3D Cloud (download here: cloud.ply)

3D Cloud + 2D Map (saved with map_server: map.pgm and map.yaml)

Conclusion

SV-ROS team has created a great solution for autonomous navigation and they won the IROS 2014 Kinect Navigation Contest. I’m glad that my algorithm RTAB-Map was part of it. It was really nice to meet the team – they were very motivated, enthusiastic and all their efforts were rewarded. This post has provided a technical description of the mapping approach used by the team and shows some promising results using visual features for loop closure detection on a robot equipped only with a Kinect.

tags: c-Research-Innovation, Competition-Challenge, cx-Events, IROS 2014, Mapping-Surveillance, SLAM