Robohub.org

LIDAR vs RADAR: A detailed comparison

I was recently asked about the differences between RADAR and LIDAR. I gave the generic answer: LIDAR has higher resolution and accuracy than RADAR, while RADAR has a longer range and performs better in dusty and smoky conditions. When pressed on why RADAR is less accurate and lower resolution, I sort of mumbled through a response about the wavelength. I did not have a good answer at the time, so this post is my better one.

LIDAR

LIDAR, which is short for Light Detection and Ranging, uses a laser that is emitted and then received back at the sensor. In most of the LIDAR sensors used for mapping (and self-driving vehicles), the time between emission and reception is measured to determine the time of flight (ToF). Knowing the speed of light and half of the round-trip time (since the signal traveled out and back), we can compute how far away the object was that caused the light to bounce back. That value is the range information that is reported by the sensor. LIDARs generally use light in the near-infrared, visible (but not really visible), and UV spectra.
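As a minimal sketch of the range calculation described above, the snippet below converts a round-trip time into a one-way range. The function name and the 200 ns example value are made up for illustration, and the speed of light in vacuum is used as an approximation for air.

```python
# Speed of light in vacuum (m/s); close enough to air for a sketch.
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_range_m(round_trip_time_s: float) -> float:
    """Return the one-way range in meters for a round-trip time of flight."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The light travels out and back, so only half the time counts toward range.
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


if __name__ == "__main__":
    # Hypothetical echo arriving 200 ns after the laser fired.
    print(f"{tof_range_m(200e-9):.2f} m")
```

An echo 200 ns after emission works out to roughly 30 m, which also shows why ToF sensors need fast timing electronics: each nanosecond of timing error is about 15 cm of range.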

There are some sensors that use triangulation to compute the position (instead of ToF). These are usually high-accuracy, high-resolution sensors. They are great for verifying components on an assembly line or inspecting thermal tile damage on the space shuttle. However, that is not the focus of this post.

LIDAR data. The top shows the reflectivity data. The bottom shows the range data with brighter points being farther away.

The laser beam can also be focused to have a small spot size that does not expand much. This small spot size can help give a high resolution. If you have a spinning mirror (which is often the case), then you can fire the laser every degree or so (based on the accuracy of the pointing mechanism) for improved resolution. It is not uncommon to find LIDARs operating at 0.25 degrees of angular resolution.
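To get a feel for what angular resolution means at a distance, here is a rough sketch that turns an angular step into the gap between neighboring laser points. It uses the simple arc-length approximation and ignores beam divergence. The function name and the 20 m range are assumptions chosen for illustration.

```python
import math


def point_spacing_m(range_m: float, angular_resolution_deg: float) -> float:
    """Approximate gap between adjacent beams at a given range (arc length)."""
    return range_m * math.radians(angular_resolution_deg)


if __name__ == "__main__":
    # Compare a 1 degree step with the 0.25 degree step mentioned above.
    for res_deg in (1.0, 0.25):
        gap_cm = point_spacing_m(20.0, res_deg) * 100.0
        print(f"{res_deg} deg at 20 m -> {gap_cm:.1f} cm between points")
```

At 20 m a 1 degree step leaves about 35 cm between points, while 0.25 degrees brings that down to about 9 cm, so a thin object is much less likely to fall between two beams.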

RADAR

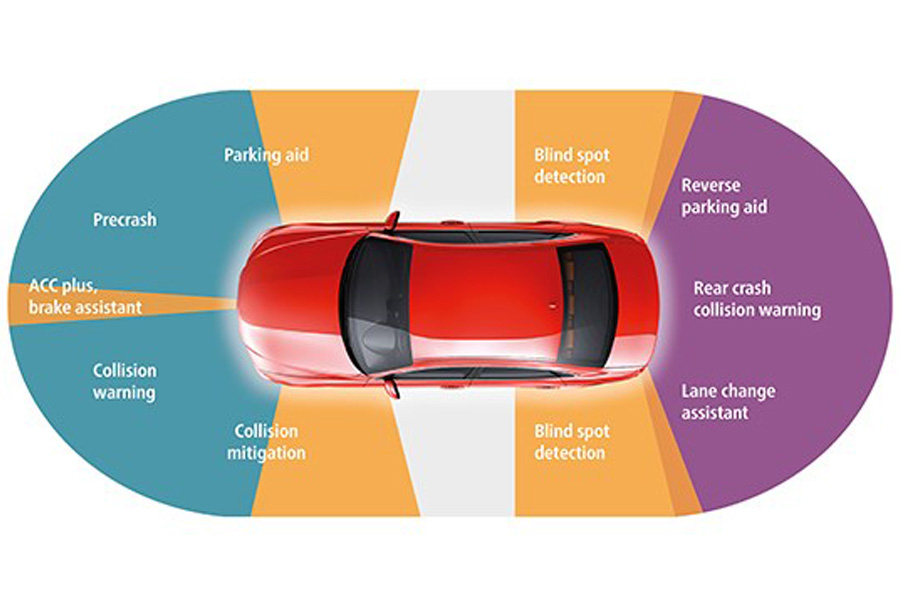

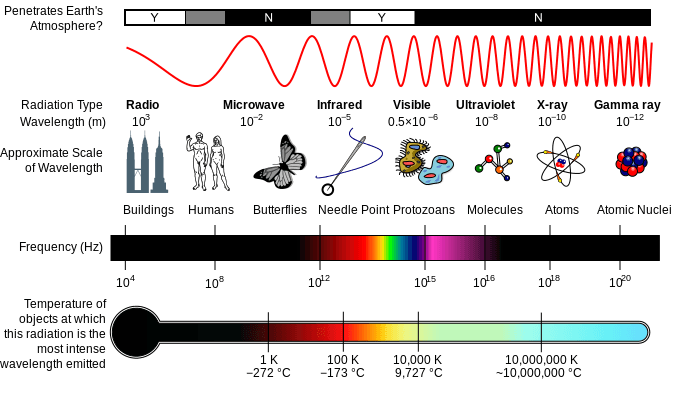

RADAR, which is short for Radio Detection and Ranging, uses radio waves to compute the velocity of and/or range to an object. Radio waves are absorbed less (so they have less attenuation) than light waves when contacting objects, so they can work over a longer distance. As you can see in the image below, the RF waves have a larger wavelength than the LIDAR waves. The downside is that if an object is much smaller than the RF wavelength being used, the object might not reflect back enough energy to be detected. For that reason many RADARs used for obstacle detection are “high frequency” so that the wavelength is shorter (which is why we often use mm-wave in robotics) and they can detect smaller objects. However, since LIDARs have a significantly smaller wavelength, they will still usually have a finer resolution.

Electromagnetic spectrum showing radio waves all the way on the left for RADAR and near-infrared/visible/ultra-violet waves towards the right for LIDAR usage.

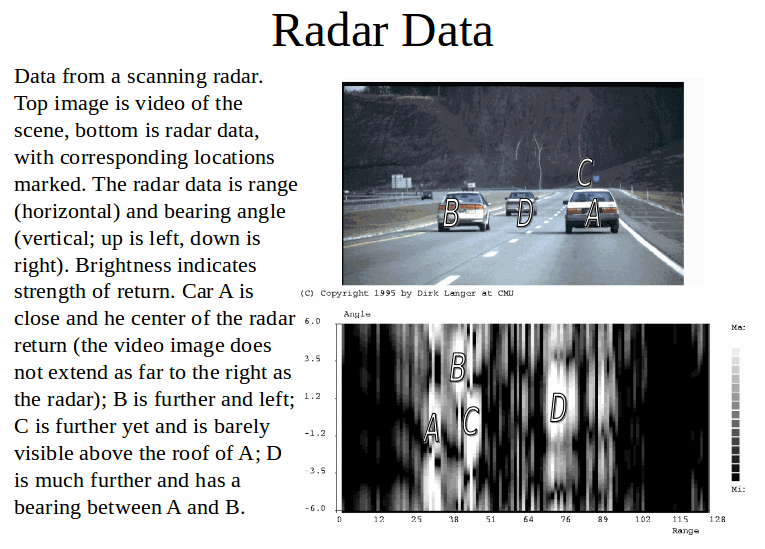
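To put rough numbers on the wavelength gap, the sketch below converts frequency to wavelength with wavelength = c / f. The 24 GHz and 77 GHz RADAR bands and the 905 nm LIDAR wavelength are assumptions picked as typical examples; they are not taken from this post.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def wavelength_m(frequency_hz: float) -> float:
    """Return the free-space wavelength in meters for a given frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return SPEED_OF_LIGHT_M_S / frequency_hz


if __name__ == "__main__":
    lidar_wavelength_m = 905e-9  # assumed near-infrared LIDAR wavelength
    for radar_hz in (24e9, 77e9):  # assumed example RADAR bands
        radar_wavelength_m = wavelength_m(radar_hz)
        ratio = radar_wavelength_m / lidar_wavelength_m
        print(f"{radar_hz / 1e9:.0f} GHz -> {radar_wavelength_m * 1e3:.2f} mm, "
              f"about {ratio:,.0f}x the LIDAR wavelength")
```

Even at 77 GHz the RADAR wavelength is a few millimeters, thousands of times longer than near-infrared light, which is the gap the spectrum image illustrates.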

Most of the RADARs that I have seen have a narrow field of view (tens of degrees) and just return a single value (or they might have up to a few dozen channels, see multi-mode RADAR below) for the range of the detected object. Some systems use multiple channels to also get the angle for the range measurement, although the angle will not be as high resolution as with most LIDARs. There are also some RADARs on the market that scan to get multiple measurements. The two approaches are a spinning antenna (such as what you see at airports or on ships) or electronic “spinning”, where a device uses multiple internal antennas with no moving parts. More advanced (newer) RADARs can do things like track multiple objects. In many cases they will not actually return the points that outline the objects (like a LIDAR), but will return a range, bearing, and velocity to an estimated centroid of the detected item. If multiple objects are near each other, the sensor might confuse them as being one large object and return one centroid range [Here is the source and a good reference to read].

Using the Doppler frequency shift, the velocity of an object can also be determined easily and with relatively little computational effort. If the RADAR sensor and the detected object are both moving, then you get the relative velocity between the two.
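The Doppler relationship is simple enough to sketch in a few lines. For a RADAR that transmits and receives from the same place, the relative (radial) velocity is approximately v = f_d * c / (2 * f_tx), where f_d is the measured shift and f_tx is the transmit frequency. The function name, the 77 GHz carrier, and the 10 kHz shift below are assumptions for illustration only.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def radial_velocity_m_s(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Relative radial velocity from a Doppler shift.

    Positive values mean the target is closing on the sensor.
    The factor of 2 is there because the wave is shifted on the way
    out and again on the way back.
    """
    if carrier_hz <= 0:
        raise ValueError("carrier frequency must be positive")
    return doppler_shift_hz * SPEED_OF_LIGHT_M_S / (2.0 * carrier_hz)


if __name__ == "__main__":
    # Hypothetical 10 kHz shift measured by a 77 GHz sensor.
    v = radial_velocity_m_s(10e3, 77e9)
    print(f"{v:.1f} m/s relative velocity")
```

With these example numbers the result is about 19.5 m/s. Note that this is only the component of velocity along the line between the sensor and the object.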

With RADAR there are commonly two operating modes:

1. Time of Flight – This operates similarly to the LIDAR sensor above, however it uses radio wave pulses for the Time of Flight calculations. Since the sensor is pulsed, it knows when each pulse was sent, so computing the range can be easier than with the continuous wave sensors (described below). The resolution of the sensor can be adjusted by changing the pulse width and the length of time you listen for a response (a ping back). These sensors often have fixed antennas, leading to small operating fields of view (compared to LIDAR).

There are some systems that combine multiple ToF radio signals with different pulse widths into one package. This allows various ranges to be detected with higher accuracy. These are sometimes called multi-mode RADAR.

2. Continuous Wave – This approach frequency-modulates a continuous wave (FMCW) and then compares the frequency of the reflected signal to the transmitted signal in order to determine a frequency shift. That frequency shift can be used to determine the range to the object that reflected it. The larger the shift, the farther the object is from the sensor (within some bounds). Computing the frequency shift and the corresponding range is computationally easier than ToF, plus the electronics to do so are simpler and cheaper. This makes frequency-modulated continuous wave systems popular. Also, since separate transmit and receive antennas are often used, this approach can transmit and receive at the same time, unlike the pulsed ToF approach, which needs to transmit and then wait for a response. This feature and the simple electronics can make FMCW RADAR very fast at detecting objects.

There is another version of Continuous Wave RADAR where the wave is not modulated. These systems are cheap and good for quickly detecting speed using the Doppler effect, however they cannot determine range. They are often used by police to detect vehicle speed, where the range is not important.
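The two ranging modes above can be sketched with textbook relationships. For a pulsed sensor, the range resolution is roughly c * pulse_width / 2 and the farthest range you can hear back from is c * listen_time / 2. For an FMCW sensor with a linear sweep and a stationary target, the range is c * f_shift * sweep_time / (2 * bandwidth). All function names and example values are assumptions for illustration, and the FMCW formula ignores any extra Doppler shift from a moving target.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def pulse_range_resolution_m(pulse_width_s: float) -> float:
    """Smallest separation at which two echoes of a pulse can be told apart."""
    return SPEED_OF_LIGHT_M_S * pulse_width_s / 2.0


def max_listen_range_m(listen_time_s: float) -> float:
    """Farthest range whose echo returns within the listening window."""
    return SPEED_OF_LIGHT_M_S * listen_time_s / 2.0


def fmcw_range_m(frequency_shift_hz: float,
                 sweep_bandwidth_hz: float,
                 sweep_time_s: float) -> float:
    """Range from the transmit/receive frequency difference of a linear sweep.

    Assumes a stationary target; a moving target adds a Doppler term
    that this sketch does not separate out.
    """
    if sweep_bandwidth_hz <= 0 or sweep_time_s <= 0:
        raise ValueError("sweep bandwidth and time must be positive")
    sweep_rate_hz_per_s = sweep_bandwidth_hz / sweep_time_s
    round_trip_time_s = frequency_shift_hz / sweep_rate_hz_per_s
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


if __name__ == "__main__":
    # Hypothetical pulsed sensor: 10 ns pulses, 1 microsecond listening window.
    print(f"pulse resolution: {pulse_range_resolution_m(10e-9):.2f} m")
    print(f"max listen range: {max_listen_range_m(1e-6):.1f} m")
    # Hypothetical FMCW sensor: 1 GHz sweep over 50 microseconds, 1 MHz shift.
    print(f"FMCW range: {fmcw_range_m(1e6, 1e9, 50e-6):.2f} m")
```

With these made-up numbers, a 10 ns pulse gives about 1.5 m of range resolution, and a 1 MHz shift on the example sweep corresponds to about 7.5 m. Shorter pulses improve resolution, and a larger shift means a farther target, matching the descriptions above.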

SONAR

Unrelated, I should note that SONAR, or Sound Navigation and Ranging, can work in both of the same modes as RADAR. The wavelengths used are typically even larger than those of mm-wave RADAR. Because SONAR uses sound rather than electromagnetic waves, it does not actually appear on the spectrum image shown before.

I should point out that there are cool imaging SONAR sensors. The general idea is that you can shape the sensing beam so that it is tall and narrow, making the horizontal resolution very fine (<1 degree) while the vertical resolution is coarser (10+ degrees). You can then put many of these beams near each other in a sensor package. There are similar packages that do this with small-wavelength RADAR.

Cost

LIDAR sensors tend to cost more than RADAR sensors for several reasons:

- LIDAR using ToF needs high speed electronics that cost more.

- LIDAR sensors need CCD receivers, optics, motors, and lasers to generate and receive the waves used. RADAR only needs some stationary antennas.

- LIDAR sensors have spinning parts for scanning. That requires motors and encoders. RADAR only needs some stationary antennas. (I know this is sort of similar to the line above).

Computationally Speaking

RADAR sensors tend to generate a lot less data as they just return a single point, or a few dozen points. When the sensor is multi-channel it is often just returning a range/velocity to a few centroid(ish) objects. The LIDAR sensors are sending lots of data about each individual laser point of range data. It is then up to the user to make that data useful. With the RADAR you have a simple number, but that number might not be the best. With LIDAR it depends on the roboticist to generate algorithms to detect the various objects and discern what is being viewed by the sensor.

If your goal is to detect a car in front of you (or driving towards you) and get its velocity, RADAR can be great. If you are trying to determine the precise location of an item, generate a surface map, or find a small fence post, a LIDAR might do better. Just remember that if you are in dust or rain, the LIDAR might return a cloud of points right near the sensor (as it reads all of those particles/drops), while the RADAR might do much better.
