Robohub.org

Self-driving cars, networks, and the companies and people that are stimulating development

Intel is establishing an autonomous driving division; hacker George Hotz is open-sourcing his self-driving software in a bid to become a network company; LiDAR and distancing devices are changing. What does it all mean?

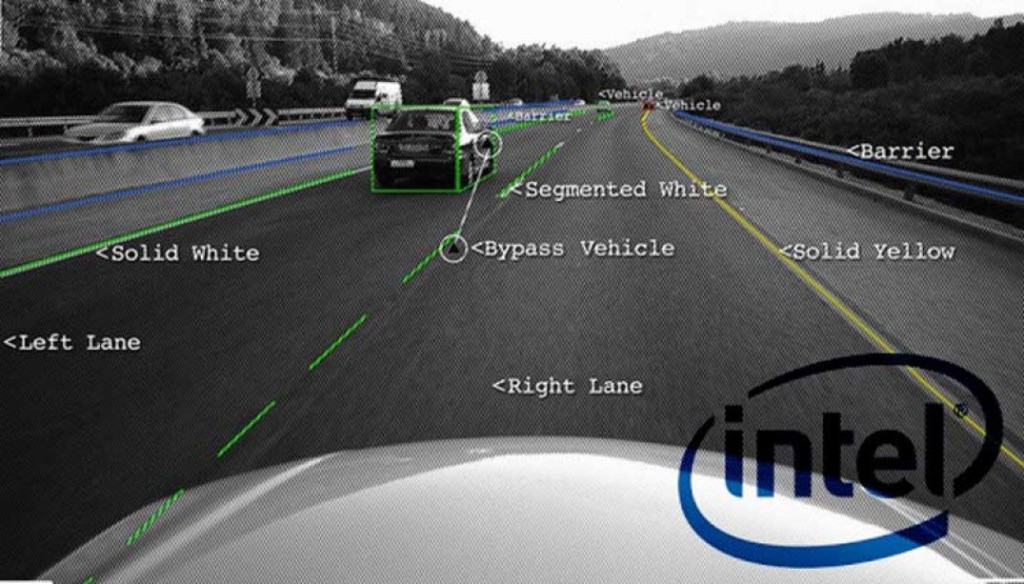

Intel

Semiconductor giant Intel, in a strategic move to become THE supplier of chips to the fast-emerging self-driving industry, is reorganizing and creating a new autonomous driving division. Fortune magazine said:

“The decision is a bet not just on a future of connected devices; it’s also a wager that vehicles will be the centerpiece or at least a core component in the world of IoT. Intel CEO Brian Krzanich has predicted that by 2020, the Internet of Things will include 50 billion devices and each user of those gadgets will generate 1.5 gigabytes of data every day. But, he has said, the average autonomous car will create about 40 gigabytes of data each minute. That’s a lot of data to process and who better to handle the load? Intel.”
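To put Krzanich's figures side by side, here is a back-of-the-envelope sketch in Python. It uses only the two rates from the quote (1.5 GB per IoT user per day, 40 GB per autonomous car per minute). The one hour of driving per day is an illustrative assumption, not an Intel figure, and the helper names are hypothetical.

```python
# Back-of-the-envelope comparison of the data figures quoted from Intel's CEO.
# The per-user and per-car rates come from the quote; the daily driving time
# used below is an illustrative assumption, not an Intel figure.

GB_PER_USER_PER_DAY = 1.5      # IoT gadget user, per day (quoted)
GB_PER_CAR_PER_MINUTE = 40.0   # autonomous car, per minute of operation (quoted)


def car_data_per_day(minutes_driven: float) -> float:
    """Gigabytes one autonomous car produces in a day at the quoted rate."""
    return GB_PER_CAR_PER_MINUTE * minutes_driven


def user_equivalents(car_gb: float) -> float:
    """How many IoT users' daily data a given car output corresponds to."""
    return car_gb / GB_PER_USER_PER_DAY


if __name__ == "__main__":
    minutes = 60  # assumption: one hour of driving per day
    daily_gb = car_data_per_day(minutes)
    print(f"One car, {minutes} min/day: {daily_gb:,.0f} GB/day")
    print(f"Equivalent to about {user_equivalents(daily_gb):,.0f} IoT users")
    # A single minute of driving already exceeds one user's daily output.
    one_minute = user_equivalents(GB_PER_CAR_PER_MINUTE)
    print(f"One minute of driving = {one_minute:.1f} users' daily data")
```

Under that one-hour assumption, a single car produces 2,400 GB a day, roughly what 1,600 of the IoT users in the quote would generate, and even one minute of driving equals about 27 users' daily data.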

In recent months, Intel has made key acquisitions and formed strategic partnerships in pursuit of its autonomous driving division's plans:

- A partnership with German automaker BMW and Israeli Mobileye to produce self-driving cars for city streets. The companies plan to develop the technology as an open platform that can be used by other automakers or ride-sharing companies.

- Delphi and Mobileye announced they will use Intel’s “system on chip” for autonomous vehicle systems.

- Intel acquired three companies with products and plans related to self-driving cars:

  - Yogitech is a semiconductor design firm that specializes in adding safety functions to chips used in self-driving cars and other autonomous devices.

  - Arynga, acquired through Intel's Wind River unit, develops software that enables vehicles to receive over-the-air updates, a capability automaker Tesla uses frequently to enhance performance and fix security bugs in its cars.

  - Itseez specializes in machine vision technology that lets computers see and understand their surroundings, a necessity for autonomous vehicles, which must be able to perceive the world around them.

Comma.ai and George Hotz

In response to a recent letter from the National Highway Traffic Safety Administration (NHTSA), which led Hotz to cancel his self-driving aftermarket kit, and as a step toward becoming a network provider, Hotz announced plans to open up his software code and hardware designs to the world. On November 30th, all of the code and plans were posted on GitHub.

Hotz is encouraging people to build their own self-driving kits. His software enables adaptive cruise control and lane-keeping assist for certain Honda and Acura models.

Hotz told Fortune magazine:

“The future is not hardware. If you look at a lot of companies’ business models now it has a lot more to do with ownership of networks than hardware sales. We want to make this as easy as possible for as many people as possible.”

He noted that the company even redesigned parts of the Comma Neo to make it easier for hobbyists to build.

nuTonomy

nuTonomy, an MIT spin-off and autonomous vehicle systems startup that has been testing its technology in Singapore, announced it will begin testing its self-driving Renault Zoe electric vehicles in an industrial park in South Boston in the next few weeks. Although the area is public, the nature of the industrial park means nuTonomy's vehicles will still be somewhat removed from normal vehicle and pedestrian traffic.

Oryx Vision

Oryx Vision, an Israeli startup that recently raised $17 million in a Series A funding round, is showing off its new coherent optical radar. The system uses a laser to illuminate the road ahead but, like radar, treats the reflected signal as a wave rather than as a particle. An article in IEEE Spectrum explained:

“The laser in question is a long-wave infrared laser, also called a terahertz laser because of the frequency at which it operates. Because human eyes cannot focus light at this frequency, Oryx can use higher power levels than can lidar designers. Long-wave infrared light is also poorly absorbed by water and represents only a tiny fraction of the solar radiation that reaches Earth. This means the system should not be blinded by fog or direct sunlight, as lidar systems and cameras can be.”
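The quote calls a long-wave infrared laser a "terahertz laser." The relation f = c / λ shows where the label comes from. The sketch below assumes the commonly cited long-wave infrared band of roughly 8 to 15 micrometres; the article does not give Oryx's actual wavelength, and `frequency_thz` is a hypothetical helper written for this illustration.

```python
# Convert a laser wavelength to its optical frequency with f = c / wavelength.
# The 8-15 micrometre range is an assumed, commonly cited long-wave infrared
# band; the article does not state Oryx's actual wavelength.

SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by SI definition


def frequency_thz(wavelength_um: float) -> float:
    """Return the frequency in terahertz for a wavelength in micrometres."""
    wavelength_m = wavelength_um * 1e-6
    return SPEED_OF_LIGHT_M_PER_S / wavelength_m / 1e12


if __name__ == "__main__":
    for wl in (8.0, 10.0, 15.0):
        print(f"{wl:4.1f} um -> {frequency_thz(wl):5.1f} THz")
```

Every wavelength in that assumed band maps to roughly 20 to 37 THz, i.e. tens of terahertz, which is why "long-wave infrared" and "terahertz" are used interchangeably in the quote.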

One of the potential cost savings of Oryx’s technology comes from the fact that its laser does not need to be steered with mechanical mirrors or a phased array in order to capture a scene. Simple optics spread the laser beam to illuminate a wide swathe in front of the vehicle. (Oryx would not say what the system’s field of view will be, but if it is not 360 degrees like rooftop lidars, a car will need multiple Oryx units facing in different directions.)

The clever bit, and what has prevented anyone from building a terahertz lidar before now, is what happens when the light bounces back to the sensor. A second set of optics directs the incoming light onto a large number of microscopic rectifying nanoantennas. These are what Oryx's co-founder, David Ben-Bassat, has spent the past six years developing.

“Today, radars can see to 150 or 200 meters, but they don’t have enough resolution. Lidar provides great resolution but is limited in range to about 60 meters, and to as little as 30 meters in direct sunlight,” says Oryx CEO Rani Wellingstein. He expects Oryx’s coherent optical radar to accurately locate debris in the road at 60 meters, pedestrians at 100 meters, and motorcycles at 150 meters, significantly better than the performance of today’s sensor systems.

Regarding other industry improvements and inventions, the IEEE Spectrum article also said:

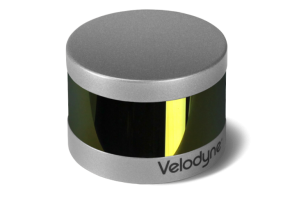

“But don’t write off lidar just yet. Following a $150 million investment by Ford and Chinese search giant Baidu this summer, Velodyne expects an ‘exponential increase in lidar sensor deployments.’ The cost of the technology is still dropping, with Velodyne estimating that one of its newer small-form lidars will cost just $500 when made at scale [at present, the price on the website is $7,999 for the new PUCK shown on the right].

Solid-state lidars are also on the horizon, with manufacturers including Quanergy, Innoluce, and another Israeli start-up, Innoviz, hinting at sub-$100 devices. Osram recently said that it could have a solid-state automotive lidar on the market as soon as 2018, with an eventual target price of under $50. Its credit card–size lidar may be able to detect pedestrians at distances up to 70 meters.

That’s not quite as good as Oryx promises but is probably fine for all but the very fastest highway driving. The window for disrupting lidar’s grip on autonomous vehicles is closing fast.”
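One way to read the "fastest highway driving" caveat is to ask how much warning time a given detection range buys: time = range / speed. The sketch below uses the ranges quoted in this article; the three speeds are illustrative assumptions, and it deliberately ignores reaction time, braking distance, and processing latency, so it is a rough comparison rather than a safety analysis. The names are hypothetical.

```python
# Rough "warning time" a sensor's detection range buys at a given speed:
# time = range / speed. Ranges are the figures quoted in the article; the
# speeds are illustrative assumptions. Reaction time, braking distance and
# processing latency are ignored.

SENSOR_RANGES_M = {
    "lidar, direct sunlight": 30,
    "lidar, typical": 60,
    "Osram solid-state lidar (pedestrians)": 70,
    "Oryx claim (pedestrians)": 100,
}

SPEEDS_KMH = (50, 100, 130)  # assumed urban, highway and fast-highway speeds


def warning_time_s(range_m: float, speed_kmh: float) -> float:
    """Seconds until the vehicle reaches an object first detected at range_m."""
    speed_m_per_s = speed_kmh / 3.6  # km/h -> m/s
    return range_m / speed_m_per_s


if __name__ == "__main__":
    for name, rng in SENSOR_RANGES_M.items():
        times = ", ".join(
            f"{v} km/h: {warning_time_s(rng, v):.1f} s" for v in SPEEDS_KMH
        )
        print(f"{name:40s} {times}")
```

At an assumed 130 km/h, Osram's 70 m pedestrian range leaves just under two seconds before the car reaches the object, versus about 2.8 seconds for Oryx's claimed 100 m.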

What does it all mean?

“Fast-emerging” may be a catch-all phrase, but it seems appropriate to the many parts of the self-driving vehicle marketplace. Multiple stakeholders are about to be disrupted, as I wrote in April:

“The stakeholders are numerous and the effects can be devastating. Think secretaries, airline reservationists, and all the others disrupted during the emergence of the digital era. The transition to self-driving vehicles and car-sharing systems is likely to cause similar worldwide disruptions.

Consider the insurance industry. If accident rates go down by 90%, as many are predicting, premiums will need to go down too because the reserves for payouts, which are built into the rates, will go down as well. Also, there will likely be fewer insured drivers and car owners, thereby shrinking the pool of insured people.

Consider hotels and motels and their real estate values and employees – particularly those along the major highways. Why stop at a motel when you can snooze comfortably in a long-haul high-speed driverless car? This will likely affect short-haul airlines and airline employees as well.

Think about professional drivers: truckers, taxis, limos, buses, shuttles. It is expected that many will be replaced by on-demand point-to-point self-driving devices.”

The list of stakeholders also extends to the auto manufacturing industry and all the Tier 1, 2, 3 and 4 component providers and wannabes. As the needs of self-driving vehicles become clear (for example, the need for low-cost distance-sensing vision systems), new products will be developed and new vendors could replace old ones; hence the posturing by Velodyne, LeddarTech, Quanergy, Bosch, Valeo and all the other LiDAR providers.

As the technologies emerge, it becomes clear that vehicles will be the core component in a larger connected world, streaming massive amounts of data to be analyzed in ways we haven’t even fathomed yet, hence Intel’s new division and the new chips from Nvidia, Qualcomm and others. It’s a fast-emerging marketplace on multiple fronts.

Times, and our definition of cars, are changing day by day and fast emerging: a true moving target!

tags: Autonomous Cars, autonomous vehicles, c-Business-Finance, comma.ai, Frank Tobe, Intel, self-driving cars, The Robot Report