Robohub.org

355

SLAM fused with Satellite Imagery #ICRA2022 with John McConnell

Autonomous underwater vehicles face challenging environments where GPS navigation is rarely possible. John McConnell discusses his research, presented at ICRA 2022, into fusing overhead imagery with traditional SLAM algorithms. The result is more robust localization and mapping, with less of the drift commonly seen in SLAM algorithms.

Satellite imagery can be obtained for free or at low cost through Google or Mapbox, which makes the framework easy for companies in industry to deploy.

Links

- Download mp3 (12.2 MB)

- Sonar SLAM opensource repo

- Research Paper

- Subscribe to Robohub using iTunes, RSS, or Spotify

- Support us on Patreon

[00:00:00] I’m John McConnell. This is overhead image factors for underwater sonar-based SLAM. So first let’s talk about SLAM. SLAM allows us to estimate the vehicle state and map as we go. However, as the mission progresses, drift will accumulate. We need loop closures to minimize this drift. However, these are trajectory dependent and often ambiguous.
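A minimal sketch of why drift accumulates, assuming a vehicle that dead-reckons along a straight line while a small, constant heading bias corrupts every step. The function names, step length, and bias value are illustrative assumptions, not values from the talk.

```python
import math

def dead_reckon(steps, step_len=1.0, heading_bias_rad=0.0):
    """Integrate straight-line motion while a constant heading bias corrupts each step."""
    x, y, heading = 0.0, 0.0, 0.0
    path = [(x, y)]
    for _ in range(steps):
        heading += heading_bias_rad  # small per-step error that is never corrected
        x += step_len * math.cos(heading)
        y += step_len * math.sin(heading)
        path.append((x, y))
    return path

def position_error(true_path, est_path):
    """Distance between the true and estimated position at every step."""
    return [math.hypot(tx - ex, ty - ey)
            for (tx, ty), (ex, ey) in zip(true_path, est_path)]

truth = dead_reckon(100)
estimate = dead_reckon(100, heading_bias_rad=math.radians(0.1))  # hypothetical bias
errors = position_error(truth, estimate)
print(f"error after 10 steps: {errors[10]:.2f} m, after 100 steps: {errors[100]:.2f} m")
```

The error keeps growing with distance travelled because nothing ties the estimate back to the world, which is the gap that loop closures and, in this work, overhead images are meant to fill.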

So the research question in this work is: how can we use overhead images to minimize the drift in our sonar-based SLAM system?

So first, overhead images are free or very low cost from vendors like Mapbox and Google, and may come in at a similar resolution to our sonar sensor, at five to 10 centimeters.

Some key challenges for use: overhead images are in RGB; sonar is not. Uh, overhead images also come in a, uh, top-down view. Our sonar images are more of a water-level view. [00:01:00] Uh, and obviously, uh, you know, the vessels may be in different locations between image capture time and mission execution time.

Okay. So what do we provide to the vehicle a priori? We have a functional SLAM solution, albeit with drift, and initial GPS. And then this overhead image segmentation, shown in green. This identifies the structure that’s going to be useful as an aid to navigation in this algorithm.

So conceptually, we’re going to start at this red dot. We’re going to move along some trajectory to our current state. We’re going to say, "What should I see?" in terms of the green segmentation. We can compare that to what we actually see in the sonar imagery, resolve the differences in appearance, and then find the transformation between those two data structures.
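The "what should I see?" step can be sketched as follows, assuming the segmented structure is available as a list of 2D world points and the sonar has a simple range and field-of-view limit. The function name `candidate_view`, the range, and the field of view are hypothetical; the talk does not give these details.

```python
import math

def candidate_view(structure_pts, pose, max_range=30.0, fov_deg=130.0):
    """Return structure points visible from `pose`, expressed in the vehicle frame.

    structure_pts: (x, y) world points taken from the segmented overhead image.
    pose: (x, y, heading_rad) as estimated by SLAM.
    max_range, fov_deg: hypothetical sonar limits, not values from the talk.
    """
    px, py, th = pose
    c, s = math.cos(th), math.sin(th)
    half_fov = math.radians(fov_deg) / 2.0
    visible = []
    for wx, wy in structure_pts:
        off_x, off_y = wx - px, wy - py
        # Rotate the world offset into the vehicle frame (x forward, y to the left).
        vx = c * off_x + s * off_y
        vy = -s * off_x + c * off_y
        rng = math.hypot(vx, vy)
        bearing = math.atan2(vy, vx)
        if 0.0 < rng <= max_range and abs(bearing) <= half_fov:
            visible.append((vx, vy))
    return visible

# Toy structure: two nearby points ahead, one behind, one far out of range.
pier = [(10.0, 0.0), (10.0, 2.0), (-10.0, 0.0), (100.0, 0.0)]
print(candidate_view(pier, pose=(0.0, 0.0, 0.0)))
```

Because the pose fed in is the drifting SLAM estimate, the predicted view will be slightly misaligned with the real sonar image, and that misalignment is what the later steps try to measure.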

Okay. So top left, green with black background, we have the candidate overhead image, which is just what we should [00:02:00] see at our current state. We have a sonar image from the same time step. We’re going to take those and push them together into U-Net. The output of U-Net is shown here in magenta with black background. We can use the output of U-Net, which is the candidate overhead image transformed into the sonar image frame, with the original candidate overhead image in ICP to find the transformation between those two.

We can then roll that into our factor graph.
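A minimal 2D ICP sketch, assuming both images have already been reduced to point sets (the talk does not say how). It uses brute-force nearest neighbours and a closed-form rigid alignment; the names `best_rigid_2d`, `transform`, and `icp_2d`, and the toy L-shaped structure, are illustrative only.

```python
import math

def best_rigid_2d(src, dst):
    """Closed-form least-squares rotation and translation mapping paired src onto dst."""
    n = len(src)
    mean_sx = sum(p[0] for p in src) / n
    mean_sy = sum(p[1] for p in src) / n
    mean_dx = sum(q[0] for q in dst) / n
    mean_dy = sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay = ax - mean_sx, ay - mean_sy
        bx, by = bx - mean_dx, by - mean_dy
        dot += ax * bx + ay * by      # accumulates |a||b| cos(angle)
        cross += ax * by - ay * bx    # accumulates |a||b| sin(angle)
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    tx = mean_dx - (c * mean_sx - s * mean_sy)
    ty = mean_dy - (s * mean_sx + c * mean_sy)
    return theta, tx, ty

def transform(points, theta, tx, ty):
    """Rotate by theta, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

def icp_2d(src, dst, iterations=20):
    """Align src to dst; returns the total (theta, tx, ty) from src to dst."""
    theta, tx, ty = 0.0, 0.0, 0.0
    for _ in range(iterations):
        moved = transform(src, theta, tx, ty)
        # Brute-force nearest neighbour in dst for every moved source point.
        matched = [min(dst, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
                   for p in moved]
        # Re-fit from the original src so the result is the total transform.
        theta, tx, ty = best_rigid_2d(src, matched)
    return theta, tx, ty

# Hypothetical L-shaped structure and a slightly shifted copy of it.
candidate = [(2.0 * i, 0.0) for i in range(5)] + [(0.0, 2.0 * j) for j in range(1, 5)]
observed = transform(candidate, 0.05, 0.3, -0.2)
theta, tx, ty = icp_2d(candidate, observed)
print(f"theta={theta:.3f} rad, tx={tx:.3f}, ty={ty:.3f}")
```

The recovered rotation and translation are the kind of relative measurement that can be attached to the trajectory estimate as an extra constraint.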

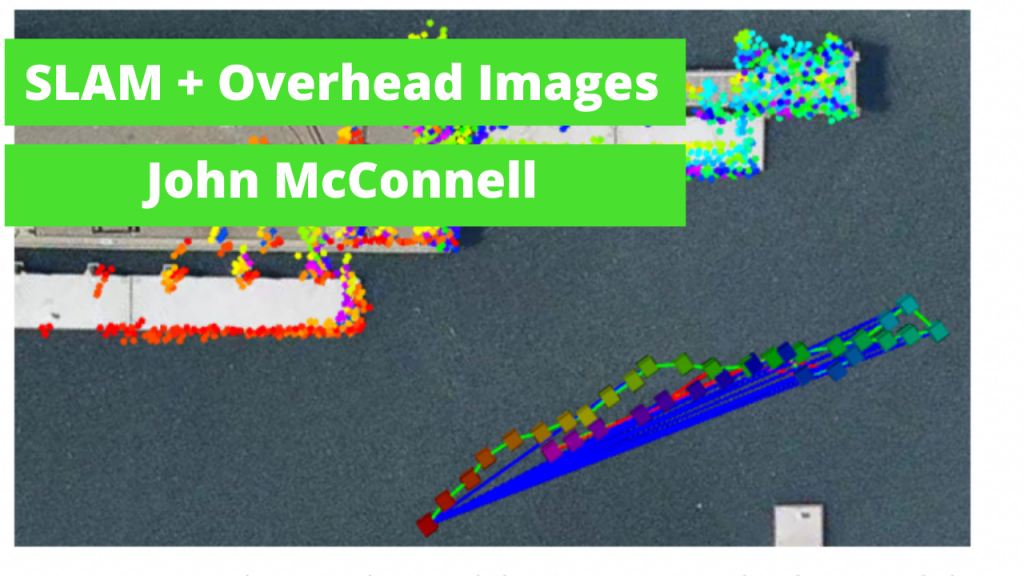

On the left, we have an example of a SLAM mission without overhead image factors. Green lines are odometry; red lines are loop closures. You can see, compared to the gray overhead image mask, drift is heavily evident. When we add the blue lines on the right-hand side, the overhead image factors, you can see we drastically reduce that mission drift compared to the gray overhead image mask.
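A toy, one-dimensional sketch of how extra factors can pull a drifting trajectory back. It assumes each overhead image factor acts like a direct position measurement on a pose, which is a simplification and not necessarily the factor used in the paper. The function `solve_chain`, the weights, and the 5% odometry bias are hypothetical.

```python
def solve_chain(odom, absolute, w_odom=1.0, w_abs=10.0):
    """Least-squares 1D pose chain with x_0 = 0 fixed.

    odom[k]: measured step from pose k to pose k + 1.
    absolute: {pose_index: measured_position}, standing in for overhead image factors.
    Returns positions [x_0, ..., x_N]. Solves the tridiagonal normal equations.
    """
    n = len(odom)
    diag = [0.0] * n
    rhs = [0.0] * n
    for k in range(n):  # unknown x_{k+1} is stored at index k
        diag[k] += w_odom
        rhs[k] += w_odom * odom[k]
        if k + 1 < n:  # the next odometry factor also touches this pose
            diag[k] += w_odom
            rhs[k] -= w_odom * odom[k + 1]
    for i, z in absolute.items():
        diag[i - 1] += w_abs
        rhs[i - 1] += w_abs * z
    off = -w_odom  # coupling between neighbouring poses
    for k in range(1, n):  # forward elimination (Thomas algorithm)
        m = off / diag[k - 1]
        diag[k] -= m * off
        rhs[k] -= m * rhs[k - 1]
    x = [0.0] * n
    x[-1] = rhs[-1] / diag[-1]
    for k in range(n - 2, -1, -1):  # back substitution
        x[k] = (rhs[k] - off * x[k + 1]) / diag[k]
    return [0.0] + x

true_step, n_steps = 1.0, 40
odom = [1.05 * true_step] * n_steps            # odometry with a 5% scale bias
overhead = {i: i * true_step for i in (10, 20, 30, 40)}

odom_only = solve_chain(odom, {})
fused = solve_chain(odom, overhead)
print(f"final error, odometry only: {abs(odom_only[-1] - 40.0):.2f} m")
print(f"final error, with extra factors: {abs(fused[-1] - 40.0):.2f} m")
```

With odometry alone the solution is just the running sum of the biased steps; adding a handful of constraints tied to the map spreads the correction along the whole chain.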

So to highlight the [00:03:00] novelty of our framework, we’re able to resolve the differences between the overhead images and the sonar images and roll these overhead image factors into our already functioning SLAM system, reducing the mission drift. We’re also able to demonstrate in the paper that we can train in simulation and function on real-world data.

Abate: Can you tell me a little bit about your presentation just now?

John McConnell: Sure. So we’re using overhead images, which are satellite images or images captured from a low-flying UAV, as an assist for an underwater vehicle using a sonar-based SLAM solution, uh, to reduce its drift.

Abate: Yeah. So this, you said this is for unmanned surface vehicles or underwater vehicles?

John McConnell: This is for unmanned underwater vehicles.

Abate: Okay. All right. Is it limited to unmanned underwater vehicles? Why not also use it for…?

John McConnell: You can use it for any system you’d want, um, that’s using sonar as the primary perceptual input, uh, and that’s also accumulating drift. [00:04:00]

The reason we focus on unmanned underwater vehicles is because GPS doesn’t work underwater, right? So what we’re doing is using these overhead images as a GPS proxy, basically to take a stable SLAM solution that’s drifting with time, it’s getting worse with time, and we take a look at these overhead images. We’re using, uh, a CNN, a convolutional neural network, to work out what exactly is in our sonar imagery and our overhead imagery, to fuse them and reduce the SLAM drift.

Abate: Yeah. So basically, as you’re doing your SLAM, it’s pretty good at the piece-to-piece, uh, localization, but then it drifts over time, and this is allowing you to stay locked in place.

John McConnell: Yeah. We can just say, you know, keep it on the rails, right? Yeah.

Abate: So, and then the, um, so the imagery that you’re getting, the satellite imagery, where are you getting this from?

John McConnell: Yeah. So this is free or very low cost from [00:05:00] vendors like Mapbox, Google, and I’m sure there’s other ones out there. And if, uh, you know, you were working in a military application, you’d have access to some even better, yeah, satellite imagery. Uh, or you could use, you know, uh, a DJI Phantom, put it up over the survey area before you go out on it. So it’s, it’s pretty flexible with regard to the source of the overhead imagery, but we do segment it. Uh, so we identify the structure that we care about and the structure that we do not care about.

Abate: Yeah. So maybe for a high cost application, then you can actually get a drone, go out there and map it yourself.

John McConnell: Yeah. Or yeah. Or task a satellite. Yeah.

Abate: Or task a satellite. Yeah, so, and, um, well, so what’s the frequency that, say, the satellite images are generally updating at? And then, is this something that you think about as you’re locating your SLAM algorithm on the satellite imagery?

John McConnell: Yeah. So your question is really, if I have my, uh, satellite image or my overhead image of the environment, right. And I take that picture [00:06:00] on a Tuesday. But I’m gonna go do my work on Friday, right. Have things changed?

And, right, the answer is absolutely yes. Right. We’re working in a littoral environment, so nearshore environments, and we test primarily in marinas.

So when you take that overhead image, you have a smattering of small boats, right? Those boats are not in the same place, right? So that’s why we use this convolutional neural network to aid in the translation. Not translation like X, Y, but translation like: "I see this in sonar, and I have this prior, you know, sketched out, of what should be there, given my overhead image." But we deliberately omit vessels from the overhead image segmentation, and part of what the CNN is trained to learn is to also omit objects that are not present in the overhead imagery.

Abate: So you’re actually detecting like what type of object is this? Like you, you can understand this is a dynamic object. We don’t [00:07:00] expect it to be here tomorrow. Uh, but this is a landscape or this is a building or a port…

John McConnell: Or a pier, yeah. Yeah. We rely heavily on structures, uh, that we expect to not move, right? So breakwaters, piers, things like that.

Abate: And this is all automatically calculated?

John McConnell: We don’t explicitly call out each object and say, okay, this is a vessel, you know, I don’t care about this. What we do is we provide a context clue, which we call in our work a "candidate overhead image". And we also use the sonar image.

We take those and push them into U-Net together, and U-Net just learns to drop out, uh, what’s not in the context clues.

Abate: Yeah. And have there been any challenges that you ran into?

John McConnell: I mean, many, many, many challenges. Uh, when you test an algorithm like this, uh, one of the biggest questions that comes up is ground truth.

Right? How do you grade? And how do you also generate enough training data for a data-hungry CNN like U-Net? Right. So we have to deal with a lot of that, uh, by working in simulation. [00:08:00]

Abate: And do you expect this to come out, say to be open source or to industry, within any near timeframe?

John McConnell: Yes.

Abate: When do you expect?

John McConnell: Fully, in the next six months.

We have our, uh, open-source SLAM framework, uh, which you can take a look at. People can go to my personal GitHub, https://github.com/jake3991. You’ll find a repo called sonar slam that has the baseline SLAM system. And we’re expecting to incorporate the overhead image stuff in the next six months.

Abate: Awesome. Thank you.

John McConnell: Yeah. Thank you.
