Robohub.org

Smart Grasping System available on ROS Development Studio

Would you like to make a robot grasp something, but think it is impossible just because you can’t buy a robot arm? I’m here to tell you that you can definitely achieve this without buying a real robot. Let’s see how:

How is that possible?

The Smart Grasping Sandbox built by Shadow Robotics is now available to everybody on the ROS Development Studio, a system that lets you create and test your robot programs in simulation using only a web browser.

Wait, what is the Smart Grasping Sandbox?

The Smart Grasping Sandbox is a public simulation of Shadow’s Smart Grasping System with the UR10 robot from Universal Robots. It allows you to make a robot grasp something without having to learn everything related to Machine Learning. Because it is available on the ROS Development Studio, you can also test it without the hassle of installing all the requirements.

As Ugo Cupcic said:

I don’t want to have to specify every aspect of a problem — I’d rather the system learn the best way to approach a given problem itself.

Using the Development Environment for Grasping

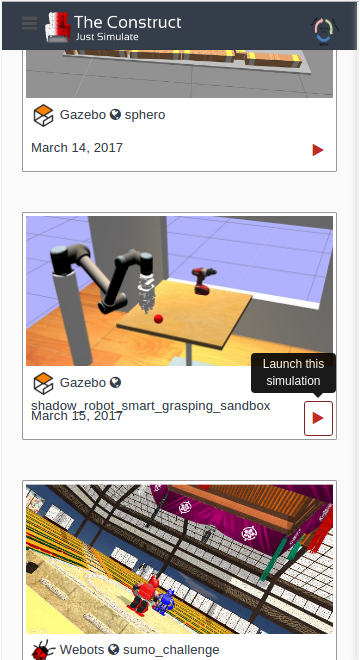

To use the Smart Grasping Sandbox on ROS Development Studio (also known as RDS), just go to http://rds.theconstructsim.com and sign in. Once logged in, go to the Public Simulations, select the Smart Grasping Sandbox simulation and press the red Launch this simulation button.

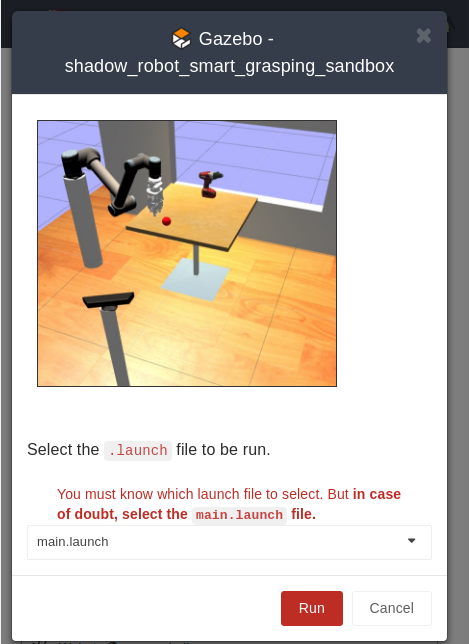

After you press the Launch this simulation button, a new screen will appear asking you to select the launch file. Just keep the default main.launch and press the red run button.

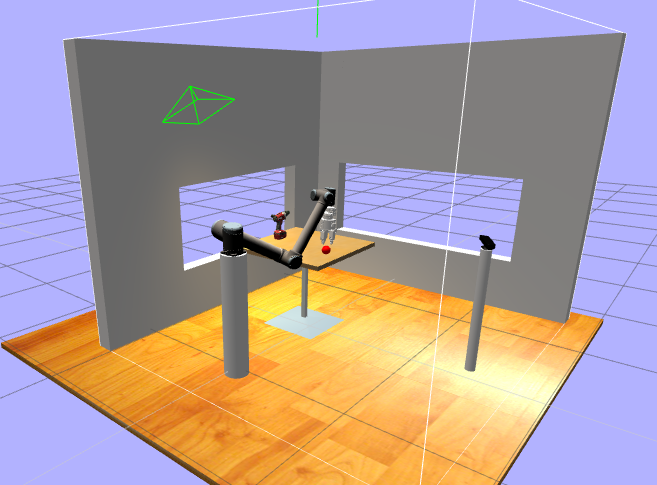

After a few seconds, the simulation will be loaded and you will be able to interact with it.

Once the simulation is loaded, you can see the instructions about how to control it on the left side.

In the center you have the simulation itself, and on the right side you can see the Integrated Development Environment and the Web Shell, which allow you to modify the code and send Linux commands to the simulation, respectively.

You can modify the simulation or the control programs, create your own manipulation control programs, make the grasping system learn using deep learning, or anything else. This is up to you as the developer!
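As a starting point for your own manipulation control program, here is a minimal sketch of the kind of logic you might write in the IDE: it turns an object position into a fixed top-down grasp sequence (approach, descend, grasp, lift). This is plain Python and does not use the sandbox's own API; the names `Waypoint` and `plan_top_down_grasp`, the default heights, and the world-frame coordinates are all hypothetical and chosen only for illustration. Sending the waypoints to the simulated arm would go through the sandbox's ROS interfaces, which are not shown here.

```python
from dataclasses import dataclass
from typing import List, Tuple

# (x, y, z) in metres, in an assumed world frame (hypothetical convention)
Point = Tuple[float, float, float]


@dataclass
class Waypoint:
    """One step of a top-down grasp: target tip position and gripper state."""
    name: str
    position: Point
    gripper_closed: bool


def plan_top_down_grasp(object_position: Point,
                        approach_height: float = 0.15,
                        lift_height: float = 0.25) -> List[Waypoint]:
    """Return an approach / descend / grasp / lift sequence above an object.

    The heights are illustrative defaults, not values taken from the sandbox.
    """
    if approach_height <= 0 or lift_height <= 0:
        raise ValueError("heights must be positive")
    x, y, z = object_position
    return [
        # Hover above the object with the gripper open.
        Waypoint("approach", (x, y, z + approach_height), False),
        # Move straight down to the object, still open.
        Waypoint("descend", (x, y, z), False),
        # Close the gripper at the object position.
        Waypoint("grasp", (x, y, z), True),
        # Lift the object straight up with the gripper closed.
        Waypoint("lift", (x, y, z + lift_height), True),
    ]


if __name__ == "__main__":
    for wp in plan_top_down_grasp((0.5, 0.0, 0.8)):
        state = "closed" if wp.gripper_closed else "open"
        print(wp.name, wp.position, state)
```

Keeping the planning step separate from the robot commands like this makes it easy to check the sequence on its own before trying it in the simulation.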

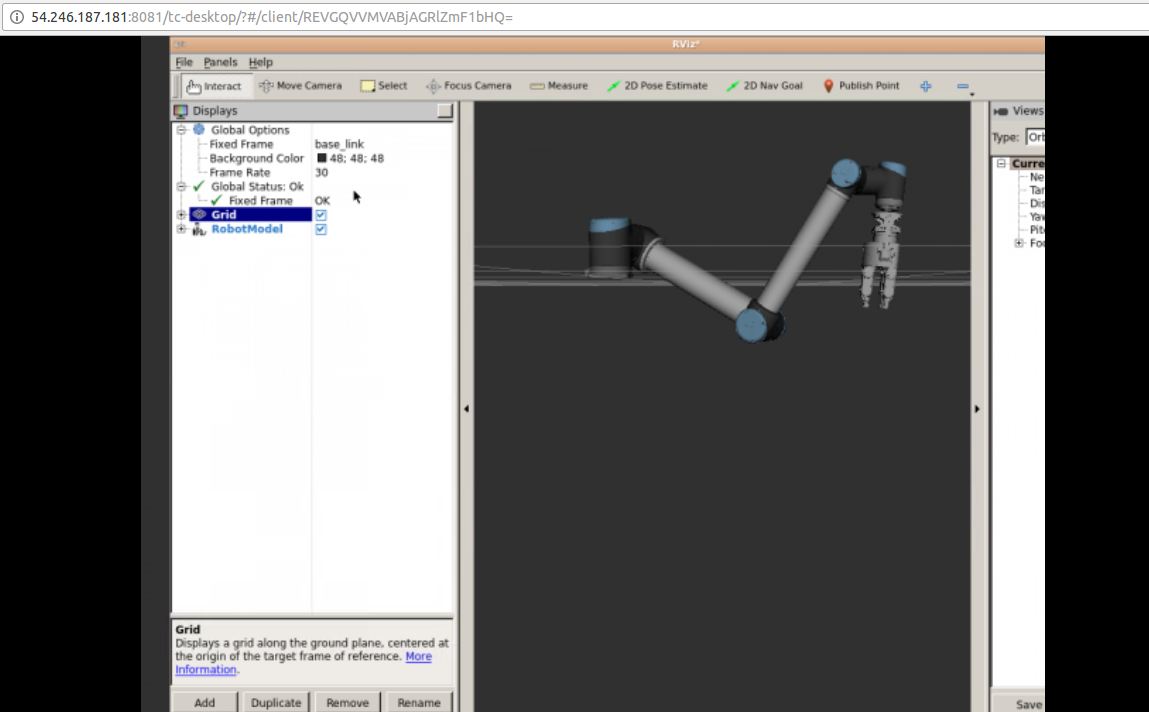

You can also see what the robot sees using the online ROS 3D Visualizer (RViz) by typing the following command in the Web Shell:

$ rosrun rviz rviz

After running RViz, press the red Open ROS Graphic Tools button at the bottom left of the screen, and a new tab should appear with RViz.

Conclusion

Here we have seen how to use a simulation to programme a robot to grasp something.

The simulation uses ROS as the middleware to control the robot.

If you want to learn ROS or master your ROS skills, we recommend giving Robot Ignite Academy a try.

If you are a robot builder and want to have your own simulation available for everybody on RDS, or you want a course made specifically for your robot, contact us by email at info@theconstructsim.com.
