Robohub.org

The Uncanny Valley of human-robot interactions

The device named “Spark” flew high above the man on stage, who waved his hands in the direction of the flying object. In a demonstration of DJI’s newest drone, the audience marveled at the Coke can-sized device’s most compelling feature: gesture controls. Instead of relying on a traditional remote control, this flying selfie machine follows hand movements across the sky. Gestures are the most innate language of mammals, and including robots in our primal movements means we have reached a new milestone of co-existence.

Madeline Gannon of Carnegie Mellon University is the designer of Mimus, a new gesture-controlled robot featured in an art installation at The Design Museum in London, England. Gannon explained: “In developing Mimus, we found a way to use the robot’s body language as a medium for cultivating empathy between museum-goers and a piece of industrial machinery. Body language is a primitive yet fluid means of communication that can broadcast an innate understanding of the behaviors, kinematics and limitations of an unfamiliar machine.” Gannon recently wrote about her experiences in the design magazine Dezeen: “In a town like Pittsburgh, where crossing paths with a driverless car is now an everyday occurrence, there is still no way for a pedestrian to read the intentions of the vehicle…it is critical that we design more effective ways of interacting and communicating with them.”

So far, the biggest commercially deployed advances in human-robot interactions have been conversational agents by Amazon, Google and Apple. While natural language processing has broken new ground in artificial intelligence, the social science of its acceptability in our lives might be its biggest accomplishment. Japanese roboticist Masahiro Mori described the danger of making robots almost, but not quite, indistinguishable from humans as the “uncanny valley.” Mori cautioned inventors against building robots that look (and, by extension, sound) too human, as the result elicits negative emotions best described as “creepy” and “disturbing.”

Recently, many toys have embraced conversational agents as a way of building greater bonds with kids and increasing the longevity of play. Barbie’s digital speech scientist, Brian Langner of ToyTalk, described his experience of crossing into the “Uncanny Valley”: “Jarring is the way I would put it. When the machine gets some of those things correct, people tend to expect that it will get everything correct.”

Kate Darling of MIT’s Media Lab, whose research centers on human-robot interactions, suggested that “if you get the balance right, people will like interacting with the robot, and will stop using it as a device and start using it as a social being.”

This logic inspired Israeli startup Intuition Robotics to create ElliQ, an eyeless robot with a bobbing head. The purpose of the animatronics is to help its customer base of elderly patients overcome their phobias of technology. According to Intuition Robotics’ CEO, Dor Skuler, the range of motion coupled with a female voice helps create a bond between the device and its user. Don Norman, usability designer of ElliQ, said: “It looks like it has a face even though it doesn’t. That makes it feel approachable.”

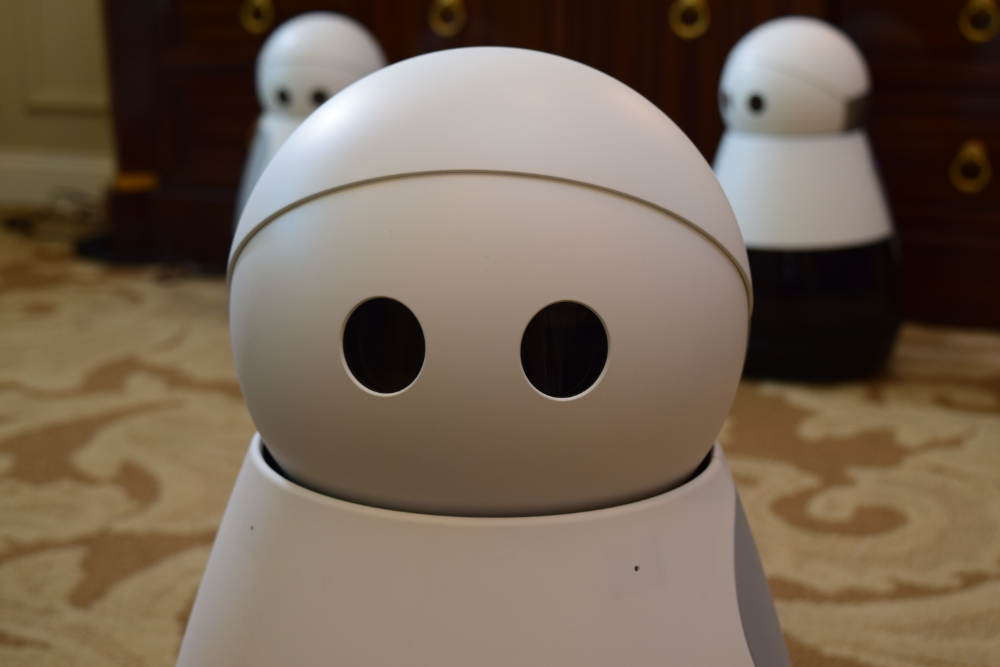

Mayfield Robotics decided to add cute R2D2-like sounds to its newest robot, Kuri. Mayfield hired former Pixar designers Josh Morenstein and Nick Cronan of Branch Creative with the sole purpose of making Kuri more adorable. To accomplish this mission, Morenstein and Cronan gave Kuri eyes but not a mouth, as that would be, in their words, “creepy.” Cronan described the challenges of designing the eyes: “Just by moving things a few millimeters, it went from looking like a dumb robot to a curious robot to a mean robot. It became a discussion of, how do we make something that’s always looking optimistic and open to listen to you?” Kuri bears a remarkable resemblance to Morenstein and Cronan’s former theatrical robot, EVE.

At the far extreme of making robots look and behave like humans, RealDoll has been promoting six-thousand-dollar sex robots. To many, RealDoll has crossed into the “Uncanny Valley” of creepiness with sex dolls that look and talk like humans. In fact, there is a growing grassroots campaign to ban RealDoll’s products globally, on the grounds that they endanger the very essence of human relationships. Florence Gildea writes on the blog of the Campaign Against Sex Robots: “The personalities and voices that doll owners project onto their dolls is pertinent for how sex robots may develop, given that sex doll companies like RealDoll are working on installing increasing AI capacities in their dolls and the expectation that owners will be able to customize their robots’ personalities.” The example given is how a doll expresses her “feelings” for her owner on Twitter.

Obviously a robot companion has no feelings; whatever it appears to feel is a projection of the doll owner’s own emotions. In Gildea’s words: “To anthropomorphize their dolls to sustain the fantasy that they have feelings for the owner. The Twitter accounts seemingly manifest the dolls’ independent existence so that their dependence on their owners can seem to signify their emotional attachment, rather than it following inevitable from their status as objects. Immobility, then, can be misread as fidelity and devotion.” The implication of this behavior is that the female companion, albeit mechanical, enjoys “being dominated.” The fear that the Campaign Against Sex Robots expresses is that the objectification of women (even robotic ones) reinforces problematic human sexual stereotypes.

Today, with technology at our fingertips, there is a growing phenomenon of preferring one-directional device relationships over complicated human encounters. MIT Social Sciences Professor Sherry Turkle writes in her essay, Close Engagements With Artificial Companionship, that “over-stressed, overworked, people claim exhaustion and overload. These days people will admit they’d rather leave a voice mail or send an email than talk face-to-face. And from there, they say: ‘I’d rather talk to the robot. Friends can be exhausting. The robot will always be there for me. And whenever I’m done, I can walk away.’”

In the coming years, humans will communicate more with robots throughout their lives, from the home to the office to their leisure time. The big question will concern not the technical barriers but the societal norms that will evolve to accept Earth’s newest species.

“What do we think a robot is?” asked robot designer Norman. “Some people think it should look like an animal or a person, and it should move around. Or it just has to be smart, sense the environment, and have motors and controllers.”

Norman’s answer, like beauty, could be in the eye of the beholder.
