Robohub.org

This robotic prosthetic hand can be made for just $1000

The Dextrus hand is a robotic hand that can be put together for well under £650 ($1000) and offers much of the functionality of a human hand. Existing prosthetic hands are magnificent devices, capable of providing a high degree of dexterity through a simple control system. The problem is that they cost somewhere between £7,000 and £70,000 ($11,000 to $110,000), far too much for most people to afford, especially in developing countries. The Open Hand Project, an open source project that aims to make robotic prosthetic hands more accessible to amputees, has already developed a fully functional prototype. An Indiegogo campaign is currently underway to fund refining and testing the design.

To broaden the reach of prosthetic devices, I decided to create a low-cost prosthetic hand during my final year at the University of Plymouth. The result was a fully functioning prototype that went on to win three awards for innovation and excellence.

The Open Hand Project tackles a number of problems to bring the cost down. One of these is the customized nature of prosthetic devices. Usually they need to be custom-fitted to the user’s remaining arm, and the consultations and fittings this requires can rack up medical bills. The Dextrus hand connects directly to an NHS-fitted passive prosthesis, so it needs no additional custom fitting and adds no extra cost.

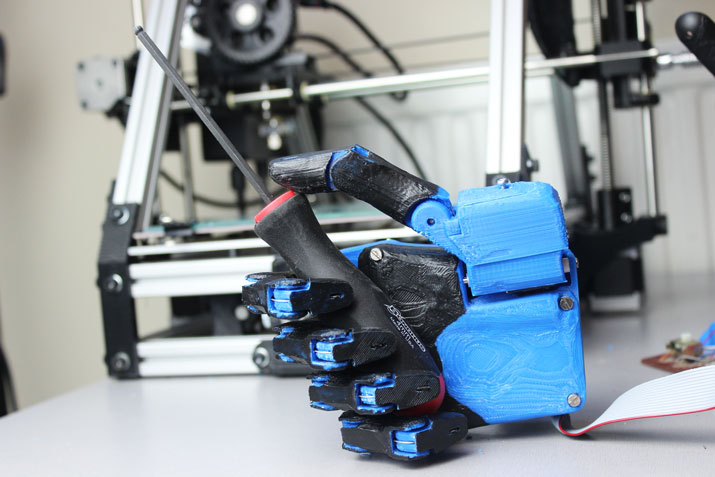

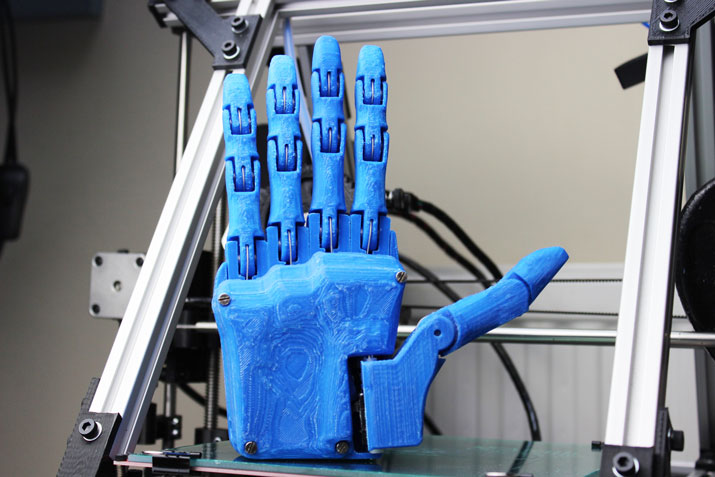

The Dextrus hand works much like a human hand. It uses electric motors instead of muscles and steel cables instead of tendons. 3D-printed plastic parts act as bones, and a rubber coating acts as the skin. Electronics control all of these parts to give the hand natural movement that can handle many different kinds of objects. Stick-on electrodes read signals from the user’s remaining muscles, and these signals tell the hand to open or close.
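The post does not describe the Dextrus control firmware, so what follows is only a minimal sketch of one common way electrode signals can be turned into open/close commands. It assumes two electrode sites (one over a muscle the user contracts to close the hand, one over a muscle used to open it), an envelope made by rectifying the raw signal and averaging recent samples, and a fixed threshold of 0.5. `EnvelopeFilter`, `hand_command`, the window size, the threshold and the sample readings are all hypothetical.

```python
from collections import deque


class EnvelopeFilter:
    """Hypothetical helper: rectify raw EMG samples and smooth them
    with a moving average over the last `window` samples."""

    def __init__(self, window: int = 5):
        self.samples = deque(maxlen=window)

    def update(self, raw: float) -> float:
        self.samples.append(abs(raw))  # full-wave rectification
        return sum(self.samples) / len(self.samples)


def hand_command(flexor_env: float, extensor_env: float, threshold: float = 0.5) -> str:
    """Map two muscle envelopes to a simple command (assumed scheme)."""
    flex_active = flexor_env > threshold
    ext_active = extensor_env > threshold
    if flex_active and not ext_active:
        return "close"
    if ext_active and not flex_active:
        return "open"
    return "hold"  # neither or both active: keep the current grip


if __name__ == "__main__":
    flexor = EnvelopeFilter(window=3)
    extensor = EnvelopeFilter(window=3)
    # Made-up (flexor, extensor) readings: the user contracts to close, then to open.
    readings = [(0.9, 0.1), (-0.9, 0.1), (0.9, -0.1), (0.0, 0.9), (0.1, -0.9), (0.0, 0.9)]
    commands = [hand_command(flexor.update(f), extensor.update(e)) for f, e in readings]
    print(commands)  # ['close', 'close', 'close', 'close', 'open', 'open']
```

The moving average means a single noisy spike does not flip the command, at the cost of a short lag: in the demo the hand keeps closing for one sample after the user switches muscles.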

3D printing offers several benefits to the project: the user can choose any colour, it’s easy to switch from a right hand to a left hand, and parts can be reprinted easily should anything break.

To take this project to the next level, I need to design and prototype the rest of the electronics and build everything onto printed circuit boards. The design of the hand needs to be refined and tested to make sure that it’s robust and functional as well as aesthetically pleasing.

This is why I’m using crowdfunding to support the project and have launched a campaign on Indiegogo. If the campaign is successful, the money will fund the project for an entire year. This includes prototyping PCB designs, materials for additional prototypes of the whole hand, and equipment for assembling the electronics. Since I’ll be working full time on this, some of it will also go towards a modest salary to keep me going for the year.
