Robohub.org

279

Safe Robot Learning on Hardware with Jaime Fernández Fisac

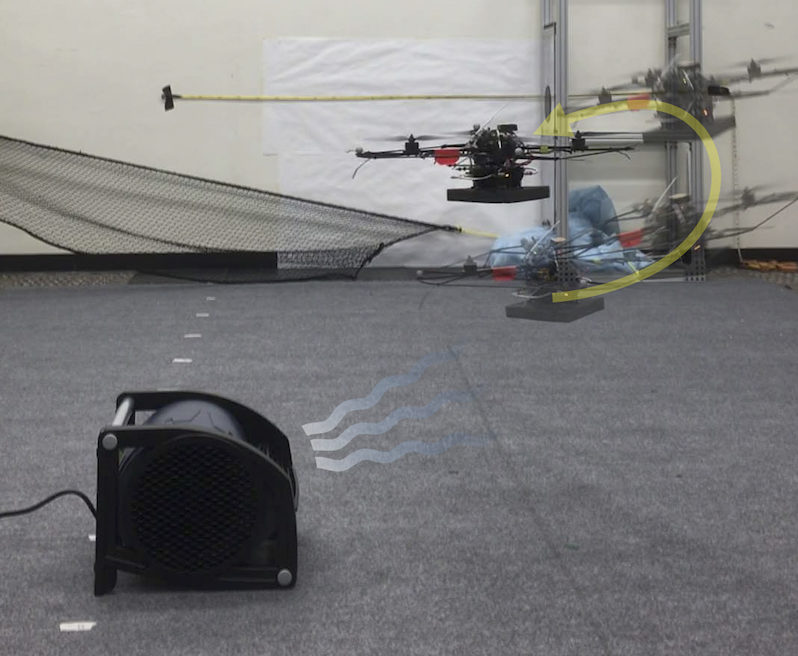

In this interview, Audrow Nash speaks with Jaime Fernández Fisac, a Ph.D. student at the University of California, Berkeley, working with Professors Shankar Sastry, Claire Tomlin, and Anca Dragan. Fisac is interested in ensuring that autonomous systems such as self-driving cars, delivery drones, and home robots can operate and learn in the world while satisfying safety constraints. Towards this goal, Fisac discusses examples of his work with unmanned aerial vehicles and talks about safe robot learning in general. Topics include the curse of dimensionality and how it affects control problems (and how some systems can be decomposed into simpler control problems), how simulation can be leveraged before attempting learning on a physical robot, safe sets, and how a robot can modify its behavior based on how confident it is that its model is correct.

Below are two videos of work that was discussed during the interview. The top video is on a framework for learning-based control, and the bottom video discusses adjusting the robot’s confidence about a human’s actions based on how predictably the human is behaving.

Jaime Fernández Fisac

Jaime Fernández Fisac is a final-year Ph.D. candidate in Electrical Engineering and Computer Sciences at the University of California, Berkeley. He received a B.S./M.S. degree in Electrical Engineering from the Universidad Politécnica de Madrid, Spain, in 2012, and an M.Sc. in Aeronautics from Cranfield University, U.K., in 2013. He is a recipient of the La Caixa Foundation fellowship. His research interests lie at the intersection of control theory and artificial intelligence, with a focus on safety assurance for autonomous systems. He works to enable AI systems to reason explicitly about the gap between their models and the real world, so that they can safely interact with uncertain environments and human beings, even under inaccurate assumptions.

Links

- Download mp3 (28.0 MB)

- Jaime Fernández Fisac’s website

- InterACT Lab

- Subscribe to Robots using iTunes

- Subscribe to Robots using RSS

- Support us on Patreon