Robohub.org

Up and flying with the AR.Drone and ROS: Joystick control

This is the second tutorial in the Up and flying with the AR.Drone and ROS series.

In this tutorial we will:

- Talk about the ROS communication hierarchy

- Set up a joystick to work with ROS

- Fly the AR.Drone with a joystick

In the previous tutorial we:

- Installed ROS, the AR.Drone driver and the AR.Drone keyboard controller, then flew the AR.Drone using the provided keyboard controller

In the next tutorials, we will:

- Look more closely at the data sent back from the drone and play with the on-board tag detection

- Write a controller for the drone to enable tag following

- Write a controller for the drone which allows us to control drone velocity, rather than body angle

What you’ll need for the tutorial

To complete this tutorial you will need:

- An AR.Drone!

- A computer or laptop with WiFi

- Linux, either the ARDroneUbuntu virtual machine, or a custom installation

- A Linux-compatible USB joystick or control pad

I also assume that you’ve completed the previous tutorial, which details how to set up the software, connect to the drone, etc.

Warning!

In this tutorial, we will be flying the AR.Drone. Flying indoors can be dangerous. When flying indoors, you should always use the propeller guard and fly in the largest possible area, making sure to stay away from walls and delicate objects. Even when hovering, the drone tends to drift, so always land the drone when you are not actively flying it.

I accept no responsibility for damages or injuries that result from flying the drone.

Updating

It has been three weeks since the launch of the first tutorial, and I am very happy with the response so far. The tutorial has been viewed over 720 times and ARDroneUbuntu has been downloaded over 50 times. I was also given the honor of taking part in RobotGarden’s very first Quadrocopter Workshop, where we worked through the tutorial (and ironed out the bugs) together.

This response has taught me a few things about how to structure a tutorial series and how to release a virtual machine. Based on what I have learned, I will be restructuring the source code for the following tutorials and updating the virtual machine to reflect these changes.

When writing the first tutorial, I thought it would be better to keep each tutorial in a separate repository. That way everything would stay atomic and isolated, and adding a new tutorial could not affect earlier ones. However, the video hangout with RobotGarden made me realize that this could cause an update nightmare down the track. I have therefore decided to work from a single repository. Each tutorial will have its own stable branch, and the master branch will always be synchronized with the stable version of the latest tutorial. The source code for each tutorial will also contain everything required to run the previous tutorials.

What this means for you:

- If you downloaded the ARDroneUbuntu virtual machine before the release of this tutorial, you will need to click the “Update Tutorials” button twice. After this, your virtual machine will be updated to reflect the new changes.

- If you installed the tutorials manually, you will need to run the following at the terminal:

$ roscd
$ rm -rf ardrone_tutorials_getting_started
$ git clone https://github.com/mikehamer/ardrone_tutorials

- If you have not yet downloaded the VM, no problem! I have released an up-to-date version that you can download from here

Sorry for any hassle caused by the above. I hope this new structure will allow me to deliver the next tutorials more easily!

So, without further ado, let's check out some data!

- Connect to the AR.Drone as detailed in the first tutorial (remembering to disconnect other network adaptors if they use the 192.168.1.x address range)

- From within your Linux installation, launch two terminal windows (ARDroneUbuntu users, refer to the first tutorial if you’re not sure what I mean)

- In the first window, launch the KeyboardController from the first tutorial, using the command:

$ roslaunch ardrone_tutorials keyboard_controller.launch

- Wait for everything to load and the video display to become active, then in the second terminal window enter the command:

$ rostopic list

This command lists all active “topics”, the communication channels through which ROS programs (called “nodes”) communicate.

- To view the currently running ROS Nodes, type:

$ rxgraph

The window that appears will show a network of nodes, and how they are connected through the topics that we saw in the previous step.

- When you’re ready, close this window. We’re now going to have a look at the messages that are being sent between nodes. At the terminal, type:

$ rostopic echo /ardrone/navdata

The navdata message is published by the AR.Drone driver at a rate of 50Hz.

- Press Ctrl-C to stop echoing the navdata message. Then switch to the other terminal window and press Ctrl-C again to stop the keyboard controller, which also stops the roscore that roslaunch started.

So what are we setting up today?

After that whirlwind tour of the ROS communication hierarchy, let's take a look at what we’ll be building today:

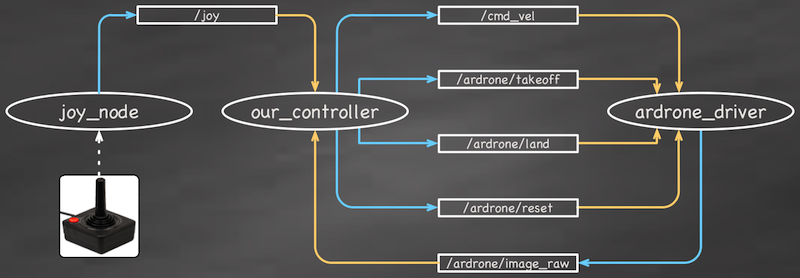

As shown above, today we’ll be setting up joy_node (from the standard ROS joy package) to listen for input from a joystick or control pad. joy_node converts this input into ROS messages and sends them to our controller over the joy topic.

Our controller subscribes to the joy topic and reacts to each received message in two ways. Joystick movements are translated into drone movement commands, which are published to the cmd_vel topic. Button presses are translated into takeoff, land or emergency commands, and each of these is published to its own topic.

The ardrone_driver receives these commands and sends them over the wireless network to the physical drone, which in turn sends back a video stream. The ardrone_driver publishes this video stream on the /ardrone/image_raw topic, where our controller receives and displays it.
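The data flow above can be imitated in a few lines of plain Python. The sketch below is a toy, in-process model of topics and is not the rospy API. ToyTopicBus, start_controller, start_driver and the "emergency" topic name are all hypothetical, and the axis and button indices are placeholders for the mappings you will identify later. The point it illustrates is that nodes never call each other directly. They only publish to, and subscribe to, named topics.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Message = Dict[str, Any]
Callback = Callable[[Message], None]


class ToyTopicBus:
    """In-process stand-in for ROS topics (NOT the rospy API).

    A topic is only a name: publishers send messages to it, and every
    callback subscribed to that name receives each message.
    """

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callback]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callback) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Message) -> None:
        # Deliver synchronously to every subscriber, in subscription order.
        for callback in self._subscribers[topic]:
            callback(message)


def start_controller(bus: ToyTopicBus) -> None:
    """Hypothetical controller node: subscribes to 'joy', publishes commands."""

    def on_joy(msg: Message) -> None:
        # Placeholder mapping: button 0 = emergency, axes 0 and 1 = roll and pitch.
        if msg["buttons"][0]:
            bus.publish("emergency", {})
        bus.publish("cmd_vel", {"roll": msg["axes"][0], "pitch": msg["axes"][1]})

    bus.subscribe("joy", on_joy)


def start_driver(bus: ToyTopicBus, sent: List[str]) -> None:
    """Stub for ardrone_driver: records what it would send to the drone."""
    bus.subscribe("cmd_vel", lambda m: sent.append(f"move roll={m['roll']} pitch={m['pitch']}"))
    bus.subscribe("emergency", lambda m: sent.append("emergency"))


if __name__ == "__main__":
    bus = ToyTopicBus()
    sent: List[str] = []
    start_controller(bus)
    start_driver(bus, sent)
    # Plays the role of joy_node publishing one joystick reading.
    bus.publish("joy", {"axes": [1.0, 0.0], "buttons": [0, 0]})
    print(sent)
```

Because the controller only knows topic names, the joystick source or the driver can be swapped out without touching the controller. This is the same property that lets ROS reuse the standard joy_node here.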

Setting up a joystick

Firstly, we need to connect the joystick and set it up to work with ROS.

- Open a terminal window (or reuse one from before), then type:

$ ls /dev/input

- Take note of the jsX devices (where X is a number). These are not your joystick.

- Now connect your joystick to the computer and again type:

$ ls /dev/input

- If a new jsX device appears, congratulations: you’ve successfully connected the joystick. Take note of which device it is, then move on to the next section of the tutorial.

- If no new jsX device appears when you connect the joystick, you’re most likely using a virtual machine and may need to add the USB device manually.

- If you are using the ARDroneUbuntu virtual machine, open the Machine > Settings window, then the Ports > USB tab. With the joystick plugged in, add a new USB device. Your joystick should appear in the dropdown list.

- Click OK, then unplug the joystick from the computer

- Plug the joystick back in and run ls /dev/input again, as in the earlier steps. If the joystick is still not detected, plug it into a different USB port.
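The before-and-after comparison of /dev/input can also be scripted. Below is a minimal Python sketch, assuming only that joystick device nodes are named js followed by a number, as described above. The function names are illustrative and are not part of ROS or the tutorial code.

```python
import os
import re
from typing import Iterable, List

# Joystick device nodes are named js0, js1, ... (the jsX devices from the steps above).
JS_PATTERN = re.compile(r"js\d+")


def joystick_devices(names: Iterable[str]) -> List[str]:
    """Keep only the entries that look like jsX joystick devices."""
    return sorted(name for name in names if JS_PATTERN.fullmatch(name))


def new_joystick_devices(before: Iterable[str], after: Iterable[str]) -> List[str]:
    """jsX devices that appear in `after` but were absent from `before`."""
    return sorted(set(joystick_devices(after)) - set(joystick_devices(before)))


def list_input_devices(path: str = "/dev/input") -> List[str]:
    """Rough equivalent of `ls /dev/input`; returns [] if the directory is missing."""
    try:
        return os.listdir(path)
    except FileNotFoundError:
        return []


if __name__ == "__main__":
    print("jsX devices currently present:", joystick_devices(list_input_devices()))
```

To reproduce the manual check, call list_input_devices() once before plugging in the joystick and once after. Then pass both lists to new_joystick_devices(). An empty result corresponds to the "no new jsX device" case above.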

Testing the joystick

Now that the joystick is connected, let’s check that everything is working. Type the following at a terminal, replacing jsX with the joystick device you previously identified:

$ jstest /dev/input/jsX

If you are greeted with a “jstest: Permission denied” error, type:

$ sudo chmod a+r /dev/input/jsX

This gives all users read access to the joystick device (ARDroneUbuntu users, the password is: ardrone). Once jstest is running, moving the joystick should make the numbers on the screen update. If they don’t, try running jstest on the other jsX devices. If they do, congratulations, your joystick device is working! Press Ctrl-C to exit.
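If you script your setup, you can check for the “jstest: Permission denied” situation before running jstest. This minimal sketch uses Python's standard os.access to test whether the current user can read the device. The default path /dev/input/js0 is a placeholder for your own jsX device.

```python
import os
import sys


def can_read_device(path: str) -> bool:
    """Return True if the device exists and the current user may read it.

    For an existing /dev/input/jsX, False typically corresponds to the
    "jstest: Permission denied" case, which `sudo chmod a+r` fixes.
    """
    return os.path.exists(path) and os.access(path, os.R_OK)


if __name__ == "__main__":
    # Device path is a placeholder; pass your own jsX device as the first argument.
    device = sys.argv[1] if len(sys.argv) > 1 else "/dev/input/js0"
    print(device, "readable" if can_read_device(device) else "missing or not readable")
```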

Using the joystick with ROS

Now we’re ready to integrate the joystick device with the ROS ecosystem using ROS’s “Joy” package.

- Open up three terminal windows. In the first, launch roscore:

$ roscore

- In the second, start ROS’s joystick node (joy_node), replacing jsX with the joystick device you previously identified:

$ rosparam set joy_node/dev "/dev/input/jsX"
$ rosrun joy joy_node

- Now, in the third terminal window, echo the Joy messages sent out by the joy_node that we just launched:

$ rostopic echo joy

- If all is working, you should see a Joy message print on the console whenever the joystick is moved (or a button is pressed).

Identifying Control Buttons & Axes

We now need to identify the axes and buttons used to fly the drone. In total, you will need to identify axes to control the AR.Drone’s roll, pitch, yaw velocity and z-velocity, plus buttons to trigger an emergency, command a takeoff and command a landing.

To identify the emergency button, for example, press the button you would like to use for emergencies. Then watch the terminal to see which entry in the buttons list changes to 1. In my case, I use the joystick’s trigger button for emergency (being able to react quickly to an emergency is very important). With the button pressed, the following is printed in the terminal window:

axes: [-0.0, -0.0, -0.0, -0.0, 0.0, 0.0]
buttons: [1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]

From this I can identify that Emergency = Button 0 (note that indexing starts from zero). Identifying an axis follows a similar process:

- Press the joystick fully left. The axis that changes (the first number in the list is axis 0) is your roll axis, and the number it changes to is your roll scale (usually +1 or -1).

- Press the joystick fully forward. The axis that changes is your pitch axis, and the number it changes to is your pitch scale (usually +1 or -1).

- Twist your joystick fully counter-clockwise. The axis that changes is your yaw axis, and the number it changes to is your yaw scale (usually +1 or -1).

- Push your throttle stick fully forward. The axis that changes is your z-axis, and the number it changes to is your z-scale (usually +1 or -1).

For example, with my joystick pressed fully left, the following is printed:

axes: [1.0, -0.0, -0.0, -0.0, 0.0, 0.0]
buttons: [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]

From this I identify that the Roll Axis = 0 and Roll Scale = 1.
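If you would rather not compare the lists by eye, the identification can be scripted from pasted rostopic echo output. This is a minimal sketch under two assumptions: you paste the axes and buttons lines of one message, and the axis you are identifying is the one that moves furthest from its rest position. The 0.5 threshold is an arbitrary choice, and all function names are hypothetical.

```python
import ast
import re
from typing import Dict, List, Optional, Sequence, Tuple

# Matches "axes: [...]" and "buttons: [...]" in pasted `rostopic echo joy` output.
FIELD_PATTERN = re.compile(r"(axes|buttons):\s*(\[[^\]]*\])")


def parse_joy_echo(text: str) -> Dict[str, list]:
    """Extract the axes and buttons lists from one echoed Joy message."""
    return {name: list(ast.literal_eval(values)) for name, values in FIELD_PATTERN.findall(text)}


def pressed_buttons(buttons: Sequence[int]) -> List[int]:
    """Zero-based indices of the buttons reported as pressed (value 1)."""
    return [i for i, value in enumerate(buttons) if value == 1]


def identify_axis(rest: Sequence[float], deflected: Sequence[float],
                  threshold: float = 0.5) -> Optional[Tuple[int, int]]:
    """Return (axis index, scale) for the axis that moved the most.

    `rest` is the axes list with the stick untouched and `deflected` the list
    with the stick pushed fully in the direction being identified. The scale
    is the sign of the deflected reading (+1 or -1). Returns None if no axis
    moved by at least `threshold`.
    """
    changes = [after - before for before, after in zip(rest, deflected)]
    if not changes:
        return None
    index = max(range(len(changes)), key=lambda i: abs(changes[i]))
    if abs(changes[index]) < threshold:
        return None
    return index, 1 if deflected[index] > 0 else -1


if __name__ == "__main__":
    rest = parse_joy_echo("axes: [-0.0, -0.0, -0.0, -0.0, 0.0, 0.0]")["axes"]
    left = parse_joy_echo("axes: [1.0, -0.0, -0.0, -0.0, 0.0, 0.0]")["axes"]
    print("roll axis and scale:", identify_axis(rest, left))
```

With the two messages shown in this section, the sketch reports button 0 for the emergency press and axis 0 with scale +1 for the roll press. These are the same values identified by hand above.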

After you have identified all axes and buttons, press Ctrl-C in all terminal windows to stop the processes.

Configuring the Joystick Controller

In ROS, a “Launch File” is used to configure and launch a set of nodes. We’ve already seen this in the first tutorial (and at the beginning of this one), where we typed:

$ roslaunch ardrone_tutorials keyboard_controller.launch

In this command, keyboard_controller.launch configures the AR.Drone driver for indoor flight, then launches both the driver and the keyboard controller. Similarly, in this tutorial we will launch the joystick controller (and related nodes) using a launch file.

Go ahead and open a terminal window, then type:

$ roscd ardrone_tutorials
$ geany launch/joystick_controller.launchtemplate

This will open the joystick_controller.launchtemplate file in the geany text editor (non-ARDroneUbuntu users can use a text editor of their choice). You will need to customize this file for your setup.

- Update line 21 to reflect your joystick device (the /dev/input/jsX that you identified earlier).

- Update lines 27-29 to reflect your button mapping

- Update lines 32-35 to reflect your axis mapping

- Update lines 38-41 to reflect your scales (usually either 1 or -1)

These scaling factors determine, for example, which way the drone rolls when the joystick is tilted along the roll axis. If your drone does the opposite of what you expect, change the sign of the relevant scaling factor (e.g. 1 becomes -1).
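To see what the scaling factors do, here is a minimal Python sketch of the axis-to-command step. The JoystickMapping fields and their defaults are hypothetical placeholders for the axis and scale values you enter in the launch file; the real file uses its own parameter names, such as ScalePitch. This is not the tutorial's joystick controller code, which may apply the scales differently.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass
class JoystickMapping:
    """Hypothetical stand-in for the axis indices and scales set in the launch file."""
    axis_roll: int = 0
    axis_pitch: int = 1
    axis_yaw: int = 2
    axis_z: int = 3
    scale_roll: float = 1.0
    scale_pitch: float = 1.0
    scale_yaw: float = 1.0
    scale_z: float = 1.0


def axes_to_command(axes: Sequence[float], m: JoystickMapping) -> Dict[str, float]:
    """Pick each mapped axis and multiply it by its scale (+1 keeps, -1 inverts)."""
    return {
        "roll": axes[m.axis_roll] * m.scale_roll,
        "pitch": axes[m.axis_pitch] * m.scale_pitch,
        "yaw_velocity": axes[m.axis_yaw] * m.scale_yaw,
        "z_velocity": axes[m.axis_z] * m.scale_z,
    }


if __name__ == "__main__":
    reading = [1.0, 0.5, 0.0, -0.25, 0.0, 0.0]
    print(axes_to_command(reading, JoystickMapping()))
    # Flipping one sign reverses only that direction of motion.
    print(axes_to_command(reading, JoystickMapping(scale_pitch=-1.0)))
```

Changing a scale from 1 to -1 leaves the magnitude of the command unchanged and only reverses its direction. That is why it is the right fix when the drone does the opposite of what you expect.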

Feel free to have a look at the other parameters in the file as well. The drone’s control parameters can be increased to allow for more aggressive flight.

Once you’re happy with the settings, save this file as joystick_controller.launch, then close the geany application.

Finally flying!

So, after that rather long endeavor (which you should only need to do once), let's fly!

Connect to the drone, disconnect other network devices, then open a terminal and type:

$ roslaunch ardrone_tutorials joystick_controller.launch

This will launch the nodes as you configured them in the launch file. If all goes well, you should now be able to fly the drone using your joystick.

Before commanding the drone to take-off, you should first check that your emergency button works. With the drone still landed, press the emergency button once. All onboard LEDs should turn red. Pushing the button again will reset the drone, and the LEDs will once again turn green. In addition to this, whenever a (mapped) button is pressed, the joystick_controller will print a message to the terminal, such as “Emergency Button Pressed”. This is a further indication that things are working!
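A Joy message carries the state of every button. This means a button held down while a stick moves is reported as pressed in several consecutive messages. For a toggle like the emergency button (first press turns the LEDs red, the next press resets), a controller therefore needs to react to the moment a button goes from released to pressed, not to every message that shows it pressed. The sketch below shows one way to do that in plain Python. It is an illustration under that assumption, not the tutorial controller's actual logic, and the EMERGENCY index is a placeholder.

```python
from typing import List, Sequence


class ButtonEdgeDetector:
    """Report only the buttons that changed from released (0) to pressed (1)."""

    def __init__(self) -> None:
        self._previous: List[int] = []

    def update(self, buttons: Sequence[int]) -> List[int]:
        # Treat buttons we have not seen before (e.g. on the first message) as released.
        previous = self._previous + [0] * (len(buttons) - len(self._previous))
        self._previous = list(buttons)
        return [i for i, (old, new) in enumerate(zip(previous, buttons))
                if old == 0 and new == 1]


if __name__ == "__main__":
    EMERGENCY = 0  # hypothetical index; use the one you identified earlier
    detector = ButtonEdgeDetector()
    emergency_active = False
    # Button held for two messages, released, then pressed again.
    for buttons in ([1, 0], [1, 0], [0, 0], [1, 0]):
        if EMERGENCY in detector.update(buttons):
            emergency_active = not emergency_active
            print("Emergency toggled; active:", emergency_active)
```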

You’re now ready to take off. Have fun!

Going Further

Was the above child’s play? Here are some ideas for exploring the ROS and AR.Drone environment further:

- While the joystick controller is running, open a new terminal window and type:

$ rxgraph

This will display the node communication diagram that we investigated at the start of the post.

- Take a look at the data being sent back from the drone:

$ rostopic echo /ardrone/navdata

- Plot your flight speeds (or any other data of interest) with:

$ rxplot /ardrone/navdata/vx,/ardrone/navdata/vy

- Adjust drone parameters in the joystick_controller.launch file to fly more aggressively
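rxplot shows vx and vy as separate traces. If you would rather see a single horizontal speed, a few lines of Python can combine them. This minimal sketch assumes you have already collected (vx, vy) pairs, for example from recorded navdata. It also assumes the samples are evenly spaced at the 50Hz navdata rate mentioned earlier; a real script would use the message timestamps instead. The result is in the same units as vx and vy, so check your driver's message definition for what those are.

```python
import math
from typing import Iterable, List, Tuple


def speed_series(samples: Iterable[Tuple[float, float]],
                 rate_hz: float = 50.0) -> List[Tuple[float, float]]:
    """Turn (vx, vy) navdata samples into (time in s, horizontal speed) pairs.

    Assumes evenly spaced samples at `rate_hz`; speed is in the same units as vx and vy.
    """
    return [(i / rate_hz, math.hypot(vx, vy)) for i, (vx, vy) in enumerate(samples)]


if __name__ == "__main__":
    # Made-up sample values, for illustration only.
    for t, speed in speed_series([(300.0, 400.0), (0.0, 0.0)]):
        print(f"t={t:.2f}s speed={speed:.1f}")
```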

Troubleshooting

- The joystick doesn’t do anything

Check that the joystick device you configured in the launch file corresponds to your joystick. To do this, repeat the section “Testing the joystick”.

- My joystick device is correct, but the joystick doesn’t do anything

Assuming you’ve gone through the “Testing the Joystick” section successfully, launch the joystick controller again and in a separate terminal, type:

$ rostopic echo joy

Assuming everything is configured correctly, this will print joystick messages to the terminal whenever the joystick is moved.

- If nothing is printed, check to make sure you’ve configured the correct joystick device in the launch file, and that the joystick permissions are correct (see “Testing the Joystick”).

- If something is printed, check that the axis and button mappings in your launch file are correct. If they are and the problem persists, leave a comment on this tutorial and I will be happy to help!

- The drone does the opposite of what I expect

For example, the drone flies backwards when the joystick is tilted forwards. To solve this, determine which axes respond counterintuitively, then invert the sign of the respective scaling factors in the launch file. For instance, if the pitch axis (forward-backward) is the opposite of what you would expect, change the ScalePitch parameter from 1 to -1.

- I cannot connect to the drone

Check that your computer is connected to the drone via wireless, and that all other network devices are disconnected.

- The drone will not take off

- Are all the onboard LEDs red? If so, try pressing the emergency button. Do they toggle to green? Perfect – you’re ready to fly!

- If the LEDs are green and the drone still won’t take off, check that your button mapping is correct in the launch file

- Other issues?

If you have any questions, or comments about what you would like to see in the next tutorials, please send me a message or leave a comment to this post!

You can see all the tutorials here: Up and flying with the AR.Drone and ROS.
