Robohub.org

257

Learning Robot Objectives from Physical Human Interaction with Andrea Bajcsy and Dylan P. Losey

In this interview, Audrow speaks with Andrea Bajcsy and Dylan P. Losey about a method that lets robots infer a human’s objective through physical interaction. They discuss their approach, the challenges of learning complex tasks, and their experience collaborating across universities.

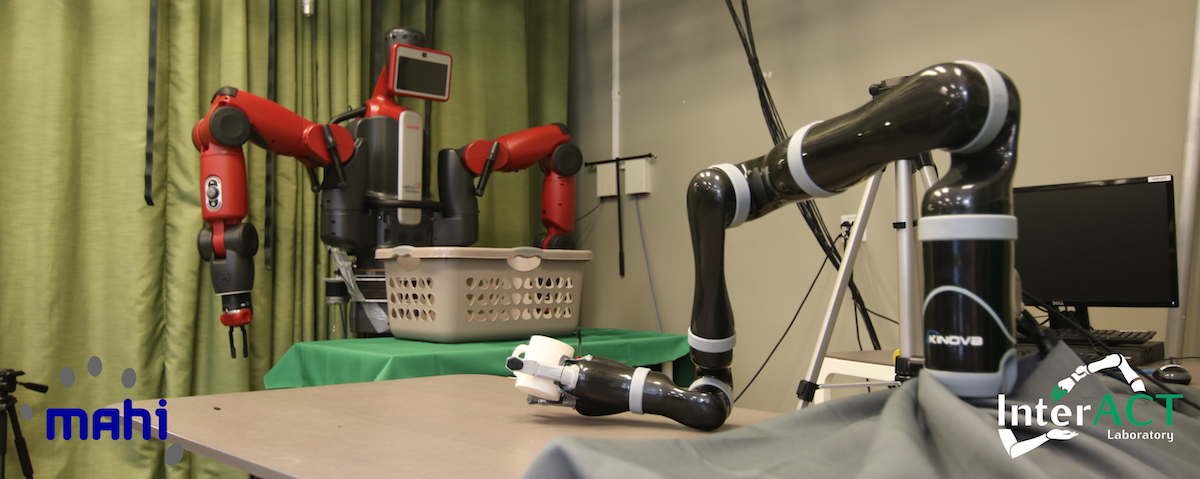

Some examples of people working with the more typical impedance control (left) and Bajcsy and Losey’s learning method (right).

To learn more, see this post on Robohub from the Berkeley Artificial Intelligence Research (BAIR) Lab.

Andrea Bajcsy

Andrea Bajcsy is a Ph.D. student in Electrical Engineering and Computer Sciences at the University of California, Berkeley. She received her B.S. degree in Computer Science from the University of Maryland and was awarded the NSF Graduate Research Fellowship in 2016. At Berkeley, she works in the Interactive Autonomy and Collaborative Technologies Laboratory, researching physical human-robot interaction.

Dylan P. Losey

Dylan P. Losey received the B.S. degree in mechanical engineering from Vanderbilt University, Nashville, TN, USA, in 2014, and the M.S. degree in mechanical engineering from Rice University, Houston, TX, USA, in 2016.

He is currently working towards the Ph.D. degree in mechanical engineering at Rice University, where he has been a member of the Mechatronics and Haptic Interfaces Laboratory since 2014. In addition, between May and August 2017, he was a visiting scholar in the Interactive Autonomy and Collaborative Technologies Laboratory at the University of California, Berkeley. He researches physical human-robot interaction; in particular, how robots can learn from and adapt to human corrections.

Mr. Losey received an NSF Graduate Research Fellowship in 2014 and, as first author, the 2016 IEEE/ASME Transactions on Mechatronics Best Paper Award.

Links

- InterACT Lab

- MAHL Lab

- Andrea Bajcsy’s website

- Dylan Losey’s website

- Subscribe to Robots using iTunes

- Subscribe to Robots using RSS

- Support us on Patreon

- Download MP3 (14.9 MB)

tags: Actuation, Algorithm AI-Cognition, Algorithm Controls, c-Industrial-Automation, human-robot interaction, podcast, Research, Robotics technology