Robohub.org

Rehabilitation and Environmental Monitoring with Lei Cui

Transcript included.

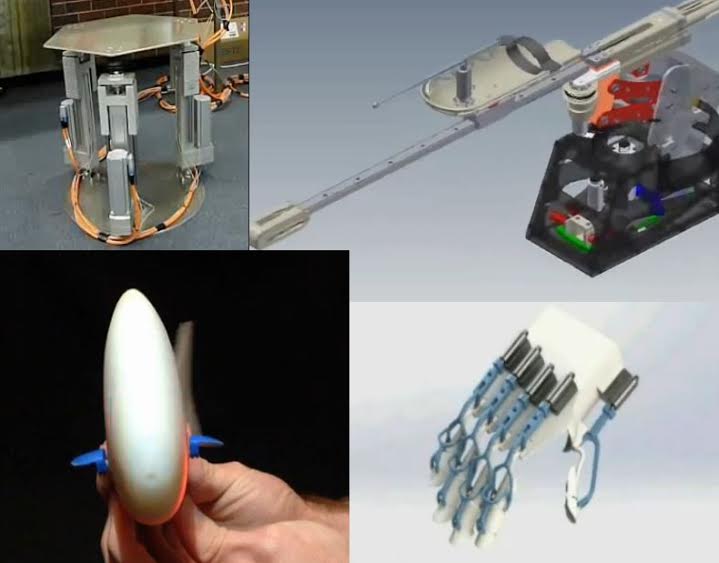

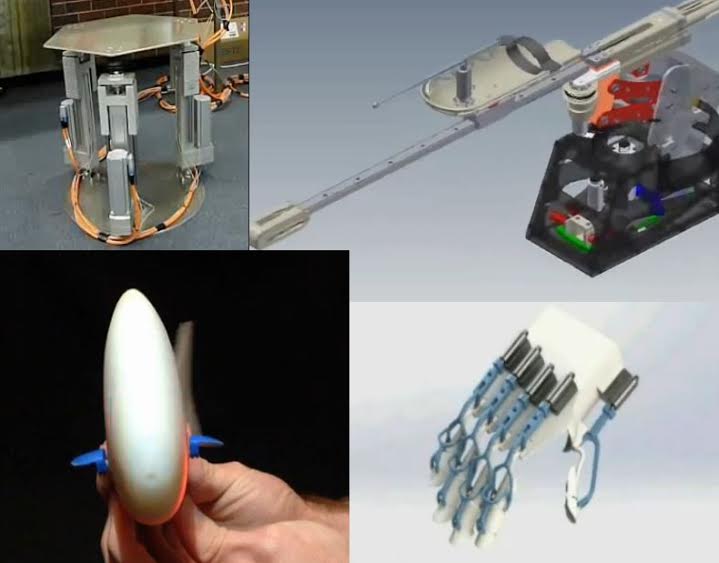

In this episode, Ron Vanderkley speaks with Dr. Lei Cui from Curtin University about his team’s work on a 3D-printable hand orthosis for rehabilitation, a task-oriented 4-DOF robotic device for upper-limb rehabilitation, and a 3-DOF platform providing multi-directional perturbations for research into balance rehabilitation. They also discuss a high-speed untethered robotic fish for river monitoring and an amphibious robot for monitoring the Swan-Canning River System.

A 3D Printable Parametric Hand Exoskeleton for Finger Rehabilitation

ComBot: a Compact Robot for Upper-Limb Rehabilitation

A 3-DOF Robotic Platform for Research into Multi-Directional Stance Perturbations

AmBot: A Bio-Inspired Amphibious Robot for Monitoring the Swan-Canning Estuary System

Lei Cui

Dr. Lei Cui completed his PhD in Mechanical Engineering in July 2010 at the Centre for Robotics Research, King’s College London (UK), and stayed on there as a Postdoctoral Research Associate after graduation. In February 2011 he moved to the Robotics Institute, Carnegie Mellon University (US), where he worked as a Postdoctoral Fellow until July 2012. He then joined the Department of Mechanical Engineering, Curtin University (AU), as Lecturer in Mechatronics, a position he currently holds.

Transcript

Ron Vanderkley: Dr. Lei, we can start by asking you to introduce yourself to the listeners.

Dr. Lei Cui: Sure. My name is Lei Cui, and I’m a lecturer in Mechatronics in the Department of Mechanical Engineering at Curtin University. I joined Curtin about two-and-a-half years ago. Before that I got my PhD from King’s College London, and I did my second postdoc at Carnegie Mellon University.

Ron Vanderkley: Let’s talk about the research that you’re doing at Curtin University. I know there are two facets to it. We can start with the first one: robot rehabilitation.

Dr. Lei Cui: I worked on robot rehabilitation when I was doing my PhD. After I joined Curtin, I decided it was a good time to pick this research up again, because the Mechanical Engineering Department is now shifting its research focus to rehabilitation and medical engineering. In the last two and a half years I’ve developed a 3D printable hand exoskeleton for rehabilitation, in collaboration with a colleague. We patented a finger design just three months ago. The unique feature of this hand exoskeleton is that each finger has three active joints, and, so, three active outputs, but with only one actuator – there’s one input. It’s the first design of its kind in the world.

It’s a joint linkage; I don’t know if you’re familiar with the concept of a linkage? We applied the same linkage to all five fingers, so each can be operated by one linear actuator, but has three output joints corresponding to the three joints in each finger. Another feature is that the whole assembly can be printed out using a 3D printer. No assembly work needs to be done, which makes it suitable for mass customization.

Ron Vanderkley: That’s a general 3D printer, printing in any kind of polymer plastic?

Dr. Lei Cui: No, we need a high precision printer. We need high resolution.

Ron Vanderkley: It’s not a hobbyist or …

Dr. Lei Cui: No, it’s not. A low-end 3D printer cannot do that.

Ron Vanderkley: You told us that it’s at the point of being patented, or patented already. Are you collaborating with any other organizations?

Dr. Lei Cui: Yes, we’re now working with a company in the US, from MIT. They’re also developing a hand exoskeleton, but our design is much better than theirs, so we’ve been talking about how they could buy our design, or how we could set up some form of collaboration so we can work with them.

Ron Vanderkley: You also spoke about the second project that you’re currently working on?

Dr. Lei Cui: Another project is an upper-limb device, for arm rehabilitation. On this project I have been working with Garry Allison, a professor at Curtin from the Health Science School. Mainly, we’ve concentrated on task-oriented training. When patients do training they don’t focus on each individual joint in terms of rehabilitation. Usually, they focus on the task. For example, they want to move the hand from point A to point B: they normally look at the target first, then move the hand from A to B. They don’t focus on each individual joint.

So that’s the idea behind our design. The ideal training would be for the user, or the patient, to look at the target and move to it, and the device would provide force feedback to test the patient’s performance.

Ron Vanderkley: So it’s a wearable device?

Dr. Lei Cui: No, it’s not wearable; it’s an end-effector type, not an exoskeleton type.

Ron Vanderkley: The primary use for that is to teach stroke victims?

Dr. Lei Cui: Yes, mainly for stroke patients.

Ron Vanderkley: What stage are you at with that process?

Dr. Lei Cui: We have developed a prototype and, currently, I’ve got one PhD student working on this project, but he’s only at the first stage. As a first step, we are combining force-torque sensing and EMG sensing to estimate the patient’s intention. Previous work has used a predefined trajectory: the subjects first perform the task and the trajectory is recorded, and the device then tries to force or assist the patient to follow that same trajectory. Our idea is to estimate the patient’s intention first, by combining EMG and force sensing. So we don’t rely on a predefined trajectory; we want to generate it on the fly. This is the first step.

The second step would be to apply adaptive impedance control to assist training, as needed.
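[Editor’s illustration] The "assist as needed" idea can be sketched in one dimension. This is not the team’s controller: the spring-damper impedance law is the textbook form, while the stiffness adaptation rule, every gain, the crude patient model, and all names (`impedance_force`, `adapt_stiffness`, `mean_assist_stiffness`) are assumptions chosen only to show the principle that assistance grows with tracking error and fades when the patient does the work.

```python
def impedance_force(x, v, x_target, stiffness, damping):
    """Textbook impedance law: a virtual spring-damper pulling toward x_target."""
    return stiffness * (x_target - x) - damping * v


def adapt_stiffness(stiffness, error, gain=50.0, forget=0.1, k_max=200.0):
    """Hypothetical assist-as-needed rule (an assumption, not the team's method):
    stiffness grows with tracking error and decays when the error is small.
    The result is clamped to the range [0, k_max]."""
    updated = (1.0 - forget) * stiffness + gain * abs(error)
    return min(k_max, max(0.0, updated))


def mean_assist_stiffness(patient_effort, x_target=0.3, steps=5000,
                          dt=0.001, mass=1.0, damping=20.0):
    """Simulate a 1-D reach and return the average robot stiffness used.

    patient_effort scales a crude, made-up model of the patient's own pull
    toward the target (0.0 means the patient contributes nothing).
    """
    x, v, k, k_sum = 0.0, 0.0, 0.0, 0.0
    for _ in range(steps):
        error = x_target - x
        k = adapt_stiffness(k, error)
        f_robot = impedance_force(x, v, x_target, k, damping)
        f_patient = patient_effort * 40.0 * error  # illustrative patient model
        v += (f_robot + f_patient) / mass * dt     # semi-implicit Euler step
        x += v * dt
        k_sum += k
    return k_sum / steps


if __name__ == "__main__":
    for effort in (0.0, 1.0):
        print(effort, mean_assist_stiffness(effort))
```

In this toy model, a patient who contributes more of the motion closes the error sooner, so the robot’s average stiffness over the reach comes out lower, which is the behaviour "as needed" refers to.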

Ron Vanderkley: Thirdly, you are working on a three directional platform, is that correct?

Dr. Lei Cui: Yes, a three-dimensional platform. That’s also a collaboration between Professor Garry Allison and myself. Basically, it’s his idea; he’s been working on this project for many years. What he wanted was a multidirectional platform for patients. The platform destabilizes the user: the user stands on top of it, it moves, and the user has to move his or her foot to regain stability. That’s the part we need to control well.

Ron Vanderkley: What’s the end goal of it? Why are you creating it?

Dr. Lei Cui: Professor Allison’s research focuses mainly on how to prevent old people from falling. To study human posture and balance, you need to provide a well-controlled multidirectional platform for patients. That’s why it’s pretty large: 600 mm in diameter. A person with an average weight of 100 kg can stand on top of it comfortably. Also, they can shift a foot and regain their balance; we’ve provided enough space for that, and also enough speed and acceleration.

Ron Vanderkley: The other area of expertise you’re looking at is biomimicry. Could you tell us where you are going with that?

Dr. Lei Cui: Biomimicry is a fascinating research field; it’s also quite a hot topic. If we take the normal approach, using mathematical modeling, it’s very hard to realize some functions. That’s why we take inspiration from nature: fish and other animals have evolved over millions and millions of years. I’m focusing on applying biomimicry design to monitoring.

The first project I worked on was called Amphibious Robot. We took inspiration from amphibious centipedes because they have multiple feet. These feet provide buoyancy and actuation, and our design was developed with this in mind. It’s a track-driven vehicle, but at each point of the two tracks, we’ve fixed ballpoint blocks so that, when the robot is in water, they provide buoyancy and actuation. When it’s on land, they can also effectively drive the robot.

Ron Vanderkley: What stage are you at with that?

Dr. Lei Cui: We have finished the first stage, that’s the mechanical design and the empirical study; the paper’s been accepted by the ASME Journal of Mechanical Design. Now, some of my students are designing autonomous navigation systems, using an Android phone that also provides the sensors: an accelerometer, gyros and a video camera.

Another project is the Robotic Fish.

Ron Vanderkley: Yes, we’ve heard a lot about the fish.

Dr. Lei Cui: In fact, this robotic fish is quite fast. It’s the fastest robotic fish in the world in terms of body lengths per second: we’ve achieved three body lengths per second. A review paper in 2012 gave the previous record as 1.6 body lengths per second.
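[Editor’s illustration] Body lengths per second is swimming speed divided by the swimmer’s own length, which lets fish of different sizes be compared. The short sketch below works through the two figures quoted in the interview (3 and 1.6 body lengths per second). The fish length and speed in the usage lines are hypothetical values chosen for the arithmetic, not measurements from the project, and the function names are made up for this example.

```python
def body_lengths_per_second(speed_m_per_s, body_length_m):
    """Normalize swimming speed by body length (result in body lengths per second)."""
    if body_length_m <= 0:
        raise ValueError("body length must be positive")
    return speed_m_per_s / body_length_m


def speedup(new_bl_per_s, old_bl_per_s):
    """Ratio between two normalized speeds."""
    return new_bl_per_s / old_bl_per_s


if __name__ == "__main__":
    # Hypothetical fish: 0.25 m long, swimming at 0.75 m/s.
    print(body_lengths_per_second(0.75, 0.25))
    # Figures quoted in the interview: 3 vs. 1.6 body lengths per second.
    print(speedup(3.0, 1.6))
```

On the quoted figures, the new result is a little under twice the earlier record.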

Ron Vanderkley: So, it’s a first here in Western Australia?

Dr. Lei Cui: Yes, definitely, we just haven’t published anything yet. We want to wait until we collect enough data and it’s accepted by a good journal.

Ron Vanderkley: The propulsion of the fish…Biomimicry, is that using actuators?

Dr. Lei Cui: Yes. In fact, that kind of fish lives in [the local] Swan Canning River; they’re called bream. It’s quite a popular fish in this river. We effectively morphed this fish. If you put our robotic fish in the river, it would be very difficult to discern which is the real fish. The appearance and the size are the same.

Ron Vanderkley: Is there a plan to monitor the schools of fish?

Dr. Lei Cui: Yes. Our idea is that our fish could mix with biological fish seamlessly, because they could be made to look real. We hope, in the future, to use it to monitor the fish population in the Swan Canning River, for example.

Ron Vanderkley: Would you be able to mount sensors on them to take salinity tests, for example?

Dr. Lei Cui: Yes. Now we’re studying the actuation of the fish; we hope to further improve the speed, that’s the first task. The second task is to develop electrical field sensing technology for the fish, because they are un-tethered, small in size and battery powered. Currently, the batteries last for about 45 minutes but we need to make the fish autonomous. We’ve taken inspiration from some fish using electrical fields to sense their environments. I’ve got one PhD student and several undergrad students developing this form of bio-sensing, using electrical field sensing.

Ron Vanderkley: That’s very fascinating and impressive. I haven’t read anything on that for quite a while.

Dr. Lei Cui: Thank you.

Ron Vanderkley: We’d be very interested to find out how that’s developing, once you get going on it.

Now, the question that we ask everybody is this: Where do you think robotics will be, say, in 10 or 20 years from now? What kind of direction do you think we are heading in?

Dr. Lei Cui: I think robotics covers a lot of areas: artificial intelligence, mechanical design, sensing technologies; many robots are a combination of these. In my opinion, currently, sensing technology is holding back development in robotics. I would assume that, in the next 10 or 20 years, more people will be focusing on sensing. In terms of artificial intelligence, IBM has built machines to beat world chess champions. Artificial intelligence to do a single task is, I think, quite mature, but to multitask? Developing robotic animals that behave like the real thing still has a long way to go; it needs 50-100 years, I’d guess.

Ron Vanderkley: Obviously, we’ve seen lots of examples, but predefined scripts give the audience the idea that some robots are more intelligent than they are?

Dr. Lei Cui: I’ll give just one example. While I was working at CMU, on the DARPA-sponsored ARM [Autonomous Robotic Manipulation] project, one of my tasks was for the robot arm to hold a key, insert it into a lock and then unlock the door. If you watch the video, it seems quite intelligent. It appears as if it can feel what it’s doing, but my approach was to use force-torque sensing on the wrist of the robot and then to insert the key into the hole. It looks intelligent but, in fact, as you’ve said, it’s defined by algorithms.

Ron Vanderkley: To me, that always confuses people and detracts from the research that should be the focus. It gives the wrong impression. Similarly, movies like Terminator also paint the wrong picture.

Dr. Lei Cui: Yes, definitely. I think it would take 200 years, at least, for Terminator to be a threat.

Ron Vanderkley: So, I’ll wrap it up by saying thank you very much for taking the time to do the podcast, and we hope to chat with you again in the future.

Dr. Lei Cui: Sure, thank you.

All audio interviews are transcribed and edited for clarity with great care; however, we cannot assume responsibility for their accuracy.
